Jiawen Wei

eXIAA: eXplainable Injections for Adversarial Attack

Nov 13, 2025

Abstract: Post-hoc explainability methods are a subset of Machine Learning (ML) that aim to provide a reason for why a model behaves in a certain way. In this paper, we present a new black-box, model-agnostic adversarial attack for post-hoc explainable Artificial Intelligence (XAI), particularly in the image domain. The goal of the attack is to modify the original explanations while remaining undetectable to the human eye and maintaining the same predicted class. In contrast to previous methods, we do not require any access to the model or its weights, but only to the model's computed predictions and explanations. Additionally, the attack is accomplished in a single step while significantly changing the provided explanations, as demonstrated by empirical evaluation. The low requirements of our method expose a critical vulnerability in current explainability methods, raising concerns about their reliability in safety-critical applications. We systematically generate attacks based on the explanations generated by post-hoc explainability methods (saliency maps, integrated gradients, and DeepLIFT SHAP) for pretrained ResNet-18 and ViT-B16 on ImageNet. The results show that our attacks can lead to dramatically different explanations without changing the predictive probabilities. We validate the effectiveness of our attack, quantify the induced change in the explanations with the mean absolute difference, and verify the closeness of the original image and the corrupted one with the Structural Similarity Index Measure (SSIM).
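
The two evaluation quantities named in this abstract can be illustrated with a minimal sketch. It is not the authors' code: the function names are hypothetical, images and attribution maps are assumed to be flattened lists of floats, and the SSIM shown is a simplified single-window variant computed over the whole image, whereas SSIM as usually applied averages the same statistic over local windows.

```python
def mean_absolute_difference(expl_a, expl_b):
    """Mean absolute difference between two flattened attribution maps."""
    if len(expl_a) != len(expl_b):
        raise ValueError("attribution maps must have the same size")
    return sum(abs(a - b) for a, b in zip(expl_a, expl_b)) / len(expl_a)


def global_ssim(img_x, img_y, data_range=1.0, k1=0.01, k2=0.03):
    """Single-window SSIM over two flattened images (a simplification).

    data_range is the assumed dynamic range of pixel values; k1 and k2
    are the customary stabilising constants.
    """
    if len(img_x) != len(img_y):
        raise ValueError("images must have the same size")
    n = len(img_x)
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    mu_x = sum(img_x) / n
    mu_y = sum(img_y) / n
    var_x = sum((x - mu_x) ** 2 for x in img_x) / n
    var_y = sum((y - mu_y) ** 2 for y in img_y) / n
    cov = sum((x - mu_x) * (y - mu_y) for x, y in zip(img_x, img_y)) / n
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator
```

Under these assumptions, a successful attack would show a large `mean_absolute_difference` between the original and attacked explanations while `global_ssim` between the two images stays close to 1.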

XAI4Extremes: An interpretable machine learning framework for understanding extreme-weather precursors under climate change

Mar 11, 2025

Abstract: Extreme weather events are increasing in frequency and intensity due to climate change. This, in turn, is exacting a significant toll on communities worldwide. While prediction skill is improving with advances in numerical weather prediction and artificial intelligence tools, extreme weather still presents challenges. More specifically, the precursors of such extreme weather events, and how these precursors may evolve under climate change, remain unclear. In this paper, we propose to use post-hoc interpretability methods to construct relevance weather maps that show the key extreme-weather precursors identified by deep learning models. We then compare this machine view with existing domain knowledge to understand whether deep learning models identified patterns in data that may enrich our understanding of extreme-weather precursors. Finally, we bin these relevance maps into different multi-year time periods to understand the role that climate change is having on these precursors. The experiments are carried out on Indochina heatwaves, but the methodology can be readily extended to other extreme weather events worldwide.
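
The binning step described in this abstract could look roughly like the sketch below. The abstract does not state how maps within a period are aggregated, so the element-wise mean used here is an assumption, as are the function name `bin_relevance_maps`, its parameters, and the representation of each relevance map as a 2D list of floats.

```python
from collections import defaultdict


def bin_relevance_maps(years, maps, start_year, period_length):
    """Group relevance maps into multi-year periods and average each group.

    years[i] is the year of maps[i]; every map is a 2D list with the same
    shape. Returns {(first_year, last_year): mean_map}.
    Averaging is an assumed aggregation, not taken from the abstract.
    """
    grouped = defaultdict(list)
    for year, rel_map in zip(years, maps):
        if year < start_year:
            raise ValueError(f"year {year} precedes start_year {start_year}")
        grouped[(year - start_year) // period_length].append(rel_map)

    binned = {}
    for index in sorted(grouped):
        members = grouped[index]
        rows, cols = len(members[0]), len(members[0][0])
        # Element-wise mean across all maps that fall in this period.
        mean_map = [
            [sum(m[r][c] for m in members) / len(members) for c in range(cols)]
            for r in range(rows)
        ]
        first = start_year + index * period_length
        binned[(first, first + period_length - 1)] = mean_map
    return binned
```

For example, calling it with `start_year=1980` and `period_length=10` would place maps from 1980 and 1985 in the same bin and a map from 1995 in the next one, so that the per-period means can be compared across decades.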

Revisiting the robustness of post-hoc interpretability methods

Jul 29, 2024

Abstract: Post-hoc interpretability methods play a critical role in explainable artificial intelligence (XAI), as they pinpoint the portions of data that a trained deep learning model deemed important for making a decision. However, different post-hoc interpretability methods often provide different results, casting doubt on their accuracy. For this reason, several evaluation strategies have been proposed to understand the accuracy of post-hoc interpretability. Many of these evaluation strategies provide a coarse-grained assessment, i.e., they evaluate how the performance of the model degrades on average by corrupting different data points across multiple samples. While these strategies are effective in selecting the post-hoc interpretability method that is most reliable on average, they fail to provide a sample-level, also referred to as fine-grained, assessment. In other words, they do not measure the robustness of post-hoc interpretability methods. We propose an approach and two new metrics to provide a fine-grained assessment of post-hoc interpretability methods. We show that the robustness of a method is generally linked to its coarse-grained performance.

An Embedded Feature Selection Framework for Control

Jun 19, 2022

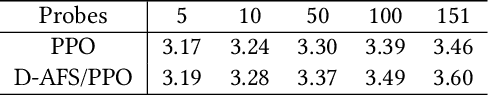

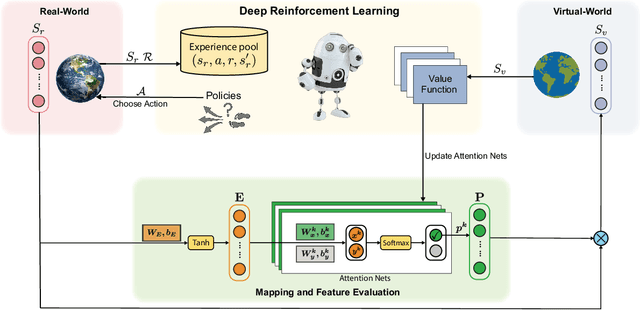

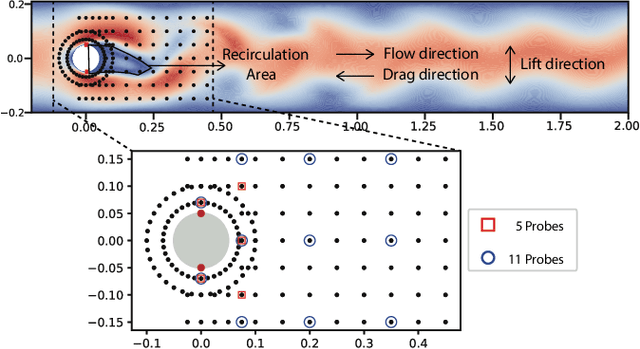

Abstract: Reducing sensor requirements while keeping optimal control performance is crucial to many industrial control applications to achieve robust, low-cost, and computation-efficient controllers. However, existing feature selection solutions for the typical machine learning domain can hardly be applied in the domain of control with changing dynamics. In this paper, we propose a novel framework, the Dual-world embedded Attentive Feature Selection (D-AFS), that can efficiently select the most relevant sensors for the system under dynamic control. Rather than the one world used in most Deep Reinforcement Learning (DRL) algorithms, D-AFS has both the real world and its virtual peer with twisted features. By analyzing the DRL's response in the two worlds, D-AFS can quantitatively identify the respective features' importance towards control. A well-known active flow control problem, cylinder drag reduction, is used for evaluation. Results show that D-AFS successfully finds an optimized five-probe layout with 18.7% more drag reduction than the state-of-the-art solution with 151 probes and 49.2% more than the five-probe layout by human experts. We also apply this solution to four OpenAI classical control cases. In all cases, D-AFS achieves the same or better sensor configurations than the originally provided solutions. The results highlight, we argue, a new way to achieve efficient and optimal sensor designs for experimental or industrial systems. Our source code is publicly available at https://github.com/G-AILab/DAFSFluid.
