Wenwei Lin

Learning Monocular Depth from Events via Egomotion Compensation

Dec 26, 2024

Abstract: Event cameras are neuromorphically inspired sensors that sparsely and asynchronously report brightness changes. Their high temporal resolution, high dynamic range, and low power consumption make them well-suited for addressing challenges in monocular depth estimation (e.g., high-speed or low-light conditions). However, existing methods primarily process event streams with black-box learning systems that incorporate no prior physical principles; as a result, they become over-parameterized and fail to fully exploit the rich temporal information inherent in event camera data. To address this limitation, we incorporate physical motion principles and propose an interpretable monocular depth estimation framework in which the likelihood of each depth hypothesis is explicitly determined by the effect of motion compensation. To achieve this, we propose a Focus Cost Discrimination (FCD) module that measures the clarity of edges as an essential indicator of focus level and integrates spatial surroundings to facilitate cost estimation. Furthermore, we analyze the noise patterns within our framework and improve it with the newly introduced Inter-Hypotheses Cost Aggregation (IHCA) module, where the cost volume is refined through cost trend prediction and multi-scale cost consistency constraints. Extensive experiments on real-world and synthetic datasets demonstrate that our proposed framework outperforms cutting-edge methods by up to 10% in terms of the absolute relative error metric, indicating superior prediction accuracy.
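For readers unfamiliar with the absolute relative error metric cited in the abstract above, the following is a minimal sketch of how it is commonly computed for depth maps: the mean of |predicted - ground truth| / ground truth over pixels with valid ground truth. The function name `abs_rel_error`, the `min_depth` threshold, and the convention that missing ground truth is stored as 0 are assumptions made for illustration, not details taken from the paper.

```python
import numpy as np

def abs_rel_error(pred, gt, min_depth=1e-3):
    """Mean absolute relative depth error over valid ground-truth pixels.

    Assumption: pixels without ground truth are stored as 0 (or any value
    not greater than min_depth) and are excluded from the average.
    """
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    valid = gt > min_depth  # boolean mask of pixels that have ground truth
    return float(np.mean(np.abs(pred[valid] - gt[valid]) / gt[valid]))

# Toy 2x2 depth maps in metres; the bottom-left pixel has no ground truth.
gt = np.array([[2.0, 4.0], [0.0, 10.0]])
pred = np.array([[2.2, 3.6], [5.0, 10.0]])
score = abs_rel_error(pred, gt)
```

Lower values are better, so an improvement "by up to 10%" in this metric corresponds to a lower relative error than the compared methods.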

An Embedded Feature Selection Framework for Control

Jun 19, 2022

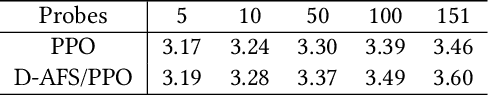

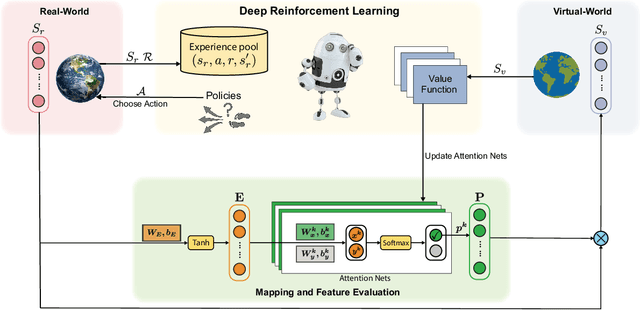

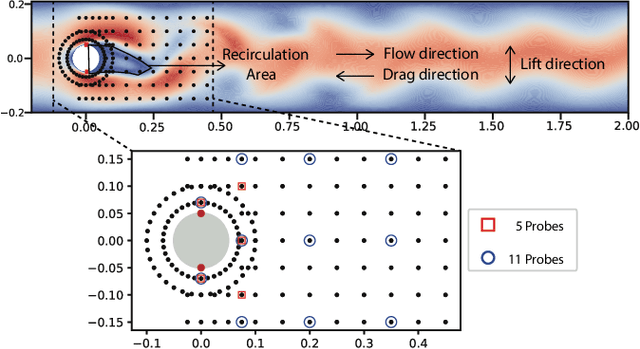

Abstract: Reducing sensor requirements while keeping optimal control performance is crucial to many industrial control applications to achieve robust, low-cost, and computation-efficient controllers. However, existing feature selection solutions for the typical machine learning domain can hardly be applied in the domain of control with changing dynamics. In this paper, we propose a novel framework, the Dual-world embedded Attentive Feature Selection (D-AFS), which can efficiently select the most relevant sensors for the system under dynamic control. Rather than the one world used in most Deep Reinforcement Learning (DRL) algorithms, D-AFS has both the real world and its virtual peer with twisted features. By analyzing the DRL's response in the two worlds, D-AFS can quantitatively identify each feature's importance for control. A well-known active flow control problem, cylinder drag reduction, is used for evaluation. Results show that D-AFS successfully finds an optimized five-probe layout that achieves 18.7% more drag reduction than the state-of-the-art solution with 151 probes and 49.2% more than the five-probe layout designed by human experts. We also apply this solution to four OpenAI classical control cases. In all cases, D-AFS achieves the same or better sensor configurations than the originally provided solutions. We argue that these results highlight a new way to achieve efficient and optimal sensor designs for experimental or industrial systems. Our source code is publicly available at https://github.com/G-AILab/DAFSFluid.
