Dongdong Ge

StepORLM: A Self-Evolving Framework With Generative Process Supervision For Operations Research Language Models

Sep 26, 2025

Abstract:Large Language Models (LLMs) have shown promising capabilities for solving Operations Research (OR) problems. While reinforcement learning serves as a powerful paradigm for LLM training on OR problems, existing works generally face two key limitations. First, outcome reward suffers from the credit assignment problem, where correct final answers can reinforce flawed reasoning. Second, conventional discriminative process supervision is myopic, failing to evaluate the interdependent steps of OR modeling holistically. To this end, we introduce StepORLM, a novel self-evolving framework with generative process supervision. At its core, StepORLM features a co-evolutionary loop where a policy model and a generative process reward model (GenPRM) iteratively improve on each other. This loop is driven by a dual-feedback mechanism: definitive, outcome-based verification from an external solver, and nuanced, holistic process evaluation from the GenPRM. The combined signal is used to align the policy via Weighted Direct Preference Optimization (W-DPO) and simultaneously refine the GenPRM. Our resulting 8B-parameter StepORLM establishes a new state-of-the-art across six benchmarks, significantly outperforming vastly larger generalist models, agentic methods, and specialized baselines. Moreover, the co-evolved GenPRM is able to act as a powerful and universally applicable process verifier, substantially boosting the inference scaling performance of both our own model and other existing LLMs.

Auto-Formulating Dynamic Programming Problems with Large Language Models

Jul 15, 2025

Abstract:Dynamic programming (DP) is a fundamental method in operations research, but formulating DP models has traditionally required expert knowledge of both the problem context and DP techniques. Large Language Models (LLMs) offer the potential to automate this process. However, DP problems pose unique challenges due to their inherently stochastic transitions and the limited availability of training data. These factors make it difficult to directly apply existing LLM-based models or frameworks developed for other optimization problems, such as linear or integer programming. We introduce DP-Bench, the first benchmark covering a wide range of textbook-level DP problems to enable systematic evaluation. We present Dynamic Programming Language Model (DPLM), a 7B-parameter specialized model that achieves performance comparable to state-of-the-art LLMs like OpenAI's o1 and DeepSeek-R1, and surpasses them on hard problems. Central to DPLM's effectiveness is DualReflect, our novel synthetic data generation pipeline, designed to scale up training data from a limited set of initial examples. DualReflect combines forward generation for diversity and backward generation for reliability. Our results reveal a key insight: backward generation is favored in low-data regimes for its strong correctness guarantees, while forward generation, though lacking such guarantees, becomes increasingly valuable at scale for introducing diverse formulations. This trade-off highlights the complementary strengths of both approaches and the importance of combining them.

Solver-Informed RL: Grounding Large Language Models for Authentic Optimization Modeling

May 17, 2025

Abstract:Optimization modeling is fundamental to decision-making across diverse domains. Despite progress in automating optimization formulation from natural language descriptions, Large Language Models (LLMs) often struggle to generate formally correct and usable models due to hallucinations, posing a challenge for reliable automation. Inspired by the success of Reinforcement Learning (RL) in enhancing Large Reasoning Models, we present Solver-Informed Reinforcement Learning (SIRL). This novel framework leverages external optimization solvers as verifiable reward mechanisms to significantly improve the authenticity of LLMs for optimization modeling. Acting as precise verifiers, these solvers automatically assess the executable code and the instance-level mathematical model represented by the associated LP file, yielding precise and comprehensive feedback signals -- including syntax, feasibility, and solution quality -- that directly inform the RL process. This automated verification process, powered by classic optimization solvers, also underpins our instance-enhanced self-consistency method to synthesize high-quality training data. Extensive experiments on diverse public benchmarks demonstrate that SIRL achieves state-of-the-art performance, substantially outperforming existing methods in generating accurate and executable optimization models.

Beyond $\mathcal{O}(\sqrt{T})$ Regret: Decoupling Learning and Decision-making in Online Linear Programming

Jan 06, 2025

Abstract:Online linear programming plays an important role in both revenue management and resource allocation, and recent research has focused on developing efficient first-order online learning algorithms. Despite the empirical success of first-order methods, they typically achieve a regret no better than $\mathcal{O} ( \sqrt{T} )$, which is suboptimal compared to the $\mathcal{O} (\log T)$ bound guaranteed by the state-of-the-art linear programming (LP)-based online algorithms. This paper establishes a general framework that improves upon the $\mathcal{O} ( \sqrt{T} )$ result when the LP dual problem exhibits certain error bound conditions. For the first time, we show that first-order learning algorithms achieve $o( \sqrt{T} )$ regret in the continuous support setting and $\mathcal{O} (\log T)$ regret in the finite support setting beyond the non-degeneracy assumption. Our results significantly improve the state-of-the-art regret results and provide new insights for sequential decision-making.
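For orientation, the kind of first-order online algorithm the abstract refers to can be sketched as a dual subgradient update. This is a minimal illustration of a standard baseline of the $\mathcal{O}(\sqrt{T})$ type, not the framework proposed in the paper; the function name `online_lp_dual_descent`, its parameters, and the budget check are assumptions made for this example.

```python
def online_lp_dual_descent(rewards, costs, budget_per_step, step_size):
    """Baseline first-order online LP sketch (hypothetical helper).

    rewards[t] is the reward of request t, costs[t] its resource usage
    vector, and budget_per_step the average budget per period. A dual
    price vector is updated by a projected subgradient step each period.
    """
    horizon = len(rewards)
    num_resources = len(budget_per_step)
    price = [0.0] * num_resources
    remaining = [b * horizon for b in budget_per_step]
    decisions = []
    for reward, usage in zip(rewards, costs):
        # Accept when the reward exceeds the current priced resource cost.
        priced_cost = sum(p * a for p, a in zip(price, usage))
        accept = reward > priced_cost
        # Never exceed the remaining budget (assumed hard constraint).
        if accept and any(a > rem for a, rem in zip(usage, remaining)):
            accept = False
        x = 1.0 if accept else 0.0
        if accept:
            remaining = [rem - a for rem, a in zip(remaining, usage)]
        # Projected subgradient step on the dual prices.
        price = [
            max(0.0, p + step_size * (a * x - d))
            for p, a, d in zip(price, usage, budget_per_step)
        ]
        decisions.append(x)
    return decisions, price


decisions, final_price = online_lp_dual_descent(
    rewards=[1.0, 0.1],
    costs=[[1.0], [1.0]],
    budget_per_step=[0.5],
    step_size=1.0,
)
```

In practice the step size is usually chosen on the order of $1/\sqrt{T}$; the value above is only for a two-period toy run.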

A Robust Anchor-based Method for Multi-Camera Pedestrian Localization

Oct 25, 2024

Abstract:This paper addresses the problem of vision-based pedestrian localization, which estimates a pedestrian's location using images and camera parameters. In practice, however, calibrated camera parameters often deviate from the ground truth, leading to inaccuracies in localization. To address this issue, we propose an anchor-based method that leverages fixed-position anchors to reduce the impact of camera parameter errors. We provide a theoretical analysis that demonstrates the robustness of our approach. Experiments conducted on simulated, real-world, and public datasets show that our method significantly improves localization accuracy and remains resilient to noise in camera parameters, compared to methods without anchors.

Reward Learning From Preference With Ties

Oct 05, 2024

Abstract:Reward learning plays a pivotal role in Reinforcement Learning from Human Feedback (RLHF), ensuring the alignment of language models. The Bradley-Terry (BT) model stands as the prevalent choice for capturing human preferences from datasets containing pairs of chosen and rejected responses. In preference modeling, the focus is not on absolute values but rather on the reward difference between chosen and rejected responses, referred to as preference strength. Thus, precise evaluation of preference strength holds paramount importance in preference modeling. However, an easily overlooked factor that significantly affects preference strength measurement is that human attitudes towards two responses do not always indicate a preference for one over the other; ties are also a common occurrence. To address this, we propose the adoption of the generalized Bradley-Terry model -- the Bradley-Terry model with ties (BTT) -- to accommodate tied preferences, thus leveraging additional information. We prove that even with access to the true distributions of prompt and response, disregarding ties can lead to a notable bias in preference strength measurement. Comprehensive experiments further validate the advantages of incorporating ties in preference modeling. Notably, fine-tuning with BTT significantly outperforms fine-tuning with BT on synthetic preference datasets with ties, labeled by state-of-the-art open-source LLMs.
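To make the idea of a Bradley-Terry model with ties concrete, here is a minimal sketch of one common generalization, the Rao-Kupper form, in which a parameter `theta >= 1` controls how likely ties are. The abstract does not spell out the exact parameterization, so the formula, the function names, and the default `theta` are assumptions for illustration only.

```python
import math


def btt_probabilities(reward_a, reward_b, theta=2.0):
    """Return (P(a preferred), P(b preferred), P(tie)).

    Rao-Kupper style model (assumed form): theta = 1 recovers the
    ordinary Bradley-Terry model with no ties.
    """
    if theta < 1.0:
        raise ValueError("theta must be >= 1")
    diff = reward_a - reward_b  # the preference strength
    p_a = 1.0 / (1.0 + theta * math.exp(-diff))
    p_b = 1.0 / (1.0 + theta * math.exp(diff))
    p_tie = 1.0 - p_a - p_b
    return p_a, p_b, p_tie


def btt_negative_log_likelihood(reward_a, reward_b, label, theta=2.0):
    """Loss for one labelled pair; label is 'a', 'b', or 'tie'."""
    p_a, p_b, p_tie = btt_probabilities(reward_a, reward_b, theta)
    prob = {"a": p_a, "b": p_b, "tie": p_tie}[label]
    return -math.log(prob)


# With equal rewards and theta = 2, all three outcomes are equally likely.
equal_case = btt_probabilities(0.0, 0.0, theta=2.0)
```

Only the reward difference enters the probabilities, which matches the abstract's point that preference strength, not absolute reward, is what the model captures.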

Solving Integrated Process Planning and Scheduling Problem via Graph Neural Network Based Deep Reinforcement Learning

Sep 02, 2024

Abstract:The Integrated Process Planning and Scheduling (IPPS) problem combines process route planning and shop scheduling to achieve high efficiency in manufacturing and maximize resource utilization, which is crucial for modern manufacturing systems. Traditional methods using Mixed Integer Linear Programming (MILP) and heuristic algorithms cannot balance solution quality and speed well when solving IPPS. In this paper, we propose a novel end-to-end Deep Reinforcement Learning (DRL) method. We model the IPPS problem as a Markov Decision Process (MDP) and employ a Heterogeneous Graph Neural Network (GNN) to capture the complex relationships among operations, machines, and jobs. To optimize the scheduling strategy, we use Proximal Policy Optimization (PPO). Experimental results show that, compared to traditional methods, our approach significantly improves solution efficiency and quality in large-scale IPPS instances, providing superior scheduling strategies for modern intelligent manufacturing systems.

ORLM: Training Large Language Models for Optimization Modeling

May 30, 2024

Abstract:Large Language Models (LLMs) have emerged as powerful tools for tackling complex Operations Research (OR) problems by providing the capacity to automate optimization modeling. However, current methodologies heavily rely on prompt engineering (e.g., multi-agent cooperation) with proprietary LLMs, raising data privacy concerns that could be prohibitive in industry applications. To tackle this issue, we propose training open-source LLMs for optimization modeling. We identify four critical requirements for the training dataset of OR LLMs, and design and implement OR-Instruct, a semi-automated process for creating synthetic data tailored to specific requirements. We also introduce the IndustryOR benchmark, the first industrial benchmark for testing LLMs on solving real-world OR problems. We apply the data from OR-Instruct to various open-source LLMs of 7B size (termed ORLMs), resulting in a significantly improved capability for optimization modeling. Our best-performing ORLM achieves state-of-the-art performance on the NL4OPT, MAMO, and IndustryOR benchmarks. Our code and data are available at https://github.com/Cardinal-Operations/ORLM.

Decoupling Learning and Decision-Making: Breaking the $\mathcal{O}(\sqrt{T})$ Barrier in Online Resource Allocation with First-Order Methods

Feb 11, 2024

Abstract:Online linear programming plays an important role in both revenue management and resource allocation, and recent research has focused on developing efficient first-order online learning algorithms. Despite the empirical success of first-order methods, they typically achieve a regret no better than $\mathcal{O}(\sqrt{T})$, which is suboptimal compared to the $\mathcal{O}(\log T)$ bound guaranteed by the state-of-the-art linear programming (LP)-based online algorithms. This paper establishes several important facts about online linear programming, which unveil the challenge for first-order-method-based online algorithms to achieve beyond $\mathcal{O}(\sqrt{T})$ regret. To address the challenge, we introduce a new algorithmic framework that decouples learning from decision-making. More importantly, for the first time, we show that first-order methods can attain regret $\mathcal{O}(T^{1/3})$ with this new framework. Lastly, we conduct numerical experiments to validate our theoretical findings.

A Homogenization Approach for Gradient-Dominated Stochastic Optimization

Aug 21, 2023

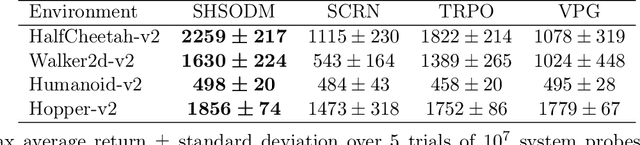

Abstract:The gradient dominance property is a condition weaker than strong convexity, yet it suffices to ensure global convergence for first-order methods even in non-convex optimization. This property finds application in various machine learning domains, including matrix decomposition, linear neural networks, and policy-based reinforcement learning (RL). In this paper, we study the stochastic homogeneous second-order descent method (SHSODM) for gradient-dominated optimization with $\alpha \in [1, 2]$ based on a recently proposed homogenization approach. Theoretically, we show that SHSODM achieves a sample complexity of $O(\epsilon^{-7/(2 \alpha) +1})$ for $\alpha \in [1, 3/2)$ and $\tilde{O}(\epsilon^{-2/\alpha})$ for $\alpha \in [3/2, 2]$. We further provide SHSODM with a variance reduction technique, which enjoys an improved sample complexity of $O( \epsilon ^{-( 7-3\alpha ) /( 2\alpha )})$ for $\alpha \in [1,3/2)$. Our results match the state-of-the-art sample complexity bounds for stochastic gradient-dominated optimization without cubic regularization. Since the homogenization approach only relies on solving extremal eigenvector problems instead of Newton-type systems, our methods gain the advantage of cheaper iterations and robustness in ill-conditioned problems. Numerical experiments on several RL tasks demonstrate the efficiency of SHSODM compared to other off-the-shelf methods.
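The abstract does not state the gradient dominance condition itself. A commonly used form, given here as an assumption rather than the paper's exact definition, says that $f$ is gradient-dominated with exponent $\alpha \in [1, 2]$ if there is a constant $\tau > 0$ (a symbol introduced for this illustration) such that

$$
f(x) - f^{*} \le \tau \, \|\nabla f(x)\|^{\alpha} \quad \text{for all } x, \qquad f^{*} = \min_{x} f(x).
$$

Under this form, $\alpha = 2$ corresponds to the Polyak-Lojasiewicz condition, which every strongly convex function satisfies.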
