Caelan Reed Garrett

The Reality Gap in Robotics: Challenges, Solutions, and Best Practices

Oct 23, 2025

Abstract:Machine learning has facilitated significant advancements across various robotics domains, including navigation, locomotion, and manipulation. Many such achievements have been driven by the extensive use of simulation as a critical tool for training and testing robotic systems prior to their deployment in real-world environments. However, simulations consist of abstractions and approximations that inevitably introduce discrepancies between simulated and real environments, known as the reality gap. These discrepancies significantly hinder the successful transfer of systems from simulation to the real world. Closing this gap remains one of the most pressing challenges in robotics. Recent advances in sim-to-real transfer have demonstrated promising results across various platforms, including locomotion, navigation, and manipulation. By leveraging techniques such as domain randomization, real-to-sim transfer, state and action abstractions, and sim-real co-training, many works have overcome the reality gap. However, challenges persist, and a deeper understanding of the reality gap's root causes and solutions is necessary. In this survey, we present a comprehensive overview of the sim-to-real landscape, highlighting the causes, solutions, and evaluation metrics for the reality gap and sim-to-real transfer.

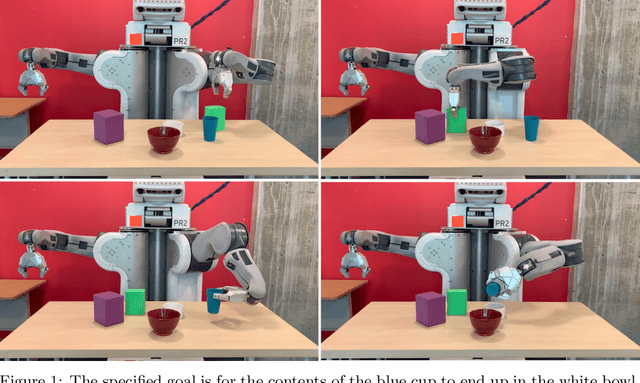

HAMSTER: Hierarchical Action Models For Open-World Robot Manipulation

Feb 08, 2025

Abstract:Large foundation models have shown strong open-world generalization to complex problems in vision and language, but similar levels of generalization have yet to be achieved in robotics. One fundamental challenge is the lack of robotic data, which are typically obtained through expensive on-robot operation. A promising remedy is to leverage cheaper, off-domain data such as action-free videos, hand-drawn sketches, or simulation data. In this work, we posit that hierarchical vision-language-action (VLA) models can be more effective in utilizing off-domain data than standard monolithic VLA models that directly finetune vision-language models (VLMs) to predict actions. In particular, we study a class of hierarchical VLA models, where the high-level VLM is finetuned to produce a coarse 2D path indicating the desired robot end-effector trajectory given an RGB image and a task description. The intermediate 2D path prediction then serves as guidance to the low-level, 3D-aware control policy capable of precise manipulation. Doing so relieves the high-level VLM of fine-grained action prediction, while reducing the low-level policy's burden of complex task-level reasoning. We show that, with the hierarchical design, the high-level VLM can transfer across significant domain gaps between the off-domain finetuning data and real-robot testing scenarios, including differences in embodiments, dynamics, visual appearances, and task semantics. In the real-robot experiments, we observe an average absolute improvement of 20% in success rate across seven different axes of generalization over OpenVLA, representing a 50% relative gain. Visual results are provided at: https://hamster-robot.github.io/

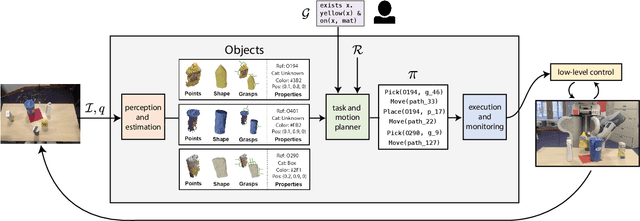

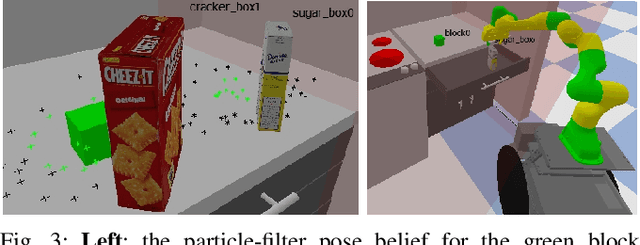

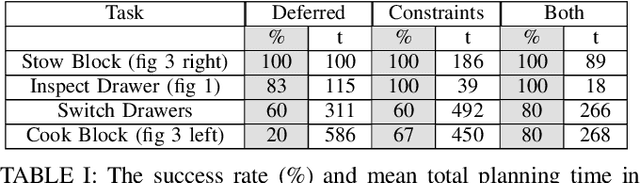

Open-World Task and Motion Planning via Vision-Language Model Inferred Constraints

Nov 13, 2024

Abstract:Foundation models trained on internet-scale data, such as Vision-Language Models (VLMs), excel at performing tasks involving common sense, such as visual question answering. Despite their impressive capabilities, these models cannot currently be directly applied to challenging robot manipulation problems that require complex and precise continuous reasoning. Task and Motion Planning (TAMP) systems can control high-dimensional continuous systems over long horizons by combining traditional primitive robot operations. However, these systems require a detailed model of how the robot can impact its environment, preventing them from directly interpreting and addressing novel human objectives, for example, an arbitrary natural language goal. We propose deploying VLMs within TAMP systems by having them generate discrete and continuous language-parameterized constraints that enable TAMP to reason about open-world concepts. Specifically, we propose algorithms for VLM partial planning that constrain a TAMP system's discrete temporal search and VLM continuous constraints interpretation to augment the traditional manipulation constraints that TAMP systems seek to satisfy. We demonstrate our approach on two robot embodiments, including a real-world robot, across several manipulation tasks, where the desired objectives are conveyed solely through language.

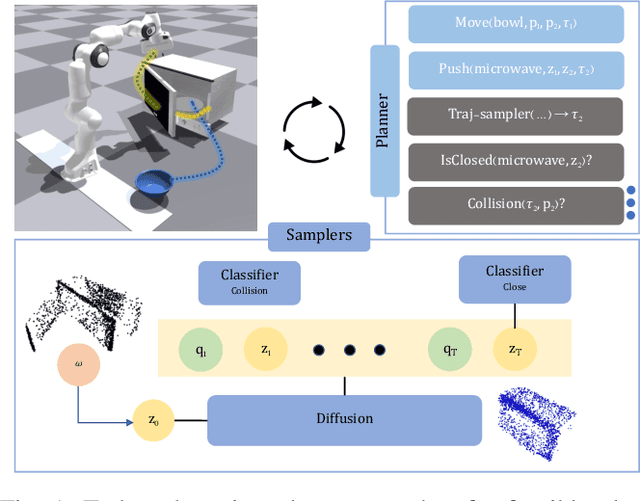

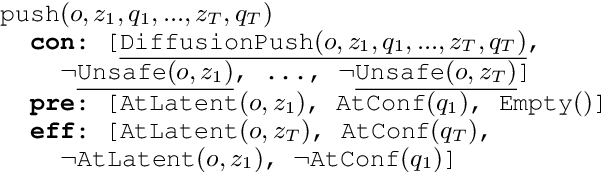

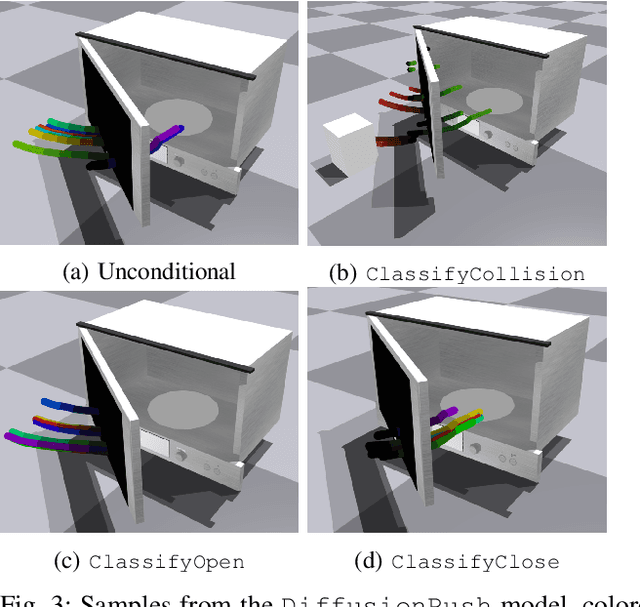

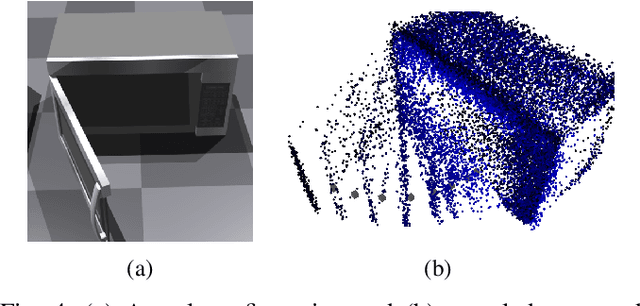

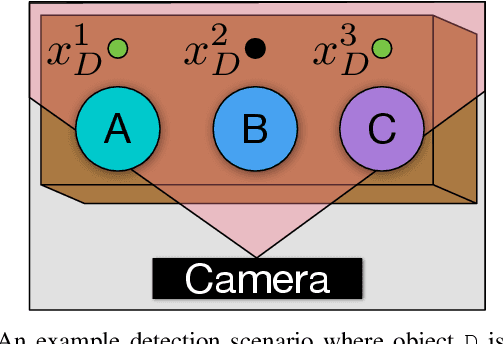

DiMSam: Diffusion Models as Samplers for Task and Motion Planning under Partial Observability

Jun 22, 2023

Abstract:Task and Motion Planning (TAMP) approaches are effective at planning long-horizon autonomous robot manipulation. However, because they require a planning model, it can be difficult to apply them to domains where the environment and its dynamics are not fully known. We propose to overcome these limitations by leveraging deep generative modeling, specifically diffusion models, to learn constraints and samplers that capture these difficult-to-engineer aspects of the planning model. These learned samplers are composed and combined within a TAMP solver in order to find action parameter values jointly that satisfy the constraints along a plan. To tractably make predictions for unseen objects in the environment, we define these samplers on low-dimensional learned latent embeddings of changing object state. We evaluate our approach in an articulated object manipulation domain and show how the combination of classical TAMP, generative learning, and latent embeddings enables long-horizon constraint-based reasoning.

Sequence-Based Plan Feasibility Prediction for Efficient Task and Motion Planning

Nov 03, 2022

Abstract:Robots planning long-horizon behavior in complex environments must be able to quickly reason about the impact of the environment's geometry on what plans are feasible, i.e., whether there exist action parameter values that satisfy all constraints on a candidate plan. In tasks involving articulated and movable obstacles, typical Task and Motion Planning (TAMP) algorithms spend most of their runtime attempting to solve unsolvable constraint satisfaction problems imposed by infeasible plan skeletons. We developed a novel Transformer-based architecture, PIGINet, that predicts plan feasibility based on the initial state, goal, and candidate plans, fusing image and text embeddings with state features. The model sorts the plan skeletons produced by a TAMP planner according to the predicted satisfiability likelihoods. We evaluate the runtime of our learning-enabled TAMP algorithm on several distributions of kitchen rearrangement problems, comparing its performance to that of non-learning baselines and algorithm ablations. Our experiments show that PIGINet substantially improves planning efficiency, cutting down runtime by 80% on average on pick-and-place problems with articulated obstacles. It also achieves zero-shot generalization to problems with unseen object categories thanks to its visual encoding of objects.
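The ordering step this abstract describes, sorting plan skeletons by predicted satisfiability before trying to refine them, can be illustrated with a minimal sketch. This is not the PIGINet implementation: `rank_skeletons`, `solve_in_predicted_order`, `predict_feasibility`, and `refine` are hypothetical names, and the predictor and refinement routine are stand-ins for a learned model and a TAMP constraint solver.

```python
from typing import Callable, List, Optional, Sequence, TypeVar

Skeleton = TypeVar("Skeleton")
Solution = TypeVar("Solution")


def rank_skeletons(
    skeletons: Sequence[Skeleton],
    predict_feasibility: Callable[[Skeleton], float],
) -> List[Skeleton]:
    """Return skeletons ordered from most to least likely feasible.

    `predict_feasibility` is a hypothetical stand-in for a learned
    model that scores a plan skeleton (higher means more likely
    satisfiable).
    """
    return sorted(skeletons, key=predict_feasibility, reverse=True)


def solve_in_predicted_order(
    skeletons: Sequence[Skeleton],
    predict_feasibility: Callable[[Skeleton], float],
    refine: Callable[[Skeleton], Optional[Solution]],
) -> Optional[Solution]:
    """Try to refine skeletons in predicted-feasibility order.

    `refine` is a hypothetical stand-in for the expensive step that
    searches for action parameter values satisfying all constraints;
    it returns None when the skeleton cannot be satisfied.
    """
    for skeleton in rank_skeletons(skeletons, predict_feasibility):
        solution = refine(skeleton)
        if solution is not None:
            return solution  # Stop at the first skeleton that refines.
    return None  # Every candidate skeleton failed to refine.
```

Under these assumptions, the saving comes from attempting the expensive refinement on likely-feasible skeletons first, so fewer infeasible skeletons are tried before a solution is found.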

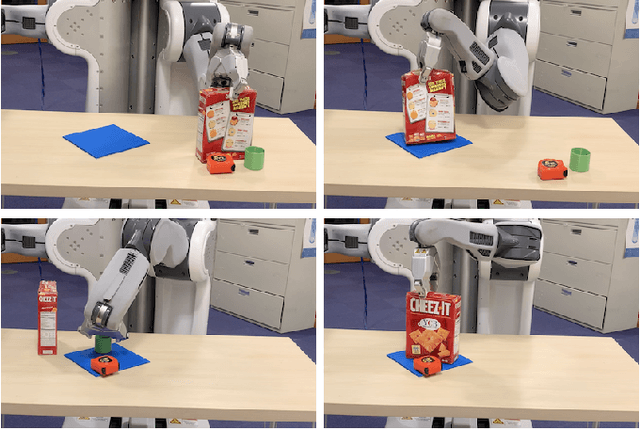

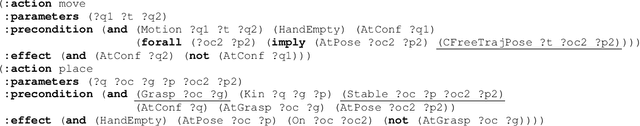

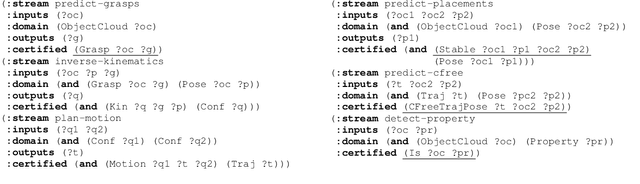

Long-Horizon Manipulation of Unknown Objects via Task and Motion Planning with Estimated Affordances

Aug 10, 2021

Abstract:We present a strategy for designing and building very general robot manipulation systems involving the integration of a general-purpose task-and-motion planner with engineered and learned perception modules that estimate properties and affordances of unknown objects. Such systems are closed-loop policies that map from RGB images, depth images, and robot joint encoder measurements to robot joint position commands. We show that following this strategy a task-and-motion planner can be used to plan intelligent behaviors even in the absence of a priori knowledge regarding the set of manipulable objects, their geometries, and their affordances. We explore several different ways of implementing such perceptual modules for segmentation, property detection, shape estimation, and grasp generation. We show how these modules are integrated within the PDDLStream task and motion planning framework. Finally, we demonstrate that this strategy can enable a single system to perform a wide variety of real-world multi-step manipulation tasks, generalizing over a broad class of objects, object arrangements, and goals, without any prior knowledge of the environment and without re-training.

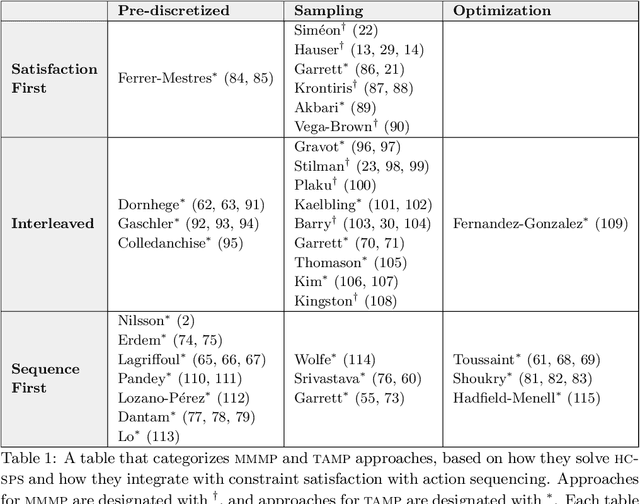

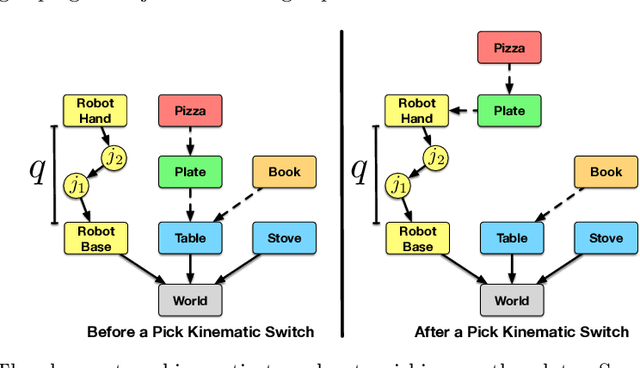

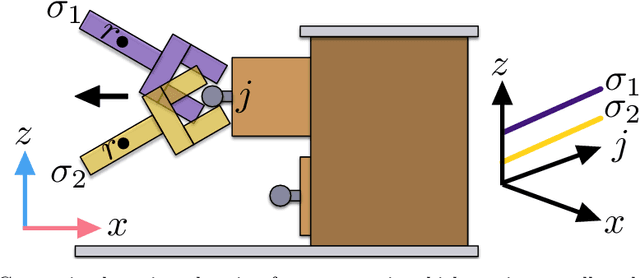

Integrated Task and Motion Planning

Oct 02, 2020

Abstract:The problem of planning for a robot that operates in environments containing a large number of objects, taking actions to move itself through the world as well as to change the state of the objects, is known as task and motion planning (TAMP). TAMP problems contain elements of discrete task planning, discrete-continuous mathematical programming, and continuous motion planning, and thus cannot be effectively addressed by any of these fields directly. In this paper, we define a class of TAMP problems and survey algorithms for solving them, characterizing the solution methods in terms of their strategies for solving the continuous-space subproblems and their techniques for integrating the discrete and continuous components of the search.

Learning compositional models of robot skills for task and motion planning

Jun 08, 2020

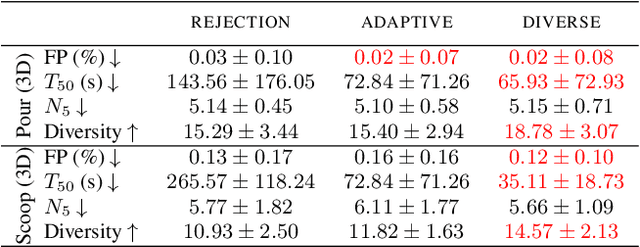

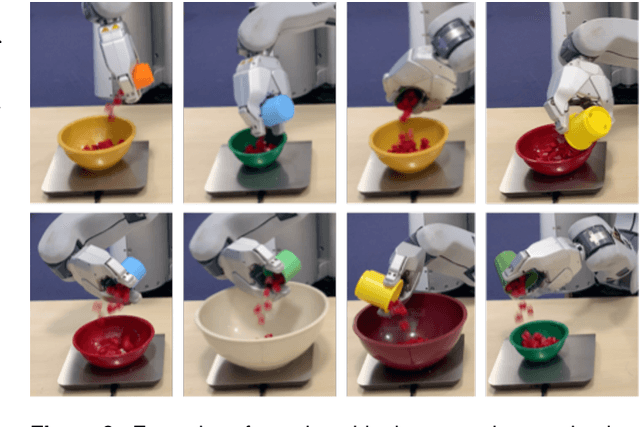

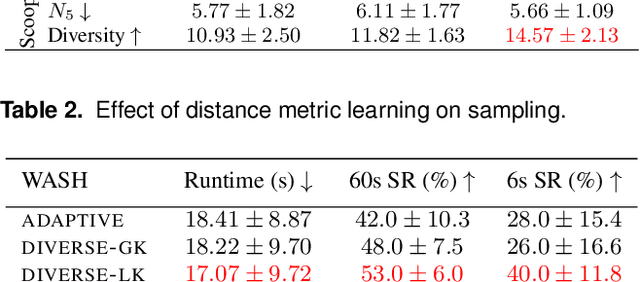

Abstract:The objective of this work is to augment the basic abilities of a robot by learning to use new sensorimotor primitives to solve complex long-horizon manipulation problems. This requires flexible generative planning that can combine primitive abilities in novel combinations and thus generalize across a wide variety of problems. In order to plan with primitive actions, we must have models of the preconditions and effects of those actions: under what circumstances will executing this primitive successfully achieve some particular effect in the world? We use, and develop novel improvements on, state-of-the-art methods for active learning and sampling. We use Gaussian process methods for learning the conditions of operator effectiveness from small numbers of expensive training examples. We develop adaptive sampling methods for generating a comprehensive and diverse sequence of continuous parameter values (such as pouring waypoints for a cup) during planning for solving a new task, so that a complete robot plan can be found as efficiently as possible. We demonstrate our approach in an integrated system, combining traditional robotics primitives with our newly learned models using an efficient robot task and motion planner. We evaluate our approach both in simulation and in the real world by measuring the quality of the selected pours and scoops. Finally, we apply our integrated system to a variety of long-horizon simulated and real-world manipulation problems.

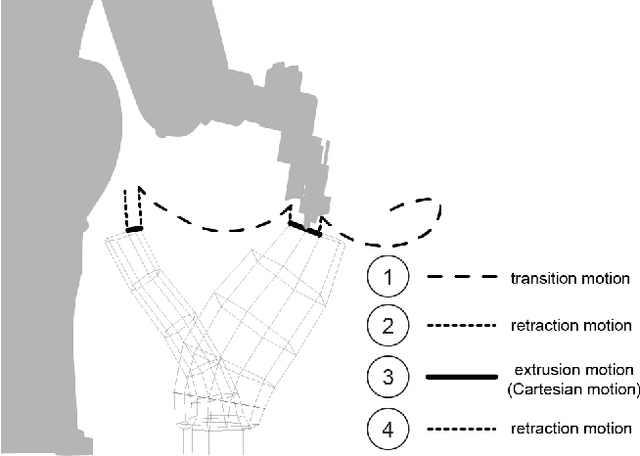

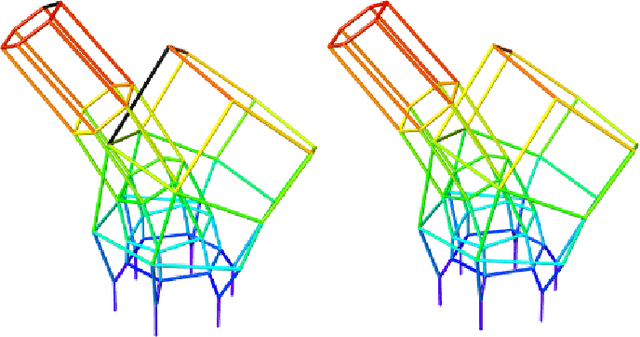

Scalable and Probabilistically Complete Planning for Robotic Spatial Extrusion

Feb 06, 2020

Abstract:There is increasing demand for automated systems that can fabricate 3D structures. Robotic spatial extrusion has become an attractive alternative to traditional layer-based 3D printing due to a manipulator's flexibility to print large, directionally-dependent structures. However, existing extrusion planning algorithms require a substantial amount of human input, do not scale to large instances, and lack theoretical guarantees. In this work, we present a rigorous formalization of robotic spatial extrusion planning and provide several efficient and probabilistically complete planning algorithms. The key planning challenge is, throughout the printing process, satisfying both stiffness constraints that limit the deformation of the structure and geometric constraints that ensure the robot does not collide with the structure. We show that, although these constraints often conflict with each other, a greedy backward state-space search guided by a stiffness-aware heuristic is able to successfully balance both constraints. We empirically compare our methods on a benchmark of over 40 simulated extrusion problems. Finally, we apply our approach to 3 real-world extrusion problems.

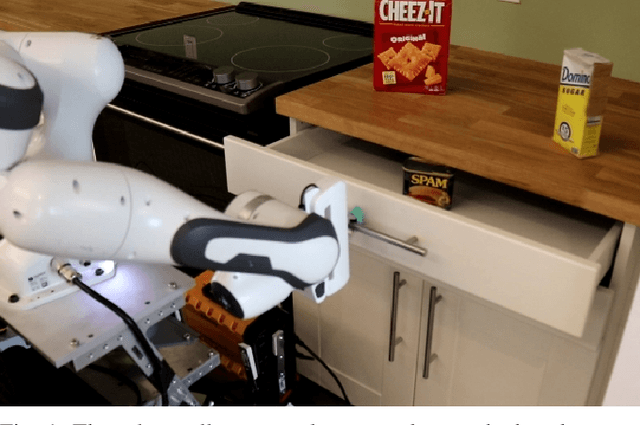

Online Replanning in Belief Space for Partially Observable Task and Motion Problems

Nov 11, 2019

Abstract:To solve multi-step manipulation tasks in the real world, an autonomous robot must take actions to observe its environment and react to unexpected observations. This may require opening a drawer to observe its contents or moving an object out of the way to examine the space behind it. If the robot fails to detect an important object, it must update its belief about the world and compute a new plan of action. Additionally, a robot that acts noisily will never exactly arrive at a desired state. Still, it is important that the robot adjusts accordingly in order to keep making progress towards achieving the goal. In this work, we present an online planning and execution system for robots faced with these kinds of challenges. Our approach is able to efficiently solve partially observable problems both in simulation and in a real-world kitchen.
