Arjun Sharma

Towards Autonomous and Safe Last-mile Deliveries with AI-augmented Self-driving Delivery Robots

May 28, 2023

Abstract:In addition to its crucial impact on customer satisfaction, last-mile delivery (LMD) is notorious for being the most time-consuming and costly stage of the shipping process. Pressing environmental concerns combined with the recent surge of e-commerce sales have sparked renewed interest in automation and electrification of last-mile logistics. To address the hurdles faced by existing robotic couriers, this paper introduces a customer-centric and safety-conscious LMD system for small urban communities based on AI-assisted autonomous delivery robots. The presented framework enables end-to-end automation and optimization of the logistic process while catering for real-world imposed operational uncertainties, clients' preferred time schedules, and safety of pedestrians. To this end, the integrated optimization component is modeled as a robust variant of the Cumulative Capacitated Vehicle Routing Problem with Time Windows, where routes are constructed under uncertain travel times with an objective to minimize the total latency of deliveries (i.e., the overall waiting time of customers, which can negatively affect their satisfaction). We demonstrate the proposed LMD system's utility through real-world trials in a university campus with a single robotic courier. Implementation aspects as well as the findings and practical insights gained from the deployment are discussed in detail. Lastly, we round up the contributions with numerical simulations to investigate the scalability of the developed mathematical formulation with respect to the number of robotic vehicles and customers.
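As a rough illustration of the latency objective mentioned in the abstract above, the sketch below sums the arrival time at every customer over a set of routes. It is a minimal, deterministic example only: time windows, vehicle capacity, and travel-time uncertainty are all ignored, and the names `total_latency`, `travel_time`, and `depot` are hypothetical, not taken from the paper.

```python
from typing import Dict, List, Tuple


def total_latency(
    routes: List[List[str]],
    travel_time: Dict[Tuple[str, str], float],
    depot: str = "depot",
) -> float:
    """Return the sum of customer arrival times over all routes.

    Each route starts at the depot at time 0. This is the cumulative
    (latency) objective only; time windows, capacity, and uncertain
    travel times are deliberately left out of this sketch.
    """
    latency = 0.0
    for route in routes:
        clock = 0.0          # time elapsed since this robot left the depot
        previous = depot
        for customer in route:
            clock += travel_time[(previous, customer)]
            latency += clock  # this customer has waited `clock` time units
            previous = customer
    return latency


# Hypothetical travel times (minutes) between a depot and two customers.
travel_time = {
    ("depot", "A"): 4.0,
    ("A", "B"): 3.0,
    ("depot", "B"): 5.0,
    ("B", "A"): 3.0,
}

latency_a_first = total_latency([["A", "B"]], travel_time)  # 4 + 7
latency_b_first = total_latency([["B", "A"]], travel_time)  # 5 + 8
```

Both visiting orders cover the same customers, but serving the nearer customer first yields the lower total waiting time, which is what distinguishes a latency objective from a plain route-length objective.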

Chest ImaGenome Dataset for Clinical Reasoning

Jul 31, 2021

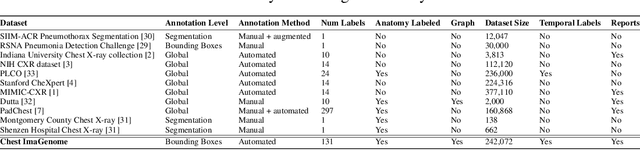

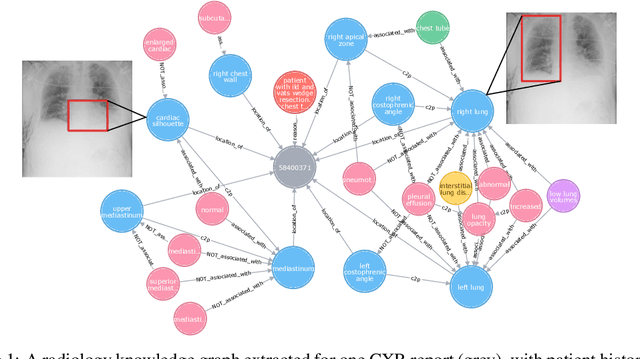

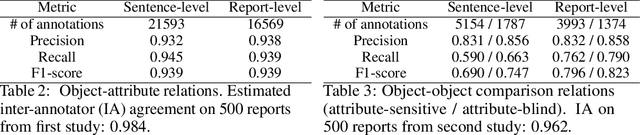

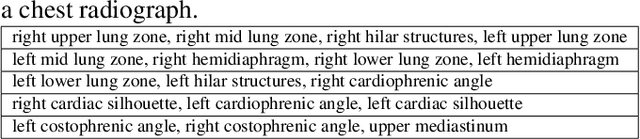

Abstract:Despite the progress in automatic detection of radiologic findings from chest X-ray (CXR) images in recent years, a quantitative evaluation of the explainability of these models is hampered by the lack of locally labeled datasets for different findings. With the exception of a few expert-labeled small-scale datasets for specific findings, such as pneumonia and pneumothorax, most of the CXR deep learning models to date are trained on global "weak" labels extracted from text reports, or trained via a joint image and unstructured text learning strategy. Inspired by the Visual Genome effort in the computer vision community, we constructed the first Chest ImaGenome dataset with a scene graph data structure to describe $242,072$ images. Local annotations are automatically produced using a joint rule-based natural language processing (NLP) and atlas-based bounding box detection pipeline. Through a radiologist-constructed CXR ontology, the annotations for each CXR are connected as an anatomy-centered scene graph, useful for image-level reasoning and multimodal fusion applications. Overall, we provide: i) $1,256$ combinations of relation annotations between $29$ CXR anatomical locations (objects with bounding box coordinates) and their attributes, structured as a scene graph per image, ii) over $670,000$ localized comparison relations (for improved, worsened, or no change) between the anatomical locations across sequential exams, and iii) a manually annotated gold standard scene graph dataset from $500$ unique patients.
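To make the idea of an anatomy-centered scene graph more concrete, here is a minimal sketch of how one image's annotations could be held in memory. The class names, field names, and example values are all hypothetical; they do not reflect the dataset's actual file schema.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class AnatomyNode:
    """One anatomical location with its bounding box and attributes."""
    name: str
    bbox: Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels
    attributes: List[str] = field(default_factory=list)


@dataclass
class ComparisonEdge:
    """A change in one location relative to an earlier exam."""
    anatomy: str
    relation: str  # "improved", "worsened", or "no change"
    prior_exam_id: str


@dataclass
class SceneGraph:
    """All annotations for a single chest X-ray image."""
    image_id: str
    nodes: List[AnatomyNode] = field(default_factory=list)
    comparisons: List[ComparisonEdge] = field(default_factory=list)

    def attributes_at(self, anatomy: str) -> List[str]:
        """Return the attributes attached to a named location, if any."""
        for node in self.nodes:
            if node.name == anatomy:
                return node.attributes
        return []


# Made-up example values, for illustration only.
graph = SceneGraph(
    image_id="example-image",
    nodes=[
        AnatomyNode("left lung", (520, 180, 900, 860), ["lung opacity"]),
        AnatomyNode("right lung", (120, 170, 500, 870)),
    ],
    comparisons=[ComparisonEdge("left lung", "worsened", "example-prior")],
)
left_lung_attributes = graph.attributes_at("left lung")
```

Keeping the attributes on the anatomy nodes, rather than on the image as a whole, is what lets a downstream model ask a localized question such as "what was reported in the left lung?".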

AnaXNet: Anatomy Aware Multi-label Finding Classification in Chest X-ray

May 20, 2021

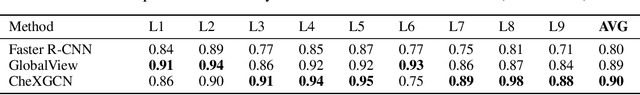

Abstract:Radiologists usually observe anatomical regions of chest X-ray images as well as the overall image before making a decision. However, most existing deep learning models only look at the entire X-ray image for classification, failing to utilize important anatomical information. In this paper, we propose a novel multi-label chest X-ray classification model that accurately classifies the image finding and also localizes the findings to their correct anatomical regions. Specifically, our model consists of two modules, the detection module and the anatomical dependency module. The latter utilizes graph convolutional networks, which enable our model to learn not only the label dependency but also the relationship between the anatomical regions in the chest X-ray. We further utilize a method to efficiently create an adjacency matrix for the anatomical regions using the correlation of the label across the different regions. Detailed experiments and analysis of our results show the effectiveness of our method when compared to the current state-of-the-art multi-label chest X-ray image classification methods while also providing accurate location information.
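The abstract above mentions building an adjacency matrix for anatomical regions from how labels co-vary across regions, without giving the exact rule. The sketch below uses one plausible stand-in, a thresholded co-occurrence ratio between region pairs; this choice, the function name `region_adjacency`, the threshold, and the toy data are all assumptions rather than the paper's method.

```python
from typing import Dict, List, Set


def region_adjacency(
    findings_per_image: List[Dict[str, Set[str]]],
    regions: List[str],
    threshold: float = 0.5,
) -> List[List[int]]:
    """Build a binary region-by-region adjacency matrix.

    Two regions are linked when, among the images where at least one of
    them has a finding, the fraction in which they share a finding is at
    least `threshold`. Every region is linked to itself.
    """
    n = len(regions)
    adjacency = [[0] * n for _ in range(n)]
    for i in range(n):
        adjacency[i][i] = 1  # self-loop, as is common for graph convolutions
        for j in range(i + 1, n):
            shared = 0  # images where both regions carry a common finding
            active = 0  # images where either region carries any finding
            for image in findings_per_image:
                found_i = image.get(regions[i], set())
                found_j = image.get(regions[j], set())
                if found_i or found_j:
                    active += 1
                if found_i & found_j:
                    shared += 1
            if active > 0 and shared / active >= threshold:
                adjacency[i][j] = adjacency[j][i] = 1
    return adjacency


# Toy per-image findings, keyed by region name (made up for illustration).
regions = ["left lung", "right lung", "cardiac silhouette"]
images = [
    {"left lung": {"opacity"}, "right lung": {"opacity"}},
    {"left lung": {"opacity"}, "right lung": {"opacity"},
     "cardiac silhouette": {"enlarged"}},
    {"cardiac silhouette": {"enlarged"}},
]
adjacency = region_adjacency(images, regions)
```

In this toy data the two lungs always share a finding and so become neighbors, while the cardiac silhouette stays connected only to itself.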

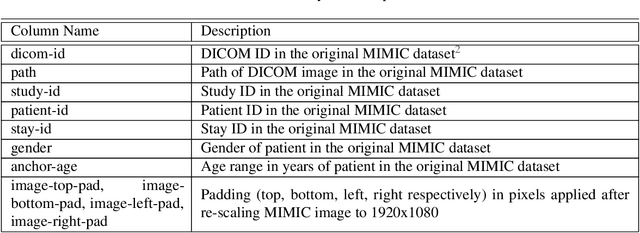

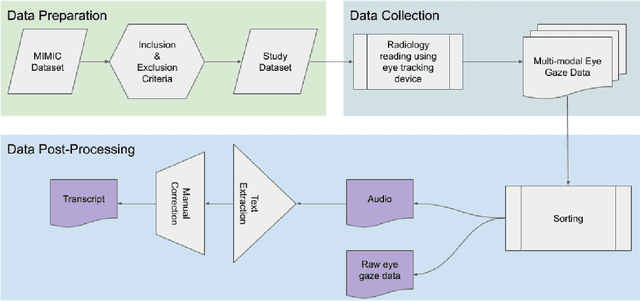

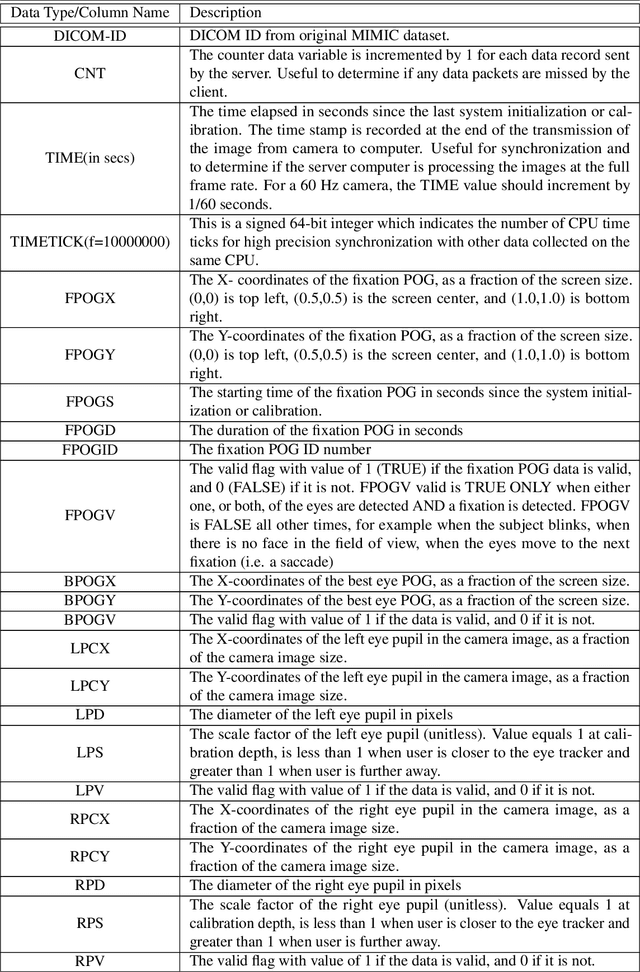

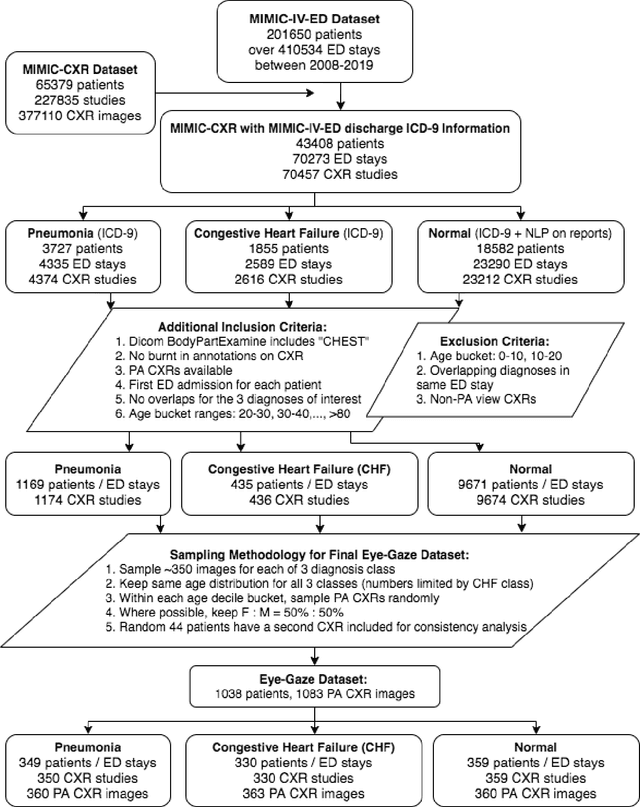

Creation and Validation of a Chest X-Ray Dataset with Eye-tracking and Report Dictation for AI Development

Oct 08, 2020

Abstract:We developed a rich dataset of Chest X-Ray (CXR) images to assist investigators in artificial intelligence. The data were collected using an eye tracking system while a radiologist reviewed and reported on 1,083 CXR images. The dataset contains the following aligned data: CXR image, transcribed radiology report text, radiologist's dictation audio and eye gaze coordinates data. We hope this dataset can contribute to various areas of research, particularly explainable and multimodal deep learning / machine learning methods. Furthermore, investigators in disease classification and localization, automated radiology report generation, and human-machine interaction can benefit from these data. We report deep learning experiments that use attention maps derived from the eye gaze data to show the potential utility of this dataset.

Spatio-temporal relationships between rainfall and convective clouds during Indian Monsoon through a discrete lens

Aug 19, 2020

Abstract:The Indian monsoon, a multi-variable process causing heavy rains during June-September every year, is very heterogeneous in space and time. We study the relationship between rainfall and Outgoing Longwave Radiation (OLR, a proxy for convective cloud cover) for the monsoon seasons of 2004-2010. To identify, classify and visualize spatial patterns of rainfall and OLR, we use a discrete and spatio-temporally coherent representation of the data, created using a statistical model based on a Markov Random Field. Our approach clusters the days with similar spatial distributions of rainfall and OLR into a small number of spatial patterns. We find that eight daily spatial patterns each in rainfall and OLR, and seven joint patterns of rainfall and OLR, describe over 90% of all days. Through these patterns, we find that OLR generally has a strong negative correlation with precipitation, but with significant spatial variations. In particular, peninsular India (except the west coast) is under significant convective cloud cover on a majority of days but remains rainless. We also find that much of the monsoon rainfall co-occurs with low OLR, but some amount of rainfall in Eastern and North-western India in June occurs on high-OLR days, presumably from shallow clouds. To study day-to-day variations of both quantities, we identify spatial patterns in the temporal gradients computed from the observations. We find that changes in convective cloud activity across India most commonly occur due to the establishment of a north-south OLR gradient which persists for 1-2 days and shifts the convective cloud cover from light to deep or vice versa. Such changes are also accompanied by changes in the spatial distribution of precipitation. The present work thus provides a highly reduced description of the complex spatial patterns and their day-to-day variations, and could form a useful tool for future simplified descriptions of this process.

Looking in the Right place for Anomalies: Explainable AI through Automatic Location Learning

Aug 02, 2020

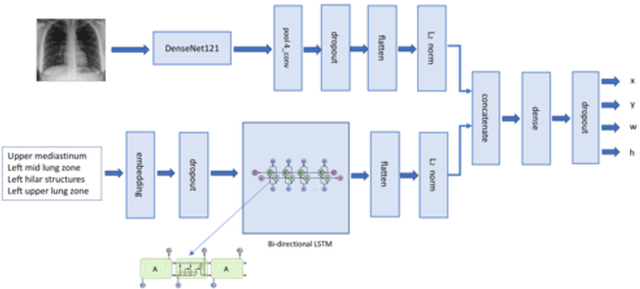

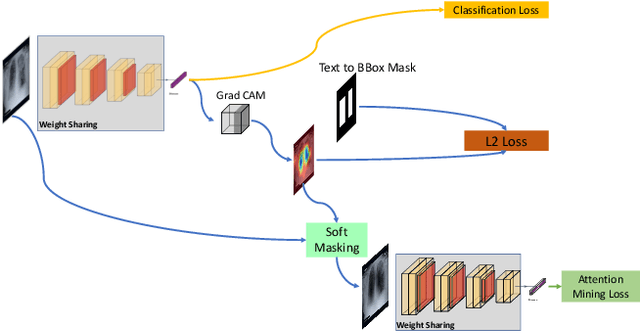

Abstract:Deep learning has now become the de facto approach to the recognition of anomalies in medical imaging. However, the 'black box' way in which deep networks assign anomaly labels to medical images hinders their acceptance, particularly among clinicians. Current explainable AI methods offer justifications through visualizations such as heat maps but cannot guarantee that the network is focusing on the relevant image region fully containing the anomaly. In this paper, we develop an approach to explainable AI in which the anomaly is assured to overlap the expected location when present. This is made possible by automatically extracting location-specific labels from textual reports and learning the association of expected locations to labels using a hybrid combination of Bi-Directional Long Short-Term Memory Recurrent Neural Networks (Bi-LSTM) and DenseNet-121. Using this expected location to bias the subsequent attention-guided inference network, based on ResNet101, isolates the anomaly at the expected location when present. The method is evaluated on a large chest X-ray dataset.

* 5 pages, Paper presented as a poster at the International Symposium on Biomedical Imaging, 2020, Paper Number 655

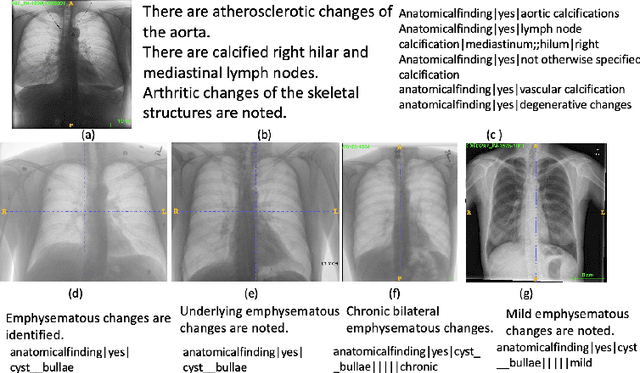

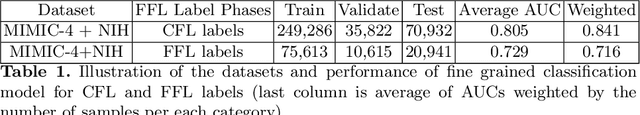

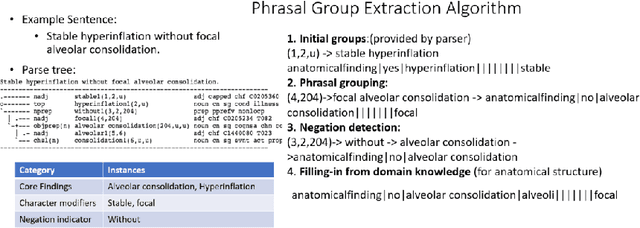

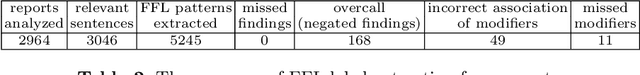

Chest X-ray Report Generation through Fine-Grained Label Learning

Jul 27, 2020

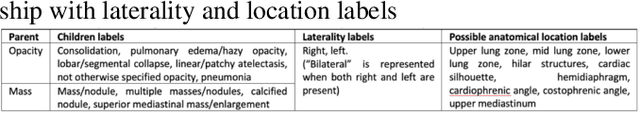

Abstract:Obtaining automated preliminary read reports for common exams such as chest X-rays will expedite clinical workflows and improve operational efficiencies in hospitals. However, the quality of reports generated by current automated approaches is not yet clinically acceptable as they cannot ensure the correct detection of a broad spectrum of radiographic findings nor describe them accurately in terms of laterality, anatomical location, severity, etc. In this work, we present a domain-aware automatic chest X-ray radiology report generation algorithm that learns fine-grained description of findings from images and uses their pattern of occurrences to retrieve and customize similar reports from a large report database. We also develop an automatic labeling algorithm for assigning such descriptors to images and build a novel deep learning network that recognizes both coarse and fine-grained descriptions of findings. The resulting report generation algorithm significantly outperforms the state of the art using established score metrics.

Automated Detection and Type Classification of Central Venous Catheters in Chest X-Rays

Jul 25, 2019

Abstract:Central venous catheters (CVCs) are commonly used in critical care settings for monitoring body functions and administering medications. They are often described in radiology reports by referring to their presence, identity and placement. In this paper, we address the problem of automatic detection of their presence and identity through automated segmentation using deep learning networks and classification based on their intersection with previously learned shape priors from clinician annotations of CVCs. The results not only outperform existing methods of catheter detection achieving 85.2% accuracy at 91.6% precision, but also enable high precision (95.2%) classification of catheter types on a large dataset of over 10,000 chest X-rays, presenting a robust and practical solution to this problem.

Age prediction using a large chest X-ray dataset

Mar 09, 2019

Abstract:Age prediction based on appearances of different anatomies in medical images has been clinically explored for many decades. In this paper, we used deep learning to predict a person's age from Chest X-Rays. Specifically, we trained a CNN in regression fashion on a large publicly available dataset. Moreover, for interpretability, we explored activation maps to identify which areas of a CXR image are important for the machine (i.e. CNN) to predict a patient's age. Overall, amongst correctly predicted CXRs, we see areas near the clavicles, shoulders, spine, and mediastinum being most activated for age prediction, as one would expect biologically. Amongst incorrectly predicted CXRs, we have qualitatively identified disease patterns that could possibly make the anatomies appear older or younger than expected. A further technical and clinical evaluation would improve this work. As CXR is the most commonly requested imaging exam, a potential use case for estimating age may be found in the preventative counseling of patient health status compared to their age-expected average, particularly when there is a large discrepancy between predicted age and the real patient age.

Directed-Info GAIL: Learning Hierarchical Policies from Unsegmented Demonstrations using Directed Information

Sep 29, 2018

Abstract:The use of imitation learning to learn a single policy for a complex task that has multiple modes or hierarchical structure can be challenging. In fact, previous work has shown that when the modes are known, learning separate policies for each mode or sub-task can greatly improve the performance of imitation learning. In this work, we discover the interaction between sub-tasks from their resulting state-action trajectory sequences using a directed graphical model. We propose a new algorithm based on the generative adversarial imitation learning framework which automatically learns sub-task policies from unsegmented demonstrations. Our approach maximizes the directed information flow in the graphical model between sub-task latent variables and their generated trajectories. We also show how our approach connects with the existing Options framework, which is commonly used to learn hierarchical policies.
