Jorge Dias

Red grape detection with accelerated artificial neural networks in the FPGA's programmable logic

Jul 03, 2025

Abstract:Robots usually slow down while scanning in order to detect objects as they move. In addition, the robot's camera is configured with a low frame rate to match the speed of the detection algorithms. These constraints apply while the robot executes tasks and explores, which increases task execution time. AMD has developed the Vitis-AI framework to deploy detection algorithms on FPGAs. However, this tool does not fully use the FPGAs' programmable logic (PL). In this work, we use the FINN architecture to deploy three ANNs, MobileNet v1 with 4-bit quantisation, CNV with 2-bit quantisation, and CNV with 1-bit quantisation (BNN), inside an FPGA's PL. The models were trained on the RG2C dataset, a self-acquired dataset released in open access. MobileNet v1 performed best, reaching a success rate of 98 % and an inference speed of 6611 FPS. In this work, we show that FPGAs can speed up ANNs and make them suitable for attention mechanisms.
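To make the 4-bit, 2-bit, and 1-bit settings mentioned in the abstract concrete, the following is a minimal sketch of symmetric uniform quantisation of a single value. It is a generic illustration only: the function name `quantize_uniform`, the clipping range `x_max`, and the rounding scheme are assumptions, and none of this is the FINN toolchain's API or the authors' training procedure.

```python
def quantize_uniform(x, bits, x_max=1.0):
    """Quantise x to a symmetric grid on [-x_max, x_max] (hypothetical helper)."""
    if bits == 1:
        # Binarised case (as in a BNN): only the sign survives.
        return x_max if x >= 0 else -x_max
    # Number of positive levels: 1 for 2 bits, 7 for 4 bits.
    levels = 2 ** (bits - 1) - 1
    step = x_max / levels
    # Clip to the representable range, then snap to the nearest grid point.
    clipped = max(-x_max, min(x_max, x))
    return round(clipped / step) * step


if __name__ == "__main__":
    for bits in (4, 2, 1):
        print(bits, quantize_uniform(0.3, bits))
```

Fewer bits give a coarser grid, which is the trade-off between accuracy and hardware cost that the three deployed models explore.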

Collaborative Last-Mile Delivery: A Multi-Platform Vehicle Routing Problem With En-route Charging

May 29, 2025

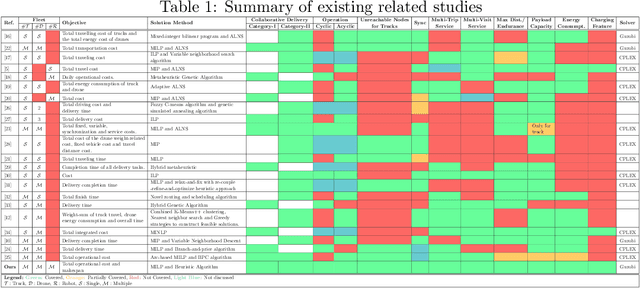

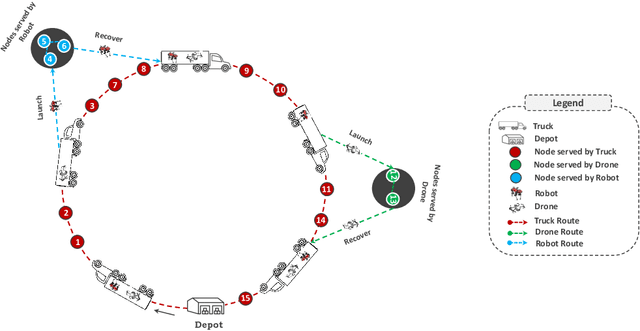

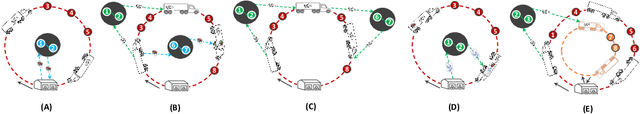

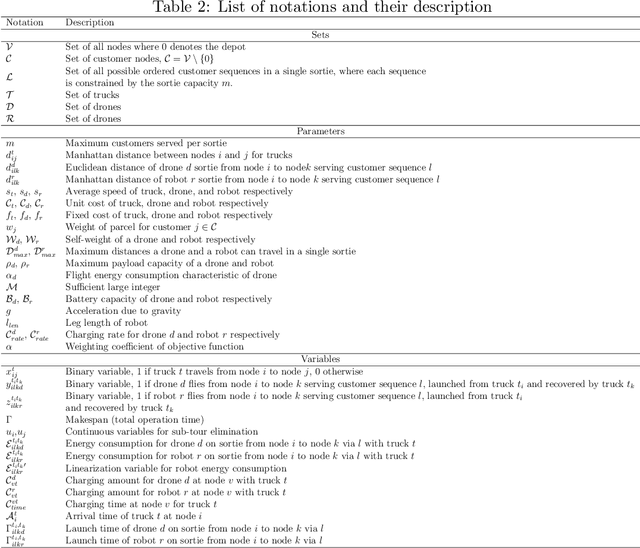

Abstract:The rapid growth of e-commerce and the increasing demand for timely, cost-effective last-mile delivery have increased interest in collaborative logistics. This research introduces a novel collaborative synchronized multi-platform vehicle routing problem with drones and robots (VRP-DR), where a fleet of $\mathcal{M}$ trucks, $\mathcal{N}$ drones and $\mathcal{K}$ robots cooperatively delivers parcels. Trucks serve as mobile platforms, enabling the launching, retrieving, and en-route charging of drones and robots, thereby addressing critical limitations such as restricted payload capacities, limited range, and battery constraints. The VRP-DR incorporates five realistic features: (1) multi-visit service per trip; (2) multi-trip operations; (3) flexible docking, allowing returns to the same or different trucks; (4) cyclic and acyclic operations, enabling return to the same or different nodes; and (5) en-route charging, enabling drones and robots to recharge while being transported on the truck, maximizing operational efficiency by utilizing idle transit time. The VRP-DR is formulated as a mixed-integer linear program (MILP) to minimize both operational costs and makespan. To overcome the computational challenges of solving large-scale instances, a scalable heuristic algorithm, FINDER (Flexible INtegrated Delivery with Energy Recharge), is developed to provide efficient, near-optimal solutions. Numerical experiments across various instance sizes evaluate the performance of the MILP and heuristic approaches in terms of solution quality and computation time. The results demonstrate significant time savings of the combined delivery mode over the truck-only mode and substantial cost reductions from enabling multi-visits. The study also provides insights into the effects of en-route charging, docking flexibility, drone count, speed, and payload capacity on system performance.

Towards Accurate State Estimation: Kalman Filter Incorporating Motion Dynamics for 3D Multi-Object Tracking

May 12, 2025

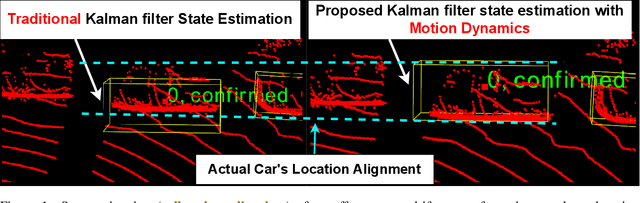

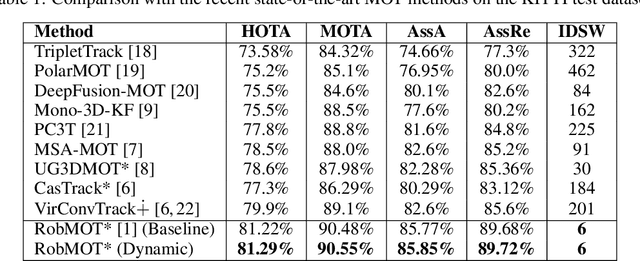

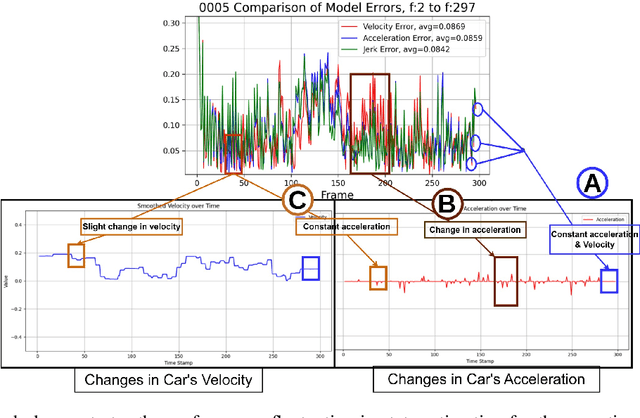

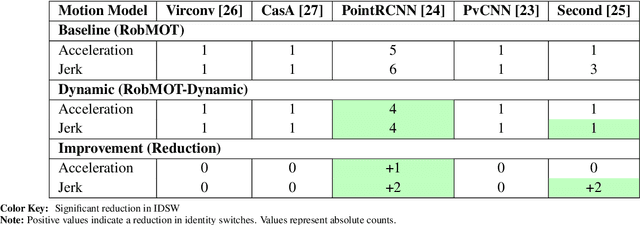

Abstract:This work addresses the critical lack of precision in state estimation in the Kalman filter for 3D multi-object tracking (MOT) and the ongoing challenge of selecting the appropriate motion model. Existing literature commonly relies on constant motion models for estimating the states of objects, neglecting the complex motion dynamics unique to each object. Consequently, trajectory division and imprecise object localization arise, especially under occlusion conditions. The core of these challenges lies in the limitations of the current Kalman filter formulation, which fails to account for the variability of motion dynamics as objects navigate their environments. This work introduces a novel formulation of the Kalman filter that incorporates motion dynamics, allowing the motion model to adaptively adjust according to changes in the object's movement. The proposed Kalman filter substantially improves state estimation, localization, and trajectory prediction compared to the traditional Kalman filter. This is reflected in tracking performance that surpasses recent benchmarks on the KITTI and Waymo Open Datasets, with margins of 0.56% and 0.81% in higher order tracking accuracy (HOTA) and multi-object tracking accuracy (MOTA), respectively. Furthermore, the proposed Kalman filter consistently outperforms the baseline across various detectors. Additionally, it shows an enhanced capability in managing long occlusions compared to the baseline Kalman filter, achieving margins of 1.22% in HOTA and 1.55% in MOTA on the KITTI dataset. The formulation's efficiency is evident, with an additional processing time of only approximately 0.078 ms per frame, ensuring its applicability in real-time applications.
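For context, the "constant motion model" baseline that the abstract contrasts against can be sketched as a one-dimensional constant-velocity Kalman filter. This is the textbook filter, not the paper's proposed formulation; the function names, noise values `q` and `r`, and the demo trajectory are all assumptions chosen for illustration.

```python
def predict(state, cov, dt, q):
    """Constant-velocity prediction: position advances, velocity is held fixed."""
    p, v = state
    (p00, p01), (p10, p11) = cov
    new_state = (p + v * dt, v)
    # F P F^T + Q with F = [[1, dt], [0, 1]] and Q = q * I (assumed noise model).
    new_cov = (
        (p00 + dt * (p01 + p10) + dt * dt * p11 + q, p01 + dt * p11),
        (p10 + dt * p11, p11 + q),
    )
    return new_state, new_cov


def update(state, cov, z, r):
    """Correct the state with a position-only measurement z of variance r."""
    p, v = state
    (p00, p01), (p10, p11) = cov
    s = p00 + r                  # innovation variance
    k0, k1 = p00 / s, p10 / s    # Kalman gain for position and velocity
    y = z - p                    # innovation
    new_state = (p + k0 * y, v + k1 * y)
    new_cov = (
        ((1 - k0) * p00, (1 - k0) * p01),
        (p10 - k1 * p00, p11 - k1 * p01),
    )
    return new_state, new_cov


def run_demo(dt=0.1, true_velocity=2.0, q=1e-3, r=0.01):
    """Track 50 noise-free detections, then coast through a 10-frame occlusion."""
    state, cov = (0.0, 0.0), ((10.0, 0.0), (0.0, 10.0))
    for k in range(1, 51):
        state, cov = predict(state, cov, dt, q)
        state, cov = update(state, cov, true_velocity * dt * k, r)
    tracked_state, tracked_cov = state, cov
    for _ in range(10):          # occlusion: no measurements, prediction only
        state, cov = predict(state, cov, dt, q)
    return tracked_state, tracked_cov, state, cov


if __name__ == "__main__":
    print(run_demo())
```

During the occlusion the filter can only extrapolate with the last velocity estimate while its position uncertainty grows, which is the situation in which a fixed motion model drifts if the object changes its movement.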

snnTrans-DHZ: A Lightweight Spiking Neural Network Architecture for Underwater Image Dehazing

Apr 13, 2025

Abstract:Underwater image dehazing is critical for vision-based marine operations because light scattering and absorption can severely reduce visibility. This paper introduces snnTrans-DHZ, a lightweight Spiking Neural Network (SNN) specifically designed for underwater dehazing. By leveraging the temporal dynamics of SNNs, snnTrans-DHZ efficiently processes time-dependent raw image sequences while maintaining low power consumption. Static underwater images are first converted into time-dependent sequences by repeatedly inputting the same image over user-defined timesteps. These RGB sequences are then transformed into LAB color space representations and processed concurrently. The architecture features three key modules: (i) a K estimator that extracts features from multiple color space representations; (ii) a Background Light Estimator that jointly infers the background light component from the RGB-LAB images; and (iii) a soft image reconstruction module that produces haze-free, visibility-enhanced outputs. The snnTrans-DHZ model is directly trained using a surrogate gradient-based backpropagation through time (BPTT) strategy alongside a novel combined loss function. Evaluated on the UIEB benchmark, snnTrans-DHZ achieves a PSNR of 21.68 dB and an SSIM of 0.8795, and on the EUVP dataset, it yields a PSNR of 23.46 dB and an SSIM of 0.8439. With only 0.5670 million network parameters, and requiring just 7.42 GSOPs and 0.0151 J of energy, the algorithm significantly outperforms existing state-of-the-art methods in terms of efficiency. These features make snnTrans-DHZ highly suitable for deployment in underwater robotics, marine exploration, and environmental monitoring.
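The step of turning a static image into a time-dependent sequence by repeating it over a number of timesteps can be sketched as follows, together with a single leaky integrate-and-fire (LIF) neuron that consumes such a sequence. The neuron model, the decay `beta`, the threshold, and the soft reset are assumptions for illustration; the abstract does not specify the neuron used in snnTrans-DHZ.

```python
def repeat_over_timesteps(image, timesteps):
    """Present the same static image at every timestep (direct input encoding)."""
    return [image for _ in range(timesteps)]


def lif_neuron(inputs, beta=0.9, threshold=1.0):
    """Leaky integrate-and-fire neuron with soft reset (assumed neuron model)."""
    membrane = 0.0
    spikes = []
    for current in inputs:
        # Leak the previous potential, then integrate the new input.
        membrane = beta * membrane + current
        if membrane >= threshold:
            spikes.append(1)
            membrane -= threshold  # soft reset: subtract the threshold
        else:
            spikes.append(0)
    return spikes


if __name__ == "__main__":
    image = [[0.6, 0.1], [0.3, 0.9]]              # toy 2x2 grayscale image
    sequence = repeat_over_timesteps(image, timesteps=5)
    # Feed the top-left pixel of every frame into one neuron.
    pixel_stream = [frame[0][0] for frame in sequence]
    print(lif_neuron(pixel_stream))               # [0, 1, 0, 1, 0]
```

Although every frame is identical, the neuron's membrane state evolves over time, so the output is a spike train whose rate reflects the pixel intensity.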

Underwater Image Enhancement by Convolutional Spiking Neural Networks

Mar 26, 2025

Abstract:Underwater image enhancement (UIE) is fundamental for marine applications, including autonomous vision-based navigation. Deep learning methods using convolutional neural networks (CNN) and vision transformers have advanced UIE performance. Recently, spiking neural networks (SNN) have gained attention for their lightweight design, energy efficiency, and scalability. This paper introduces UIE-SNN, the first SNN-based UIE algorithm to improve visibility of underwater images. UIE-SNN is a 19-layered convolutional spiking encoder-decoder framework with skip connections, directly trained using a surrogate gradient-based backpropagation through time (BPTT) strategy. We explore and validate the influence of training datasets on energy reduction, a unique advantage of the UIE-SNN architecture, in contrast to conventional learning-based architectures, where energy consumption is model-dependent. UIE-SNN optimizes the loss function in latent space representation to reconstruct clear underwater images. Our algorithm performs on par with its non-spiking counterpart methods in terms of PSNR and structural similarity index (SSIM) at reduced timesteps ($T=5$) and energy consumption of 85%. The algorithm is trained on two publicly available benchmark datasets, UIEB and EUVP, and tested on unseen images from UIEB, EUVP, LSUI, U45, and our custom UIE dataset. The UIE-SNN algorithm achieves a PSNR of 17.7801 dB and an SSIM of 0.7454 on UIEB, and a PSNR of 23.1725 dB and an SSIM of 0.7890 on EUVP. UIE-SNN achieves this algorithmic performance with fewer operations (147.49 GSOPs) and less energy (0.1327 J) compared to its non-spiking counterpart (218.88 GFLOPs and 1.0068 J). Compared with existing SOTA UIE methods, UIE-SNN achieves an average of 6.5× improvement in energy efficiency. The source code is available at https://github.com/vidya-rejul/UIE-SNN.git.
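The PSNR figures quoted above follow the standard definition, 10·log10(MAX² / MSE). The sketch below computes it for flat lists of pixel values; the function name and the flat-list input are assumptions made to keep the example dependency-free, and it is not taken from the UIE-SNN code base.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    if not reference or len(reference) != len(estimate):
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixels.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    clean = [0.0, 0.5, 1.0, 0.25]
    enhanced = [0.1, 0.4, 0.9, 0.35]
    print(round(psnr(clean, enhanced, max_value=1.0), 2))
```

Higher values mean the enhanced image is closer to the reference, so the two PSNR figures reported for UIEB and EUVP are only comparable within the same dataset and reference set.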

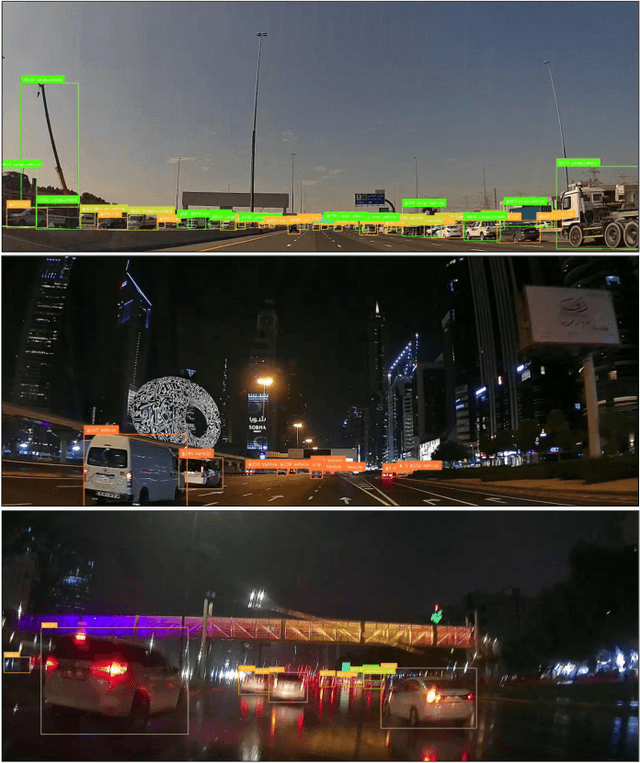

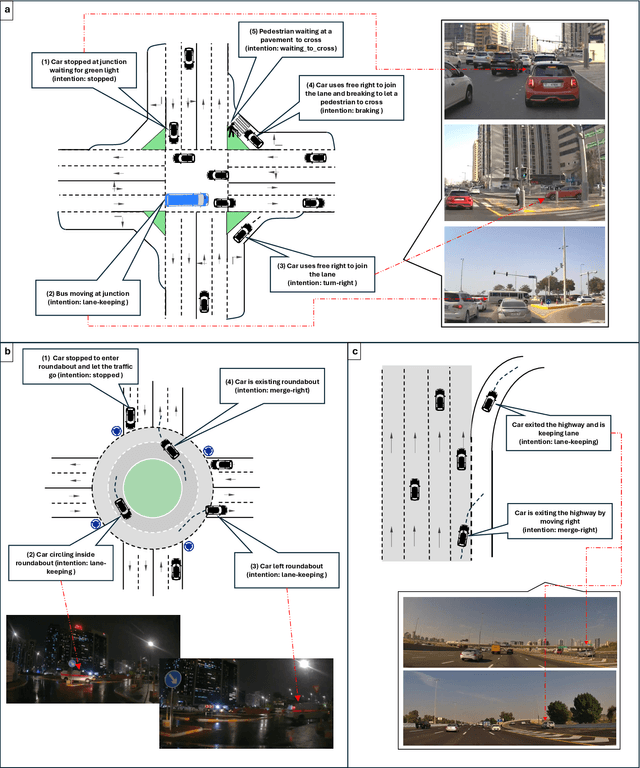

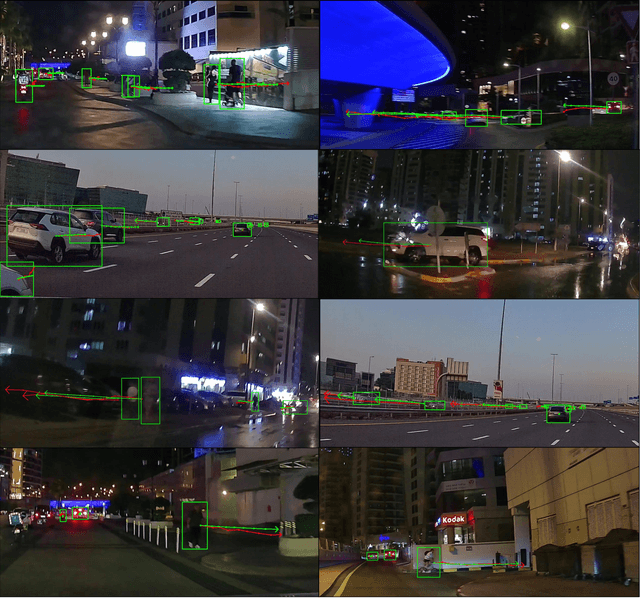

EMT: A Visual Multi-Task Benchmark Dataset for Autonomous Driving in the Arab Gulf Region

Feb 26, 2025

Abstract:This paper introduces the Emirates Multi-Task (EMT) dataset - the first publicly available dataset for autonomous driving collected in the Arab Gulf region. The EMT dataset captures the unique road topology, high traffic congestion, and distinctive characteristics of the Gulf region, including variations in pedestrian clothing and weather conditions. It contains over 30,000 frames from a dash-camera perspective, along with 570,000 annotated bounding boxes, covering approximately 150 kilometers of driving routes. The EMT dataset supports three primary tasks: tracking, trajectory forecasting, and intention prediction. Each benchmark dataset is complemented with corresponding evaluations: (1) multi-agent tracking experiments, focusing on multi-class scenarios and occlusion handling; (2) trajectory forecasting evaluation using deep sequential and interaction-aware models; and (3) intention benchmark experiments for predicting agents' intentions from observed trajectories. The dataset is publicly available at https://avlab.io/emt-dataset, and pre-processing scripts along with evaluation models can be accessed at https://github.com/AV-Lab/emt-dataset.

Dehazing-aided Multi-Rate Multi-Modal Pose Estimation Framework for Mitigating Visual Disturbances in Extreme Underwater Domain

Nov 21, 2024

Abstract:This paper delves into the potential of DU-VIO, a dehazing-aided hybrid multi-rate multi-modal Visual-Inertial Odometry (VIO) estimation framework, designed to thrive in the challenging realm of extreme underwater environments. The DU-VIO framework incorporates a GAN-based pre-processing module and a hybrid CNN-LSTM module for precise pose estimation, using visibility-enhanced underwater images and raw IMU data. Accurate pose estimation is paramount for various underwater robotics and exploration applications. However, underwater visibility is often compromised by suspended particles and attenuation effects, rendering visual-inertial pose estimation a formidable challenge. DU-VIO aims to overcome these limitations by effectively removing visual disturbances from raw image data, enhancing the quality of image features used for pose estimation. We demonstrate the effectiveness of DU-VIO by calculating RMSE scores for translation and rotation vectors in comparison to their reference values. These scores are then compared to those of a base model using a modified AQUALOC Dataset. This study's significance lies in its potential to revolutionize underwater robotics and exploration. DU-VIO offers a robust solution to the persistent challenge of underwater visibility, significantly improving the accuracy of pose estimation. This research contributes valuable insights and tools for advancing underwater technology, with far-reaching implications for scientific research, environmental monitoring, and industrial applications.
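The RMSE evaluation of translation and rotation vectors against reference values can be sketched as below. The abstract does not give the exact definition used for DU-VIO, so this assumes the common form: the square root of the mean squared Euclidean error over all pose samples. The function name and the toy values are hypothetical.

```python
import math


def rmse(predicted, reference):
    """Root-mean-square Euclidean error between two lists of vectors."""
    if not predicted or len(predicted) != len(reference):
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for pred, ref in zip(predicted, reference):
        # Squared Euclidean distance between one predicted and one reference vector.
        total += sum((p - r) ** 2 for p, r in zip(pred, ref))
    return math.sqrt(total / len(predicted))


if __name__ == "__main__":
    # Toy translation vectors (x, y, z) in metres; values are illustrative only.
    estimated = [(0.0, 0.0, 0.0), (1.0, 0.1, 0.0)]
    ground_truth = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    print(rmse(estimated, ground_truth))
```

The same function applies to rotation vectors, provided both the estimate and the reference use the same parameterisation and units.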

Hybrid-Neuromorphic Approach for Underwater Robotics Applications: A Conceptual Framework

Nov 21, 2024

Abstract:This paper introduces the concept of employing neuromorphic methodologies for task-oriented underwater robotics applications. In contrast to the increasing computational demands of conventional deep learning algorithms, neuromorphic technology, leveraging spiking neural network architectures, promises sophisticated artificial intelligence with significantly reduced computational requirements and power consumption, emulating human brain operational principles. Despite documented neuromorphic technology applications in various robotic domains, its utilization in marine robotics remains largely unexplored. Thus, this article proposes a unified framework for integrating neuromorphic technologies for perception, pose estimation, and haptic-guided conditional control of underwater vehicles, customized to specific user-defined objectives. This conceptual framework stands to revolutionize underwater robotics, enhancing efficiency and autonomy while reducing energy consumption. By enabling greater adaptability and robustness, this advancement could facilitate applications such as underwater exploration, environmental monitoring, and infrastructure maintenance, thereby contributing to significant progress in marine science and technology.

BVE + EKF: A viewpoint estimator for the estimation of the object's position in the 3D task space using Extended Kalman Filters

Jun 05, 2024

Abstract:RGB-D sensors face multiple challenges operating under open-field environments because of their sensitivity to external perturbations such as radiation or rain. Multiple works are approaching the challenge of perceiving the 3D position of objects using monocular cameras. However, most of these works focus mainly on deep learning-based solutions, which are complex, data-driven, and difficult to predict. So, we aim to approach the problem of predicting the 3D objects' position using a Gaussian viewpoint estimator named best viewpoint estimator (BVE) powered by an extended Kalman filter (EKF). The algorithm proved efficient on the tasks and reached a maximum average Euclidean error of about 32 mm. The experiments were deployed and evaluated in MATLAB using artificial Gaussian noise. Future work aims to implement the system in a robotic system.

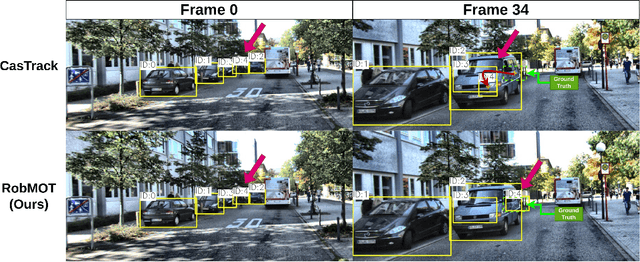

RobMOT: Robust 3D Multi-Object Tracking by Observational Noise and State Estimation Drift Mitigation on LiDAR PointCloud

May 19, 2024

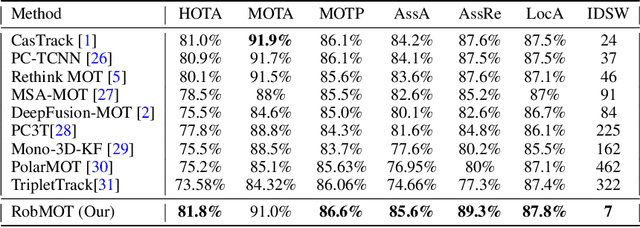

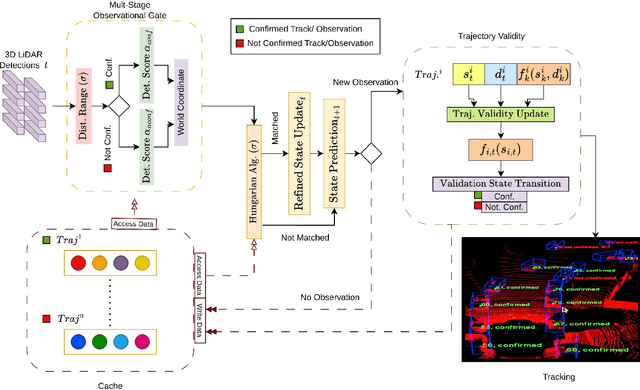

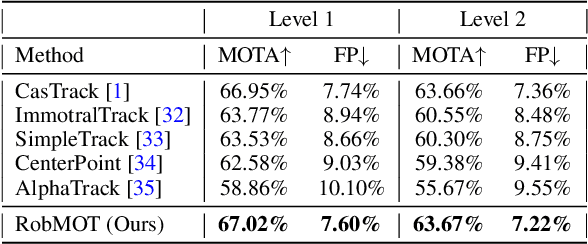

Abstract:This work addresses the inherited limitations in the current state-of-the-art 3D multi-object tracking (MOT) methods that follow the tracking-by-detection paradigm, notably trajectory estimation drift for long-occluded objects in LiDAR point cloud streams acquired by autonomous cars. In addition, the absence of adequate track legitimacy verification results in ghost track accumulation. To tackle these issues, we introduce a two-fold innovation. Firstly, we propose a refinement of the Kalman filter that enhances trajectory drift noise mitigation, resulting in more robust state estimation for occluded objects. Secondly, we propose a novel online track validity mechanism to distinguish between legitimate and ghost tracks, combined with a multi-stage observational gating process for incoming observations. This mechanism reduces ghost tracks by up to 80% and improves HOTA by 7%. Accordingly, we propose an online 3D MOT framework, RobMOT, that demonstrates superior performance over the top-performing state-of-the-art methods, including deep learning approaches, across various detectors with up to 3.28% margin in MOTA and 2.36% in HOTA. RobMOT excels under challenging conditions, such as prolonged occlusions and the tracking of distant objects, with up to 59% enhancement in processing latency.
