Sajid Javed

Rethinking Memory Design in SAM-Based Visual Object Tracking

Dec 27, 2025

Abstract: Memory has become the central mechanism enabling robust visual object tracking in modern segmentation-based frameworks. Recent methods built upon Segment Anything Model 2 (SAM2) have demonstrated strong performance by refining how past observations are stored and reused. However, existing approaches address memory limitations in a method-specific manner, leaving the broader design principles of memory in SAM-based tracking poorly understood. Moreover, it remains unclear how these memory mechanisms transfer to stronger, next-generation foundation models such as Segment Anything Model 3 (SAM3). In this work, we present a systematic memory-centric study of SAM-based visual object tracking. We first analyze representative SAM2-based trackers and show that most methods primarily differ in how short-term memory frames are selected, while sharing a common object-centric representation. Building on this insight, we faithfully reimplement these memory mechanisms within the SAM3 framework and conduct large-scale evaluations across ten diverse benchmarks, enabling a controlled analysis of memory design independent of backbone strength. Guided by our empirical findings, we propose a unified hybrid memory framework that explicitly decomposes memory into short-term appearance memory and long-term distractor-resolving memory. This decomposition enables the integration of existing memory policies in a modular and principled manner. Extensive experiments demonstrate that the proposed framework consistently improves robustness under long-term occlusion, complex motion, and distractor-heavy scenarios on both SAM2 and SAM3 backbones. Code is available at: https://github.com/HamadYA/SAM3_Tracking_Zoo. This is a preprint. Some results are being finalized and may be updated in a future revision.

Cytoplasmic Strings Analysis in Human Embryo Time-Lapse Videos using Deep Learning Framework

Dec 10, 2025

Abstract: Infertility is a major global health issue, and while in-vitro fertilization (IVF) has improved treatment outcomes, embryo selection remains a critical bottleneck. Time-lapse imaging (TLI) enables continuous, non-invasive monitoring of embryo development, yet most automated assessment methods rely solely on conventional morphokinetic features and overlook emerging biomarkers. Cytoplasmic Strings (CS), thin filamentous structures connecting the inner cell mass and trophectoderm in expanded blastocysts, have been associated with faster blastocyst formation, higher blastocyst grades, and improved viability. However, CS assessment currently depends on manual visual inspection, which is labor-intensive, subjective, and severely affected by detection and subtle visual appearance. In this work, we present, to the best of our knowledge, the first computational framework for CS analysis in human IVF embryos. We first design a human-in-the-loop annotation pipeline to curate a biologically validated CS dataset from TLI videos, comprising 13,568 frames with highly sparse CS-positive instances. Building on this dataset, we propose a two-stage deep learning framework that (i) classifies CS presence at the frame level and (ii) localizes CS regions in positive cases. To address severe imbalance and feature uncertainty, we introduce the Novel Uncertainty-aware Contractive Embedding (NUCE) loss, which couples confidence-aware reweighting with an embedding contraction term to form compact, well-separated class clusters. NUCE consistently improves F1-score across five transformer backbones, while RF-DETR-based localization achieves state-of-the-art (SOTA) detection performance for thin, low-contrast CS structures. The source code will be made publicly available at: https://github.com/HamadYA/CS_Detection.

Spatio-Temporal State Space Model For Efficient Event-Based Optical Flow

Jun 09, 2025

Abstract: Event cameras unlock new frontiers that were previously unthinkable with standard frame-based cameras. One notable example is low-latency motion estimation (optical flow), which is critical for many real-time applications. In such applications, the computational efficiency of algorithms is paramount. Although recent deep learning paradigms such as CNN, RNN, or ViT have shown remarkable performance, they often lack the desired computational efficiency. Conversely, asynchronous event-based methods including SNNs and GNNs are computationally efficient; however, these approaches fail to capture sufficient spatio-temporal information, a powerful feature required to achieve better performance for optical flow estimation. In this work, we introduce the Spatio-Temporal State Space Model (STSSM) module along with a novel network architecture to develop an extremely efficient solution with competitive performance. Our STSSM module leverages state-space models to effectively capture spatio-temporal correlations in event data, offering higher performance with lower complexity compared to ViT- and CNN-based architectures in similar settings. Our model achieves 4.5x faster inference and 8x lower computations compared to TMA and 2x lower computations compared to EV-FlowNet with competitive performance on the DSEC benchmark. Our code will be available at https://github.com/AhmedHumais/E-STMFlow

CLDTracker: A Comprehensive Language Description for Visual Tracking

May 29, 2025

Abstract: Visual object tracking (VOT) remains a fundamental yet challenging task in computer vision due to dynamic appearance changes, occlusions, and background clutter. Traditional trackers, relying primarily on visual cues, often struggle in such complex scenarios. Recent advancements in vision-language models (VLMs) have shown promise in semantic understanding for tasks like open-vocabulary detection and image captioning, suggesting their potential for VOT. However, the direct application of VLMs to VOT is hindered by critical limitations: the absence of a rich and comprehensive textual representation that semantically captures the target object's nuances, limiting the effective use of language information; inefficient fusion mechanisms that fail to optimally integrate visual and textual features, preventing a holistic understanding of the target; and a lack of temporal modeling of the target's evolving appearance in the language domain, leading to a disconnect between the initial description and the object's subsequent visual changes. To bridge these gaps and unlock the full potential of VLMs for VOT, we propose CLDTracker, a novel Comprehensive Language Description framework for robust visual Tracking. Our tracker introduces a dual-branch architecture consisting of a textual and a visual branch. In the textual branch, we construct a rich bag of textual descriptions derived by harnessing powerful VLMs such as CLIP and GPT-4V, enriched with semantic and contextual cues to address the lack of rich textual representation. Experiments on six standard VOT benchmarks demonstrate that CLDTracker achieves SOTA performance, validating the effectiveness of leveraging robust and temporally-adaptive vision-language representations for tracking. Code and models are publicly available at: https://github.com/HamadYA/CLDTracker

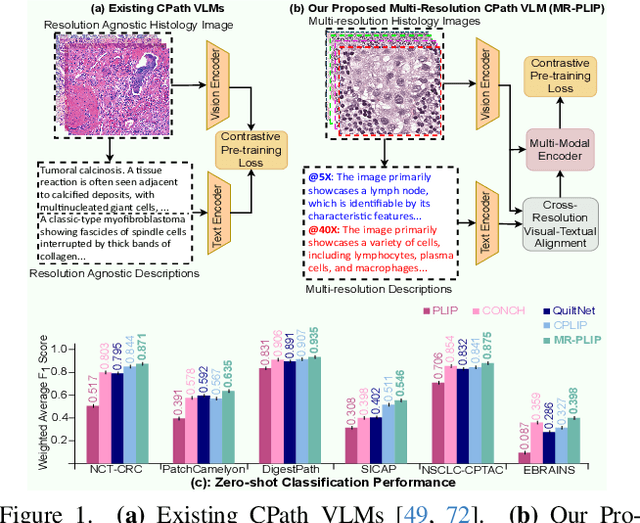

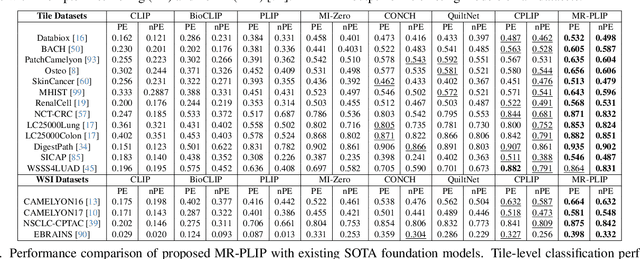

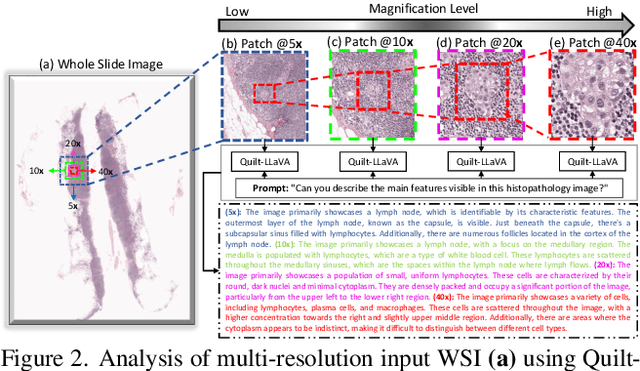

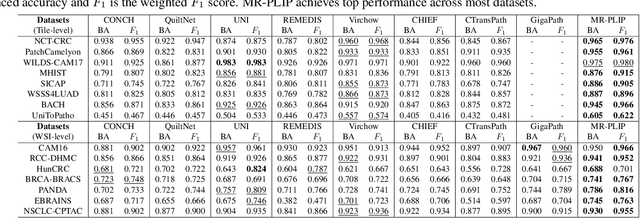

Multi-Resolution Pathology-Language Pre-training Model with Text-Guided Visual Representation

Apr 26, 2025

Abstract: In Computational Pathology (CPath), the introduction of Vision-Language Models (VLMs) has opened new avenues for research, focusing primarily on aligning image-text pairs at a single magnification level. However, this approach might not be sufficient for tasks like cancer subtype classification, tissue phenotyping, and survival analysis due to the limited level of detail that a single-resolution image can provide. Addressing this, we propose a novel multi-resolution paradigm leveraging Whole Slide Images (WSIs) to extract histology patches at multiple resolutions and generate corresponding textual descriptions through an advanced CPath VLM. We introduce visual-textual alignment at multiple resolutions as well as cross-resolution alignment to establish more effective text-guided visual representations. Cross-resolution alignment using a multimodal encoder enhances the model's ability to capture context from multiple resolutions in histology images. Supported by novel loss functions, our model captures a broader range of information, enriches feature representation, improves discriminative ability, and enhances generalization across different resolutions. Pre-trained on a comprehensive TCGA dataset with 34 million image-language pairs at various resolutions, our fine-tuned model outperforms state-of-the-art (SOTA) counterparts across multiple datasets and tasks, demonstrating its effectiveness in CPath. The code is available on GitHub at: https://github.com/BasitAlawode/MR-PLIP

snnTrans-DHZ: A Lightweight Spiking Neural Network Architecture for Underwater Image Dehazing

Apr 13, 2025

Abstract: Underwater image dehazing is critical for vision-based marine operations because light scattering and absorption can severely reduce visibility. This paper introduces snnTrans-DHZ, a lightweight Spiking Neural Network (SNN) specifically designed for underwater dehazing. By leveraging the temporal dynamics of SNNs, snnTrans-DHZ efficiently processes time-dependent raw image sequences while maintaining low power consumption. Static underwater images are first converted into time-dependent sequences by repeatedly inputting the same image over user-defined timesteps. These RGB sequences are then transformed into LAB color space representations and processed concurrently. The architecture features three key modules: (i) a K estimator that extracts features from multiple color space representations; (ii) a Background Light Estimator that jointly infers the background light component from the RGB-LAB images; and (iii) a soft image reconstruction module that produces haze-free, visibility-enhanced outputs. The snnTrans-DHZ model is directly trained using a surrogate gradient-based backpropagation through time (BPTT) strategy alongside a novel combined loss function. Evaluated on the UIEB benchmark, snnTrans-DHZ achieves a PSNR of 21.68 dB and an SSIM of 0.8795, and on the EUVP dataset, it yields a PSNR of 23.46 dB and an SSIM of 0.8439. With only 0.5670 million network parameters, and requiring just 7.42 GSOPs and 0.0151 J of energy, the algorithm significantly outperforms existing state-of-the-art methods in terms of efficiency. These features make snnTrans-DHZ highly suitable for deployment in underwater robotics, marine exploration, and environmental monitoring.
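The static-to-sequence conversion and the PSNR metric mentioned in the snnTrans-DHZ abstract can be illustrated with a minimal sketch. The function names, the single-channel nested-list image format, and the peak value of 1.0 are assumptions made for illustration; this is not the authors' implementation.

```python
import math


def repeat_over_timesteps(image, timesteps):
    """Turn one static 2-D image into a sequence by presenting it `timesteps` times.

    `image` is assumed to be a list of rows, each row a list of floats.
    """
    if timesteps < 1:
        raise ValueError("timesteps must be >= 1")
    # Copy the rows for every timestep so frames do not share storage.
    return [[row[:] for row in image] for _ in range(timesteps)]


def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized 2-D images."""
    ref = [v for row in reference for v in row]
    est = [v for row in estimate for v in row]
    if len(ref) != len(est) or not ref:
        raise ValueError("images must be non-empty and have the same size")
    mse = sum((r - e) ** 2 for r, e in zip(ref, est)) / len(ref)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)
```

A call such as `repeat_over_timesteps(img, 5)` yields five identical frames, which a spiking network can then consume one timestep at a time.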

Underwater Image Enhancement by Convolutional Spiking Neural Networks

Mar 26, 2025

Abstract: Underwater image enhancement (UIE) is fundamental for marine applications, including autonomous vision-based navigation. Deep learning methods using convolutional neural networks (CNN) and vision transformers have advanced UIE performance. Recently, spiking neural networks (SNN) have gained attention for their lightweight design, energy efficiency, and scalability. This paper introduces UIE-SNN, the first SNN-based UIE algorithm to improve visibility of underwater images. UIE-SNN is a 19-layered convolutional spiking encoder-decoder framework with skip connections, directly trained using a surrogate gradient-based backpropagation through time (BPTT) strategy. We explore and validate the influence of training datasets on energy reduction, a unique advantage of the UIE-SNN architecture, in contrast to conventional learning-based architectures, where energy consumption is model-dependent. UIE-SNN optimizes the loss function in latent space representation to reconstruct clear underwater images. Our algorithm performs on par with its non-spiking counterpart methods in terms of PSNR and structural similarity index (SSIM) at reduced timesteps (T = 5) and energy consumption of 85%. The algorithm is trained on two publicly available benchmark datasets, UIEB and EUVP, and tested on unseen images from UIEB, EUVP, LSUI, U45, and our custom UIE dataset. The UIE-SNN algorithm achieves PSNR of 17.7801 dB and SSIM of 0.7454 on UIEB, and PSNR of 23.1725 dB and SSIM of 0.7890 on EUVP. UIE-SNN achieves this algorithmic performance with fewer operations (147.49 GSOPs) and less energy (0.1327 J) compared to its non-spiking counterpart (218.88 GFLOPs and 1.0068 J). Compared with existing SOTA UIE methods, UIE-SNN achieves an average of 6.5× improvement in energy efficiency. The source code is available at: https://github.com/vidya-rejul/UIE-SNN.git
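As a quick check on the figures reported in the UIE-SNN abstract, the ratios between UIE-SNN and its non-spiking counterpart can be computed directly. The helper names below are illustrative. Note that GSOPs and GFLOPs count different kinds of operations, so the operation ratio is only indicative, and the 6.5× figure in the abstract refers to a different comparison (an average over existing SOTA methods).

```python
def improvement_factor(baseline, value):
    """How many times smaller `value` is than `baseline`."""
    return baseline / value


def relative_saving(baseline, value):
    """Fraction of `baseline` that is saved when using `value` instead."""
    return 1.0 - value / baseline


# Figures quoted in the abstract.
SNN_ENERGY_J = 0.1327
ANN_ENERGY_J = 1.0068
SNN_GSOPS = 147.49
ANN_GFLOPS = 218.88

energy_factor = improvement_factor(ANN_ENERGY_J, SNN_ENERGY_J)
energy_saving = relative_saving(ANN_ENERGY_J, SNN_ENERGY_J)
ops_saving = relative_saving(ANN_GFLOPS, SNN_GSOPS)

print(f"energy: {energy_factor:.2f}x lower, {energy_saving:.1%} saved")
print(f"operations: {ops_saving:.1%} fewer (SOPs vs FLOPs, indicative only)")
```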

AquaticCLIP: A Vision-Language Foundation Model for Underwater Scene Analysis

Feb 03, 2025

Abstract: The preservation of aquatic biodiversity is critical in mitigating the effects of climate change. Aquatic scene understanding plays a pivotal role in aiding marine scientists in their decision-making processes. In this paper, we introduce AquaticCLIP, a novel contrastive language-image pre-training model tailored for aquatic scene understanding. AquaticCLIP presents a new unsupervised learning framework that aligns images and texts in aquatic environments, enabling tasks such as segmentation, classification, detection, and object counting. By leveraging our large-scale underwater image-text paired dataset without the need for ground-truth annotations, our model enriches existing vision-language models in the aquatic domain. For this purpose, we construct a dataset of 2 million underwater image-text pairs using heterogeneous resources, including YouTube, Netflix, NatGeo, etc. To fine-tune AquaticCLIP, we propose a prompt-guided vision encoder that progressively aggregates patch features via learnable prompts, while a vision-guided mechanism enhances the language encoder by incorporating visual context. The model is optimized through a contrastive pretraining loss to align visual and textual modalities. AquaticCLIP achieves notable performance improvements in zero-shot settings across multiple underwater computer vision tasks, outperforming existing methods in both robustness and interpretability. Our model sets a new benchmark for vision-language applications in underwater environments. The code and dataset for AquaticCLIP are publicly available on GitHub at xxx.
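The AquaticCLIP abstract does not spell out the form of its contrastive pretraining loss. As a rough illustration of how such a loss aligns the two modalities, the sketch below implements a generic CLIP-style symmetric contrastive loss in plain Python. The function names, the temperature of 0.07, and the assumption that row i of each input forms a matched pair are all illustrative and are not taken from the paper.

```python
import math


def _normalize(vec):
    """Scale a non-zero vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def _diagonal_cross_entropy(logits):
    """Mean cross-entropy where the correct column for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(v - m) for v in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def symmetric_contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Average of image-to-text and text-to-image cross-entropy losses."""
    imgs = [_normalize(v) for v in image_embs]
    txts = [_normalize(v) for v in text_embs]
    # Cosine similarity of every image with every text, scaled by temperature.
    logits = [
        [sum(a * b for a, b in zip(img, txt)) / temperature for txt in txts]
        for img in imgs
    ]
    logits_t = [list(col) for col in zip(*logits)]  # text-to-image view
    return 0.5 * (_diagonal_cross_entropy(logits) + _diagonal_cross_entropy(logits_t))
```

The loss is small when each image embedding is closest to its own caption embedding and grows when matched pairs are confused with other items in the batch.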

Anomaly Detection for Industrial Applications, Its Challenges, Solutions, and Future Directions: A Review

Jan 20, 2025

Abstract: Anomaly detection from images captured using camera sensors is one of the mainstream applications at the industrial level. In particular, it maintains quality and optimizes efficiency in production processes across diverse industrial tasks, including advanced manufacturing and aerospace engineering. The traditional anomaly detection workflow is based on manual inspection by human operators, which is a tedious task. Advances in intelligent automated inspection systems have revolutionized the Industrial Anomaly Detection (IAD) process. Recent vision-based approaches can automatically extract, process, and interpret features using computer vision and align with the goals of automation in industrial operations. In light of the shift in inspection methodologies, this survey reviews studies published since 2019, with a specific focus on vision-based anomaly detection. The components of an IAD pipeline that are overlooked in existing surveys are presented, including areas related to data acquisition, preprocessing, learning mechanisms, and evaluation. In addition to the collected publications, several scientific and industry-related challenges and their prospective solutions are highlighted. Popular and relevant industrial datasets are also summarized, providing further insight into inspection applications. Finally, future directions of vision-based IAD are discussed, offering researchers insight into the state-of-the-art of industrial inspection.

Hybrid-Neuromorphic Approach for Underwater Robotics Applications: A Conceptual Framework

Nov 21, 2024

Abstract: This paper introduces the concept of employing neuromorphic methodologies for task-oriented underwater robotics applications. In contrast to the increasing computational demands of conventional deep learning algorithms, neuromorphic technology, leveraging spiking neural network architectures, promises sophisticated artificial intelligence with significantly reduced computational requirements and power consumption, emulating human brain operational principles. Despite documented neuromorphic technology applications in various robotic domains, its utilization in marine robotics remains largely unexplored. Thus, this article proposes a unified framework for integrating neuromorphic technologies for perception, pose estimation, and haptic-guided conditional control of underwater vehicles, customized to specific user-defined objectives. This conceptual framework stands to revolutionize underwater robotics, enhancing efficiency and autonomy while reducing energy consumption. By enabling greater adaptability and robustness, this advancement could facilitate applications such as underwater exploration, environmental monitoring, and infrastructure maintenance, thereby contributing to significant progress in marine science and technology.
