Asim Khan

Multi-Resolution Pathology-Language Pre-training Model with Text-Guided Visual Representation

Apr 26, 2025

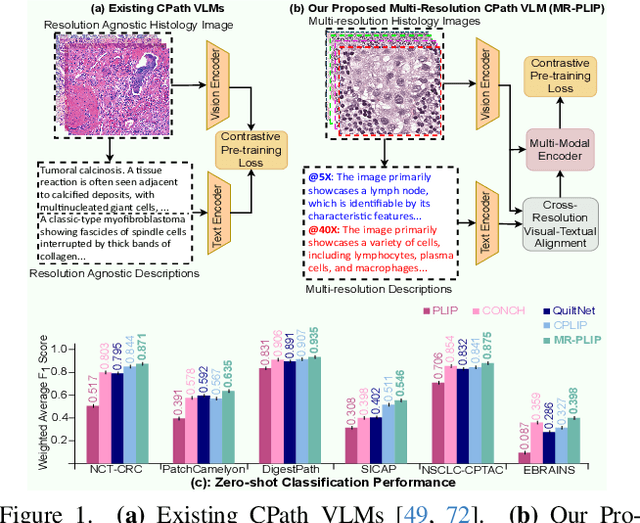

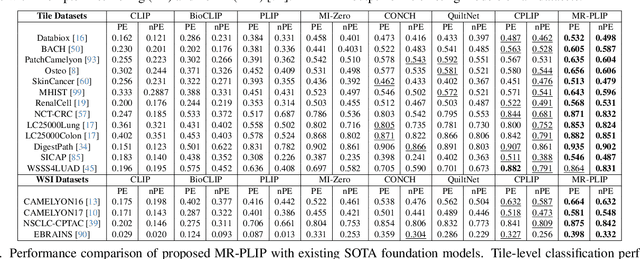

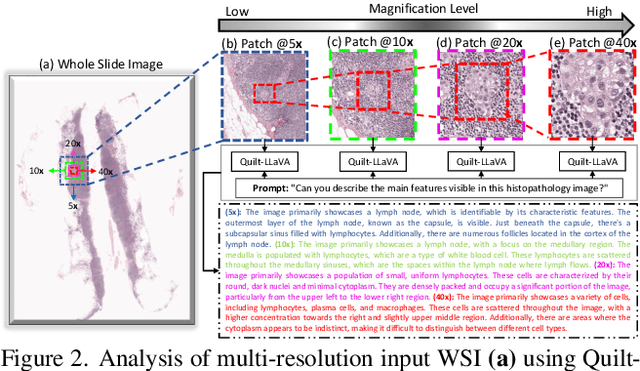

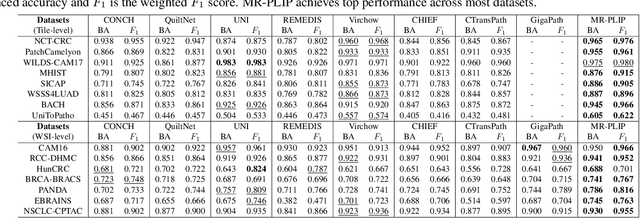

Abstract: In Computational Pathology (CPath), the introduction of Vision-Language Models (VLMs) has opened new avenues for research, focusing primarily on aligning image-text pairs at a single magnification level. However, this approach might not be sufficient for tasks like cancer subtype classification, tissue phenotyping, and survival analysis due to the limited level of detail that a single-resolution image can provide. Addressing this, we propose a novel multi-resolution paradigm leveraging Whole Slide Images (WSIs) to extract histology patches at multiple resolutions and generate corresponding textual descriptions through an advanced CPath VLM. We introduce visual-textual alignment at multiple resolutions as well as cross-resolution alignment to establish more effective text-guided visual representations. Cross-resolution alignment using a multimodal encoder enhances the model's ability to capture context from multiple resolutions in histology images. Supported by novel loss functions, our model aims to capture a broader range of information, enrich feature representation, improve discriminative ability, and enhance generalization across different resolutions. Pre-trained on a comprehensive TCGA dataset with 34 million image-language pairs at various resolutions, our fine-tuned model outperforms state-of-the-art (SOTA) counterparts across multiple datasets and tasks, demonstrating its effectiveness in CPath. The code is available on GitHub at: https://github.com/BasitAlawode/MR-PLIP
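The abstract does not spell out its loss functions, so the following is only a minimal sketch of the general idea of aligning image and text embeddings at several resolutions plus a cross-resolution term. It assumes a generic CLIP-style symmetric contrastive (InfoNCE) loss, which is a stand-in rather than the MR-PLIP objective. The names `info_nce`, `symmetric_alignment`, `noisy_copy`, the temperature of 0.07, and the toy embeddings are all hypothetical.

```python
import math
import random


def normalize(v):
    """Scale a vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def info_nce(queries, keys, temperature=0.07):
    """Mean cross-entropy of matching queries[i] to keys[i] among all keys."""
    queries = [normalize(q) for q in queries]
    keys = [normalize(k) for k in keys]
    total = 0.0
    for i, q in enumerate(queries):
        logits = [sum(a * b for a, b in zip(q, k)) / temperature for k in keys]
        top = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_norm = top + math.log(sum(math.exp(z - top) for z in logits))
        total += log_norm - logits[i]
    return total / len(queries)


def symmetric_alignment(a, b, temperature=0.07):
    """Average of the a-to-b and b-to-a contrastive losses."""
    return 0.5 * (info_nce(a, b, temperature) + info_nce(b, a, temperature))


def noisy_copy(rows, rng, scale=0.1):
    """Toy stand-in for an encoder: the paired embedding plus small noise."""
    return [[x + scale * rng.gauss(0, 1) for x in row] for row in rows]


rng = random.Random(0)
text = [[rng.gauss(0, 1) for _ in range(16)] for _ in range(6)]  # text embeddings
img_low = noisy_copy(text, rng)   # hypothetical low-resolution patch embeddings
img_high = noisy_copy(text, rng)  # hypothetical high-resolution patch embeddings

# Assumed combination: image-text alignment at each resolution
# plus one cross-resolution image-image term.
total_loss = (
    symmetric_alignment(img_low, text)
    + symmetric_alignment(img_high, text)
    + symmetric_alignment(img_low, img_high)
)

# Pairing each image with the wrong description should give a larger loss.
mismatched = symmetric_alignment(img_low, text[::-1])
print(total_loss, mismatched)
```

In this toy setup, correctly paired embeddings yield a much smaller loss than mismatched ones, which is the signal such an alignment objective would train on.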

Accurate and Efficient Urban Street Tree Inventory with Deep Learning on Mobile Phone Imagery

Jan 02, 2024

Abstract: Deforestation, a major contributor to climate change, poses detrimental consequences such as agricultural sector disruption, global warming, flash floods, and landslides. Conventional approaches to urban street tree inventory suffer from inaccuracies and necessitate specialised equipment. To overcome these challenges, this paper proposes an innovative method that leverages deep learning techniques and mobile phone imaging for urban street tree inventory. Our approach utilises a pair of images captured by smartphone cameras to accurately segment tree trunks and compute the diameter at breast height (DBH). Compared to traditional methods, our approach exhibits several advantages, including superior accuracy, reduced dependency on specialised equipment, and applicability in hard-to-reach areas. We evaluated our method on a comprehensive dataset of 400 trees and achieved DBH estimation with an error rate of less than 2.5%. Our method holds significant potential for substantially improving forest management practices. By enhancing the accuracy and efficiency of tree inventory, our model empowers urban management to mitigate the adverse effects of deforestation and climate change.

Early and Accurate Detection of Tomato Leaf Diseases Using TomFormer

Dec 26, 2023

Abstract: Tomato leaf diseases pose a significant challenge for tomato farmers, resulting in substantial reductions in crop productivity. The timely and precise identification of tomato leaf diseases is crucial for successfully implementing disease management strategies. This paper introduces a transformer-based model called TomFormer for the purpose of tomato leaf disease detection. The paper's primary contributions include the following: Firstly, we present a novel approach for detecting tomato leaf diseases by employing a fusion model that combines a visual transformer and a convolutional neural network. Secondly, we aim to apply our proposed methodology to the Hello Stretch robot to achieve real-time diagnosis of tomato leaf diseases. Thirdly, we assessed our method by comparing it to models like YOLOS, DETR, ViT, and Swin, demonstrating its ability to achieve state-of-the-art outcomes. For the purpose of the experiment, we used three datasets of tomato leaf diseases, namely KUTomaDATA, PlantDoc, and PlantVillage, where KUTomaDATA is being collected from a greenhouse in Abu Dhabi, UAE. Finally, we present a comprehensive analysis of the performance of our model and thoroughly discuss the limitations inherent in our approach. TomFormer performed well on the KUTomaDATA, PlantDoc, and PlantVillage datasets, with mean average precision (mAP) scores of 87%, 81%, and 83%, respectively. The comparative results in terms of mAP demonstrate that our method exhibits robustness, accuracy, efficiency, and scalability. Furthermore, it can be readily adapted to new datasets. We are confident that our work holds the potential to significantly influence the tomato industry by effectively mitigating crop losses and enhancing crop yields.

Convolutional Transformer for Autonomous Recognition and Grading of Tomatoes Under Various Lighting, Occlusion, and Ripeness Conditions

Jul 04, 2023

Abstract: Harvesting fully ripe tomatoes with mobile robots presents significant challenges in real-world scenarios. These challenges arise from factors such as occlusion caused by leaves and branches, as well as the color similarity between tomatoes and the surrounding foliage during the fruit development stage. The natural environment further compounds these issues with varying light conditions, viewing angles, occlusion factors, and different maturity levels. To overcome these obstacles, this research introduces a novel framework that leverages a convolutional transformer architecture to autonomously recognize and grade tomatoes, irrespective of their occlusion level, lighting conditions, and ripeness. The proposed model is trained and tested using carefully annotated images curated specifically for this purpose. The dataset is prepared under various lighting conditions and viewing perspectives and with different mobile camera sensors, distinguishing it from existing datasets such as Laboro Tomato and Rob2Pheno Annotated Tomato. The effectiveness of the proposed framework in handling cluttered and occluded tomato instances was evaluated using two additional public datasets, Laboro Tomato and Rob2Pheno Annotated Tomato, as benchmarks. The evaluation results across these three datasets demonstrate the exceptional performance of our proposed framework, surpassing the state-of-the-art by 58.14%, 65.42%, and 66.39% in terms of mean average precision scores for KUTomaData, Laboro Tomato, and Rob2Pheno Annotated Tomato, respectively. The results underscore the superiority of the proposed model in accurately detecting and delineating tomatoes compared to baseline methods and previous approaches. Specifically, the model achieves an F1-score of 80.14%, a Dice coefficient of 73.26%, and a mean IoU of 66.41% on the KUTomaData image dataset.
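For readers unfamiliar with the three metrics quoted above, the sketch below shows their standard definitions. The abstract does not say how the paper computed them (for example, per image, per instance, or averaged over classes), so this is an illustration under assumptions rather than the authors' evaluation code: Dice and IoU are computed here on flattened binary masks, F1 on detection counts, and the masks and counts are made-up toy values.

```python
def confusion_counts(pred, truth):
    """Count true positives, false positives, false negatives for binary labels."""
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)
    return tp, fp, fn


def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def dice(tp, fp, fn):
    """Dice coefficient: 2|A and B| / (|A| + |B|)."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 1.0  # two empty masks agree perfectly


def iou(tp, fp, fn):
    """Intersection over union: |A and B| / |A or B|."""
    denom = tp + fp + fn
    return tp / denom if denom else 1.0


# Toy flattened masks (1 = tomato pixel, 0 = background); values are invented.
pred_mask = [1, 1, 1, 0, 0, 0, 1, 0]
true_mask = [1, 1, 0, 0, 0, 1, 1, 0]

tp, fp, fn = confusion_counts(pred_mask, true_mask)
print(dice(tp, fp, fn), iou(tp, fp, fn))

# Toy detection counts: 8 correct detections, 2 spurious, 2 missed.
print(f1_score(8, 2, 2))
```

When computed on the same binary counts, F1 and Dice are the same quantity, so differing F1 and Dice figures indicate that they were measured at different levels or averaged differently.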

Real-time Plant Health Assessment Via Implementing Cloud-based Scalable Transfer Learning On AWS DeepLens

Sep 10, 2020

Abstract: In the agriculture sector, control of plant leaf diseases is crucial as it influences the quality and production of plant species, with an impact on the economy of any country. Therefore, automated identification and classification of plant leaf disease at an early stage is essential to reduce economic loss and to conserve the specific species. Previously, various Machine Learning models have been proposed to detect and classify plant leaf disease; however, they lack usability due to hardware incompatibility, limited scalability and inefficiency in practical usage. Our proposed DeepLens Classification and Detection Model (DCDM) approach deals with such limitations by introducing automated detection and classification of leaf diseases in fruits (apple, grapes, peach and strawberry) and vegetables (potato and tomato) via scalable transfer learning on AWS SageMaker and importing it on AWS DeepLens for real-time practical usability. Cloud integration provides scalability and ubiquitous access to our approach. Our experiments on an extensive image dataset of healthy and unhealthy leaves of fruits and vegetables showed an accuracy of 98.78% with real-time diagnosis of plant leaf diseases. We used forty thousand images to train the deep learning model and then evaluated it on ten thousand images. The process of testing an image for disease diagnosis and classification using AWS DeepLens took 0.349s on average, providing disease information to the user in less than a second.

Computer Vision For COVID-19 Control: A Survey

May 05, 2020

Abstract: The COVID-19 pandemic has triggered an urgent need to contribute to the fight against an immense threat to the human population. Computer Vision, as a subfield of Artificial Intelligence, has enjoyed recent success in solving various complex problems in health care and has the potential to contribute to the fight to control COVID-19. In response to this call, computer vision researchers are putting their knowledge base to work to devise effective ways to counter the COVID-19 challenge and serve the global community. New contributions are being shared with every passing day. This motivated us to review the recent work, collect information about available research resources, and indicate future research directions. We want to make it available to computer vision researchers to save precious time. This survey paper is intended to provide a preliminary review of the available literature on the computer vision efforts against the COVID-19 pandemic.
