Irfan Hussain

Khalifa University of Science and Technology, Abu Dhabi, United Arab Emirates

LLM-VLM Fusion Framework for Autonomous Maritime Port Inspection using a Heterogeneous UAV-USV System

Jan 19, 2026

Abstract: Maritime port inspection plays a critical role in ensuring safety, regulatory compliance, and operational efficiency in complex maritime environments. However, existing inspection methods often rely on manual operations and conventional computer vision techniques that lack scalability and contextual understanding. This study introduces a novel integrated engineering framework that utilizes the synergy between Large Language Models (LLMs) and Vision Language Models (VLMs) to enable autonomous maritime port inspection using cooperative aerial and surface robotic platforms. The proposed framework replaces traditional state-machine mission planners with LLM-driven symbolic planning and improves perception pipelines through VLM-based semantic inspection, enabling context-aware and adaptive monitoring. The LLM module translates natural language mission instructions into executable symbolic plans with dependency graphs that encode operational constraints and ensure safe UAV-USV coordination. Meanwhile, the VLM module performs real-time semantic inspection and compliance assessment, generating structured reports with contextual reasoning. The framework was validated using the extended MBZIRC Maritime Simulator with realistic port infrastructure and further assessed through real-world robotic inspection trials. The lightweight on-board design ensures suitability for resource-constrained maritime platforms, advancing the development of intelligent, autonomous inspection systems. Project resources (code and videos) can be found here: https://github.com/Muhayyuddin/llm-vlm-fusion-port-inspection
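The abstract describes symbolic plans whose dependency graphs encode operational constraints between UAV and USV actions. As a rough illustration only (not the authors' implementation), the sketch below orders a small plan with a topological sort from Python's standard library. The action names and the `PLAN` structure are hypothetical and chosen purely for the example.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical plan: each action maps to the set of actions that must finish first.
PLAN = {
    "usv_navigate_to_dock": set(),
    "uav_takeoff_from_usv": {"usv_navigate_to_dock"},
    "uav_inspect_crane": {"uav_takeoff_from_usv"},
    "usv_inspect_quay_wall": {"usv_navigate_to_dock"},
    "uav_land_on_usv": {"uav_inspect_crane", "usv_inspect_quay_wall"},
}


def execution_order(plan):
    """Return one valid execution order; raise ValueError on cyclic dependencies."""
    try:
        return list(TopologicalSorter(plan).static_order())
    except CycleError as exc:
        # The detected cycle is the second element of the exception args.
        raise ValueError(f"plan has cyclic dependencies: {exc.args[1]}") from exc


def respects_dependencies(order, plan):
    """Check that every action appears after all of its prerequisites."""
    position = {action: index for index, action in enumerate(order)}
    return all(
        position[dep] < position[action]
        for action, deps in plan.items()
        for dep in deps
    )


if __name__ == "__main__":
    for step, action in enumerate(execution_order(PLAN), start=1):
        print(step, action)
```

Rejecting cyclic plans before execution is one simple way a planner could refuse an LLM output that contains contradictory ordering constraints.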

Lang2Manip: A Tool for LLM-Based Symbolic-to-Geometric Planning for Manipulation

Dec 18, 2025

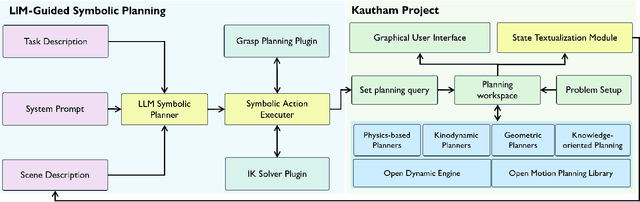

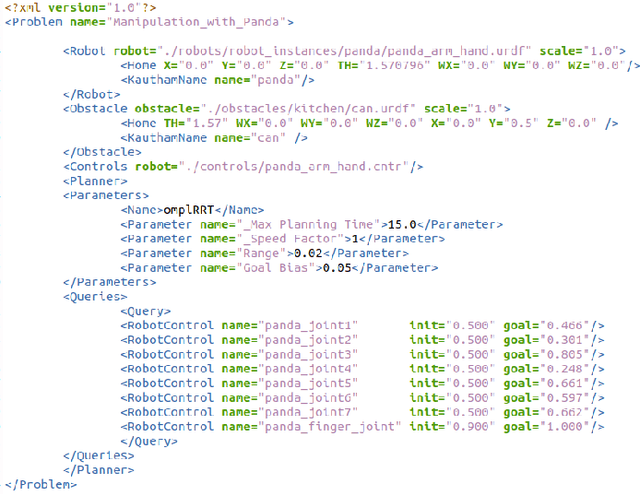

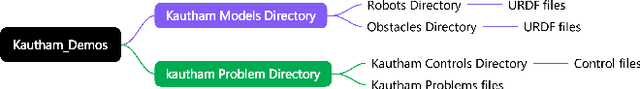

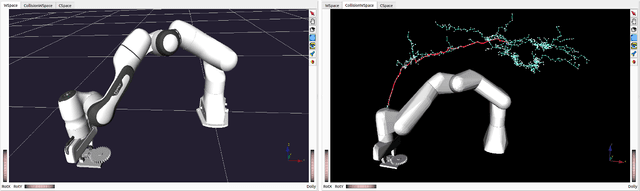

Abstract: Simulation is essential for developing robotic manipulation systems, particularly for task and motion planning (TAMP), where symbolic reasoning interfaces with geometric, kinematic, and physics-based execution. Recent advances in Large Language Models (LLMs) enable robots to generate symbolic plans from natural language, yet executing these plans in simulation often requires robot-specific engineering or planner-dependent integration. In this work, we present a unified pipeline that connects an LLM-based symbolic planner with the Kautham motion planning framework to achieve generalizable, robot-agnostic symbolic-to-geometric manipulation. Kautham provides ROS-compatible support for a wide range of industrial manipulators and offers geometric, kinodynamic, physics-driven, and constraint-based motion planning under a single interface. Our system converts language instructions into symbolic actions, then computes and executes collision-free trajectories using any of Kautham's planners without additional coding. The result is a flexible and scalable tool for language-driven TAMP that generalizes across robots, planning modalities, and manipulation tasks.

Bio-Inspired Robotic Houbara: From Development to Field Deployment for Behavioral Studies

Oct 06, 2025

Abstract: Biomimetic intelligence and robotics are transforming field ecology by enabling lifelike robotic surrogates that interact naturally with animals under real-world conditions. Studying avian behavior in the wild remains challenging due to the need for highly realistic morphology, durable outdoor operation, and intelligent perception that can adapt to uncontrolled environments. We present a next-generation bio-inspired robotic platform that replicates the morphology and visual appearance of the female Houbara bustard to support controlled ethological studies and conservation-oriented field research. The system introduces a fully digitally replicable fabrication workflow that combines high-resolution structured-light 3D scanning, parametric CAD modelling, articulated 3D printing, and photorealistic UV-textured vinyl finishing to achieve anatomically accurate and durable robotic surrogates. A six-wheeled rocker-bogie chassis ensures stable mobility on sand and irregular terrain, while an embedded NVIDIA Jetson module enables real-time RGB and thermal perception, lightweight YOLO-based detection, and an autonomous visual servoing loop that aligns the robot's head toward detected targets without human intervention. A lightweight thermal-visible fusion module enhances perception in low-light conditions. Field trials in desert aviaries demonstrated reliable real-time operation at 15 to 22 FPS with latency under 100 ms and confirmed that the platform elicits natural recognition and interactive responses from live Houbara bustards under harsh outdoor conditions. This integrated framework advances biomimetic field robotics by uniting reproducible digital fabrication, embodied visual intelligence, and ecological validation, providing a transferable blueprint for animal-robot interaction research, conservation robotics, and public engagement.
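The visual servoing loop mentioned above turns a detected target's image position into a head motion. The sketch below shows one common way to do this, a clamped proportional controller on the horizontal pixel offset. It is an assumption for illustration, not the platform's actual controller: the function name, field of view, gain, and step limit are all hypothetical.

```python
def pan_command(bbox, image_width, hfov_deg=60.0, gain=0.5, max_step_deg=10.0):
    """Return a pan step in degrees that moves the head toward a detection.

    bbox is (x_min, y_min, x_max, y_max) in pixels. All default parameter
    values are hypothetical and would need tuning on real hardware.
    """
    x_min, _, x_max, _ = bbox
    center_x = 0.5 * (x_min + x_max)
    half_width = image_width / 2.0
    # Normalized horizontal offset in [-1, 1]; positive means target is to the right.
    offset = (center_x - half_width) / half_width
    # Linear pixel-to-angle approximation, reasonable for moderate fields of view.
    error_deg = offset * (hfov_deg / 2.0)
    step = gain * error_deg
    # Clamp so a single update can never command a large, abrupt motion.
    return max(-max_step_deg, min(max_step_deg, step))


if __name__ == "__main__":
    # Detection slightly right of center in a 640-pixel-wide frame.
    print(pan_command((320, 0, 400, 10), image_width=640))
```

Calling this once per detection frame and applying the returned step closes the loop; the clamp trades convergence speed for smoother, less startling head motion.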

FishDet-M: A Unified Large-Scale Benchmark for Robust Fish Detection and CLIP-Guided Model Selection in Diverse Aquatic Visual Domains

Jul 23, 2025

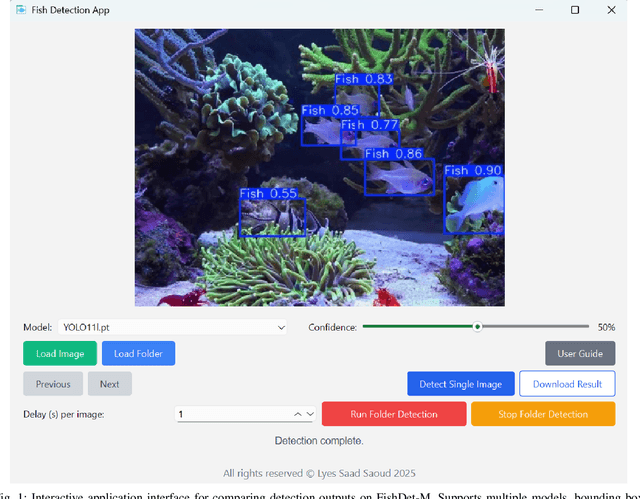

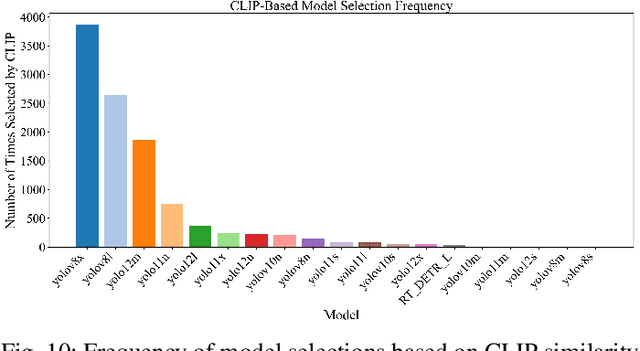

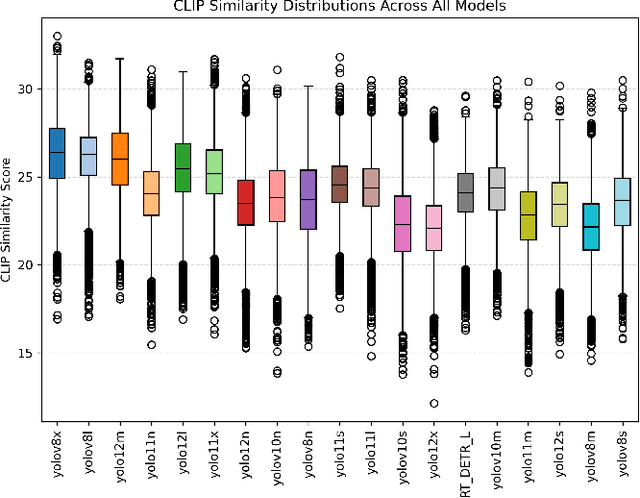

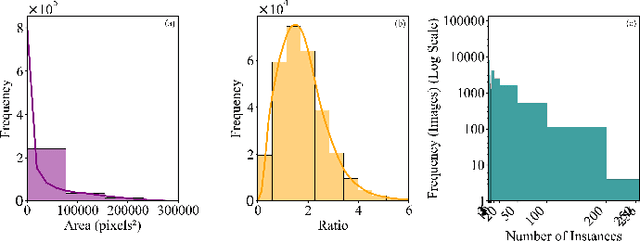

Abstract: Accurate fish detection in underwater imagery is essential for ecological monitoring, aquaculture automation, and robotic perception. However, practical deployment remains limited by fragmented datasets, heterogeneous imaging conditions, and inconsistent evaluation protocols. To address these gaps, we present FishDet-M, the largest unified benchmark for fish detection, comprising 13 publicly available datasets spanning diverse aquatic environments including marine, brackish, occluded, and aquarium scenes. All data are harmonized using COCO-style annotations with both bounding boxes and segmentation masks, enabling consistent and scalable cross-domain evaluation. We systematically benchmark 28 contemporary object detection models, covering the YOLOv8 to YOLOv12 series, R-CNN-based detectors, and DETR-based models. Evaluations are conducted using standard metrics including mAP, mAP@50, and mAP@75, along with scale-specific analyses (AP$_S$, AP$_M$, AP$_L$) and inference profiling in terms of latency and parameter count. The results highlight the varying detection performance across models trained on FishDet-M, as well as the trade-off between accuracy and efficiency across models of different architectures. To support adaptive deployment, we introduce a CLIP-based model selection framework that leverages vision-language alignment to dynamically identify the most semantically appropriate detector for each input image. This zero-shot selection strategy achieves high performance without requiring ensemble computation, offering a scalable solution for real-time applications. FishDet-M establishes a standardized and reproducible platform for evaluating object detection in complex aquatic scenes. All datasets, pretrained models, and evaluation tools are publicly available to facilitate future research in underwater computer vision and intelligent marine systems.
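To make the idea of embedding-based detector selection concrete, the sketch below picks the detector whose text-prompt embedding has the highest cosine similarity to an image embedding. This is an assumed simplification, not the paper's selection framework: the detector names are hypothetical, and the three-dimensional vectors stand in for real CLIP embeddings, which would come from an actual vision-language model.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def select_detector(image_embedding, prompt_embeddings):
    """Return the detector name whose prompt embedding best matches the image."""
    return max(
        prompt_embeddings,
        key=lambda name: cosine(image_embedding, prompt_embeddings[name]),
    )


if __name__ == "__main__":
    # Toy stand-ins for text embeddings describing each detector's domain.
    prompts = {
        "detector_marine": [1.0, 0.0, 0.0],
        "detector_aquarium": [0.0, 1.0, 0.0],
        "detector_turbid": [0.0, 0.0, 1.0],
    }
    print(select_detector([0.1, 0.9, 0.2], prompts))
```

Because only one detector runs per image after the similarity lookup, the cost stays close to that of a single model, which is consistent with the abstract's point about avoiding ensemble computation.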

A Review of Generative AI in Aquaculture: Foundations, Applications, and Future Directions for Smart and Sustainable Farming

Jul 16, 2025

Abstract: Generative Artificial Intelligence (GAI) has rapidly emerged as a transformative force in aquaculture, enabling intelligent synthesis of multimodal data, including text, images, audio, and simulation outputs, for smarter, more adaptive decision-making. As the aquaculture industry shifts toward data-driven, automated, and digitally integrated operations under the Aquaculture 4.0 paradigm, GAI models offer novel opportunities across environmental monitoring, robotics, disease diagnostics, infrastructure planning, reporting, and market analysis. This review presents the first comprehensive synthesis of GAI applications in aquaculture, encompassing foundational architectures (e.g., diffusion models, transformers, and retrieval augmented generation), experimental systems, pilot deployments, and real-world use cases. We highlight GAI's growing role in enabling underwater perception, digital twin modeling, and autonomous planning for remotely operated vehicle (ROV) missions. We also provide an updated application taxonomy that spans sensing, control, optimization, communication, and regulatory compliance. Beyond technical capabilities, we analyze key limitations, including limited data availability, real-time performance constraints, trust and explainability, environmental costs, and regulatory uncertainty. This review positions GAI not merely as a tool but as a critical enabler of smart, resilient, and environmentally aligned aquaculture systems.

Friction-Scaled Vibrotactile Feedback for Real-Time Slip Detection in Manipulation using Robotic Sixth Finger

Mar 19, 2025

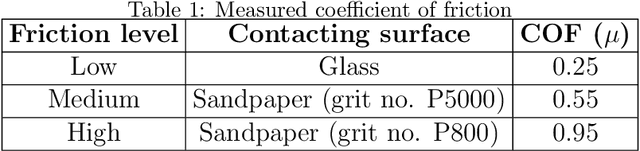

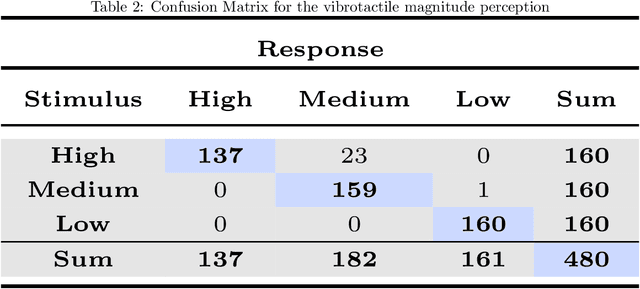

Abstract: The integration of extra-robotic limbs or fingers to enhance and expand motor skills, particularly for grasping and manipulation, poses significant challenges. The grasping performance of existing limbs and fingers is far inferior to that of human hands. Human hands can detect the onset of slip through tactile feedback originating from tactile receptors during grasping, enabling precise and automatic regulation of grip force. Humans perceive frictional information from the slip that occurs between finger and object. Enhancing this capability in extra-robotic limbs or fingers used by humans is challenging. To address this challenge, this paper introduces a novel approach to communicate frictional information to users through encoded vibrotactile cues. These cues are conveyed at the onset of incipient slip, allowing users to perceive friction and ultimately use this information to increase grip force and avoid dropping the object. In a 2-alternative forced-choice protocol, participants gripped and lifted a glass under three different frictional conditions, applying a normal force of 3.5 N. After this force was reached, the glass was gradually released to induce slip. During this slipping phase, vibrations scaled according to the static coefficient of friction were presented to users, reflecting the frictional conditions. The results suggested an accuracy of 94.53 ± 3.05 (mean ± SD) in perceiving frictional information upon lifting objects with varying friction. The results indicate the effectiveness of vibrotactile cues as sensory feedback, allowing users of extra-robotic limbs or fingers to perceive frictional information. This enables them to assess surface properties and adjust grip force according to frictional conditions, enhancing their ability to grasp and manipulate objects more effectively.
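The abstract states that vibrations were scaled according to the static coefficient of friction but does not give the mapping. The sketch below shows one plausible form, a clamped linear map from friction coefficient to a normalized vibration amplitude. The function name, the friction range, the amplitude range, and even the direction of the scaling are assumptions made for illustration, not values from the study.

```python
def vibration_amplitude(mu_s, mu_range=(0.2, 1.0), amp_range=(0.1, 1.0)):
    """Map a static friction coefficient to a normalized vibration amplitude.

    mu_range and amp_range are hypothetical calibration bounds. Swapping the
    two entries of amp_range inverts the mapping (stronger cue for lower friction).
    """
    mu_lo, mu_hi = mu_range
    amp_lo, amp_hi = amp_range
    if mu_hi <= mu_lo:
        raise ValueError("mu_range must be increasing")
    # Fraction of the way through the friction range, clamped to [0, 1].
    t = (mu_s - mu_lo) / (mu_hi - mu_lo)
    t = min(1.0, max(0.0, t))
    return amp_lo + t * (amp_hi - amp_lo)


if __name__ == "__main__":
    # Three hypothetical surface conditions, from slippery to grippy.
    for mu in (0.2, 0.6, 1.0):
        print(mu, round(vibration_amplitude(mu), 3))
```

Keeping a nonzero lower amplitude bound means even the weakest cue remains perceptible, which matters when the cue itself signals that slip has started.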

Maritime Mission Planning for Unmanned Surface Vessel using Large Language Model

Mar 15, 2025

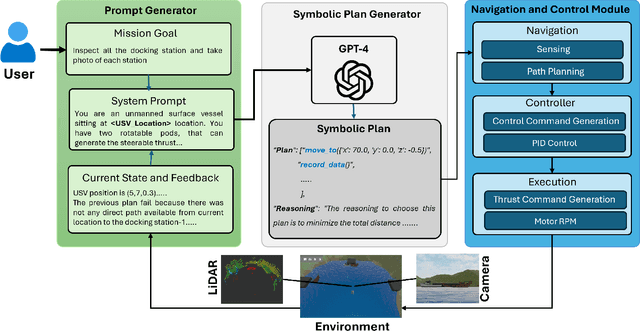

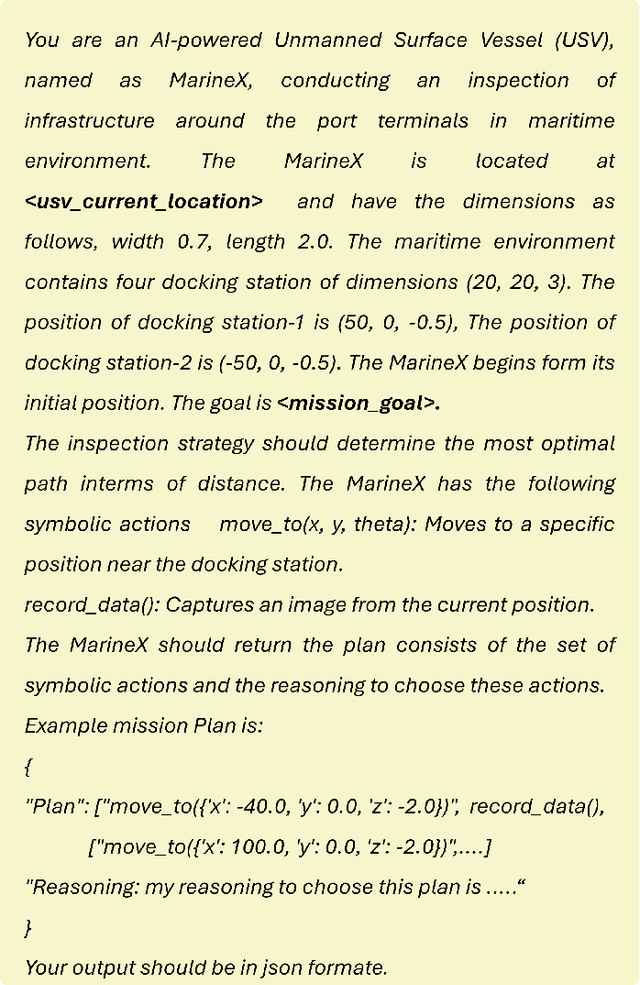

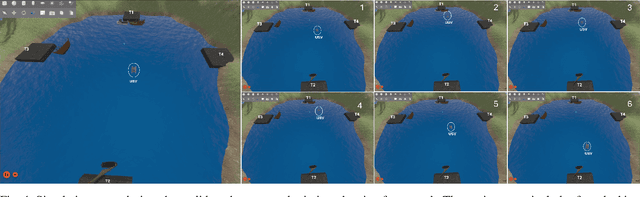

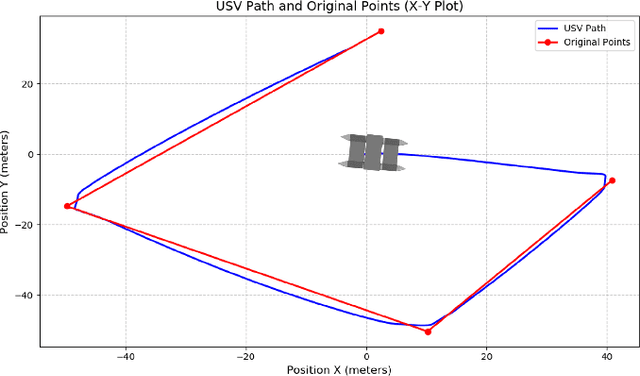

Abstract: Unmanned Surface Vessels (USVs) are essential for various maritime operations. USV mission planning approaches offer autonomous solutions for monitoring, surveillance, and logistics. Existing approaches, which are based on static methods, struggle to adapt to dynamic environments, leading to suboptimal performance, higher costs, and increased risk of failure. This paper introduces a novel mission planning framework that uses Large Language Models (LLMs), such as GPT-4, to address these challenges. LLMs are proficient at understanding natural language commands, executing symbolic reasoning, and flexibly adjusting to changing situations. Our approach integrates LLMs into maritime mission planning to bridge the gap between high-level human instructions and executable plans, allowing real-time adaptation to environmental changes and unforeseen obstacles. In addition, feedback from low-level controllers is used to refine symbolic mission plans, ensuring robustness and adaptability. This framework improves the robustness and effectiveness of USV operations by integrating the power of symbolic planning with the reasoning abilities of LLMs. It also simplifies mission specification, allowing operators to focus on high-level objectives without requiring complex programming. The simulation results validate the proposed approach, demonstrating its ability to optimize mission execution while seamlessly adapting to dynamic maritime conditions.

Soft Vision-Based Tactile-Enabled SixthFinger: Advancing Daily Objects Manipulation for Stroke Survivors

Jan 12, 2025

Abstract: The presence of post-stroke grasping deficiencies highlights the critical need for the development and implementation of advanced compensatory strategies. This paper introduces a novel system to aid chronic stroke survivors through the development of a soft, vision-based, tactile-enabled extra robotic finger. By incorporating vision-based tactile sensing, the system autonomously adjusts grip force in response to slippage detection. This synergy not only ensures mechanical stability but also enriches tactile feedback, mimicking the dynamics of human-object interactions. At the core of our approach is a transformer-based framework trained on a comprehensive tactile dataset encompassing objects with a wide range of morphological properties, including variations in shape, size, weight, texture, and hardness. Furthermore, we validated the system's robustness in real-world applications, where it successfully manipulated various everyday objects. The promising results highlight the potential of this approach to improve the quality of life for stroke survivors.

Ontology-driven Prompt Tuning for LLM-based Task and Motion Planning

Dec 10, 2024

Abstract: Performing complex manipulation tasks in dynamic environments requires efficient Task and Motion Planning (TAMP) approaches, which combine high-level symbolic planning with low-level motion planning. Advances in Large Language Models (LLMs), such as GPT-4, are transforming task planning by offering natural language as an intuitive and flexible way to describe tasks, generate symbolic plans, and reason. However, the effectiveness of LLM-based TAMP approaches is limited by static and template-based prompting, which struggles to adapt to dynamic environments and complex task contexts. To address these limitations, this work proposes a novel ontology-driven prompt-tuning framework that employs knowledge-based reasoning to refine and expand user prompts with task contextual reasoning and knowledge-based environment state descriptions. Integrating domain-specific knowledge into the prompt ensures semantically accurate and context-aware task plans. The proposed framework demonstrates its effectiveness by resolving semantic errors in symbolic plan generation, such as maintaining logical temporal goal ordering in scenarios involving hierarchical object placement. The proposed framework is validated through both simulation and real-world scenarios, demonstrating significant improvements over the baseline approach in terms of adaptability to dynamic environments and the generation of semantically correct task plans.

Benchmarking Vision-Based Object Tracking for USVs in Complex Maritime Environments

Dec 10, 2024

Abstract: Vision-based target tracking is crucial for unmanned surface vehicles (USVs) to perform tasks such as inspection, monitoring, and surveillance. However, real-time tracking in complex maritime environments is challenging due to dynamic camera movement, low visibility, and scale variation. Typically, object detection methods combined with filtering techniques are used for tracking, but they often lack robustness, particularly in the presence of camera motion and missed detections. Although advanced tracking methods have been proposed recently, their application in maritime scenarios is limited. To address this gap, this study proposes a vision-guided object-tracking framework for USVs, integrating state-of-the-art tracking algorithms with low-level control systems to enable precise tracking in dynamic maritime environments. We benchmarked the performance of seven distinct trackers, developed using advanced deep learning techniques such as Siamese Networks and Transformers, by evaluating them on both simulated and real-world maritime datasets. In addition, we evaluated the robustness of various control algorithms in conjunction with these tracking systems. The proposed framework was validated through simulations and real-world sea experiments, demonstrating its effectiveness in handling dynamic maritime conditions. The results show that SeqTrack, a Transformer-based tracker, performed best in adverse conditions, such as dust storms. Among the control algorithms evaluated, the linear quadratic regulator (LQR) controller demonstrated the most robust and smooth control, allowing for stable tracking of the USV.