Jan Rosell

Lang2Manip: A Tool for LLM-Based Symbolic-to-Geometric Planning for Manipulation

Dec 18, 2025

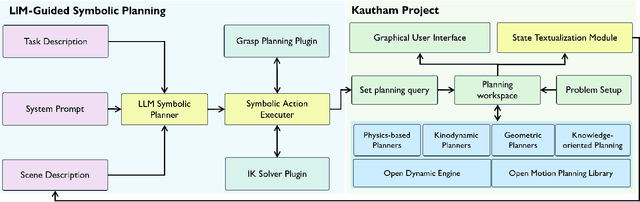

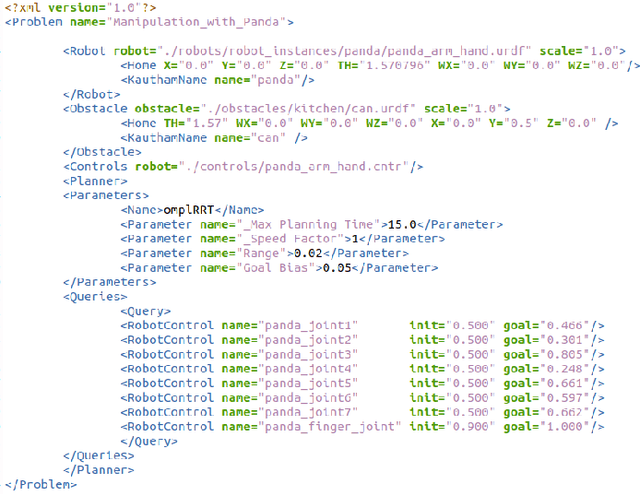

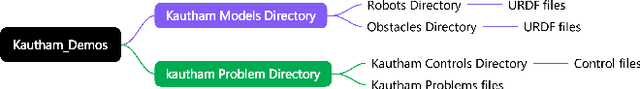

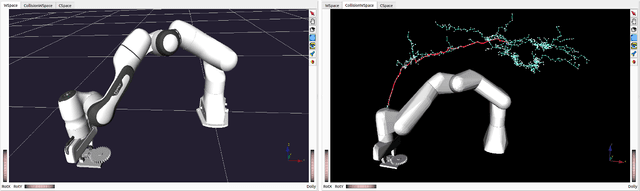

Abstract: Simulation is essential for developing robotic manipulation systems, particularly for task and motion planning (TAMP), where symbolic reasoning interfaces with geometric, kinematic, and physics-based execution. Recent advances in Large Language Models (LLMs) enable robots to generate symbolic plans from natural language, yet executing these plans in simulation often requires robot-specific engineering or planner-dependent integration. In this work, we present a unified pipeline that connects an LLM-based symbolic planner with the Kautham motion planning framework to achieve generalizable, robot-agnostic symbolic-to-geometric manipulation. Kautham provides ROS-compatible support for a wide range of industrial manipulators and offers geometric, kinodynamic, physics-driven, and constraint-based motion planning under a single interface. Our system converts language instructions into symbolic actions and computes and executes collision-free trajectories using any of Kautham's planners without additional coding. The result is a flexible and scalable tool for language-driven TAMP that generalizes across robots, planning modalities, and manipulation tasks.

Ontology-driven Prompt Tuning for LLM-based Task and Motion Planning

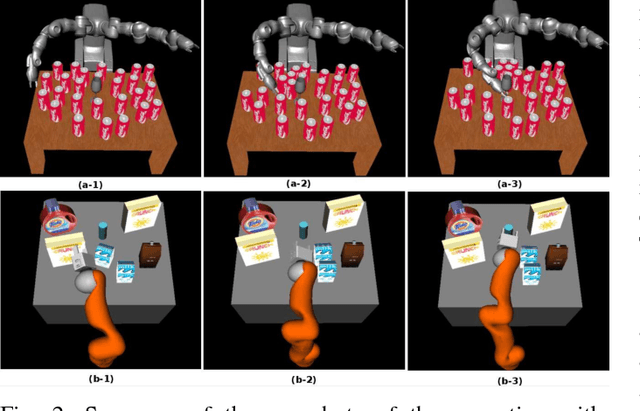

Dec 10, 2024

Abstract: Performing complex manipulation tasks in dynamic environments requires efficient Task and Motion Planning (TAMP) approaches, which combine high-level symbolic planning with low-level motion planning. Advances in Large Language Models (LLMs), such as GPT-4, are transforming task planning by offering natural language as an intuitive and flexible way to describe tasks, generate symbolic plans, and reason. However, the effectiveness of LLM-based TAMP approaches is limited by static, template-based prompting, which struggles to adapt to dynamic environments and complex task contexts. To address these limitations, this work proposes a novel ontology-driven prompt-tuning framework that employs knowledge-based reasoning to refine and expand user prompts with task contextual reasoning and knowledge-based environment state descriptions. Integrating domain-specific knowledge into the prompt ensures semantically accurate and context-aware task plans. The proposed framework demonstrates its effectiveness by resolving semantic errors in symbolic plan generation, such as maintaining logical temporal goal ordering in scenarios involving hierarchical object placement. The framework is validated in both simulation and real-world scenarios, demonstrating significant improvements over the baseline approach in adaptability to dynamic environments and in the generation of semantically correct task plans.

Randomized Physics-based Motion Planning for Grasping in Cluttered and Uncertain Environments

Nov 27, 2017

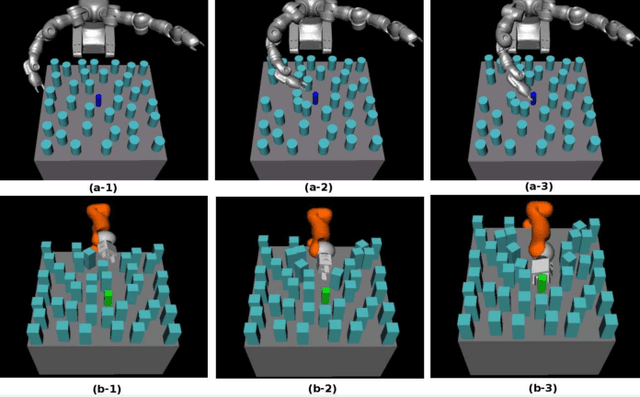

Abstract: Planning motions to grasp an object in cluttered and uncertain environments is a challenging task, particularly when a collision-free trajectory does not exist and the objects obstructing the way must be carefully grasped and moved out. This paper takes a different approach and proposes to address this problem by using a randomized physics-based motion planner that permits robot-object and object-object interactions. The main idea is to avoid explicit high-level reasoning about the task by providing the motion planner with a physics engine to evaluate possible complex multi-body dynamical interactions. The approach is able to solve the problem in complex scenarios, also considering uncertainty in the object poses and in the contact dynamics. The work enhances the state validity checker, the control sampler and the tree exploration strategy of a kinodynamic motion planner called KPIECE. The enhanced algorithm, called p-KPIECE, has been validated in simulation and with real experiments. The results have been compared with an ontological physics-based motion planner and with task and motion planning approaches, showing a significant improvement in terms of planning time, success rate and quality of the solution path.

Ontological Physics-based Motion Planning for Manipulation

Oct 29, 2017

Abstract: Robotic manipulation involves actions where contacts occur between the robot and the objects. In this scope, the availability of physics-based engines allows motion planners to account for the dynamics between rigid bodies, which is necessary for planning this type of action. However, physics-based motion planning is computationally intensive due to the high dimensionality of the state space and the need to work with a small integration step to find accurate solutions. On the other hand, manipulation actions change the environment and condition further actions and motions. To cope with this issue, the representation of manipulation actions using ontologies enables a semantic-based inference process that alleviates the computational cost of motion planning. This paper proposes a manipulation planning framework where physics-based motion planning is enhanced with ontological knowledge representation and reasoning. The proposal has been implemented and is illustrated and validated with a simple example. Its use in grasping tasks in cluttered environments is currently under development.

Physics-based Motion Planning with Temporal Logic Specifications

Oct 01, 2017

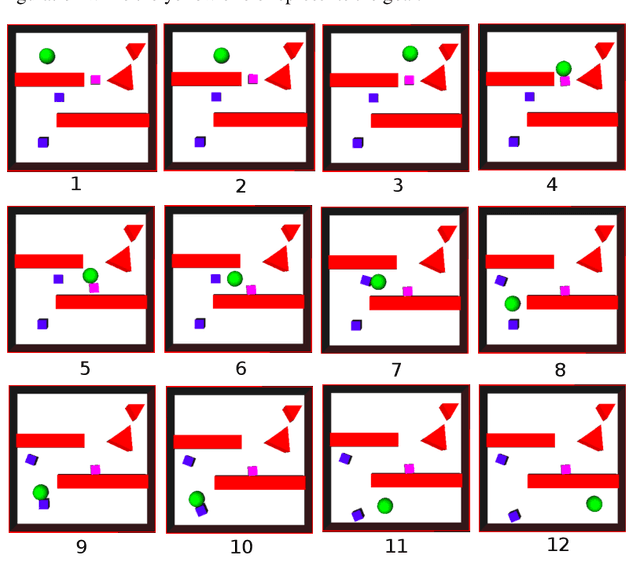

Abstract: One of the main foci of robotics nowadays is providing robots with a great degree of autonomy. A fundamental step in this direction is to give them the ability to plan in discrete and continuous spaces to find the motions required to complete a complex task. Along this line, some recent approaches describe tasks with Linear Temporal Logic (LTL) and reason on discrete actions to guide sampling-based motion planning, with the aim of finding dynamically feasible motions that satisfy the temporal-logic task specifications. The present paper proposes an LTL planning approach that, on the one hand, is enhanced with the use of ontologies to describe and reason about the task and, on the other hand, includes physics-based motion planning to allow the purposeful manipulation of objects. The proposal has been implemented and is illustrated with didactic examples with a mobile robot in simple scenarios where some of the goals are occupied by objects that must be removed in order to fulfill the task.

$κ$-PMP: Enhancing Physics-based Motion Planners with Knowledge-based Reasoning

Sep 30, 2017

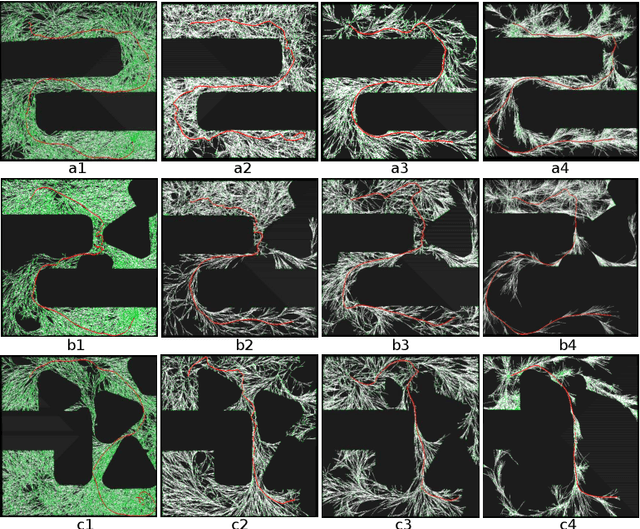

Abstract: Physics-based motion planning is a challenging task, since it requires the computation of the robot motions while allowing possible interactions with (some of) the obstacles in the environment. Kinodynamic motion planners equipped with a dynamic engine acting as state propagator are usually used for that purpose. The difficulties arise in setting adequate forces for the interactions and because these interactions may change the pose of the manipulatable obstacles, thus either facilitating or preventing the finding of a solution path. The use of knowledge can alleviate the stated difficulties. This paper proposes the use of an enhanced state propagator composed of a dynamic engine and a low-level geometric reasoning process that is used to determine how to interact with the objects, i.e. from where and with which forces. The proposal, called $κ$-PMP, can be used with any kinodynamic planner, thus giving rise to e.g. $κ$-RRT. The approach also includes a preprocessing step that infers, from semantic abstract knowledge described in terms of an ontology, the manipulation knowledge required by the reasoning process. The proposed approach has been validated with several examples involving a holonomic mobile robot, a robot with differential constraints and a serial manipulator, and benchmarked using several state-of-the-art kinodynamic planners. The results showed a significant difference in the power consumption with respect to simple physics-based planning, and an improvement in the success rate and in the quality of the solution paths.

Physics-based Motion Planning: Evaluation Criteria and Benchmarking

Sep 30, 2017

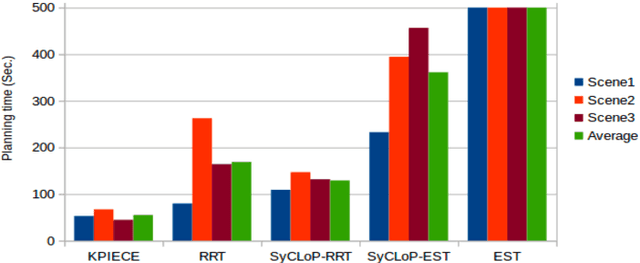

Abstract: Motion planning has evolved from coping with purely geometric problems to physics-based ones that incorporate the kinodynamic and physical constraints imposed by the robot and the physical world. Therefore, the criteria for evaluating physics-based motion planners go beyond the computational complexity (e.g. in terms of planning time) usually used as a measure for evaluating geometric planners, in order to also consider the quality of the solution in terms of dynamical parameters. This study proposes a set of evaluation criteria and analyzes the performance of several kinodynamic planners, which are at the core of physics-based motion planning, using different scenarios with fixed and manipulatable objects. RRT, EST, KPIECE and SyCLoP are used for the benchmarking. The results show that KPIECE computes the time-optimal solution with the highest success rate, whereas SyCLoP computes the most power-optimal solution among the planners used.
