Yihao Dong

Maritime Mission Planning for Unmanned Surface Vessel using Large Language Model

Mar 15, 2025

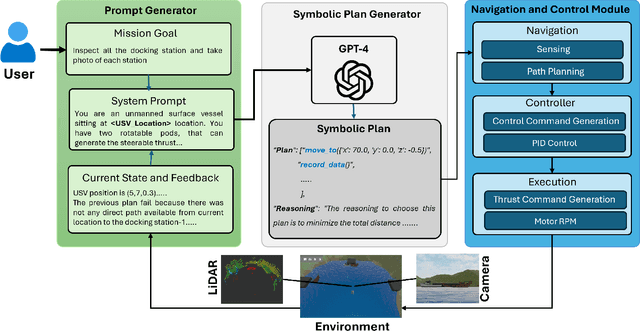

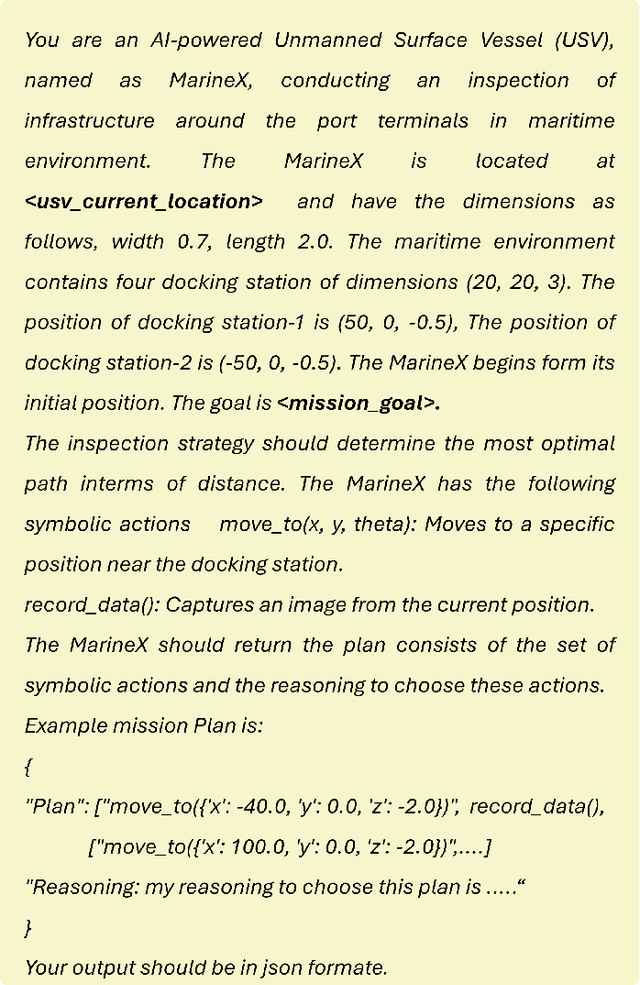

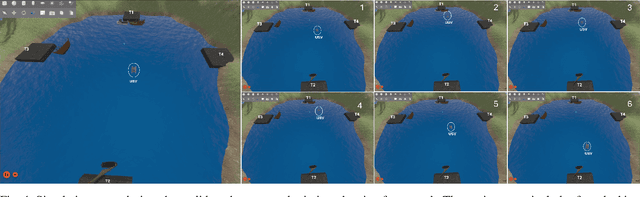

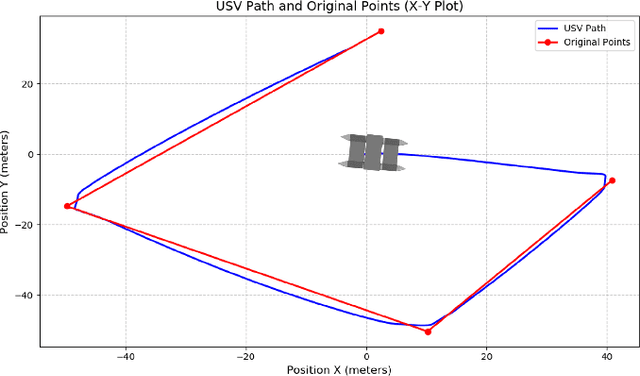

Abstract:Unmanned Surface Vessels (USVs) are essential for various maritime operations. USV mission planning approach offers autonomous solutions for monitoring, surveillance, and logistics. Existing approaches, which are based on static methods, struggle to adapt to dynamic environments, leading to suboptimal performance, higher costs, and increased risk of failure. This paper introduces a novel mission planning framework that uses Large Language Models (LLMs), such as GPT-4, to address these challenges. LLMs are proficient at understanding natural language commands, executing symbolic reasoning, and flexibly adjusting to changing situations. Our approach integrates LLMs into maritime mission planning to bridge the gap between high-level human instructions and executable plans, allowing real-time adaptation to environmental changes and unforeseen obstacles. In addition, feedback from low-level controllers is utilized to refine symbolic mission plans, ensuring robustness and adaptability. This framework improves the robustness and effectiveness of USV operations by integrating the power of symbolic planning with the reasoning abilities of LLMs. In addition, it simplifies the mission specification, allowing operators to focus on high-level objectives without requiring complex programming. The simulation results validate the proposed approach, demonstrating its ability to optimize mission execution while seamlessly adapting to dynamic maritime conditions.

Benchmarking Vision-Based Object Tracking for USVs in Complex Maritime Environments

Dec 10, 2024Abstract:Vision-based target tracking is crucial for unmanned surface vehicles (USVs) to perform tasks such as inspection, monitoring, and surveillance. However, real-time tracking in complex maritime environments is challenging due to dynamic camera movement, low visibility, and scale variation. Typically, object detection methods combined with filtering techniques are commonly used for tracking, but they often lack robustness, particularly in the presence of camera motion and missed detections. Although advanced tracking methods have been proposed recently, their application in maritime scenarios is limited. To address this gap, this study proposes a vision-guided object-tracking framework for USVs, integrating state-of-the-art tracking algorithms with low-level control systems to enable precise tracking in dynamic maritime environments. We benchmarked the performance of seven distinct trackers, developed using advanced deep learning techniques such as Siamese Networks and Transformers, by evaluating them on both simulated and real-world maritime datasets. In addition, we evaluated the robustness of various control algorithms in conjunction with these tracking systems. The proposed framework was validated through simulations and real-world sea experiments, demonstrating its effectiveness in handling dynamic maritime conditions. The results show that SeqTrack, a Transformer-based tracker, performed best in adverse conditions, such as dust storms. Among the control algorithms evaluated, the linear quadratic regulator controller (LQR) demonstrated the most robust and smooth control, allowing for stable tracking of the USV.

An Aerial Transport System in Marine GNSS-Denied Environment

Nov 03, 2024

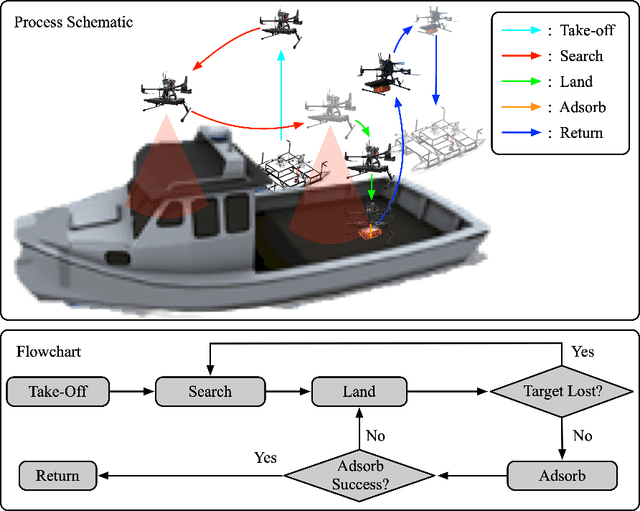

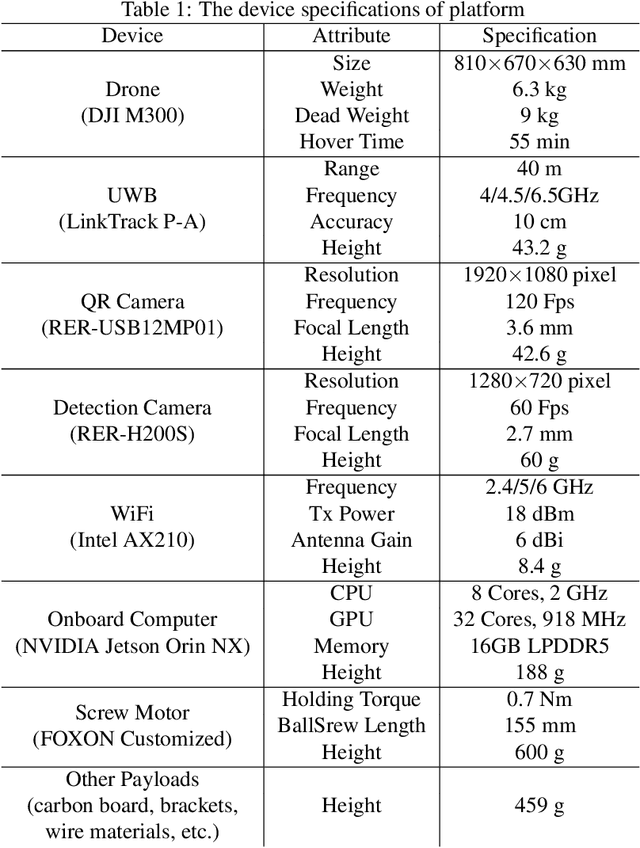

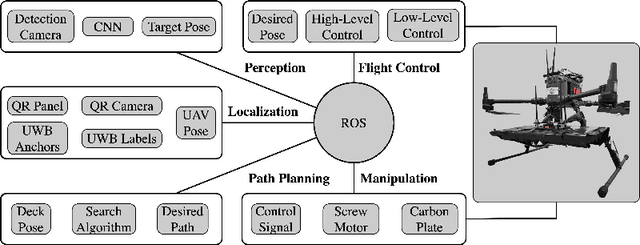

Abstract:This paper presents an autonomous aerial system specifically engineered for operation in challenging marine GNSS-denied environments, aimed at transporting small cargo from a target vessel. In these environments, characterized by weakly textured sea surfaces with few feature points, chaotic deck oscillations due to waves, and significant wind gusts, conventional navigation methods often prove inadequate. Leveraging the DJI M300 platform, our system is designed to autonomously navigate and transport cargo while overcoming these environmental challenges. In particular, this paper proposes an anchor-based localization method using ultrawideband (UWB) and QR codes facilities, which decouples the UAV's attitude from that of the moving landing platform, thus reducing control oscillations caused by platform movement. Additionally, a motor-driven attachment mechanism for cargo is designed, which enhances the UAV's field of view during descent and ensures a reliable attachment to the cargo upon landing. The system's reliability and effectiveness were progressively enhanced through multiple outdoor experimental iterations and were validated by the successful cargo transport during the 2024 Mohamed BinZayed International Robotics Challenge (MBZIRC2024) competition. Crucially, the system addresses uncertainties and interferences inherent in maritime transportation missions without prior knowledge of cargo locations on the deck and with strict limitations on intervention throughout the transportation.

Long-Range Vision-Based UAV-assisted Localization for Unmanned Surface Vehicles

Aug 21, 2024Abstract:The global positioning system (GPS) has become an indispensable navigation method for field operations with unmanned surface vehicles (USVs) in marine environments. However, GPS may not always be available outdoors because it is vulnerable to natural interference and malicious jamming attacks. Thus, an alternative navigation system is required when the use of GPS is restricted or prohibited. To this end, we present a novel method that utilizes an Unmanned Aerial Vehicle (UAV) to assist in localizing USVs in GNSS-restricted marine environments. In our approach, the UAV flies along the shoreline at a consistent altitude, continuously tracking and detecting the USV using a deep learning-based approach on camera images. Subsequently, triangulation techniques are applied to estimate the USV's position relative to the UAV, utilizing geometric information and datalink range from the UAV. We propose adjusting the UAV's camera angle based on the pixel error between the USV and the image center throughout the localization process to enhance accuracy. Additionally, visual measurements are integrated into an Extended Kalman Filter (EKF) for robust state estimation. To validate our proposed method, we utilize a USV equipped with onboard sensors and a UAV equipped with a camera. A heterogeneous robotic interface is established to facilitate communication between the USV and UAV. We demonstrate the efficacy of our approach through a series of experiments conducted during the ``Muhammad Bin Zayed International Robotic Challenge (MBZIRC-2024)'' in real marine environments, incorporating noisy measurements and ocean disturbances. The successful outcomes indicate the potential of our method to complement GPS for USV navigation.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge