Oussama Khatib

Friction-Scaled Vibrotactile Feedback for Real-Time Slip Detection in Manipulation using Robotic Sixth Finger

Mar 19, 2025

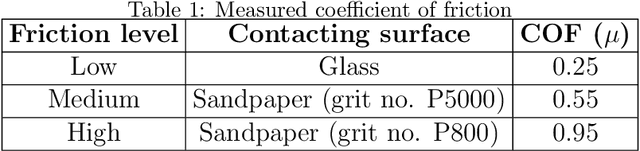

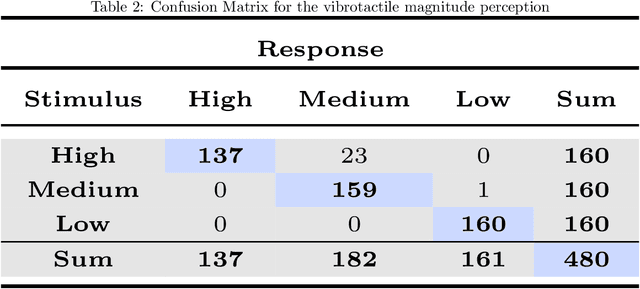

Abstract: The integration of extra robotic limbs or fingers to enhance and expand motor skills, particularly for grasping and manipulation, poses significant challenges. The grasping performance of such limbs and fingers is far inferior to that of human hands. Human hands detect the onset of slip through feedback from tactile receptors during grasping, enabling precise and automatic regulation of grip force. Humans perceive frictional information from the slip that occurs between finger and object. Reproducing this capability in extra robotic limbs or fingers used by humans is challenging. To address this challenge, this paper introduces a novel approach that communicates frictional information to users through encoded vibrotactile cues. These cues are delivered at the onset of incipient slip, allowing users to perceive friction and use this information to increase grip force and avoid dropping the object. In a 2-alternative forced-choice protocol, participants gripped and lifted a glass under three different frictional conditions, applying a normal force of 3.5 N. After this force was reached, the glass was gradually released to induce slip. During this slipping phase, vibrations scaled according to the static coefficient of friction were presented to users, reflecting the frictional conditions. The results suggest an accuracy of 94.53 ± 3.05 (mean ± SD) in perceiving frictional information when lifting objects with varying friction. These results indicate that vibrotactile feedback is effective as sensory feedback, allowing users of extra robotic limbs or fingers to perceive frictional information. This enables them to assess surface properties and adjust grip force according to frictional conditions, enhancing their ability to grasp and manipulate objects.
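The cue logic described in this abstract can be illustrated with a minimal sketch. It assumes a simple Coulomb friction model and is not the authors' implementation: the function names, the margin threshold, the friction range `mu_min`..`mu_max`, and the choice to make amplitude grow with the friction coefficient are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class VibrationCue:
    active: bool      # whether the vibrotactile actuator should fire
    amplitude: float  # normalized drive amplitude in [0, 1]


def slip_margin(normal_force, tangential_force, mu_static):
    """Coulomb friction margin in newtons: positive means the grasp holds."""
    return mu_static * normal_force - abs(tangential_force)


def vibrotactile_cue(normal_force, tangential_force, mu_static,
                     mu_min=0.2, mu_max=1.0, margin_threshold=0.1):
    """Fire a cue near incipient slip, scaled by the static friction coefficient.

    Assumptions (not from the paper): the threshold value, the friction
    range, and the direction of the amplitude mapping.
    """
    margin = slip_margin(normal_force, tangential_force, mu_static)
    if margin > margin_threshold:
        return VibrationCue(active=False, amplitude=0.0)
    # Map mu_static linearly onto [0, 1] and clamp.
    scale = (mu_static - mu_min) / (mu_max - mu_min)
    scale = min(1.0, max(0.0, scale))
    return VibrationCue(active=True, amplitude=scale)


if __name__ == "__main__":
    # Grip at 3.5 N, then a reduced grip force that brings the grasp to the slip limit.
    print(vibrotactile_cue(3.5, 1.0, 0.5))
    print(vibrotactile_cue(2.0, 1.0, 0.5))
```

With a 3.5 N grip and a 1.0 N tangential load, the margin stays positive and no cue fires; once the grip is released to 2.0 N the margin reaches zero and the cue is emitted with an amplitude that depends only on the friction condition.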

TidyBot++: An Open-Source Holonomic Mobile Manipulator for Robot Learning

Dec 11, 2024

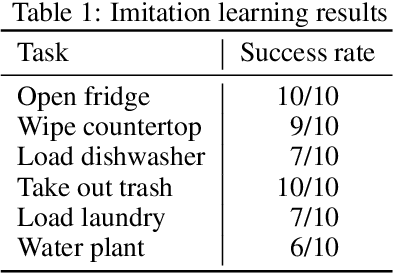

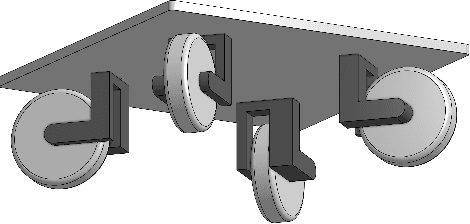

Abstract: Exploiting the promise of recent advances in imitation learning for mobile manipulation will require the collection of large numbers of human-guided demonstrations. This paper proposes an open-source design for an inexpensive, robust, and flexible mobile manipulator that can support arbitrary arms, enabling a wide range of real-world household mobile manipulation tasks. Crucially, our design uses powered casters to enable the mobile base to be fully holonomic, able to control all planar degrees of freedom independently and simultaneously. This feature makes the base more maneuverable and simplifies many mobile manipulation tasks, eliminating the kinematic constraints that create complex and time-consuming motions in nonholonomic bases. We equip our robot with an intuitive mobile phone teleoperation interface to enable easy data acquisition for imitation learning. In our experiments, we use this interface to collect data and show that the resulting learned policies can successfully perform a variety of common household mobile manipulation tasks.
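To illustrate why powered casters make a base holonomic, the sketch below uses the textbook kinematic model of a single powered caster. It is an assumption for illustration, not code from the TidyBot++ release: the function name, parameter names, and sign conventions (wheel contact trailing the steering pivot by `caster_offset`, steering angle measured in the body frame) are hypothetical.

```python
import math


def caster_joint_rates(vx, vy, omega, pivot_x, pivot_y,
                       steer_angle, wheel_radius, caster_offset):
    """Joint rates of one powered caster for a desired body twist.

    vx, vy, omega: desired base velocity in the body frame (m/s, m/s, rad/s).
    pivot_x, pivot_y: steering pivot position in the body frame (m).
    steer_angle: current rolling direction relative to the body x-axis (rad).
    Returns (roll_rate, steer_rate) in rad/s.
    """
    # Velocity of the steering pivot, a point rigidly attached to the base.
    pivot_vx = vx - omega * pivot_y
    pivot_vy = vy + omega * pivot_x
    c, s = math.cos(steer_angle), math.sin(steer_angle)
    # The component along the rolling direction is produced by wheel roll.
    roll_rate = (c * pivot_vx + s * pivot_vy) / wheel_radius
    # The lateral component is produced by swinging the offset wheel about
    # the pivot; subtract the base yaw rate to get the steering joint rate.
    steer_rate = (-s * pivot_vx + c * pivot_vy) / caster_offset - omega
    return roll_rate, steer_rate


if __name__ == "__main__":
    # Pure sideways motion with the wheel pointing forward is still feasible:
    # the steering joint supplies the lateral velocity immediately.
    print(caster_joint_rates(0.0, 0.1, 0.0, 0.2, 0.2, 0.0, 0.05, 0.02))
```

Because the offset is nonzero, any combination of `vx`, `vy`, and `omega` maps to finite joint rates at any steering angle, which is the property that lets the base control all planar degrees of freedom at once.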

Elly: A Real-Time Failure Recovery and Data Collection System for Robotic Manipulation

Aug 25, 2022

Abstract: Even the most robust autonomous behaviors can fail. The goal of this research is both to recover from failures during autonomous task execution and to collect data from them, so that they can be prevented in the future. We propose haptic intervention for real-time failure recovery and data collection. Elly is a system that allows for seamless transitions between autonomous robot behaviors and human intervention while collecting sensory information from the human's recovery strategy. The system and our design choices were experimentally validated on a single-arm task -- installing a lightbulb in a socket -- and a bimanual task -- screwing a cap on a bottle -- using two 7-DOF manipulators equipped with 4-finger grippers. In these examples, Elly achieved over 80% task completion during a total of 40 runs.

WSR: A WiFi Sensor for Collaborative Robotics

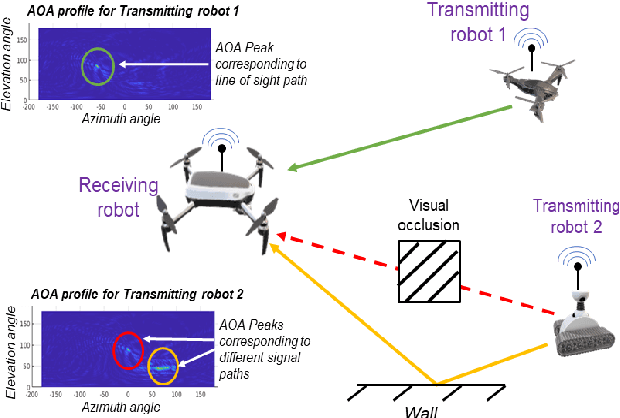

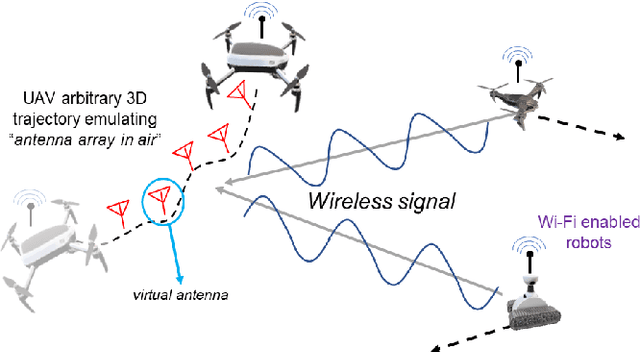

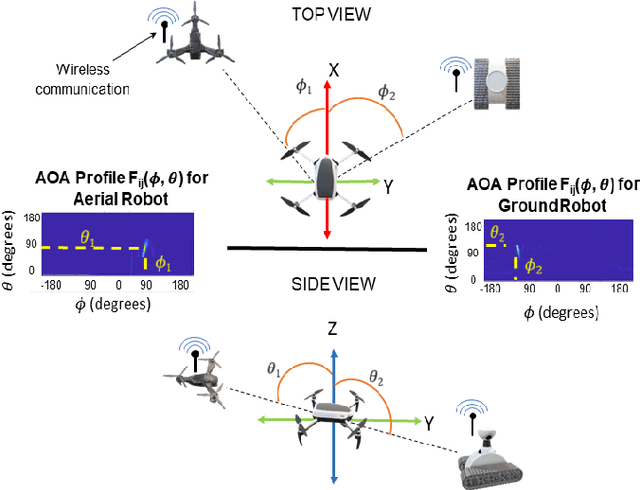

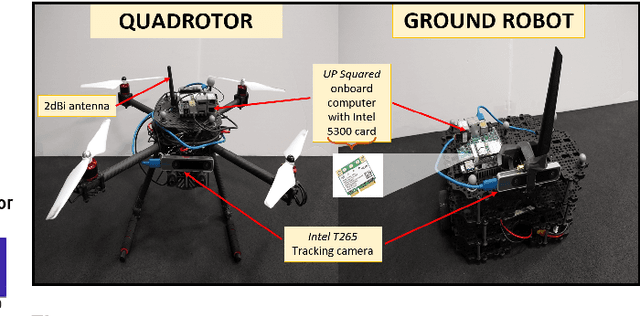

Dec 08, 2020

Abstract: In this paper we derive a new capability for robots to measure relative direction, or Angle-of-Arrival (AOA), to other robots operating in non-line-of-sight and unmapped environments with occlusions, without requiring external infrastructure. We do so by capturing all of the paths that a WiFi signal traverses as it travels from a transmitting to a receiving robot, which we term an AOA profile. The key intuition is to "emulate antenna arrays in the air" as the robots move in 3D space, a method akin to Synthetic Aperture Radar (SAR). The main contributions include development of i) a framework to accommodate arbitrary 3D trajectories, as well as continuous mobility of all robots, while computing AOA profiles and ii) an accompanying analysis that provides a lower bound on the variance of AOA estimation as a function of robot trajectory geometry, based on the Cramér-Rao Bound. This is a critical distinction from previous work on SAR, which restricts robot mobility to prescribed motion patterns, does not generalize to 3D space, and/or requires transmitting robots to be static during data acquisition periods. Our method results in more accurate AOA profiles and thus better AOA estimation, and we formally characterize this observation as the informativeness of the trajectory, a computable quantity for which we derive a closed form. All theoretical developments are substantiated by extensive simulation and hardware experiments. We also show that our formulation can be used with an off-the-shelf trajectory estimation sensor. Finally, we demonstrate the performance of our system on a multi-robot dynamic rendezvous task.
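The idea of emulating an antenna array through motion can be sketched with a simplified Bartlett-style estimator. This is an illustrative assumption, not the WSR toolbox: it is restricted to 2D, assumes a single far-field path, a static transmitter, and noise-free channel samples, and all function names are hypothetical.

```python
import cmath
import math


def simulate_channel(positions, true_angle, wavelength):
    """Ideal far-field channel samples at each receiver position (assumed model)."""
    k = 2 * math.pi / wavelength
    ux, uy = math.cos(true_angle), math.sin(true_angle)
    return [cmath.exp(1j * k * (x * ux + y * uy)) for x, y in positions]


def aoa_profile(positions, channel, wavelength, angles):
    """Signal power per candidate direction, treating the path as an array."""
    k = 2 * math.pi / wavelength
    profile = []
    for angle in angles:
        ux, uy = math.cos(angle), math.sin(angle)
        # Undo the phase expected for this direction and sum coherently.
        acc = sum(h * cmath.exp(-1j * k * (x * ux + y * uy))
                  for (x, y), h in zip(positions, channel))
        profile.append(abs(acc) ** 2)
    return profile


def estimate_aoa(positions, channel, wavelength, angles):
    """Candidate angle with the highest profile power."""
    profile = aoa_profile(positions, channel, wavelength, angles)
    best = max(range(len(angles)), key=lambda i: profile[i])
    return angles[best]


if __name__ == "__main__":
    wavelength = 0.06  # roughly 5 GHz WiFi, in meters
    # Receiver positions sampled along a small circular trajectory.
    positions = [(0.1 * math.cos(2 * math.pi * i / 40),
                  0.1 * math.sin(2 * math.pi * i / 40)) for i in range(40)]
    channel = simulate_channel(positions, math.radians(60), wavelength)
    angles = [math.radians(d) for d in range(360)]
    print(math.degrees(estimate_aoa(positions, channel, wavelength, angles)))
```

The shape of the trajectory determines how sharply the profile peaks, which is the intuition behind treating trajectory informativeness as a quantity to analyze.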

UniGrasp: Learning a Unified Model to Grasp with N-Fingered Robotic Hands

Oct 24, 2019

Abstract: To achieve a successful grasp, gripper attributes including geometry and kinematics play a role equally important to the target object geometry. The majority of previous work has focused on developing grasp methods that generalize over novel object geometry but are specific to a certain robot hand. We propose UniGrasp, an efficient data-driven grasp synthesis method that considers both the object geometry and gripper attributes as inputs. UniGrasp is based on a novel deep neural network architecture that selects sets of contact points from the input point cloud of the object. The proposed model is trained on a large dataset to produce contact points that are in force closure and reachable by the robot hand. By using contact points as output, we can transfer between a diverse set of N-fingered robotic hands. Our model produces over 90 percent valid contact points in top-10 predictions in simulation and more than 90 percent successful grasps in real-world experiments for various known two-fingered and three-fingered grippers. Our model also achieves 93 percent and 83 percent successful grasps in real-world experiments for a novel two-fingered and a novel five-fingered anthropomorphic robotic hand, respectively.
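The force-closure requirement on predicted contact points can be illustrated for the simplest case, a planar two-finger grasp with point contacts and Coulomb friction. This is the classical antipodal test, not UniGrasp's own validity check, which handles N-fingered hands; the function names and the inward-normal convention are assumptions.

```python
import math


def in_friction_cone(direction, inward_normal, mu):
    """True if `direction` lies within the friction cone around `inward_normal`."""
    dx, dy = direction
    nx, ny = inward_normal
    norm = math.hypot(dx, dy) * math.hypot(nx, ny)
    if norm == 0.0:
        return False
    cos_angle = (dx * nx + dy * ny) / norm
    # The cone half-angle is atan(mu) under Coulomb friction.
    return cos_angle >= math.cos(math.atan(mu))


def antipodal_force_closure(p1, n1, p2, n2, mu):
    """Planar two-contact test: the line between contacts lies in both cones.

    p1, p2: contact positions; n1, n2: surface normals pointing into the object.
    """
    d12 = (p2[0] - p1[0], p2[1] - p1[1])
    d21 = (-d12[0], -d12[1])
    return in_friction_cone(d12, n1, mu) and in_friction_cone(d21, n2, mu)


if __name__ == "__main__":
    # Opposing contacts on the left and right faces of a box.
    print(antipodal_force_closure((-1, 0), (1, 0), (1, 0), (-1, 0), mu=0.5))
    # Shifting one contact far off-axis pushes the line outside the cones.
    print(antipodal_force_closure((-1, 0), (1, 0), (1, 1.5), (-1, 0), mu=0.5))
```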
