Weiying Wang

MULAN-WC: Multi-Robot Localization Uncertainty-aware Active NeRF with Wireless Coordination

Mar 20, 2024

Abstract: This paper presents MULAN-WC, a novel multi-robot 3D reconstruction framework that leverages wireless signal-based coordination between robots and Neural Radiance Fields (NeRF). Our approach addresses key challenges in multi-robot 3D reconstruction, including inter-robot pose estimation, localization uncertainty quantification, and active best-next-view selection. We introduce a method that uses wireless Angle-of-Arrival (AoA) and ranging measurements to estimate relative poses between robots, and that quantifies the uncertainty of these wireless pose estimates and incorporates it into the NeRF training loss to mitigate the impact of inaccurate camera poses. Furthermore, we propose an active view selection approach that accounts for robot pose uncertainty when determining the next-best views to improve the 3D reconstruction, enabling faster convergence through intelligent view selection. Extensive experiments on both synthetic and real-world datasets demonstrate the effectiveness of our framework in theory and in practice. Leveraging wireless coordination and localization uncertainty-aware training, MULAN-WC can achieve high-quality 3D reconstruction close to that obtained with ground-truth camera poses. Furthermore, quantifying the information gain from a novel view enables consistent rendering quality improvement with incrementally captured images by commanding the robot to the novel view position. Our hardware experiments showcase the practicality of deploying MULAN-WC to real robotic systems.
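As a rough illustration of how an AoA and a range measurement together fix a relative position, the sketch below converts one range and one bearing (azimuth, optional elevation) into a Cartesian offset in the receiving robot's frame. The function name, the angle conventions, and the noise-free measurements are assumptions made for this example; the abstract does not specify the paper's estimator or its uncertainty model.

```python
import math

def relative_position(range_m, azimuth_rad, elevation_rad=0.0):
    """Convert a range and an Angle-of-Arrival into an (x, y, z) offset.

    Assumed convention (hypothetical, not from the paper): azimuth is measured
    from the receiver's x-axis in the x-y plane, elevation from that plane.
    """
    horizontal = range_m * math.cos(elevation_rad)
    x = horizontal * math.cos(azimuth_rad)
    y = horizontal * math.sin(azimuth_rad)
    z = range_m * math.sin(elevation_rad)
    return (x, y, z)

# A transmitter 2 m away, directly along the receiver's y-axis.
offset = relative_position(2.0, math.pi / 2)
print(offset)
```

In practice both measurements are noisy, so the resulting position carries an uncertainty that the abstract describes feeding into the NeRF training loss; this sketch leaves that part out.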

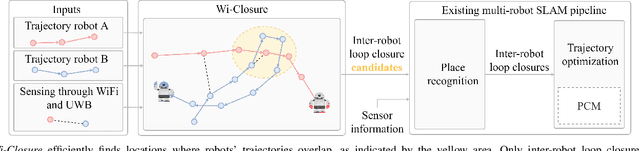

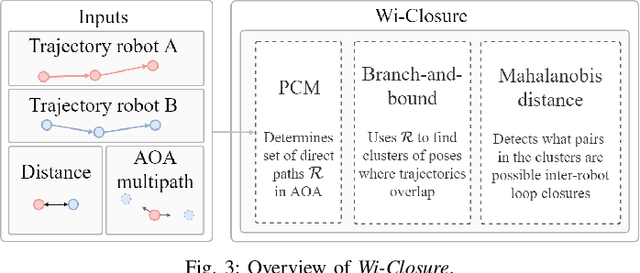

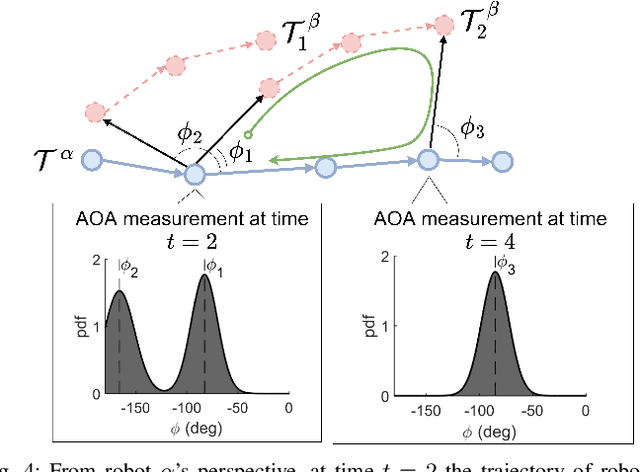

Wi-Closure: Reliable and Efficient Search of Inter-robot Loop Closures Using Wireless Sensing

Oct 04, 2022

Abstract: In this paper we propose a novel algorithm, Wi-Closure, to improve the computational efficiency and robustness of loop closure detection in multi-robot SLAM. Our approach decreases the computational overhead of classical approaches by pruning the search space of potential loop closures prior to evaluation by a typical multi-robot SLAM pipeline. Wi-Closure achieves this by identifying candidates that are spatially close to each other using sensing over the wireless communication signal between robots, even when they are operating in non-line-of-sight or in remote areas of the environment from one another. We demonstrate the validity of our approach in simulation and hardware experiments. Our results show that using Wi-Closure greatly reduces computation time, by 54% in simulation and by 77% in hardware, compared with a multi-robot SLAM baseline. Importantly, this is achieved without sacrificing accuracy. Using Wi-Closure reduces absolute trajectory estimation error by 99% in simulation and 89.2% in hardware experiments. This improvement is due in part to Wi-Closure's ability to avoid the catastrophic optimization failure that typically occurs with classical approaches in challenging repetitive environments.
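The pruning idea can be sketched as a simple distance filter: candidate keyframe pairs are kept only when a wireless-derived relative position says the two robots were close. The data layout, the names, and the fixed threshold below are hypothetical choices for illustration, not the algorithm from the paper.

```python
import math

def prune_candidates(candidates, max_distance_m):
    """Keep keyframe pairs whose wireless-estimated separation is small.

    candidates: list of (keyframe_a, keyframe_b, (dx, dy)) tuples, where
    (dx, dy) is an assumed wireless estimate of b's position relative to a.
    """
    kept = []
    for key_a, key_b, (dx, dy) in candidates:
        if math.hypot(dx, dy) <= max_distance_m:
            kept.append((key_a, key_b))
    return kept

candidates = [
    ("a0", "b3", (0.5, 0.2)),   # close: worth checking for a loop closure
    ("a1", "b7", (6.0, 1.0)),   # far apart: skipped
    ("a2", "b9", (1.0, -1.0)),  # close
]
print(prune_candidates(candidates, max_distance_m=2.0))
```

Only the surviving pairs would then be passed to the more expensive loop closure evaluation in the SLAM pipeline.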

Image Difference Captioning with Pre-training and Contrastive Learning

Feb 09, 2022

Abstract: The Image Difference Captioning (IDC) task aims to describe the visual differences between two similar images with natural language. The major challenges of this task lie in two aspects: 1) fine-grained visual differences that require learning stronger vision and language association and 2) the high cost of manual annotations, which leads to limited supervised data. To address these challenges, we propose a new modeling framework following the pre-training-finetuning paradigm. Specifically, we design three self-supervised tasks and contrastive learning strategies to align visual differences and text descriptions at a fine-grained level. Moreover, we propose a data expansion strategy to utilize extra cross-task supervision information, such as data for fine-grained image classification, to alleviate the limitation of available supervised IDC data. Extensive experiments on two IDC benchmark datasets, CLEVR-Change and Birds-to-Words, demonstrate the effectiveness of the proposed modeling framework. The code and models will be released at https://github.com/yaolinli/IDC.

Toolbox Release: A WiFi-Based Relative Bearing Sensor for Robotics

Sep 24, 2021

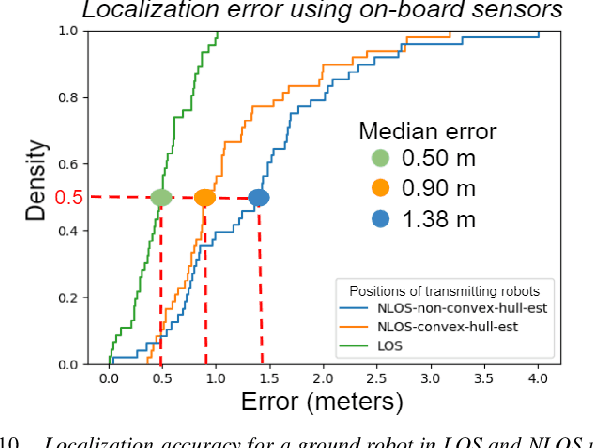

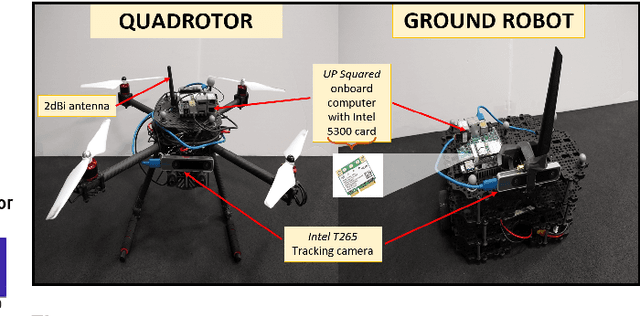

Abstract: This paper presents the WiFi-Sensor-for-Robotics (WSR) toolbox, an open-source C++ framework. It enables robots in a team to obtain relative bearing to each other, even in non-line-of-sight (NLOS) settings, which is a very challenging problem in robotics. It does so by analyzing the phase of their communicated WiFi signals as the robots traverse the environment. This capability, based on the theory developed in our prior works, is made available for the first time as an open-source tool. It is motivated by the lack of easily deployable solutions that use robots' local resources (e.g., WiFi) for sensing in NLOS. This has implications for localization, ad-hoc robot networks, and security in multi-robot teams, amongst others. The toolbox is designed for distributed and online deployment on robot platforms using commodity hardware and on-board sensors. We also release datasets demonstrating its performance in NLOS and line-of-sight (LOS) settings for a multi-robot localization use case. Empirical results show that the bearing estimation from our toolbox achieves a mean accuracy of 5.10 degrees. This leads to a median error of 0.5m and 0.9m for localization in LOS and NLOS settings respectively, in a hardware deployment in an indoor office environment.

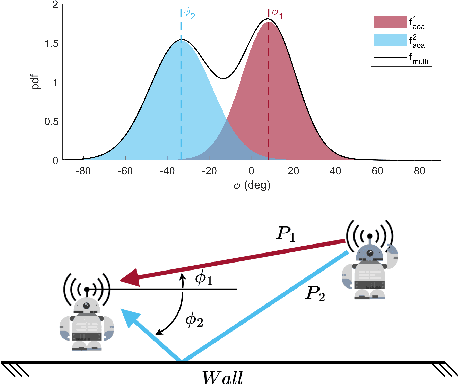

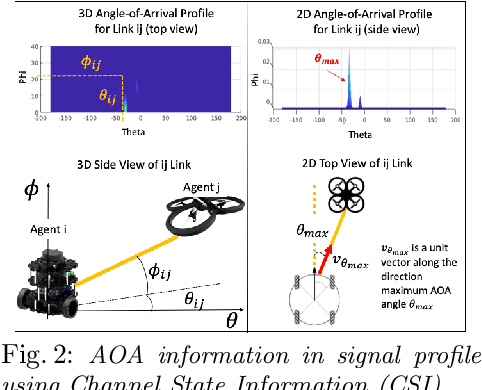

WSR: A WiFi Sensor for Collaborative Robotics

Dec 08, 2020

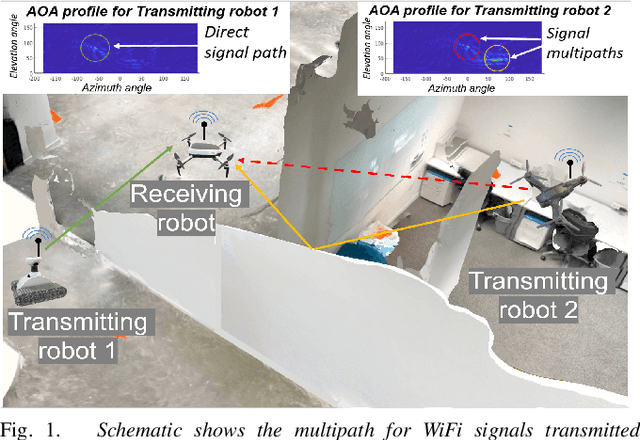

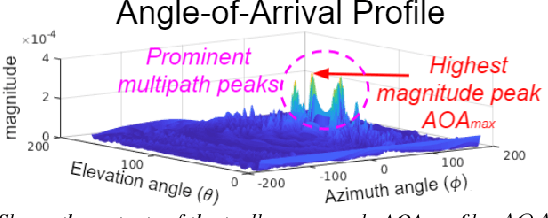

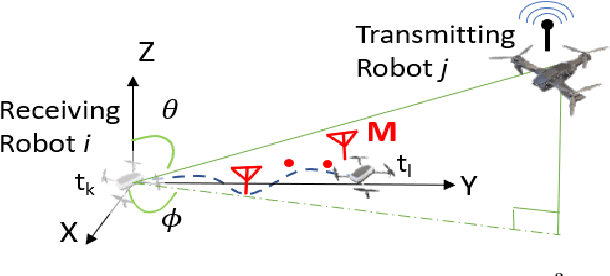

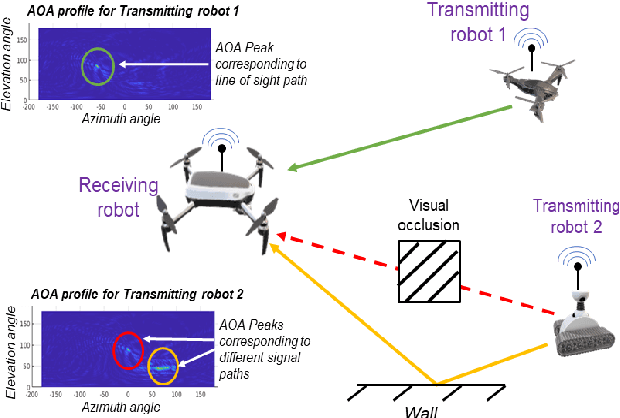

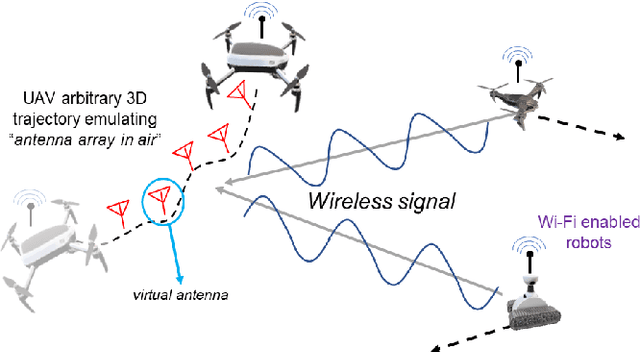

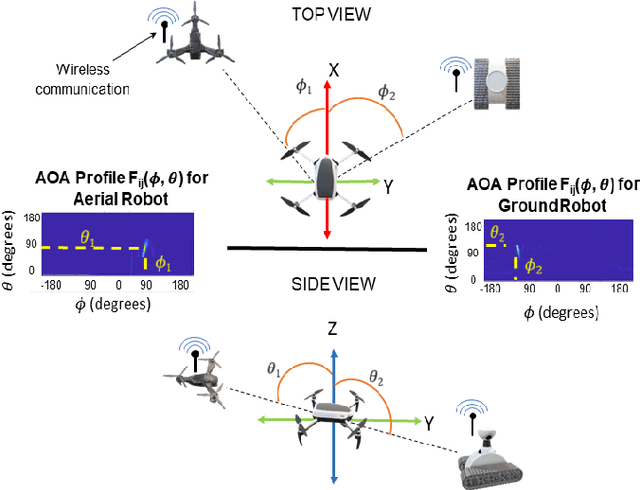

Abstract: In this paper we derive a new capability for robots to measure relative direction, or Angle-of-Arrival (AOA), to other robots operating in non-line-of-sight and unmapped environments with occlusions, without requiring external infrastructure. We do so by capturing all of the paths that a WiFi signal traverses as it travels from a transmitting to a receiving robot, which we term an AOA profile. The key intuition is to "emulate antenna arrays in the air" as the robots move in 3D space, a method akin to Synthetic Aperture Radar (SAR). The main contributions include development of i) a framework to accommodate arbitrary 3D trajectories, as well as continuous mobility of all robots, while computing AOA profiles and ii) an accompanying analysis that provides a lower bound on the variance of AOA estimation as a function of robot trajectory geometry based on the Cramér-Rao Bound. This is a critical distinction from previous work on SAR that restricts robot mobility to prescribed motion patterns, does not generalize to 3D space, and/or requires transmitting robots to be static during data acquisition periods. Our method results in more accurate AOA profiles and thus better AOA estimation, and formally characterizes this observation as the informativeness of the trajectory, a computable quantity for which we derive a closed form. All theoretical developments are substantiated by extensive simulation and hardware experiments. We also show that our formulation can be used with an off-the-shelf trajectory estimation sensor. Finally, we demonstrate the performance of our system on a multi-robot dynamic rendezvous task.
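To make the "emulated antenna array" intuition concrete, the following is a heavily simplified sketch: a receiver moving on a circle records the phase of a single far-field signal, and a Bartlett-style (delay-and-sum) estimator scans candidate azimuths for the one that aligns all phases. The 2D geometry, single noise-free path, static transmitter, assumed wavelength, and all function names are simplifications introduced here; the paper's framework handles arbitrary 3D trajectories, moving transmitters, and multipath.

```python
import cmath
import math

WAVELENGTH_M = 0.06  # roughly a 5 GHz WiFi carrier (assumed value)
WAVENUMBER = 2.0 * math.pi / WAVELENGTH_M

def simulate_channel(positions, source_azimuth_rad):
    """Noise-free phases a far-field source induces at each receiver position."""
    ux, uy = math.cos(source_azimuth_rad), math.sin(source_azimuth_rad)
    return [cmath.exp(1j * WAVENUMBER * (px * ux + py * uy)) for px, py in positions]

def aoa_profile(positions, channel, azimuths_rad):
    """Bartlett-style profile: power of the phase-aligned sum per candidate azimuth."""
    profile = []
    for az in azimuths_rad:
        ux, uy = math.cos(az), math.sin(az)
        total = sum(
            h * cmath.exp(-1j * WAVENUMBER * (px * ux + py * uy))
            for h, (px, py) in zip(channel, positions)
        )
        profile.append(abs(total) ** 2)
    return profile

# The receiver moves on a 0.3 m circle, emulating a circular antenna array.
positions = [
    (0.3 * math.cos(2 * math.pi * i / 200), 0.3 * math.sin(2 * math.pi * i / 200))
    for i in range(200)
]
channel = simulate_channel(positions, math.radians(60.0))
azimuths_deg = list(range(-180, 180))
profile = aoa_profile(positions, channel, [math.radians(a) for a in azimuths_deg])
estimated_deg = azimuths_deg[profile.index(max(profile))]
print(estimated_deg)
```

The profile peaks where the candidate direction matches the true one because only there do all per-position phase terms cancel. How sharply it peaks depends on the trajectory shape, which is the kind of dependence the abstract's "informativeness of the trajectory" refers to.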

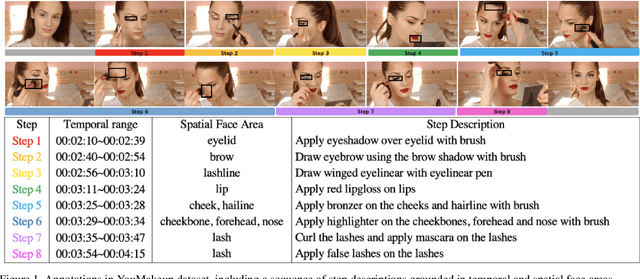

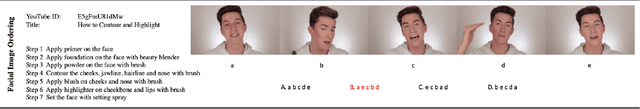

YouMakeup VQA Challenge: Towards Fine-grained Action Understanding in Domain-Specific Videos

Apr 12, 2020

Abstract: The goal of the YouMakeup VQA Challenge 2020 is to provide a common benchmark for fine-grained action understanding in domain-specific videos, e.g., makeup instructional videos. We propose two novel question-answering tasks to evaluate models' fine-grained action understanding abilities. The first task is Facial Image Ordering, which aims to understand the visual effects of different actions, expressed in natural language, on the facial object. The second task is Step Ordering, which aims to measure cross-modal semantic alignments between untrimmed videos and multi-sentence texts. In this paper, we present the challenge guidelines, the dataset used, and the performance of baseline models on the two proposed tasks. The baseline code and models are released at https://github.com/AIM3-RUC/YouMakeup_Baseline.

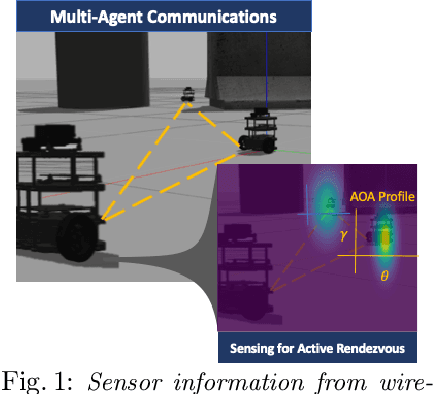

Active Rendezvous for Multi-Robot Pose Graph Optimization using Sensing over Wi-Fi

Jul 12, 2019

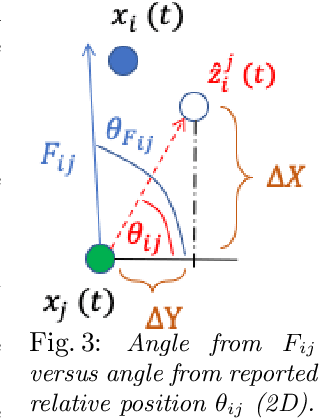

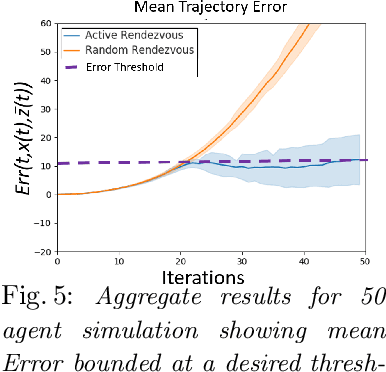

Abstract: We present a novel framework for collaboration amongst a team of robots performing Pose Graph Optimization (PGO) that addresses two important challenges for multi-robot SLAM: that of enabling information exchange "on-demand" via active rendezvous, and that of rejecting outlier measurements with high probability. Our key insight is to exploit relative position data present in the communication channel between agents as an independent measurement in PGO. We show that our algorithmic and experimental framework for integrating Channel State Information (CSI) over the communication channels with multi-agent PGO addresses the two open challenges of enabling information exchange and rejecting outliers. Our presented framework is distributed and applicable in low-lighting or featureless environments where traditional sensors often fail. We present extensive experimental results on actual robots showing both that active rendezvous reduces error by 6x compared to randomly occurring rendezvous and that CSI observations reduce ground truth pose estimation errors by 32%. These results demonstrate the promise of using a combination of multi-robot coordination and CSI to address challenges in multi-agent localization and mapping, providing an important step towards integrating communication as a novel sensor for SLAM tasks.
