Diana Zhang

Toolbox Release: A WiFi-Based Relative Bearing Sensor for Robotics

Sep 24, 2021

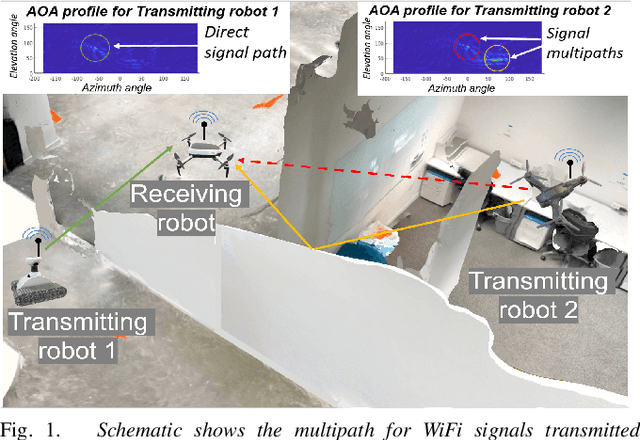

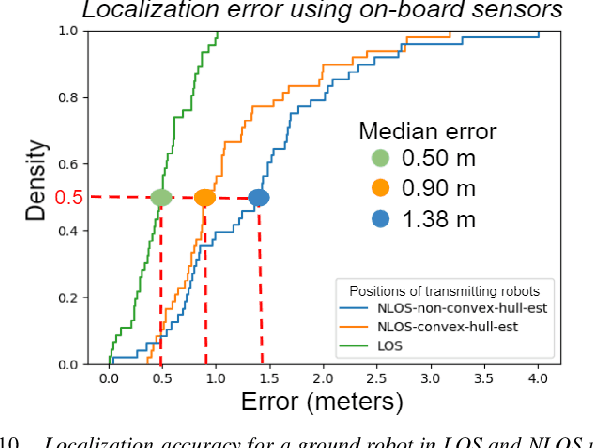

Abstract: This paper presents the WiFi-Sensor-for-Robotics (WSR) toolbox, an open-source C++ framework. It enables robots in a team to obtain relative bearing to each other, even in non-line-of-sight (NLOS) settings, which is a very challenging problem in robotics. It does so by analyzing the phase of their communicated WiFi signals as the robots traverse the environment. This capability, based on the theory developed in our prior works, is made available for the first time as an open-source tool. It is motivated by the lack of easily deployable solutions that use robots' local resources (e.g., WiFi) for sensing in NLOS. This has implications for localization, ad-hoc robot networks, and security in multi-robot teams, among others. The toolbox is designed for distributed and online deployment on robot platforms using commodity hardware and on-board sensors. We also release datasets demonstrating its performance in NLOS and line-of-sight (LOS) settings for a multi-robot localization use case. Empirical results show that the bearing estimation from our toolbox achieves mean accuracy of 5.10 degrees. This leads to a median error of 0.5 m and 0.9 m for localization in LOS and NLOS settings respectively, in a hardware deployment in an indoor office environment.

A Hybrid mmWave and Camera System for Long-Range Depth Imaging

Jun 15, 2021

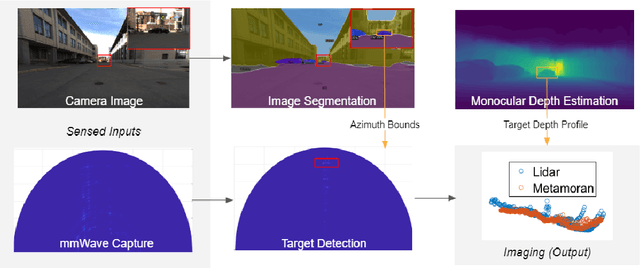

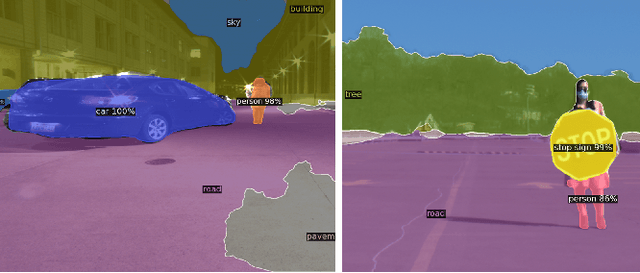

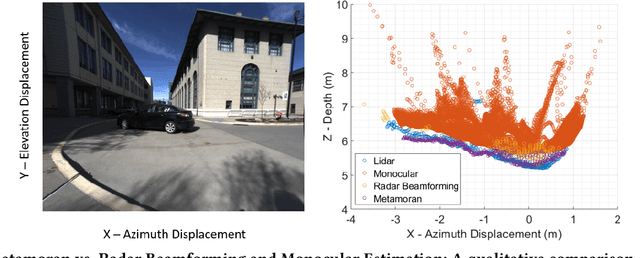

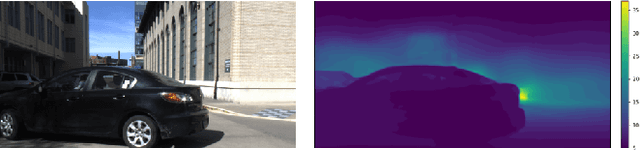

Abstract: mmWave radars offer excellent depth resolution owing to their high bandwidth at mmWave radio frequencies. Yet they suffer intrinsically from poor angular resolution, which is an order of magnitude worse than that of camera systems, and are therefore not a capable 3-D imaging solution in isolation. We propose Metamoran, a system that combines the complementary strengths of radar and camera systems to obtain depth images at high azimuthal resolutions at distances of several tens of meters with high accuracy, all from a single fixed vantage point. Metamoran enables rich long-range depth imaging outdoors with applications to roadside safety infrastructure, surveillance and wide-area mapping. Our key insight is to exploit the high azimuth resolution of cameras, using computer vision techniques including image segmentation and monocular depth estimation, to obtain object shapes and use these as priors for our novel specular beamforming algorithm. We also design this algorithm to work in cluttered environments with weak reflections and in partially occluded scenarios. We perform a detailed evaluation of Metamoran's depth imaging and sensing capabilities in 200 diverse scenes in a major U.S. city. Our evaluation shows that Metamoran estimates the depth of an object up to 60 m away with a median error of 28 cm, an improvement of 13× compared to a naive radar+camera baseline and 23× compared to monocular depth estimation.

WSR: A WiFi Sensor for Collaborative Robotics

Dec 08, 2020

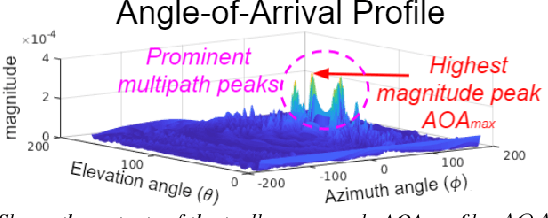

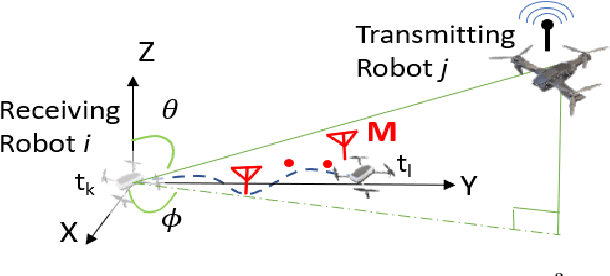

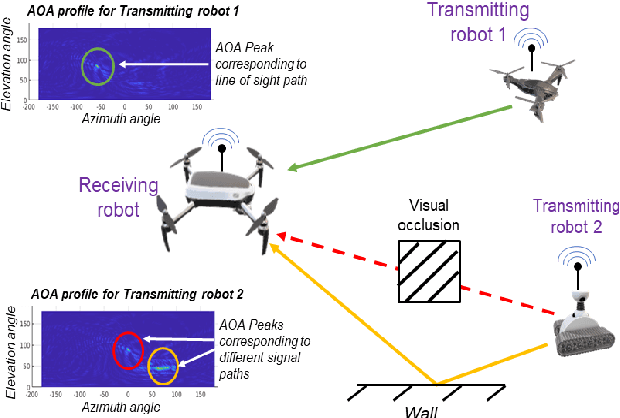

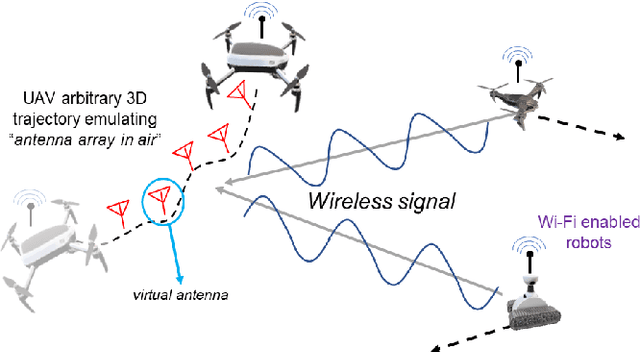

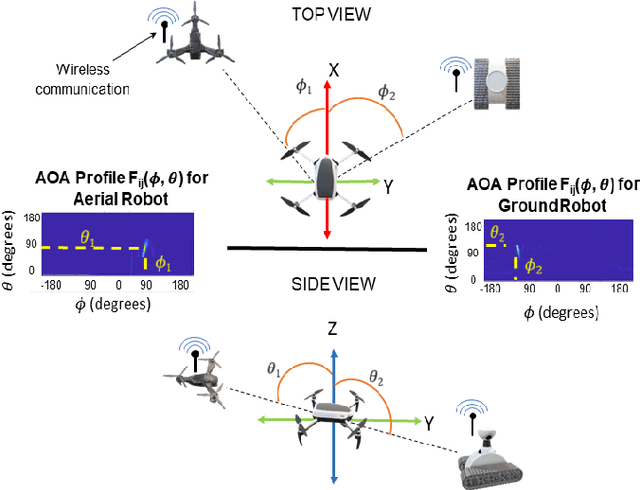

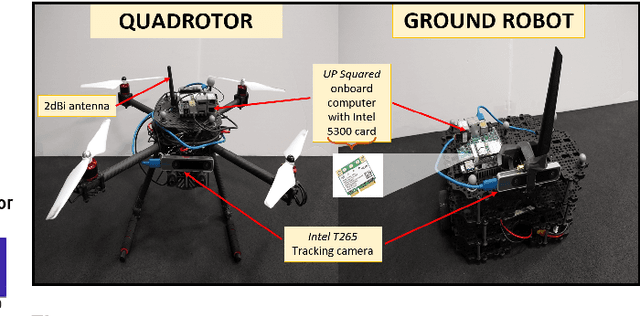

Abstract: In this paper we derive a new capability for robots to measure relative direction, or Angle-of-Arrival (AOA), to other robots operating in non-line-of-sight and unmapped environments with occlusions, without requiring external infrastructure. We do so by capturing all of the paths that a WiFi signal traverses as it travels from a transmitting to a receiving robot, which we term an AOA profile. The key intuition is to "emulate antenna arrays in the air" as the robots move in 3D space, a method akin to Synthetic Aperture Radar (SAR). The main contributions include development of i) a framework to accommodate arbitrary 3D trajectories, as well as continuous mobility of all robots, while computing AOA profiles and ii) an accompanying analysis that provides a lower bound on the variance of AOA estimation as a function of robot trajectory geometry, based on the Cramér-Rao bound. This is a critical distinction from previous work on SAR, which restricts robot mobility to prescribed motion patterns, does not generalize to 3D space, and/or requires transmitting robots to be static during data acquisition periods. Our method results in more accurate AOA profiles and thus better AOA estimation, and formally characterizes this observation as the informativeness of the trajectory, a computable quantity for which we derive a closed form. All theoretical developments are substantiated by extensive simulation and hardware experiments. We also show that our formulation can be used with an off-the-shelf trajectory estimation sensor. Finally, we demonstrate the performance of our system on a multi-robot dynamic rendezvous task.
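To make the "antenna array in the air" idea concrete, here is a minimal sketch of how an AOA profile could be computed from channel phases collected along a trajectory. It is an illustration under strong simplifying assumptions, not the paper's formulation: motion is 2D, the transmitter is static and in the far field, there is a single signal path with no noise, and the estimator is a standard Bartlett-style (delay-and-sum) beamformer. The names `simulate_channel`, `aoa_profile`, `estimate_bearing_deg` and the wavelength value are hypothetical and chosen only for this example.

```python
import cmath
import math

# Assumed carrier wavelength in metres (roughly 5 GHz WiFi); illustrative only.
WAVELENGTH = 0.06


def simulate_channel(positions, tx_xy):
    """Ideal single-path channel: the phase depends only on the tx-rx distance."""
    k = 2 * math.pi / WAVELENGTH
    return [cmath.exp(-1j * k * math.dist(p, tx_xy)) for p in positions]


def aoa_profile(positions, channel, n_angles=360):
    """Bartlett-style AOA profile over azimuth for a far-field source.

    Each receiver position acts as one element of a virtual antenna array.
    For a candidate azimuth phi, the measured phases are de-rotated by the
    phase a far-field source at phi would induce, then summed coherently.
    """
    k = 2 * math.pi / WAVELENGTH
    n = len(positions)
    profile = []
    for a in range(n_angles):
        phi = 2 * math.pi * a / n_angles
        ux, uy = math.cos(phi), math.sin(phi)
        acc = sum(
            h * cmath.exp(-1j * k * (x * ux + y * uy))
            for h, (x, y) in zip(channel, positions)
        )
        # Normalised so a perfectly coherent sum gives 1.0.
        profile.append(abs(acc) ** 2 / n ** 2)
    return profile


def estimate_bearing_deg(profile):
    """Bearing estimate: azimuth (in degrees) of the strongest profile peak."""
    best = max(range(len(profile)), key=profile.__getitem__)
    return best * 360.0 / len(profile)


if __name__ == "__main__":
    # Receiver moves on a 0.3 m circle; transmitter is 20 m away at 40 degrees.
    rx_path = [
        (0.3 * math.cos(2 * math.pi * i / 200), 0.3 * math.sin(2 * math.pi * i / 200))
        for i in range(200)
    ]
    tx = (20 * math.cos(math.radians(40)), 20 * math.sin(math.radians(40)))
    prof = aoa_profile(rx_path, simulate_channel(rx_path, tx))
    print("estimated bearing (deg):", estimate_bearing_deg(prof))
```

In a multipath setting the profile would generally show several peaks, one per path, which is why the abstract speaks of a profile rather than a single angle. Handling 3D trajectories and a moving transmitter, as the abstract describes, would require steering over elevation as well and using relative rather than absolute positions; this sketch does not attempt either.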
