Akarsh Prabhakara

Privacy-Aware Sharing of Raw Spatial Sensor Data for Cooperative Perception

Dec 18, 2025

Abstract:Cooperative perception between vehicles is poised to offer robust and reliable scene understanding. Recent experimental systems research has built testbeds that share raw spatial sensor data for cooperative perception. While this approach has markedly improved accuracy and is the natural way forward, we take a moment to consider the problems it poses for eventual adoption by automakers. In this paper, we first argue that new forms of privacy concerns arise and discourage stakeholders from sharing raw sensor data. Next, we present SHARP, a research framework to minimize privacy leakage and drive stakeholders towards the ambitious goal of raw-data-based cooperative perception. Finally, we discuss open questions for networked systems, mobile computing, and perception researchers, as well as industry and government, in realizing our proposed framework.

Towards Foundational Models for Single-Chip Radar

Sep 15, 2025

Abstract:mmWave radars are compact, inexpensive, and durable sensors that are robust to occlusions and work regardless of environmental conditions, such as weather and darkness. However, this comes at the cost of poor angular resolution, especially for inexpensive single-chip radars, which are typically used in automotive and indoor sensing applications. Although many have proposed learning-based methods to mitigate this weakness, no standardized foundational models or large datasets for the mmWave radar have emerged, and practitioners have largely trained task-specific models from scratch using relatively small datasets. In this paper, we collect (to our knowledge) the largest available raw radar dataset with 1M samples (29 hours) and train a foundational model for 4D single-chip radar, which can predict 3D occupancy and semantic segmentation with quality that is typically only possible with much higher resolution sensors. We demonstrate that our Generalizable Radar Transformer (GRT) generalizes across diverse settings, can be fine-tuned for different tasks, and shows logarithmic data scaling of 20\% per $10\times$ data. We also run extensive ablations on common design decisions, and find that using raw radar data significantly outperforms widely-used lossy representations, equivalent to a $10\times$ increase in training data. Finally, we roughly estimate that $\approx$100M samples (3000 hours) of data are required to fully exploit the potential of GRT.
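The dataset sizes quoted in the abstract above can be sanity-checked with a little arithmetic. The sketch below is not from the paper: it assumes the recording rate implied by "1M samples (29 hours)" stays constant, and the function names are hypothetical. It converts a sample count to recording hours and counts how many $10\times$ steps separate two dataset sizes.

```python
import math

# Figures quoted in the abstract: 1M samples correspond to 29 hours.
SAMPLES_COLLECTED = 1_000_000
HOURS_COLLECTED = 29.0


def hours_for(samples: int) -> float:
    """Recording hours for a sample count, assuming the same sampling rate
    as the collected dataset (an assumption, not stated in the abstract)."""
    return samples * HOURS_COLLECTED / SAMPLES_COLLECTED


def decades_between(samples_from: int, samples_to: int) -> float:
    """Number of 10x steps between two dataset sizes."""
    return math.log10(samples_to / samples_from)


if __name__ == "__main__":
    target = 100_000_000  # the ~100M-sample estimate from the abstract
    print(f"hours needed: {hours_for(target):.0f}")
    print(f"10x steps from current data: {decades_between(SAMPLES_COLLECTED, target):.1f}")
```

Under that constant-rate assumption, 100M samples come to 2900 hours, which is consistent with the abstract's rounded figure of 3000 hours, and the target sits two $10\times$ steps above the collected dataset.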

DART: Implicit Doppler Tomography for Radar Novel View Synthesis

Mar 06, 2024

Abstract:Simulation is an invaluable tool for radio-frequency system designers that enables rapid prototyping of various algorithms for imaging, target detection, classification, and tracking. However, simulating realistic radar scans is a challenging task that requires an accurate model of the scene, radio frequency material properties, and a corresponding radar synthesis function. Rather than specifying these models explicitly, we propose DART - Doppler Aided Radar Tomography, a Neural Radiance Field-inspired method which uses radar-specific physics to create a reflectance and transmittance-based rendering pipeline for range-Doppler images. We then evaluate DART by constructing a custom data collection platform and collecting a novel radar dataset together with accurate position and instantaneous velocity measurements from lidar-based localization. In comparison to state-of-the-art baselines, DART synthesizes superior radar range-Doppler images from novel views across all datasets and additionally can be used to generate high quality tomographic images.

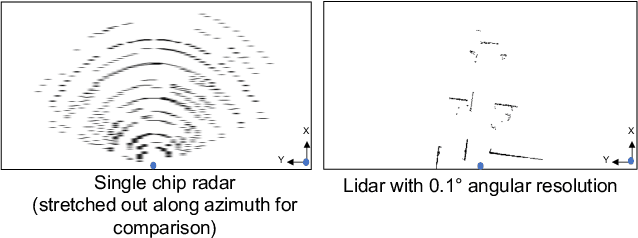

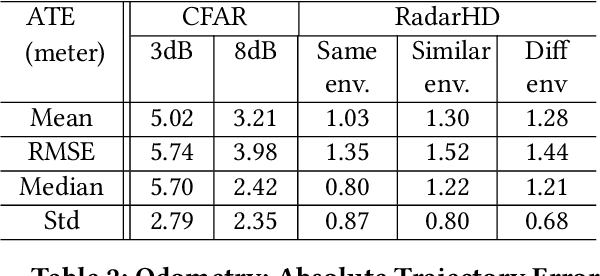

High Resolution Point Clouds from mmWave Radar

Jun 18, 2022

Abstract:This paper explores a machine learning approach for generating high resolution point clouds from a single-chip mmWave radar. Unlike lidar and vision-based systems, mmWave radar can operate in harsh environments and see through occlusions like smoke, fog, and dust. Unfortunately, current mmWave processing techniques offer poor spatial resolution compared to lidar point clouds. This paper presents RadarHD, an end-to-end neural network that constructs lidar-like point clouds from low resolution radar input. Enhancing radar images is challenging due to the presence of specular and spurious reflections. Radar data also doesn't map well to traditional image processing techniques due to the signal's sinc-like spreading pattern. We overcome these challenges by training RadarHD on a large volume of raw I/Q radar data paired with lidar point clouds across diverse indoor settings. Our experiments show the ability to generate rich point clouds even in scenes unobserved during training and in the presence of heavy smoke occlusion. Further, RadarHD's point clouds are high-quality enough to work with existing lidar odometry and mapping workflows.

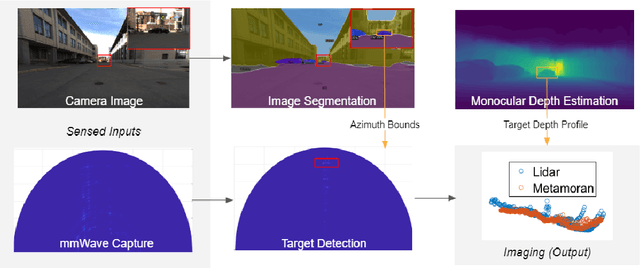

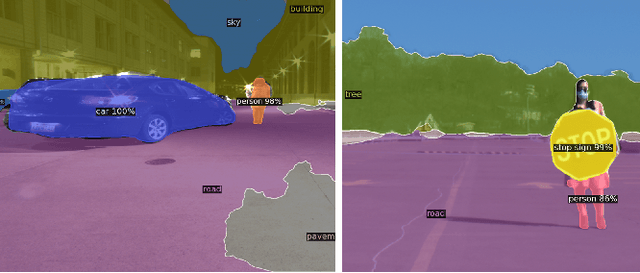

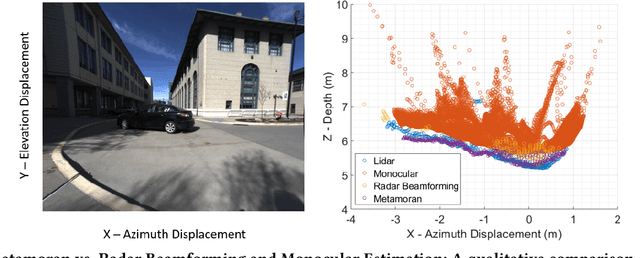

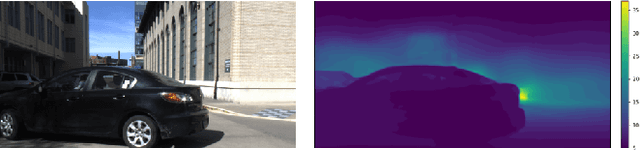

A Hybrid mmWave and Camera System for Long-Range Depth Imaging

Jun 15, 2021

Abstract:mmWave radars offer excellent depth resolution owing to their high bandwidth at mmWave radio frequencies. Yet, they suffer intrinsically from poor angular resolution, which is an order of magnitude worse than that of camera systems, and are therefore not a capable 3-D imaging solution in isolation. We propose Metamoran, a system that combines the complementary strengths of radar and camera systems to obtain depth images at high azimuthal resolutions at distances of several tens of meters with high accuracy, all from a single fixed vantage point. Metamoran enables rich long-range depth imaging outdoors with applications to roadside safety infrastructure, surveillance and wide-area mapping. Our key insight is to use the high azimuth resolution from cameras using computer vision techniques, including image segmentation and monocular depth estimation, to obtain object shapes and use these as priors for our novel specular beamforming algorithm. We also design this algorithm to work in cluttered environments with weak reflections and in partially occluded scenarios. We perform a detailed evaluation of Metamoran's depth imaging and sensing capabilities in 200 diverse scenes in a major U.S. city. Our evaluation shows that Metamoran estimates the depth of an object up to 60~m away with a median error of 28~cm, an improvement of 13$\times$ compared to a naive radar+camera baseline and 23$\times$ compared to monocular depth estimation.
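The contrast between good depth resolution and poor angular resolution can be illustrated with the standard textbook relations for FMCW radar. This is a minimal sketch, not the Metamoran pipeline: it uses the range-resolution formula $c / (2B)$ and the boresight approximation of $2/N$ radians for a uniform array of $N$ virtual antennas at half-wavelength spacing. The 4 GHz bandwidth and 8 virtual antennas are assumed values chosen for illustration and do not come from the abstract.

```python
C = 299_792_458.0  # speed of light in m/s


def range_resolution_m(bandwidth_hz: float) -> float:
    """FMCW range (depth) resolution: c / (2 * B)."""
    return C / (2.0 * bandwidth_hz)


def angular_resolution_rad(num_virtual_antennas: int) -> float:
    """Approximate boresight angular resolution of a uniform linear array
    with half-wavelength element spacing: about 2 / N radians."""
    return 2.0 / num_virtual_antennas


def cross_range_m(distance_m: float, num_virtual_antennas: int) -> float:
    """Width of one angular resolution cell at a given distance
    (arc-length approximation)."""
    return distance_m * angular_resolution_rad(num_virtual_antennas)


if __name__ == "__main__":
    bandwidth_hz = 4e9   # assumed sweep bandwidth (illustrative)
    n_antennas = 8       # assumed virtual antenna count (illustrative)
    distance_m = 60.0    # the maximum object distance quoted in the abstract

    print(f"range resolution: {range_resolution_m(bandwidth_hz) * 100:.2f} cm")
    print(f"cross-range cell at {distance_m:.0f} m: "
          f"{cross_range_m(distance_m, n_antennas):.1f} m")
```

With these assumed parameters the depth cell is a few centimeters while the angular cell at 60 m spans many meters, which is the gap that fusing camera-derived shape priors is meant to close.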
