Sirajum Munir

Decoding Psychological States Through Movement: Inferring Human Kinesic Functions with Application to Built Environments

Jan 23, 2026

Abstract: Social infrastructure and other built environments are increasingly expected to support well-being and community resilience by enabling social interaction. Yet civil and built-environment research has no consistent, privacy-preserving way to represent and measure socially meaningful interaction in these spaces. As a result, studies operationalize "interaction" differently across contexts, and practitioners have limited ability to evaluate whether design interventions change the forms of interaction that social capital theory predicts should matter. To address this field-level and methodological gap, we introduce the Dyadic User Engagement DataseT (DUET) and an embedded kinesics recognition framework that operationalize Ekman and Friesen's kinesics taxonomy as a function-level interaction vocabulary aligned with social capital-relevant behaviors (e.g., reciprocity and attention coordination). DUET captures 12 dyadic interactions spanning all five kinesic functions (emblems, illustrators, affect displays, adaptors, and regulators) across four sensing modalities and three built-environment contexts, enabling privacy-preserving analysis of communicative intent through movement. Benchmarking six open-source, state-of-the-art human activity recognition models quantifies the difficulty of communicative-function recognition on DUET and highlights the limitations of ubiquitous monadic, action-level recognition when extended to dyadic, socially grounded interaction measurement. Building on DUET, our recognition framework infers communicative function directly from privacy-preserving skeletal motion without handcrafted action-to-function dictionaries. Using a transfer-learning architecture, it reveals structured clustering of kinesic functions and a strong association between representation quality and classification performance, while generalizing across subjects and contexts.

Data Distribution Dynamics in Real-World WiFi-Based Patient Activity Monitoring for Home Healthcare

Feb 03, 2024

Abstract: This paper examines the application of WiFi signals for real-world monitoring of daily activities in home healthcare scenarios. While the state of the art in WiFi-based activity recognition is promising in lab environments, challenges arise in real-world settings because environmental, subject, and system configuration variables affect accuracy and adaptability. The research involved deploying systems in various settings and analyzing the resulting data shifts. It aims to guide the realistic development of robust, context-aware WiFi sensing systems for elderly care. The findings suggest a shift in WiFi-based activity sensing that bridges the gap between academic research and practical applications and enhances quality of life through technology.

CarFi: Rider Localization Using Wi-Fi CSI

Dec 21, 2022

Abstract: With the rise of ride-hailing services, people increasingly rely on shared-mobility drivers (e.g., Uber, Lyft) to pick them up. However, drivers and riders have difficulty finding each other in urban areas where GPS signals are blocked by skyscrapers, in crowded environments (e.g., stadiums, airports, and bars), at night, and in bad weather. This wastes their time, creates a bad user experience, and causes more CO2 emissions due to idle driving. In this work, we explore the potential of Wi-Fi to help drivers determine which side of the street the rider is on. Our proposed system, CarFi, uses Wi-Fi CSI from two antennas placed inside a moving vehicle and a data-driven technique to determine the rider's street side. Using real-world data collected in realistic and challenging settings, in which the signal is blocked by other people and parked cars, we find that CarFi is 95.44% accurate in rider-side determination in both line-of-sight (LoS) and non-line-of-sight (nLoS) conditions, and that it can run on an embedded GPU in real time.

A Hybrid mmWave and Camera System for Long-Range Depth Imaging

Jun 15, 2021

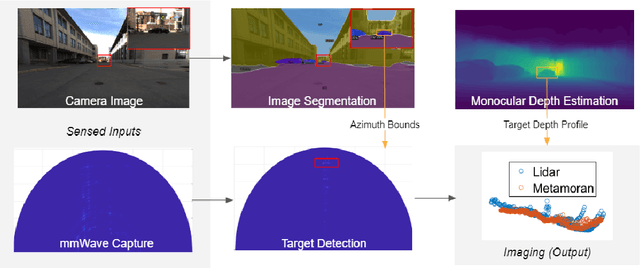

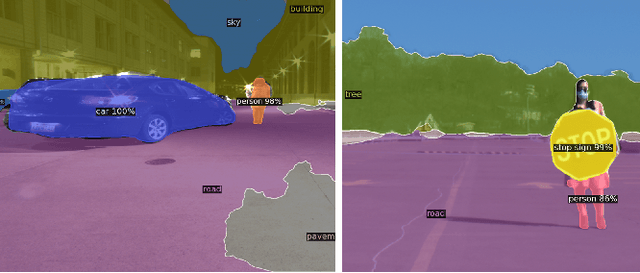

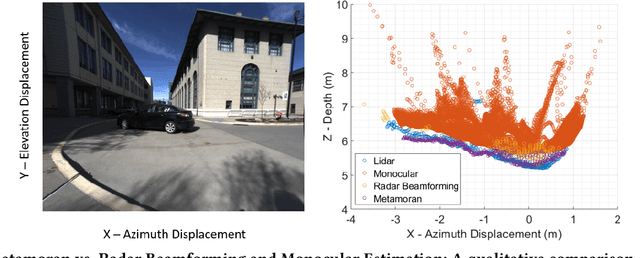

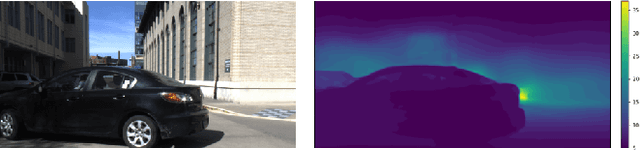

Abstract: mmWave radars offer excellent depth resolution owing to their high bandwidth at mmWave radio frequencies. Yet they suffer intrinsically from poor angular resolution, which is an order of magnitude worse than that of camera systems, and are therefore not a capable 3-D imaging solution in isolation. We propose Metamoran, a system that combines the complementary strengths of radar and camera systems to obtain depth images at high azimuthal resolution at distances of several tens of meters with high accuracy, all from a single fixed vantage point. Metamoran enables rich long-range depth imaging outdoors, with applications to roadside safety infrastructure, surveillance, and wide-area mapping. Our key insight is to exploit the high azimuth resolution of cameras, using computer vision techniques including image segmentation and monocular depth estimation, to obtain object shapes and use these as priors for our novel specular beamforming algorithm. We also design this algorithm to work in cluttered environments with weak reflections and in partially occluded scenarios. We perform a detailed evaluation of Metamoran's depth imaging and sensing capabilities in 200 diverse scenes in a major U.S. city. Our evaluation shows that Metamoran estimates the depth of an object up to 60 m away with a median error of 28 cm, an improvement of 13× compared to a naive radar+camera baseline and 23× compared to monocular depth estimation.
