Anup Pillai

Receptivity of an AI Cognitive Assistant by the Radiology Community: A Report on Data Collected at RSNA

Sep 13, 2020

Abstract: Due to advances in machine learning and artificial intelligence (AI), a new role is emerging for machines as intelligent assistants to radiologists in their clinical workflows. But what systematic clinical thought processes are these machines using? Are they similar enough to those of radiologists to be trusted as assistants? A live demonstration of such a technology was conducted at the 2016 Scientific Assembly and Annual Meeting of the Radiological Society of North America (RSNA). The demonstration was presented in the form of a question-answering system that took a radiology multiple-choice question and a medical image as inputs. The AI system then demonstrated a cognitive workflow, involving text analysis, image analysis, and reasoning, to process the question and generate the most probable answer. A post-demonstration survey was made available to the participants who experienced the demo and tested the question-answering system. Of the reported 54,037 meeting registrants, 2,927 visited the demonstration booth, 1,991 experienced the demo, and 1,025 completed a post-demonstration survey. In this paper, the methodology of the survey is shown and a summary of its results is presented. The results of the survey show a very high level of receptiveness to cognitive computing technology and artificial intelligence among radiologists.

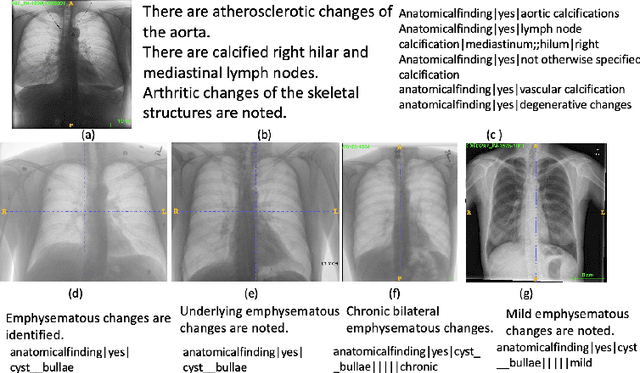

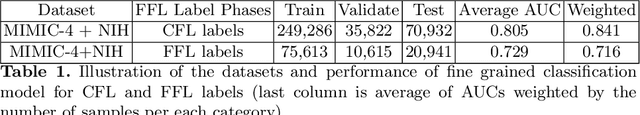

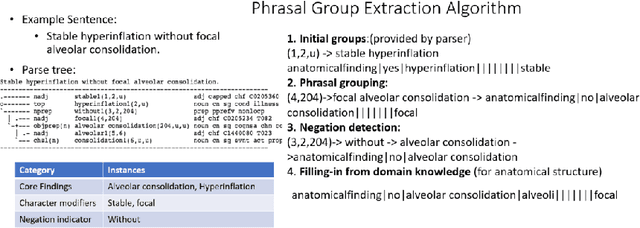

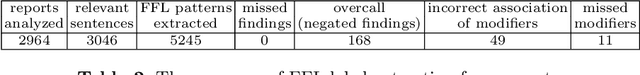

Chest X-ray Report Generation through Fine-Grained Label Learning

Jul 27, 2020

Abstract: Obtaining automated preliminary read reports for common exams such as chest X-rays will expedite clinical workflows and improve operational efficiencies in hospitals. However, the quality of reports generated by current automated approaches is not yet clinically acceptable, as they can neither ensure the correct detection of a broad spectrum of radiographic findings nor describe them accurately in terms of laterality, anatomical location, severity, etc. In this work, we present a domain-aware automatic chest X-ray radiology report generation algorithm that learns fine-grained descriptions of findings from images and uses their pattern of occurrences to retrieve and customize similar reports from a large report database. We also develop an automatic labeling algorithm for assigning such descriptors to images and build a novel deep learning network that recognizes both coarse and fine-grained descriptions of findings. The resulting report generation algorithm significantly outperforms the state of the art using established score metrics.

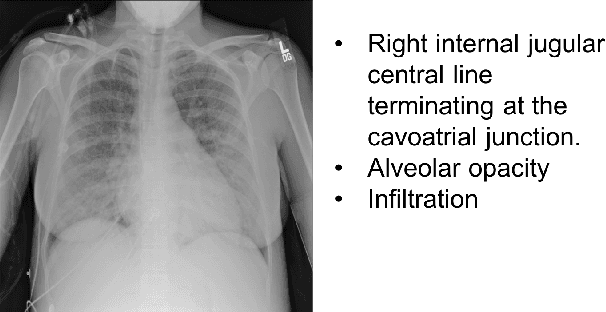

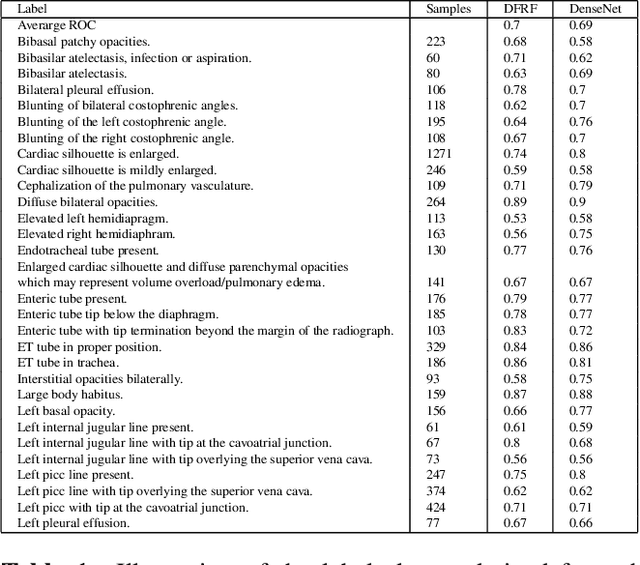

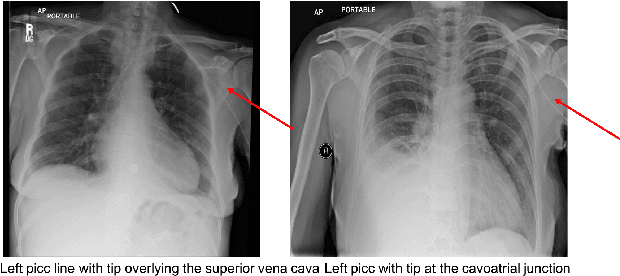

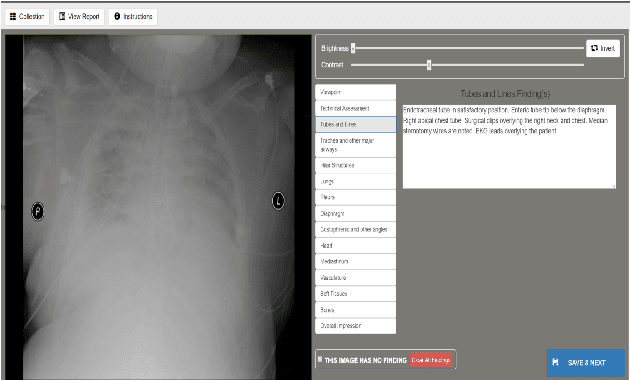

Building a Benchmark Dataset and Classifiers for Sentence-Level Findings in AP Chest X-rays

Jun 21, 2019

Abstract: Chest X-rays are the most common diagnostic exams in emergency rooms and hospitals. There has been a surge of work on automatic interpretation of chest X-rays using deep learning approaches following the release of a large open-source chest X-ray dataset from the NIH. However, the labels are not sufficiently rich and descriptive for training classification tools. Further, the dataset does not adequately address the findings seen in chest X-rays taken in the anterior-posterior (AP) view, which also depict the placement of devices such as central vascular lines and tubes. In this paper, we present a new chest X-ray benchmark database of 73 rich sentence-level descriptors of findings seen in AP chest X-rays. We describe our method of obtaining these findings through a semi-automated ground truth generation process from crowdsourcing of clinician annotations. We also present results of building classifiers for these findings, which show that such higher-granularity labels can also be learned through the framework of deep learning classifiers.
