Karthik Sheshadri

Building a Benchmark Dataset and Classifiers for Sentence-Level Findings in AP Chest X-rays

Jun 21, 2019

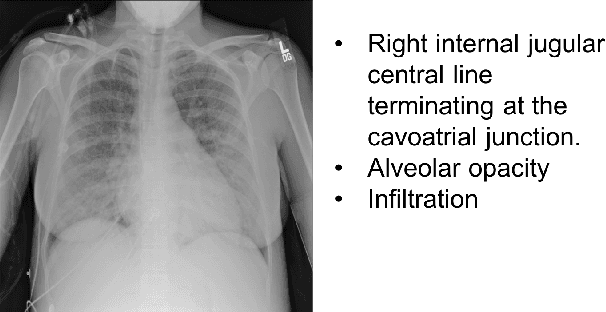

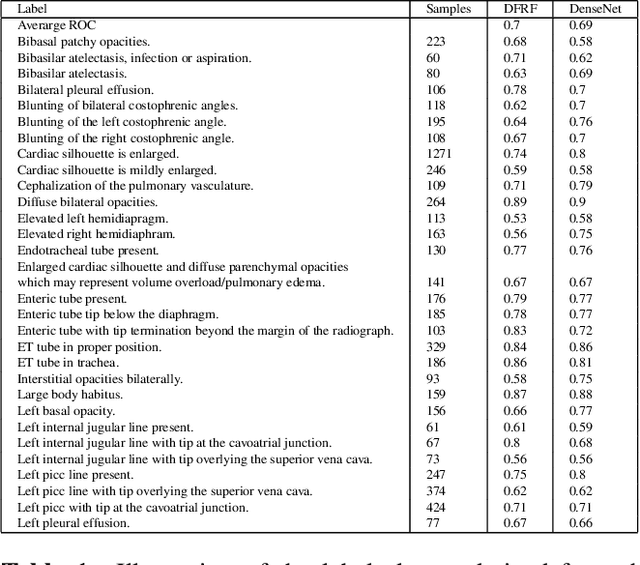

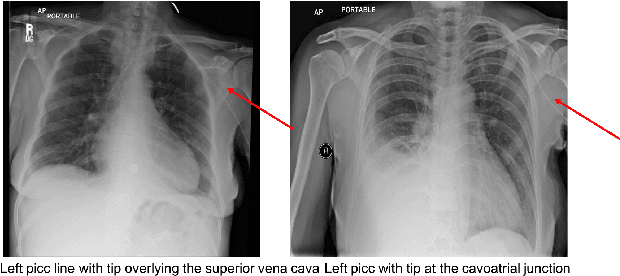

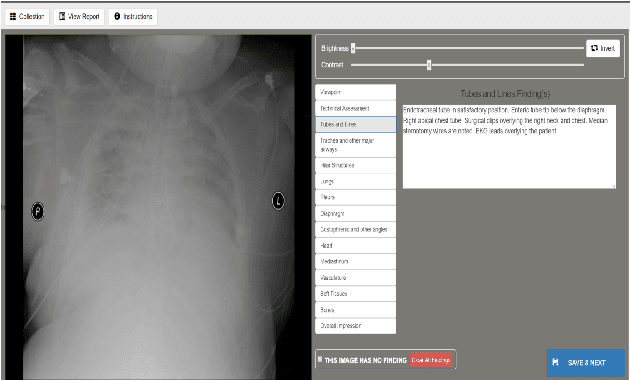

Abstract: Chest X-rays are the most common diagnostic exams in emergency rooms and hospitals. Work on automatic interpretation of chest X-rays using deep learning has surged since the release of a large open-source chest X-ray dataset from the NIH. However, the labels in that dataset are not sufficiently rich and descriptive for training classification tools. Further, the dataset does not adequately address the findings seen in chest X-rays taken in the anterior-posterior (AP) view, which also depict the placement of devices such as central vascular lines and tubes. In this paper, we present a new chest X-ray benchmark database of 73 rich sentence-level descriptors of findings seen in AP chest X-rays. We describe our method of obtaining these findings through a semi-automated ground truth generation process based on crowdsourced clinician annotations. We also present results of building classifiers for these findings, which show that such higher-granularity labels can also be learned within the framework of deep learning classifiers.
