Zun Li

Code World Models for General Game Playing

Oct 06, 2025

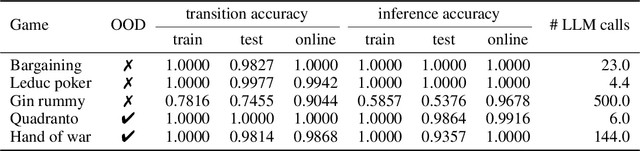

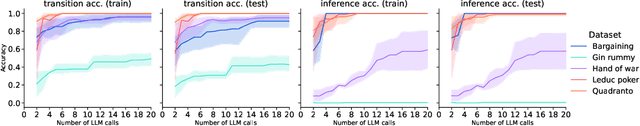

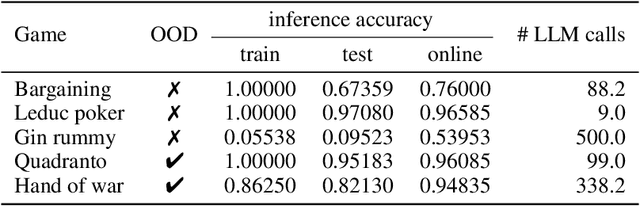

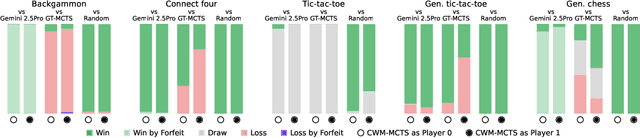

Abstract: Large Language Models' (LLMs') reasoning abilities are increasingly being applied to classical board and card games, but the dominant approach, which prompts the model to generate moves directly, has significant drawbacks. It relies on the model's implicit and fragile pattern-matching capabilities, leading to frequent illegal moves and strategically shallow play. Here we introduce an alternative approach: we use the LLM to translate natural-language rules and game trajectories into a formal, executable world model represented as Python code. This generated code world model (CWM), comprising functions for state transition, legal move enumeration, and termination checks, serves as a verifiable simulation engine for high-performance planning algorithms such as Monte Carlo tree search (MCTS). In addition, we prompt the LLM to generate heuristic value functions (to make MCTS more efficient) and inference functions (to estimate hidden states in imperfect-information games). Our method offers three distinct advantages over using the LLM directly as a policy: (1) Verifiability: the generated CWM serves as a formal specification of the game's rules, allowing planners to algorithmically enumerate valid actions and avoid illegal moves, contingent on the correctness of the synthesized model; (2) Strategic Depth: we combine the LLM's semantic understanding with the deep search power of classical planners; and (3) Generalization: we direct the LLM to focus on the meta-task of data-to-code translation, enabling it to adapt to new games more easily. We evaluate our agent on 10 different games, 4 of which are novel and were created for this paper. Five of the games are fully observed (perfect information), and five are partially observed (imperfect information). We find that our method outperforms or matches Gemini 2.5 Pro in 9 of the 10 games considered.
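
As a rough illustration of how a planner consumes a code world model, the sketch below hand-writes a tiny CWM for a hypothetical subtraction game (players alternately take 1 to 3 stones; whoever takes the last stone wins) and runs plain UCT-style MCTS with random rollouts on top of it. The game, the function names `legal_actions`, `apply_action`, `is_terminal`, and `returns`, and the `mcts` helper are all assumptions made for illustration. They are not the paper's interface, the functions are written by hand rather than synthesized by an LLM, and the LLM-generated value and inference functions are omitted.

```python
from __future__ import annotations

import math
import random
from dataclasses import dataclass, field


# --- Hypothetical hand-written "code world model" for a toy game (not from the paper) ---
@dataclass(frozen=True)
class State:
    stones: int
    player: int  # 0 or 1: the player to move


def legal_actions(s: State) -> list[int]:
    # Legal move enumeration: take 1, 2, or 3 stones, never more than remain.
    return [k for k in (1, 2, 3) if k <= s.stones]


def apply_action(s: State, a: int) -> State:
    # State transition: remove stones and pass the turn.
    return State(s.stones - a, 1 - s.player)


def is_terminal(s: State) -> bool:
    return s.stones == 0


def returns(s: State) -> tuple[float, float]:
    # The player who just moved took the last stone and wins.
    winner = 1 - s.player
    return (1.0, -1.0) if winner == 0 else (-1.0, 1.0)


# --- Generic UCT planner that only touches the world-model functions above ---
@dataclass
class Node:
    state: State
    parent: Node | None = None
    action: int | None = None
    children: list[Node] = field(default_factory=list)
    untried: list[int] = field(default_factory=list)
    visits: int = 0
    value: float = 0.0  # summed return for the player who moved INTO this node


def mcts(root_state: State, iterations: int, rng: random.Random, c: float = 1.4) -> int:
    root = Node(root_state, untried=legal_actions(root_state))
    for _ in range(iterations):
        node = root
        # 1. Selection: follow UCB1 while the node is fully expanded and non-terminal.
        while not node.untried and node.children:
            node = max(node.children, key=lambda ch: ch.value / ch.visits
                       + c * math.sqrt(math.log(node.visits) / ch.visits))
        # 2. Expansion: add one untried child.
        if node.untried:
            a = node.untried.pop(rng.randrange(len(node.untried)))
            child_state = apply_action(node.state, a)
            child = Node(child_state, parent=node, action=a,
                         untried=legal_actions(child_state))
            node.children.append(child)
            node = child
        # 3. Simulation: uniformly random rollout; only legal moves are ever sampled.
        s = node.state
        while not is_terminal(s):
            s = apply_action(s, rng.choice(legal_actions(s)))
        ret = returns(s)
        # 4. Backpropagation: credit each node from its parent's mover's perspective.
        while node is not None:
            node.visits += 1
            if node.parent is not None:
                node.value += ret[node.parent.state.player]
            node = node.parent
    # Recommend the most-visited root action.
    return max(root.children, key=lambda ch: ch.visits).action
```

For example, `mcts(State(5, 0), 2000, random.Random(0))` should recommend taking 1 stone, leaving the opponent facing a multiple of four. The planner never reads the rules in natural language; legality comes entirely from `legal_actions`, which is why the approach is only as reliable as the synthesized model.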

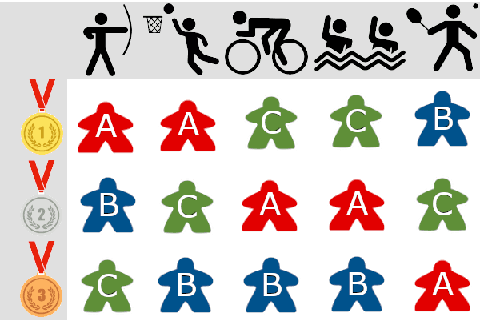

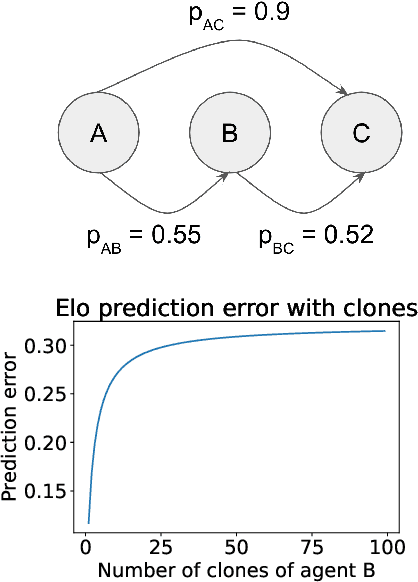

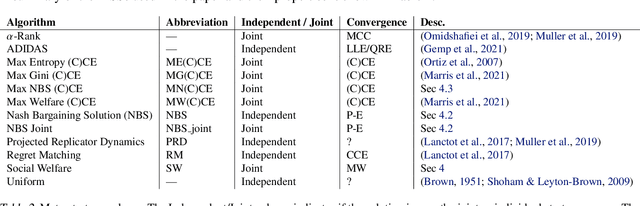

Evaluating Agents using Social Choice Theory

Dec 07, 2023

Abstract: We argue that many general evaluation problems can be viewed through the lens of voting theory. Each task is interpreted as a separate voter, and the approach requires only ordinal rankings or pairwise comparisons of agents to produce an overall evaluation. By viewing the aggregator as a social welfare function, we are able to leverage centuries of research in social choice theory to derive principled evaluation frameworks with axiomatic foundations. These evaluations are interpretable and flexible, while avoiding many of the problems currently facing cross-task evaluation. We apply this Voting-as-Evaluation (VasE) framework across multiple settings, including reinforcement learning, large language models, and humans. In practice, we observe that VasE can be more robust than popular evaluation frameworks (Elo and Nash averaging), can discover properties in the evaluation data that are not evident from scores alone, and can predict outcomes better than Elo in a complex seven-player game. We identify one particular approach, maximal lotteries, that satisfies important consistency properties relevant to evaluation, is computationally efficient (polynomial in the size of the evaluation data), and identifies game-theoretic cycles.
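
A maximal lottery is a mixed strategy that is an equilibrium of the symmetric zero-sum game whose payoff matrix is the pairwise majority margin matrix between candidates. The sketch below, which is an illustration and not the paper's implementation, treats each task's ranking of agents as one ballot, builds that margin matrix, and approximates the lottery with Hedge (multiplicative-weights) self-play, returning the average iterate. Hedge is a swapped-in approximation chosen to stay dependency-free; an exact computation would solve a linear program. The helper names, the step size `eta`, and the iteration count are assumptions.

```python
import math


def margin_matrix(candidates, rankings):
    """M[i][j] = (#ballots ranking i above j - #ballots ranking j above i) / #ballots.

    Each ranking (one per task, i.e. one per voter) lists candidates best to worst."""
    n = len(candidates)
    idx = {c: k for k, c in enumerate(candidates)}
    m = [[0.0] * n for _ in range(n)]
    for ranking in rankings:
        pos = {c: r for r, c in enumerate(ranking)}
        for a in candidates:
            for b in candidates:
                if a != b and pos[a] < pos[b]:
                    m[idx[a]][idx[b]] += 1.0
                    m[idx[b]][idx[a]] -= 1.0
    return [[x / len(rankings) for x in row] for row in m]


def approx_maximal_lottery(m, iterations=5000, eta=0.05):
    """Approximate an equilibrium of the symmetric zero-sum game with payoff m
    by Hedge self-play; the time-averaged strategy is the returned lottery."""
    n = len(m)
    x = [1.0 / n] * n
    avg = [0.0] * n
    for _ in range(iterations):
        for k in range(n):
            avg[k] += x[k] / iterations
        # Expected margin of each pure candidate against the current mixture.
        payoff = [sum(m[i][j] * x[j] for j in range(n)) for i in range(n)]
        w = [x[i] * math.exp(eta * payoff[i]) for i in range(n)]
        total = sum(w)
        x = [wi / total for wi in w]
    return avg
```

With three cyclic ballots (A>B>C, B>C>A, C>A>B) the lottery is uniform, reflecting the game-theoretic cycle, whereas if every task ranks A>B>C almost all of the probability mass goes to the Condorcet winner A.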

Knowledge Augmented Relation Inference for Group Activity Recognition

Mar 01, 2023

Abstract: Most existing group activity recognition methods construct spatial-temporal relations based only on visual representations. Some methods introduce extra knowledge, such as action labels, to build semantic relations and use them to refine the visual representation. However, the knowledge these methods explore remains at the semantic level, which is insufficient for achieving high accuracy. In this paper, we propose to exploit knowledge concretization for group activity recognition, and develop a novel Knowledge Augmented Relation Inference framework that can effectively use the concretized knowledge to improve individual representations. Specifically, the framework consists of a Visual Representation Module that extracts individual appearance features, a Knowledge Augmented Semantic Relation Module that explores semantic representations of individual actions, and a Knowledge-Semantic-Visual Interaction Module that integrates visual and semantic information using the knowledge. With these modules, the proposed framework can use knowledge to enhance both the relation inference process and the individual representations, thereby improving group activity recognition performance. Experimental results on two public datasets show that the proposed framework achieves competitive performance compared with state-of-the-art methods.

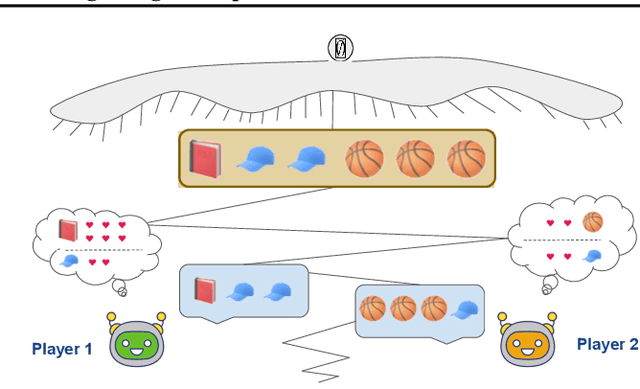

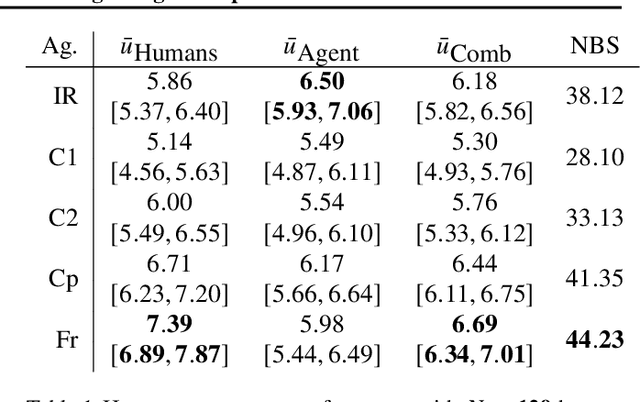

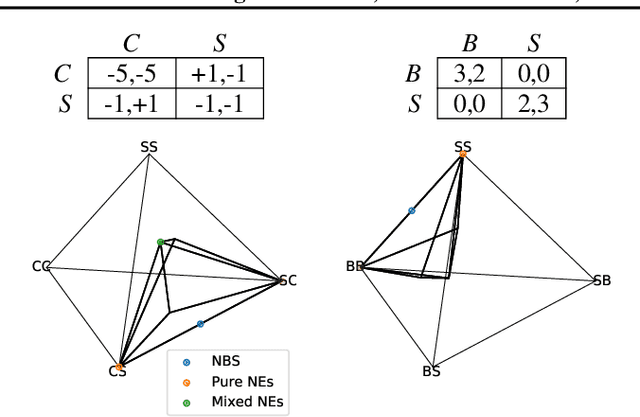

Combining Tree-Search, Generative Models, and Nash Bargaining Concepts in Game-Theoretic Reinforcement Learning

Feb 01, 2023

Abstract: Multiagent reinforcement learning (MARL) has benefited significantly from population-based and game-theoretic training regimes. One approach, Policy-Space Response Oracles (PSRO), employs standard reinforcement learning to compute response policies via approximate best responses and combines them via meta-strategy selection. We augment PSRO by adding a novel search procedure with generative sampling of world states, and introduce two new meta-strategy solvers based on the Nash bargaining solution. We evaluate PSRO's ability to compute approximate Nash equilibria and its performance in two negotiation games: Colored Trails and Deal or No Deal. We conduct behavioral studies in which human participants negotiate with our agents (N = 346). We find that search with generative modeling finds stronger policies at both training time and test time, enables online Bayesian co-player prediction, and can produce agents that, when negotiating with humans, achieve social welfare comparable to that of humans trading among themselves.
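
The Nash bargaining solution selects the agreement that maximizes the product of each player's gain over their disagreement payoff. In the paper it underlies meta-strategy solvers over PSRO policy populations; the sketch below only illustrates the bargaining criterion itself on a hypothetical Deal-or-No-Deal-style split, with item counts and private valuations invented for illustration. It maximizes the Nash product over a discrete set of deals, a simplification of the usual definition over a convex feasible set, and all helper names are assumptions.

```python
from itertools import product


def nash_bargaining_outcome(outcomes, u1, u2, d1=0.0, d2=0.0):
    """Return the outcome maximizing the Nash product (u1 - d1) * (u2 - d2)
    among outcomes giving both players at least their disagreement payoffs."""
    feasible = [o for o in outcomes if u1(o) >= d1 and u2(o) >= d2]
    if not feasible:
        return None
    return max(feasible, key=lambda o: (u1(o) - d1) * (u2(o) - d2))


# Hypothetical instance: three item types; an outcome (a, b, c) is how many of
# each type player 1 receives, and player 2 receives the remainder.
COUNTS = (1, 2, 2)
V1 = (6, 2, 0)  # player 1's private value per item (made up)
V2 = (0, 2, 3)  # player 2's private value per item (made up)


def utility(values, bundle):
    return sum(v * k for v, k in zip(values, bundle))


def u1(o):
    return utility(V1, o)


def u2(o):
    return utility(V2, [n - k for n, k in zip(COUNTS, o)])


# Every way of splitting the items; "no deal" corresponds to d1 = d2 = 0.
OUTCOMES = list(product(*(range(n + 1) for n in COUNTS)))
```

Here `nash_bargaining_outcome(OUTCOMES, u1, u2)` gives player 1 the book-like first item and one of the second type, and gives player 2 everything else: each player gets 8, for a Nash product of 64. Maximizing the product rather than the sum rules out lopsided splits even when they have equal total welfare.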

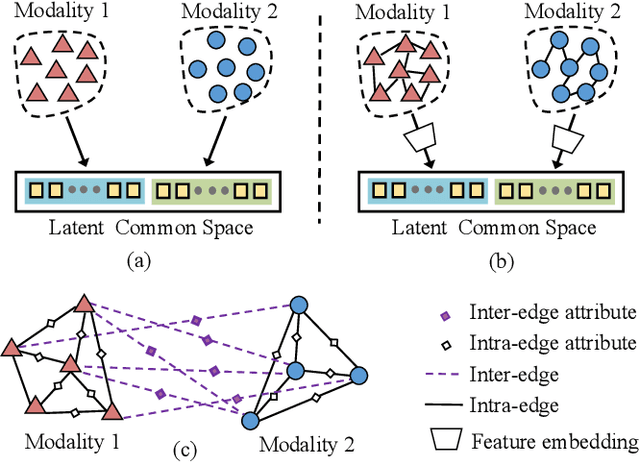

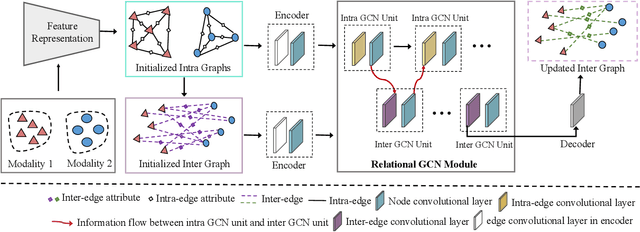

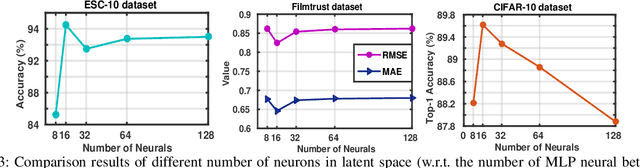

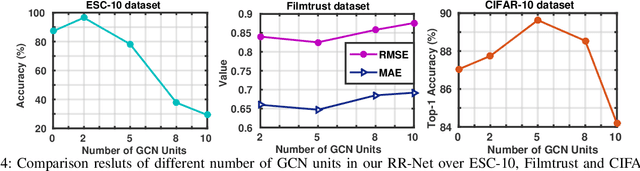

A Universal Model for Cross Modality Mapping by Relational Reasoning

Feb 26, 2021

Abstract: With the aim of matching pairs of instances from two different modalities, cross-modality mapping has attracted growing attention in the computer vision community. Existing methods usually formulate the mapping function as a similarity measure between the pair of instance features, which are embedded in a common space. However, we observe that the relationships among instances within a single modality (intra relations) and those between pairs of heterogeneous instances (inter relations) are insufficiently explored in previous approaches. Motivated by this, we redefine the mapping function with relational reasoning via graph modeling, and further propose a GCN-based Relational Reasoning Network (RR-Net) in which inter and intra relations are efficiently computed to universally resolve the cross-modality mapping problem. Concretely, we first construct two kinds of graphs, an Intra Graph and an Inter Graph, to model intra relations and inter relations respectively. RR-Net then iteratively updates all node and edge features to learn intra and inter relations simultaneously. Finally, RR-Net outputs probabilities over the edges that link pairs of heterogeneous instances to estimate the mapping results. Extensive experiments on three example tasks, namely image classification, social recommendation, and sound recognition, demonstrate the superiority and universality of the proposed model.

Multi-Miner: Object-Adaptive Region Mining for Weakly-Supervised Semantic Segmentation

Jun 14, 2020

Abstract: Object region mining is a critical step in weakly-supervised semantic segmentation. Most recent methods mine object regions by expanding seed regions localized by class activation maps. They generally do not consider object sizes and apply a single uniform procedure to mine all object regions. As a result, their mined regions are often insufficient in number and scale for large objects, while for small objects they are easily contaminated by the surrounding background. In this paper, we propose a novel multi-miner framework that performs a region mining process adapted to diverse object sizes, and is thus able to mine more integral and finer object regions. Specifically, our multi-miner uses a parallel modulator to check whether any object regions remain for each individual object, and guides a category-aware generator to mine the regions of each object independently. In this way, the multi-miner adaptively takes more steps for large objects and fewer steps for small objects. Experimental results demonstrate that the multi-miner produces better region mining results and helps achieve better segmentation performance than state-of-the-art weakly-supervised semantic segmentation methods.

Cross-layer Feature Pyramid Network for Salient Object Detection

Feb 25, 2020

Abstract: Feature pyramid network (FPN) based models, which fuse semantics and salient details progressively, have proven highly effective in salient object detection. However, these models often generate saliency maps with incomplete object structures or unclear object boundaries, because information propagates only indirectly among distant layers, which makes such fusion structures less effective. In this work, we propose a novel Cross-layer Feature Pyramid Network (CFPN), which enables direct cross-layer communication to improve progressive fusion in salient object detection. Specifically, the proposed network first aggregates multi-scale features from different layers into feature maps that have access to both high- and low-level information. It then distributes the aggregated features to all involved layers so that each has access to richer context. In this way, the distributed features at each layer contain both semantics and salient details from all other layers simultaneously, and suffer less loss of important information. Extensive experiments on six widely used salient object detection benchmarks with three popular backbones demonstrate that CFPN can accurately locate fairly complete salient regions and effectively segment object boundaries.

Deep Reasoning with Multi-scale Context for Salient Object Detection

Jan 24, 2019

Abstract: To detect and segment salient objects accurately, existing methods usually focus on designing complex network architectures to fuse powerful features from backbone networks. However, they put much less effort into the saliency inference module and use only a few fully convolutional layers to perform saliency reasoning from the fused features. Should feature fusion strategies receive so much attention while saliency reasoning is largely ignored? In this paper, we find that the weakness of the saliency reasoning unit limits salient object detection performance, and argue that saliency reasoning after multi-scale convolutional feature fusion is critical. To verify our findings, we first extract multi-scale features with a fully convolutional network, and then reason directly from these comprehensive features using a deep yet lightweight network, modified from ShuffleNet, to predict salient objects quickly and precisely. This simple design is shown to be capable of reasoning from multi-scale saliency features and gives superior saliency detection performance at lower computational cost. Experimental results show that our simple framework outperforms the best existing method with 2.3% and 3.6% improvements in F-measure and a 2.8% reduction in MAE on the PASCAL-S, DUT-OMRON, and SOD datasets, respectively.
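
The two metrics quoted above are standard in salient object detection: the F-measure combines precision and recall of a binarized saliency map as F = (1 + β²)·P·R / (β²·P + R), with β² = 0.3 by common convention, and MAE is the mean absolute difference between the continuous saliency map and the binary ground truth. The sketch below computes both on flattened maps. The fixed binarization threshold of 0.5 is an assumption (benchmarks often use adaptive thresholds or sweep all thresholds), and the function names are illustrative, not taken from the paper's evaluation code.

```python
def f_measure(saliency, gt, threshold=0.5, beta2=0.3):
    """F-beta score of a saliency map (values in [0, 1]) binarized at `threshold`
    against a binary ground-truth mask; both are flattened lists of equal length."""
    pred = [1 if s >= threshold else 0 for s in saliency]
    tp = sum(p & g for p, g in zip(pred, gt))  # true-positive pixels
    precision = tp / sum(pred) if sum(pred) else 0.0
    recall = tp / sum(gt) if sum(gt) else 0.0
    if precision + recall == 0:
        return 0.0
    return (1 + beta2) * precision * recall / (beta2 * precision + recall)


def mae(saliency, gt):
    """Mean absolute error between the continuous saliency map and the mask."""
    return sum(abs(s - g) for s, g in zip(saliency, gt)) / len(gt)
```

For a four-pixel example with saliency `[0.9, 0.8, 0.2, 0.6]` and mask `[1, 1, 0, 0]`, precision is 2/3 and recall is 1, so the F-measure is 13/18 (about 0.722), and the MAE is 0.275.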
