Zixiang Ding

Accelerating Large Batch Training via Gradient Signal to Noise Ratio (GSNR)

Sep 24, 2023

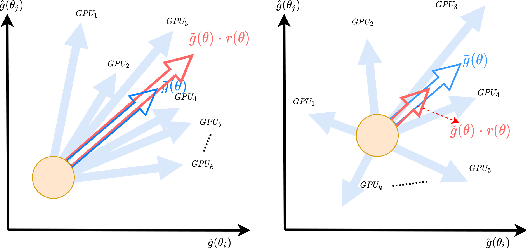

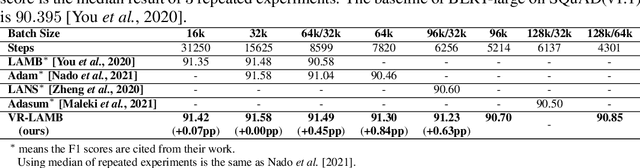

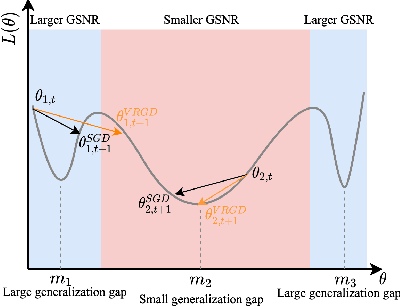

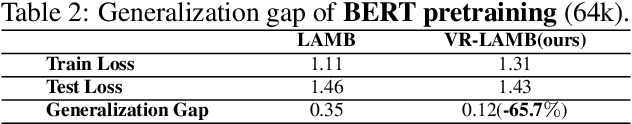

Abstract: As models for natural language processing (NLP), computer vision (CV) and recommendation systems (RS) require surging computation, a large number of GPUs/TPUs are run in parallel with a large batch (LB) to improve training throughput. However, training such LB tasks often suffers from a large generalization gap and degraded final precision, which limits how far the batch size can be enlarged. In this work, we develop the variance reduced gradient descent technique (VRGD) based on the gradient signal to noise ratio (GSNR) and apply it to popular optimizers such as SGD/Adam/LARS/LAMB. We carry out a theoretical analysis of convergence rate to explain its fast training dynamics, and a generalization analysis to demonstrate its smaller generalization gap on LB training. Comprehensive experiments demonstrate that VRGD can accelerate training ($1\sim 2 \times$), narrow the generalization gap and improve final accuracy. We push the batch size limit of BERT pretraining up to 128k/64k and DLRM to 512k without noticeable accuracy loss. We improve ImageNet Top-1 accuracy at 96k by $0.52$ pp over LARS. The generalization gap of BERT and ImageNet training is significantly reduced, by over $65\%$.
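The abstract does not define GSNR. As a rough illustration, the sketch below uses a common definition from the literature: for each parameter, the squared mean of its gradient divided by the variance of that gradient across samples (or devices). The function name `gsnr`, the `eps` stabilizer and the toy gradients are assumptions made for this example; how VRGD uses the ratio inside each optimizer is not stated here and is not shown.

```python
from statistics import fmean, pvariance


def gsnr(per_sample_grads, eps=1e-12):
    """Per-parameter GSNR: (mean gradient)^2 / (gradient variance + eps).

    per_sample_grads: list of gradient vectors, one per sample or device.
    eps is a hypothetical stabilizer to avoid division by zero.
    """
    num_params = len(per_sample_grads[0])
    ratios = []
    for j in range(num_params):
        # Collect the gradient of parameter j across all samples.
        column = [g[j] for g in per_sample_grads]
        mean = fmean(column)
        var = pvariance(column, mu=mean)
        ratios.append(mean * mean / (var + eps))
    return ratios


# Toy gradients (assumed): parameter 0 has a consistent sign across samples,
# parameter 1 flips sign and therefore averages to zero.
toy_grads = [[1.0, -1.0], [3.0, 1.0]]
ratios = gsnr(toy_grads)
```

In this toy case the first parameter has a high ratio (its gradient points the same way for both samples) and the second has a ratio of zero (its gradients cancel), which is the intuition behind treating GSNR as a per-parameter measure of gradient reliability.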

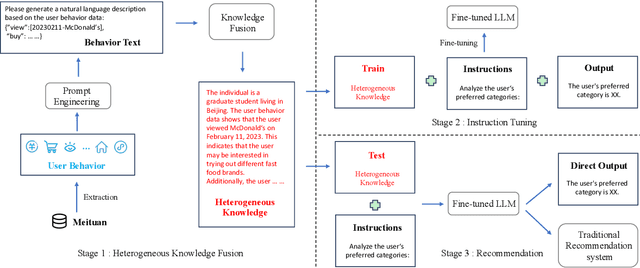

Heterogeneous Knowledge Fusion: A Novel Approach for Personalized Recommendation via LLM

Aug 18, 2023

Abstract: The analysis and mining of user heterogeneous behavior are of paramount importance in recommendation systems. However, the conventional approach of incorporating various types of heterogeneous behavior into recommendation models leads to feature sparsity and knowledge fragmentation issues. To address this challenge, we propose a novel approach for personalized recommendation via Large Language Model (LLM), by extracting and fusing heterogeneous knowledge from user heterogeneous behavior information. In addition, by combining heterogeneous knowledge and recommendation tasks, instruction tuning is performed on LLM for personalized recommendations. The experimental results demonstrate that our method can effectively integrate user heterogeneous behavior and significantly improve recommendation performance.

Is ChatGPT a Good Sentiment Analyzer? A Preliminary Study

Apr 10, 2023

Abstract: Recently, ChatGPT has drawn great attention from both the research community and the public. We are particularly curious about whether it can serve as a universal sentiment analyzer. To this end, in this work, we provide a preliminary evaluation of ChatGPT on the understanding of opinions, sentiments, and emotions contained in the text. Specifically, we evaluate it in four settings, including standard evaluation, polarity shift evaluation, open-domain evaluation, and sentiment inference evaluation. The above evaluation involves 18 benchmark datasets and 5 representative sentiment analysis tasks, and we compare ChatGPT with fine-tuned BERT and corresponding state-of-the-art (SOTA) models on the end tasks. Moreover, we also conduct human evaluation and present some qualitative case studies to gain a deep comprehension of its sentiment analysis capabilities.

SKDBERT: Compressing BERT via Stochastic Knowledge Distillation

Nov 29, 2022

Abstract: In this paper, we propose Stochastic Knowledge Distillation (SKD) to obtain a compact BERT-style language model dubbed SKDBERT. In each iteration, SKD samples a teacher model from a pre-defined teacher ensemble, which consists of multiple teacher models with multi-level capacities, to transfer knowledge into the student model in a one-to-one manner. The sampling distribution plays an important role in SKD. We heuristically present three types of sampling distributions to assign appropriate probabilities to the multi-level teacher models. SKD has two advantages: 1) it can preserve the diversity of the multi-level teacher models by stochastically sampling a single teacher model in each iteration, and 2) it can also improve the efficacy of knowledge distillation via multi-level teacher models when a large capacity gap exists between the teacher model and the student model. Experimental results on the GLUE benchmark show that SKDBERT reduces the size of a BERT$_{\rm BASE}$ model by 40% while retaining 99.5% of its language understanding performance and being 100% faster.
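The per-iteration teacher sampling described above can be sketched in a few lines. This is only an illustration under stated assumptions: the teacher names, the uniform distribution and the helper `sample_teacher` are hypothetical, and the three sampling distributions proposed in the paper are not specified in the abstract, so they are not reproduced here.

```python
import random


def sample_teacher(teachers, probs, rng):
    """Draw exactly one teacher for the current iteration (one-to-one transfer)."""
    return rng.choices(teachers, weights=probs, k=1)[0]


# Hypothetical ensemble with multi-level capacities.
teachers = ["teacher_small", "teacher_medium", "teacher_large"]
# Assumed sampling distribution (uniform); the paper's distributions may differ.
uniform = [1 / 3, 1 / 3, 1 / 3]

rng = random.Random(0)  # seeded so the schedule is reproducible
schedule = [sample_teacher(teachers, uniform, rng) for _ in range(5)]

# A degenerate distribution recovers ordinary single-teacher distillation.
always_large = sample_teacher(teachers, [0.0, 0.0, 1.0], rng)
```

In a real training loop, the sampled teacher would produce the soft targets for that step's distillation loss; only the selection step is shown here.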

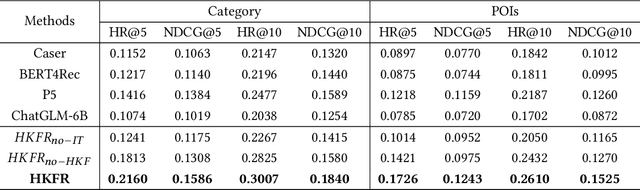

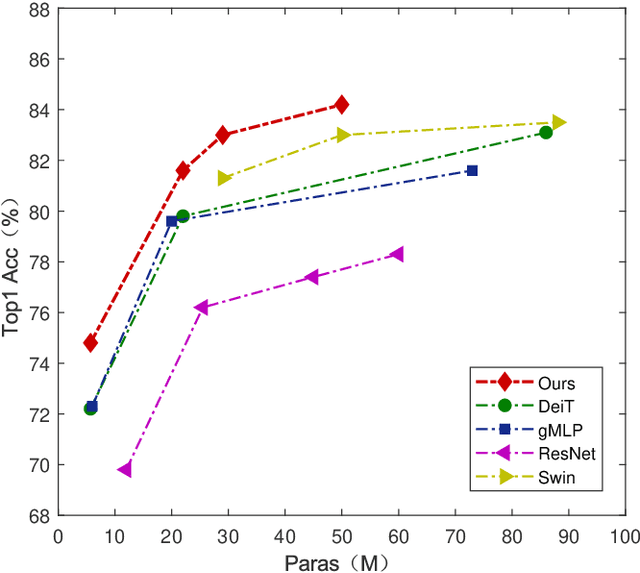

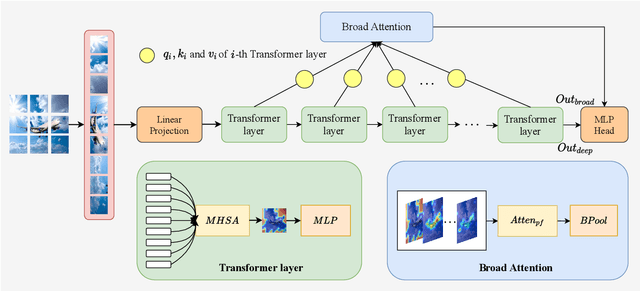

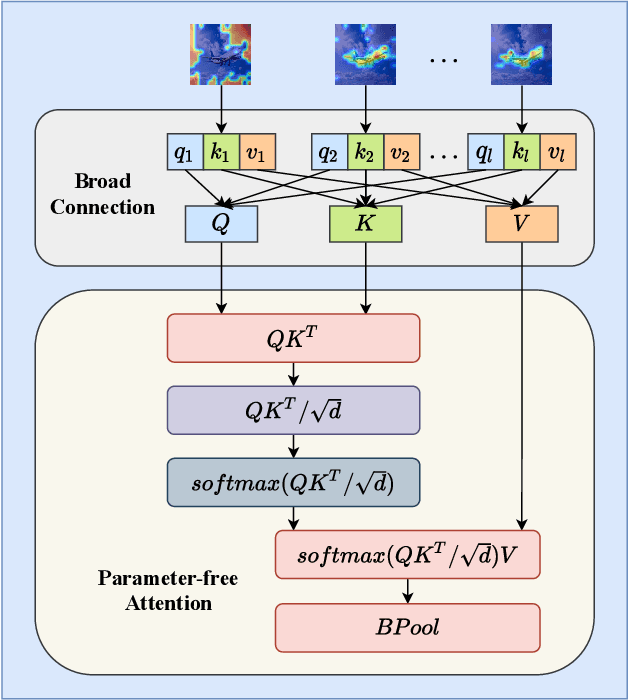

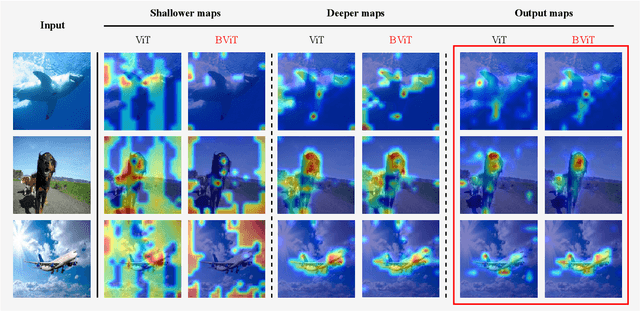

BViT: Broad Attention based Vision Transformer

Feb 13, 2022

Abstract: Recent works have demonstrated that transformers can achieve promising performance in computer vision by exploiting the relationship among image patches with self-attention. However, they only consider the attention in a single feature layer and ignore the complementarity of attention at different levels. In this paper, we propose broad attention, which improves performance by incorporating the attention relationship of different layers in a vision transformer; the resulting model is called BViT. Broad attention is implemented by broad connection and parameter-free attention. The broad connection of each transformer layer promotes the transmission and integration of information for BViT. Without introducing additional trainable parameters, parameter-free attention jointly focuses on the already available attention information in different layers to extract useful information and build their relationship. Experiments on image classification tasks demonstrate that BViT delivers state-of-the-art top-1 accuracy of 74.8\%/81.6\% on ImageNet with 5M/22M parameters. Moreover, we transfer BViT to downstream object recognition benchmarks and achieve 98.9\% and 89.9\% on CIFAR10 and CIFAR100 respectively, exceeding ViT with fewer parameters. In the generalization test, broad attention in Swin Transformer and T2T-ViT also brings an improvement of more than 1\%. To sum up, broad attention is promising for improving the performance of attention-based models. Code and pre-trained models are available at https://github.com/DRL-CASIA/Broad_ViT.

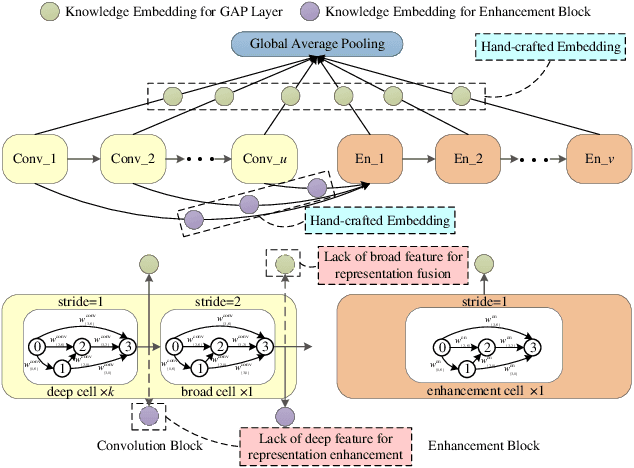

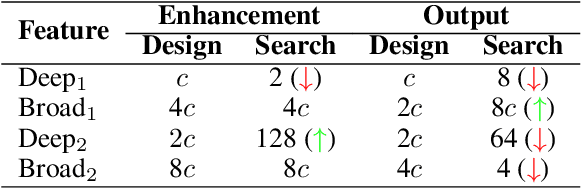

Stacked BNAS: Rethinking Broad Convolutional Neural Network for Neural Architecture Search

Nov 15, 2021

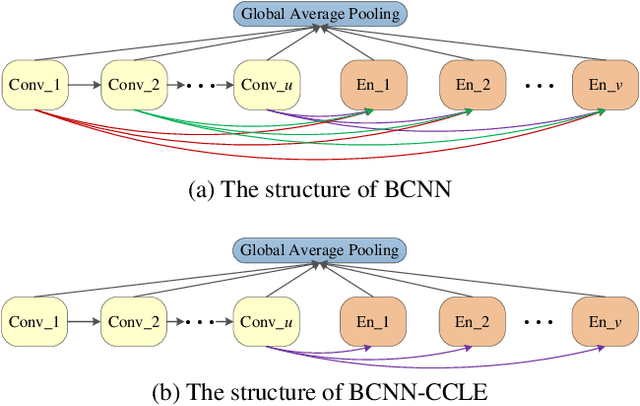

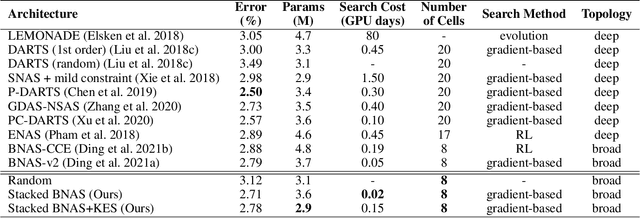

Abstract: Unlike other NAS approaches based on deep scalable architectures, Broad Neural Architecture Search (BNAS) proposes a broad architecture consisting of convolution and enhancement blocks, dubbed Broad Convolutional Neural Network (BCNN), as its search space for a large efficiency improvement. BCNN reuses the topologies of cells in the convolution block, so that BNAS can employ few cells for efficient search. Moreover, multi-scale feature fusion and knowledge embedding are proposed to improve the performance of BCNN with shallow topology. However, BNAS suffers from some drawbacks: 1) insufficient representation diversity for feature fusion and enhancement, and 2) time-consuming knowledge embedding design by human experts. In this paper, we propose Stacked BNAS, whose search space is a developed broad scalable architecture named Stacked BCNN, with better performance than BNAS. On the one hand, Stacked BCNN treats mini-BCNN as the basic block to preserve comprehensive representation and deliver powerful feature extraction ability. On the other hand, we propose Knowledge Embedding Search (KES) to learn appropriate knowledge embeddings. Experimental results show that 1) Stacked BNAS obtains better performance than BNAS, 2) KES helps reduce the parameters of the learned architecture while keeping satisfactory performance, and 3) Stacked BNAS delivers state-of-the-art efficiency of 0.02 GPU days.

Multimodal Emotion-Cause Pair Extraction in Conversations

Oct 15, 2021

Abstract: Emotion cause analysis has received considerable attention in recent years. Previous studies primarily focused on emotion cause extraction from texts in news articles or microblogs. It is also interesting to discover emotions and their causes in conversations. As conversation in its natural form is multimodal, a large number of studies have been carried out on multimodal emotion recognition in conversations, but there is still a lack of work on multimodal emotion cause analysis. In this work, we introduce a new task named Multimodal Emotion-Cause Pair Extraction in Conversations, aiming to jointly extract emotions and their associated causes from conversations reflected in multiple modalities (text, audio and video). We accordingly construct a multimodal conversational emotion cause dataset, Emotion-Cause-in-Friends, which contains 9,272 multimodal emotion-cause pairs annotated on 13,509 utterances in the sitcom Friends. We finally benchmark the task by establishing a baseline system that incorporates multimodal features for emotion-cause pair extraction. Preliminary experimental results demonstrate the potential of multimodal information fusion for discovering both emotions and causes in conversations.

ABCP: Automatic Block-wise and Channel-wise Network Pruning via Joint Search

Oct 08, 2021

Abstract: Currently, an increasing number of model pruning methods are being proposed to resolve the conflict between the computing power required by deep learning models and resource-constrained devices. However, most traditional rule-based network pruning methods cannot reach a sufficient compression ratio with low accuracy loss, and they are time-consuming as well as laborious. In this paper, we propose Automatic Block-wise and Channel-wise Network Pruning (ABCP) to jointly search the block-wise and channel-wise pruning actions with deep reinforcement learning. A joint sampling algorithm is proposed to simultaneously generate the pruning choice of each residual block and the channel pruning ratio of each convolutional layer from the discrete and continuous search spaces respectively. The best pruning action, taking both the accuracy and the complexity of the model into account, is finally obtained. Compared with the traditional rule-based pruning method, this pipeline saves human labor and achieves a higher compression ratio with lower accuracy loss. Tested on the mobile robot detection dataset, the pruned YOLOv3 model saves 99.5% FLOPs, reduces parameters by 99.5%, and achieves a 37.3 times speed-up with only 2.8% mAP loss. The results of the transfer task on the sim2real detection dataset also show that our pruned model has much better robustness.

Heuristic Rank Selection with Progressively Searching Tensor Ring Network

Sep 22, 2020

Abstract: Recently, Tensor Ring Networks (TRNs) have been applied in deep networks, achieving remarkable successes in compression ratio and accuracy. Although highly related to the performance of TRNs, rank is seldom studied in previous works and is usually set to equal values in experiments. Meanwhile, there is no heuristic method for choosing the rank, and enumerating to find an appropriate rank is extremely time-consuming. Interestingly, we discover that some of the rank elements are sensitive and usually aggregate in a certain region, namely an interest region. Therefore, based on the above phenomenon, we propose a novel progressive genetic algorithm named Progressively Searching Tensor Ring Network Search (PSTRN), which has the ability to find the optimal rank precisely and efficiently. Through the evolutionary phase and progressive phase, PSTRN can converge to the interest region quickly and harvest good performance. Experimental results show that PSTRN can significantly reduce the complexity of seeking rank, compared with the enumerating method. Furthermore, our method is validated on public benchmarks like MNIST, CIFAR10/100 and HMDB51, achieving state-of-the-art performance.

Faster Gradient-based NAS Pipeline Combining Broad Scalable Architecture with Confident Learning Rate

Sep 21, 2020

Abstract: In order to further improve the search efficiency of Neural Architecture Search (NAS), we propose B-DARTS, a novel pipeline combining broad scalable architecture with Confident Learning Rate (CLR). In B-DARTS, Broad Convolutional Neural Network (BCNN) is employed as the scalable architecture for DARTS, a popular differentiable NAS approach. On the one hand, BCNN is a broad scalable architecture whose topology has two advantages over the deep one, namely faster single-step training speed and higher memory efficiency (i.e. larger batch size for architecture search), both of which contribute to the search efficiency improvement of NAS. On the other hand, DARTS discovers the optimal architecture by a gradient-based optimization algorithm, which benefits from both advantages of BCNN simultaneously. Similar to vanilla DARTS, B-DARTS also suffers from the performance collapse issue, where weight-free operations are prone to be selected by the search strategy. Therefore, to mitigate this issue, we propose CLR, which treats the confidence of the gradient for architecture weight updates as increasing with the training time of the over-parameterized model. Experimental results on CIFAR-10 and ImageNet show that 1) B-DARTS delivers state-of-the-art efficiency of 0.09 GPU day using first order approximation on CIFAR-10; 2) the architecture learned by B-DARTS achieves competitive performance using state-of-the-art composite multiply-accumulate operations and parameters on ImageNet; and 3) the proposed CLR is effective in alleviating the performance collapse issue of both B-DARTS and DARTS.
