Zhiyu He

Refer to the report for detailed contributions

ENSI: Efficient Non-Interactive Secure Inference for Large Language Models

Sep 11, 2025

Abstract:Secure inference enables privacy-preserving machine learning by leveraging cryptographic protocols that support computations on sensitive user data without exposing it. However, integrating cryptographic protocols with large language models (LLMs) presents significant challenges, as the inherent complexity of these protocols, together with LLMs' massive parameter scale and sophisticated architectures, severely limits practical usability. In this work, we propose ENSI, a novel non-interactive secure inference framework for LLMs, based on the principle of co-designing the cryptographic protocols and the LLM architecture. ENSI employs an optimized encoding strategy that seamlessly integrates the CKKS scheme with a lightweight LLM variant, BitNet, significantly reducing the computational complexity of encrypted matrix multiplications. In response to the prohibitive computational demands of softmax under homomorphic encryption (HE), we pioneer the integration of the sigmoid attention mechanism with HE as a seamless, retraining-free alternative. Furthermore, by embedding the bootstrapping operation within the RMSNorm process, we efficiently refresh ciphertexts while markedly decreasing the frequency of costly bootstrapping invocations. Experimental evaluations demonstrate that ENSI achieves approximately an 8x acceleration in matrix multiplications and a 2.6x speedup in softmax inference on CPU compared to state-of-the-art methods, with the proportion of bootstrapping reduced to just 1%.
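To illustrate why sigmoid attention is attractive under HE, the following plaintext sketch contrasts row-wise softmax weights with element-wise sigmoid weights. It is not ENSI's implementation: the function names, the toy inputs, and the bias choice of `-log(n)` (borrowed from the general sigmoid-attention literature) are assumptions made here for illustration. The relevant point is that softmax needs a row maximum, a sum, and a division across the whole row, whereas each sigmoid weight depends only on its own score, which is easier to handle with the polynomial approximations that CKKS-style schemes typically rely on.

```python
import math

def softmax_attention_weights(scores):
    """Row-wise softmax: requires a max, a sum, and a division per row."""
    out = []
    for row in scores:
        m = max(row)                       # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out

def sigmoid_attention_weights(scores, bias=0.0):
    """Element-wise sigmoid: each weight depends only on its own score."""
    return [[1.0 / (1.0 + math.exp(-(s + bias))) for s in row] for row in scores]

def attend(weights, values):
    """Weighted sum of the value vectors for each query row."""
    dim = len(values[0])
    return [[sum(w * v[j] for w, v in zip(row, values)) for j in range(dim)]
            for row in weights]

# Toy attention scores (2 queries x 3 keys) and value vectors (3 keys x 2 dims).
scores = [[0.5, -1.0, 2.0], [0.0, 0.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Assumed bias: -log(sequence length), so weights do not grow with the number of keys.
bias = -math.log(len(values))

soft = softmax_attention_weights(scores)
sig = sigmoid_attention_weights(scores, bias)
out_soft = attend(soft, values)
out_sig = attend(sig, values)
```

Softmax rows always sum to one, while sigmoid rows need not, so the two mechanisms give different outputs on the same scores. Whether a pretrained model tolerates the swap without retraining is the abstract's claim and is not demonstrated by this sketch.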

Short Video Segment-level User Dynamic Interests Modeling in Personalized Recommendation

Apr 05, 2025

Abstract:The rapid growth of short videos has necessitated effective recommender systems to match users with content tailored to their evolving preferences. Current video recommendation models primarily treat each video as a whole, overlooking the dynamic nature of user preferences for specific video segments. In contrast, our research focuses on segment-level user interest modeling, which is crucial for understanding how users' preferences evolve during video browsing. To capture users' dynamic segment interests, we propose an innovative model that integrates a hybrid representation module, a multi-modal user-video encoder, and a segment interest decoder. Our model addresses the challenges of capturing dynamic interest patterns, missing segment-level labels, and fusing different modalities, achieving precise segment-level interest prediction. We present two downstream tasks to evaluate the effectiveness of our segment interest modeling approach: video-skip prediction and short video recommendation. Our experiments on real-world short video datasets with diverse modalities show promising results on both tasks. These results demonstrate that segment-level interest modeling brings a deep understanding of user engagement and enhances video recommendations. We also release a unique dataset that includes segment-level video data and diverse user behaviors, enabling further research in segment-level interest modeling. This work pioneers a novel perspective on understanding user segment-level preference, offering the potential for more personalized and engaging short video experiences.

Decision-Dependent Stochastic Optimization: The Role of Distribution Dynamics

Mar 10, 2025

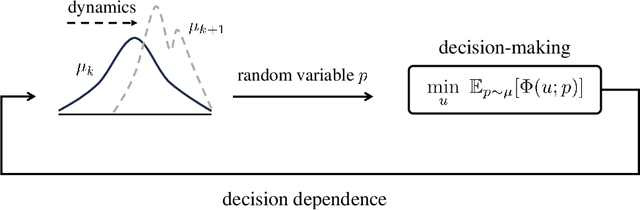

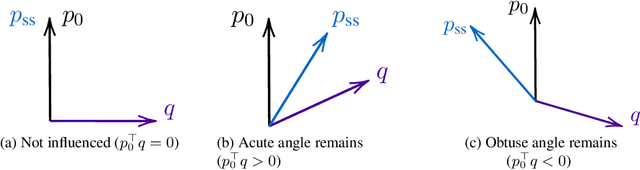

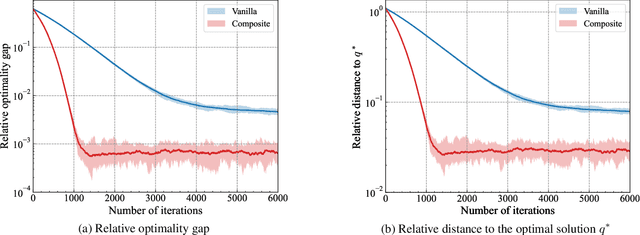

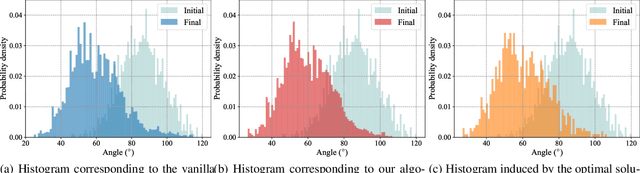

Abstract:Distribution shifts have long been regarded as troublesome external forces that a decision-maker should either counteract or conform to. An intriguing feedback phenomenon termed decision dependence arises when the deployed decision affects the environment and alters the data-generating distribution. In the realm of performative prediction, this is encoded by distribution maps parameterized by decisions due to strategic behaviors. In contrast, we formalize an endogenous distribution shift as a feedback process featuring nonlinear dynamics that couple the evolving distribution with the decision. Stochastic optimization in this dynamic regime provides a fertile ground to examine the various roles played by dynamics in the composite problem structure. To this end, we develop an online algorithm that achieves optimal decision-making by both adapting to and shaping the dynamic distribution. Throughout the paper, we adopt a distributional perspective and demonstrate how this view facilitates characterizations of distribution dynamics and the optimality and generalization performance of the proposed algorithm. We showcase the theoretical results in an opinion dynamics context, where an opportunistic party maximizes the affinity of a dynamic polarized population, and in a recommender system scenario, featuring performance optimization with discrete distributions in the probability simplex.

The Sample Complexity of Online Reinforcement Learning: A Multi-model Perspective

Jan 27, 2025

Abstract:We study the sample complexity of online reinforcement learning for nonlinear dynamical systems with continuous state and action spaces. Our analysis accommodates a large class of dynamical systems ranging from a finite set of nonlinear candidate models to models with bounded and Lipschitz continuous dynamics, to systems that are parametrized by a compact and real-valued set of parameters. In the most general setting, our algorithm achieves a policy regret of $\mathcal{O}(N \epsilon^2 + \ln(m(\epsilon))/\epsilon^2)$, where $N$ is the time horizon, $\epsilon$ is a user-specified discretization width, and $m(\epsilon)$ measures the complexity of the function class under consideration via its packing number. In the special case where the dynamics are parametrized by a compact and real-valued set of parameters (such as neural networks, transformers, etc.), we prove a policy regret of $\mathcal{O}(\sqrt{N p})$, where $p$ denotes the number of parameters, recovering earlier sample-complexity results that were derived for linear time-invariant dynamical systems. While this article focuses on characterizing sample complexity, the proposed algorithms are likely to be useful in practice, due to their simplicity, the ability to incorporate prior knowledge, and their benign transient behavior.
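A heuristic way to see how the two regret bounds relate is to balance the two terms of the general bound. The sketch below is not the paper's proof. It assumes, as is typical for packing numbers of compact $p$-dimensional parameter sets, that $\ln m(\epsilon) \le p \ln(C/\epsilon)$ for some constant $C$, which is introduced here only for illustration.

$$
N \epsilon^2 + \frac{\ln m(\epsilon)}{\epsilon^2}
\;\le\; N \epsilon^2 + \frac{p \ln(C/\epsilon)}{\epsilon^2},
\qquad
\epsilon^2 = \sqrt{\frac{p}{N}}
\;\Longrightarrow\;
N \epsilon^2 = \sqrt{N p},
\quad
\frac{p \ln(C/\epsilon)}{\epsilon^2} = \sqrt{N p}\,\ln(C/\epsilon).
$$

Under this assumption the two terms match at order $\sqrt{N p}$ up to the logarithmic factor $\ln(C/\epsilon) = \ln C + \tfrac{1}{4}\ln(N/p)$. The abstract states the bound as $\mathcal{O}(\sqrt{N p})$, so the paper's analysis presumably handles this factor more carefully than the balancing argument does.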

HunyuanVideo: A Systematic Framework For Large Video Generative Models

Dec 03, 2024

Abstract:Recent advancements in video generation have significantly impacted daily life for both individuals and industries. However, the leading video generation models remain closed-source, resulting in a notable performance gap between industry capabilities and those available to the public. In this report, we introduce HunyuanVideo, an innovative open-source video foundation model that demonstrates performance in video generation comparable to, or even surpassing, that of leading closed-source models. HunyuanVideo encompasses a comprehensive framework that integrates several key elements, including data curation, advanced architectural design, progressive model scaling and training, and an efficient infrastructure tailored for large-scale model training and inference. As a result, we successfully trained a video generative model with over 13 billion parameters, making it the largest among all open-source models. We conducted extensive experiments and implemented a series of targeted designs to ensure high visual quality, motion dynamics, text-video alignment, and advanced filming techniques. According to evaluations by professionals, HunyuanVideo outperforms previous state-of-the-art models, including Runway Gen-3, Luma 1.6, and three top-performing Chinese video generative models. By releasing the code for the foundation model and its applications, we aim to bridge the gap between closed-source and open-source communities. This initiative will empower individuals within the community to experiment with their ideas, fostering a more dynamic and vibrant video generation ecosystem. The code is publicly available at https://github.com/Tencent/HunyuanVideo.

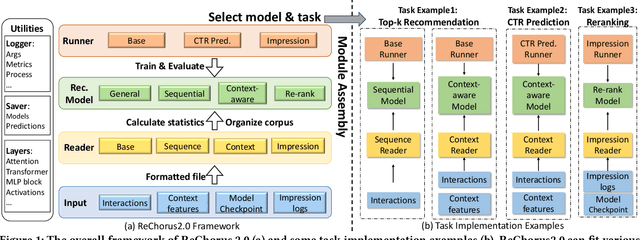

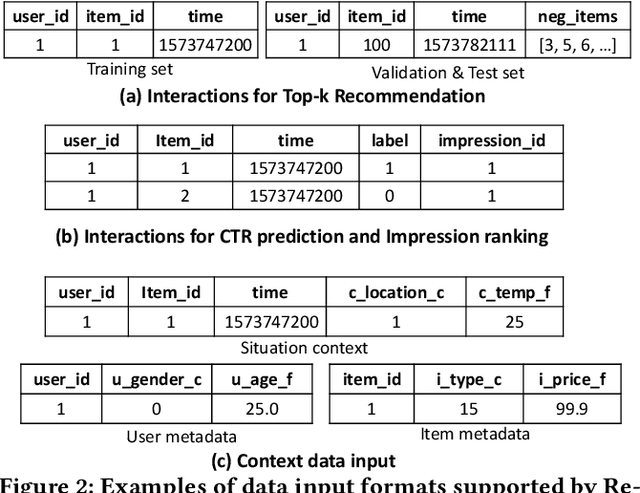

ReChorus2.0: A Modular and Task-Flexible Recommendation Library

May 28, 2024

Abstract:With the applications of recommendation systems rapidly expanding, an increasing number of studies have focused on every aspect of recommender systems with different data inputs, models, and task settings. Therefore, a flexible library is needed to help researchers implement the experimental strategies they require. Existing open libraries for recommendation scenarios have enabled reproducing various recommendation methods and provided standard implementations. However, these libraries often impose certain restrictions on data and seldom allow the same model to handle different tasks and input formats, limiting users' customized explorations. To fill the gap, we propose ReChorus2.0, a modular and task-flexible library for recommendation researchers. Based on ReChorus, we upgrade the supported input formats, models, and training and evaluation strategies to help realize more recommendation tasks with more data types. The main contributions of ReChorus2.0 include: (1) Realization of complex and practical tasks, including reranking and CTR prediction tasks; (2) Inclusion of various context-aware and rerank recommenders; (3) Extension of existing and new models to support different tasks with the same models; (4) Support of highly-customized input with impression logs, negative items, or click labels, as well as user, item, and situation contexts. To summarize, ReChorus2.0 serves as a comprehensive and flexible library better aligning with the practical problems in the recommendation scenario and catering to more diverse research needs. The implementation and detailed tutorials of ReChorus2.0 can be found at https://github.com/THUwangcy/ReChorus.

Introducing EEG Analyses to Help Personal Music Preference Prediction

Apr 24, 2024

Abstract:Nowadays, personalized recommender systems play an increasingly important role in music scenarios in our daily life through their preference prediction ability. However, existing methods mainly rely on users' implicit feedback (e.g., click, dwell time), which ignores the detailed user experience. This paper introduces electroencephalography (EEG) signals into personal music preference prediction as a basis for personalized recommender systems. To enable collection in daily life, we use a portable dry-electrode device to collect data. We perform a user study where participants listen to music and record preferences and moods. Meanwhile, EEG signals are collected with the portable device. Analysis of the collected data indicates a significant relationship between music preference, mood, and EEG signals. Furthermore, we conduct experiments to predict personalized music preference with the features of EEG signals. Experiments show significant improvement in rating prediction and preference classification with the help of EEG. Our work demonstrates the possibility of introducing EEG signals into personal music preference prediction with portable devices. Moreover, our approach is not restricted to the music scenario, and the EEG signals as explicit feedback can be used in personalized recommendation tasks.

EEG-SVRec: An EEG Dataset with User Multidimensional Affective Engagement Labels in Short Video Recommendation

Apr 01, 2024

Abstract:In recent years, short video platforms have gained widespread popularity, making the quality of video recommendations crucial for retaining users. Existing recommendation systems primarily rely on behavioral data, which faces limitations when inferring user preferences due to issues such as data sparsity and noise from accidental interactions or personal habits. To address these challenges and provide a more comprehensive understanding of user affective experience and cognitive activity, we propose EEG-SVRec, the first EEG dataset with User Multidimensional Affective Engagement Labels in Short Video Recommendation. The study involves 30 participants and collects 3,657 interactions, offering a rich dataset that can be used for a deeper exploration of user preference and cognitive activity. By incorporating self-assessment techniques and real-time, low-cost EEG signals, we offer a more detailed understanding of user affective experiences (valence, arousal, immersion, interest, visual and auditory) and the cognitive mechanisms behind their behavior. We establish benchmarks for rating prediction by the recommendation algorithm, showing significant improvement with the inclusion of EEG signals. Furthermore, we demonstrate the potential of this dataset in gaining insights into the affective experience and cognitive activity behind user behaviors in recommender systems. This work presents a novel perspective for enhancing short video recommendation by leveraging the rich information contained in EEG signals and multidimensional affective engagement scores, paving the way for future research in short video recommendation systems.

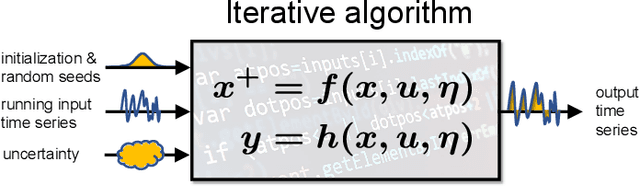

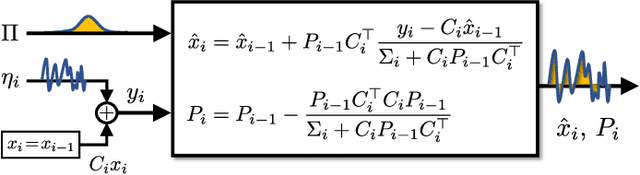

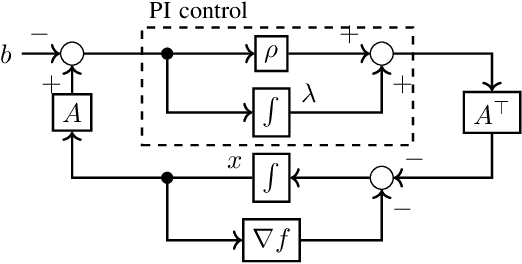

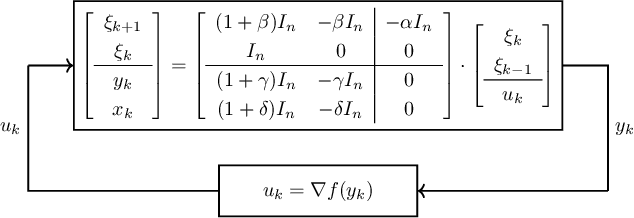

Towards a Systems Theory of Algorithms

Jan 25, 2024

Abstract:Traditionally, numerical algorithms are seen as isolated pieces of code confined to an *in silico* existence. However, this perspective is not appropriate for many modern computational approaches in control, learning, or optimization, wherein *in vivo* algorithms interact with their environment. Examples of such *open* algorithms include various real-time optimization-based control strategies, reinforcement learning, decision-making architectures, online optimization, and many more. Further, even *closed* algorithms in learning or optimization are increasingly abstracted in block diagrams with interacting dynamic modules and pipelines. In this opinion paper, we state our vision on a to-be-cultivated *systems theory of algorithms* and argue in favour of viewing algorithms as open dynamical systems interacting with other algorithms, physical systems, humans, or databases. Remarkably, the manifold tools developed under the umbrella of systems theory also provide valuable insights into this burgeoning paradigm shift and its accompanying challenges in the algorithmic world. We survey various instances where the principles of algorithmic systems theory are being developed and outline pertinent modeling, analysis, and design challenges.
