Florian Dörfler

Socially-Aware Recommender Systems Mitigate Opinion Clusterization

Jan 02, 2026

Abstract:Recommender systems shape online interactions by matching users with creators' content to maximize engagement. Creators, in turn, adapt their content to align with users' preferences and enhance their popularity. At the same time, users' preferences evolve under the influence of both suggested content from the recommender system and content shared within their social circles. This feedback loop generates a complex interplay between users, creators, and recommender algorithms, which is the key cause of filter bubbles and opinion polarization. We develop a social network-aware recommender system that explicitly accounts for this user-creator feedback interaction and strategically exploits the topology of the user's own social network to promote diversification. Our approach highlights how accounting for and exploiting the user's social network in the recommender system design is crucial to mitigate filter bubble effects while balancing content diversity with personalization. Provably, opinion clusterization is positively correlated with the influence of recommended content on user opinions. Ultimately, the proposed approach shows the power of socially-aware recommender systems in combating opinion polarization and clusterization phenomena.

MPC for momentum counter-balanced and zero-impulse contact with a free-spinning satellite

Dec 10, 2025

Abstract:In on-orbit robotics, a servicer satellite's ability to make contact with a free-spinning target satellite is essential to completing most on-orbit servicing (OOS) tasks. This manuscript develops a nonlinear model predictive control (MPC) framework that generates feasible controls for a servicer satellite to achieve zero-impulse contact with a free-spinning target satellite. The overall maneuver requires coordination between two separately actuated modules of the servicer satellite: (1) a moment generation module and (2) a manipulation module. We apply MPC to control both modules by explicitly modeling the cross-coupling dynamics between them. We demonstrate that the MPC controller can enforce actuation and state constraints that prior control approaches could not account for. We evaluate the performance of the MPC controller by simulating zero-impulse contact scenarios with a free-spinning target satellite via numerical Monte Carlo (MC) trials and comparing the simulation results with prior control approaches. Our simulation results validate the effectiveness of the MPC controller in maintaining spin synchronization and zero-impulse contact under operation constraints, moving contact location, and observation and actuation noise.

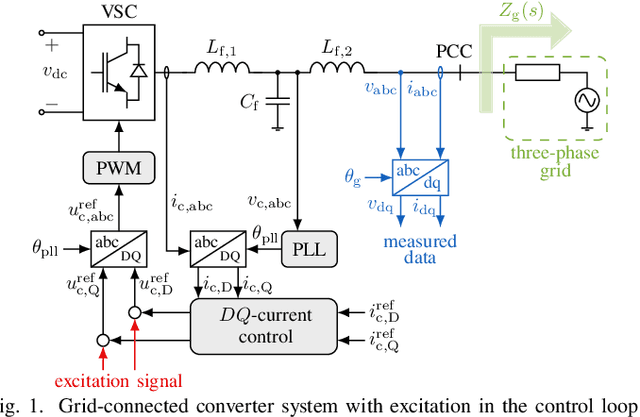

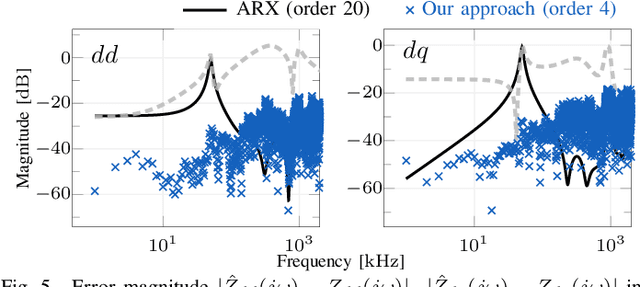

Ultrafast Grid Impedance Identification in $dq$-Asymmetric Three-Phase Power Systems

Oct 14, 2025

Abstract:We propose a non-parametric frequency-domain method to identify small-signal $dq$-asymmetric grid impedances, over a wide frequency band, using grid-connected converters. Existing identification methods are faced with significant trade-offs: e.g., passive approaches rely on ambient harmonics and rare grid events and thus can only provide estimates at a few frequencies, while many active approaches that intentionally perturb grid operation require long time series measurement and specialized equipment. Although active time-domain methods reduce the measurement time, they either make crude simplifying assumptions or require laborious model order tuning. Our approach effectively addresses these challenges: it does not require specialized excitation signals or hardware and achieves ultrafast ($<1$ s) identification, drastically reducing measurement time. Being non-parametric, our approach also makes no assumptions on the grid structure. A detailed electromagnetic transient simulation is used to validate the method and demonstrate its clear superiority over existing alternatives.

Learn to Bid as a Price-Maker Wind Power Producer

Mar 20, 2025

Abstract:Wind power producers (WPPs) participating in short-term power markets face significant imbalance costs due to their non-dispatchable and variable production. While some WPPs have a large enough market share to influence prices with their bidding decisions, existing optimal bidding methods rarely account for this aspect. Price-maker approaches typically model bidding as a bilevel optimization problem, but these methods require complex market models, estimating other participants' actions, and are computationally demanding. To address these challenges, we propose an online learning algorithm that leverages contextual information to optimize WPP bids in the price-maker setting. We formulate the strategic bidding problem as a contextual multi-armed bandit, ensuring provable regret minimization. The algorithm's performance is evaluated against various benchmark strategies using a numerical simulation of the German day-ahead and real-time markets.

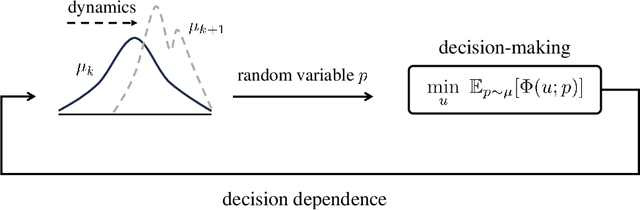

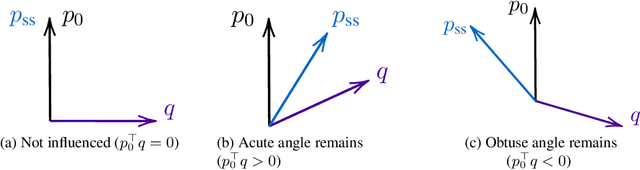

Decision-Dependent Stochastic Optimization: The Role of Distribution Dynamics

Mar 10, 2025

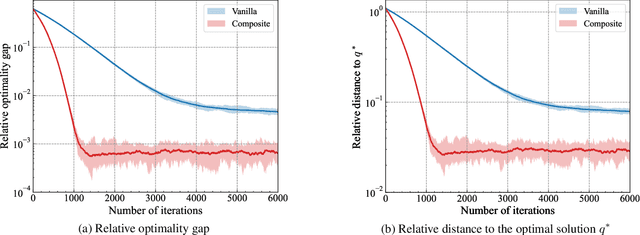

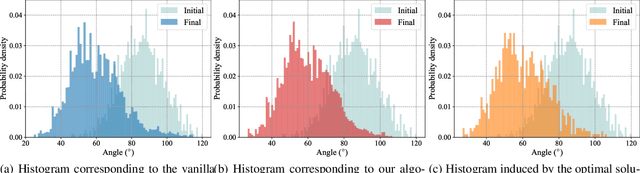

Abstract:Distribution shifts have long been regarded as troublesome external forces that a decision-maker should either counteract or conform to. An intriguing feedback phenomenon termed decision dependence arises when the deployed decision affects the environment and alters the data-generating distribution. In the realm of performative prediction, this is encoded by distribution maps parameterized by decisions due to strategic behaviors. In contrast, we formalize an endogenous distribution shift as a feedback process featuring nonlinear dynamics that couple the evolving distribution with the decision. Stochastic optimization in this dynamic regime provides a fertile ground to examine the various roles played by dynamics in the composite problem structure. To this end, we develop an online algorithm that achieves optimal decision-making by both adapting to and shaping the dynamic distribution. Throughout the paper, we adopt a distributional perspective and demonstrate how this view facilitates characterizations of distribution dynamics and the optimality and generalization performance of the proposed algorithm. We showcase the theoretical results in an opinion dynamics context, where an opportunistic party maximizes the affinity of a dynamic polarized population, and in a recommender system scenario, featuring performance optimization with discrete distributions in the probability simplex.

An Adaptive Data-Enabled Policy Optimization Approach for Autonomous Bicycle Control

Feb 19, 2025

Abstract:This paper presents a unified control framework that integrates a Feedback Linearization (FL) controller in the inner loop with an adaptive Data-Enabled Policy Optimization (DeePO) controller in the outer loop to balance an autonomous bicycle. While the FL controller stabilizes and partially linearizes the inherently unstable and nonlinear system, its performance is compromised by unmodeled dynamics and time-varying characteristics. To overcome these limitations, the DeePO controller is introduced to enhance adaptability and robustness. The initial control policy of DeePO is obtained from a finite set of offline, persistently exciting input and state data. To improve stability and compensate for system nonlinearities and disturbances, a robustness-promoting regularizer refines the initial policy, while the adaptive section of the DeePO framework is enhanced with a forgetting factor to improve adaptation to time-varying dynamics. The proposed DeePO+FL approach is evaluated through simulations and real-world experiments on an instrumented autonomous bicycle. Results demonstrate its superiority over the FL-only approach, achieving more precise tracking of the reference lean angle and lean rate.

Contractivity and linear convergence in bilinear saddle-point problems: An operator-theoretic approach

Oct 18, 2024

Abstract:We study the convex-concave bilinear saddle-point problem $\min_x \max_y f(x) + y^\top Ax - g(y)$, where both, only one, or none of the functions $f$ and $g$ are strongly convex, and suitable rank conditions on the matrix $A$ hold. The solution of this problem is at the core of many machine learning tasks. By employing tools from operator theory, we systematically prove the contractivity (in turn, the linear convergence) of several first-order primal-dual algorithms, including the Chambolle-Pock method. Our approach results in concise and elegant proofs, and it yields new convergence guarantees and tighter bounds compared to known results.
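As a rough illustration of the problem class in this abstract, the following sketch runs the standard Chambolle-Pock (primal-dual hybrid gradient) iteration on a toy instance. The quadratic choices $f(x) = \tfrac12\|x-a\|^2$ and $g(y) = \tfrac12\|y-b\|^2$, the matrix `A`, the vectors `a` and `b`, and the step sizes are all assumptions made for the example, not taken from the paper; the function name `chambolle_pock` is hypothetical.

```python
def chambolle_pock(A, a, b, tau, sigma, iters=2000):
    """Chambolle-Pock iteration for the toy saddle-point problem
    min_x max_y 0.5*||x - a||^2 + y^T A x - 0.5*||y - b||^2.
    Assumes tau * sigma * ||A||^2 < 1 (the usual step-size condition)."""
    m, n = len(A), len(A[0])
    x = [0.0] * n
    x_bar = x[:]          # extrapolated primal point
    y = [0.0] * m
    for _ in range(iters):
        # Dual step: y <- prox_{sigma g}(y + sigma * A x_bar)
        Ax_bar = [sum(A[i][j] * x_bar[j] for j in range(n)) for i in range(m)]
        y = [(y[i] + sigma * Ax_bar[i] + sigma * b[i]) / (1.0 + sigma)
             for i in range(m)]
        # Primal step: x <- prox_{tau f}(x - tau * A^T y)
        ATy = [sum(A[i][j] * y[i] for i in range(m)) for j in range(n)]
        x_new = [(x[j] - tau * ATy[j] + tau * a[j]) / (1.0 + tau)
                 for j in range(n)]
        # Extrapolation: x_bar <- 2 * x_new - x_old
        x_bar = [2.0 * x_new[j] - x[j] for j in range(n)]
        x = x_new
    return x, y


# Toy data (assumed): ||A||^2 is about 5.83, so tau = sigma = 0.4 gives
# tau * sigma * ||A||^2 of about 0.93 < 1.
A = [[1.0, 2.0], [0.0, 1.0]]
a = [1.0, 0.0]
b = [0.0, 1.0]
x_star, y_star = chambolle_pock(A, a, b, tau=0.4, sigma=0.4)
```

For this instance the saddle point can be checked by hand from the optimality conditions $x - a + A^\top y = 0$ and $y = Ax + b$, which give $x = (1, -0.5)$ and $y = (0, 0.5)$.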

Maximum likelihood inference for high-dimensional problems with multiaffine variable relations

Sep 05, 2024

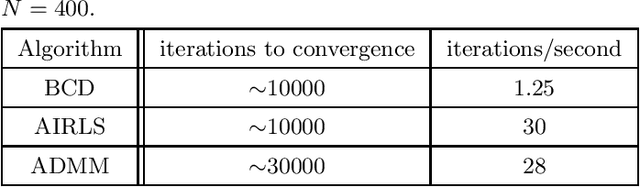

Abstract:Maximum Likelihood Estimation of continuous variable models can be very challenging in high dimensions, due to potentially complex probability distributions. The existence of multiple interdependencies among variables can make it very difficult to establish convergence guarantees. This leads to a wide use of brute-force methods, such as grid searching and Monte-Carlo sampling and, when applicable, complex and problem-specific algorithms. In this paper, we consider inference problems where the variables are related by multiaffine expressions. We propose a novel Alternating and Iteratively-Reweighted Least Squares (AIRLS) algorithm, and prove its convergence for problems with Generalized Normal Distributions. We also provide an efficient method to compute the variance of the estimates obtained using AIRLS. Finally, we show how the method can be applied to graphical statistical models. We perform numerical experiments on several inference problems, showing significantly better performance than state-of-the-art approaches in terms of scalability, robustness to noise, and convergence speed due to an empirically observed super-linear convergence rate.

Learning Diffusion at Lightspeed

Jun 18, 2024

Abstract:Diffusion regulates a phenomenal number of natural processes and the dynamics of many successful generative models. Existing models to learn the diffusion terms from observational data rely on complex bilevel optimization problems and properly model only the drift of the system. We propose a new simple model, JKOnet*, which bypasses altogether the complexity of existing architectures while presenting significantly enhanced representational capacity: JKOnet* recovers the potential, interaction, and internal energy components of the underlying diffusion process. JKOnet* minimizes a simple quadratic loss, runs at lightspeed, and drastically outperforms other baselines in practice. Additionally, JKOnet* provides a closed-form optimal solution for linearly parametrized functionals. Our methodology is based on the interpretation of diffusion processes as energy-minimizing trajectories in the probability space via the so-called JKO scheme, which we study via its first-order optimality conditions, in light of few-weeks-old advancements in optimization in the probability space.

NeoRL: Efficient Exploration for Nonepisodic RL

Jun 04, 2024

Abstract:We study the problem of nonepisodic reinforcement learning (RL) for nonlinear dynamical systems, where the system dynamics are unknown and the RL agent has to learn from a single trajectory, i.e., without resets. We propose Nonepisodic Optimistic RL (NeoRL), an approach based on the principle of optimism in the face of uncertainty. NeoRL uses well-calibrated probabilistic models and plans optimistically w.r.t. the epistemic uncertainty about the unknown dynamics. Under continuity and bounded energy assumptions on the system, we provide a first-of-its-kind regret bound of $\mathcal{O}(\beta_T \sqrt{T \Gamma_T})$ for general nonlinear systems with Gaussian process dynamics. We compare NeoRL to other baselines on several deep RL environments and empirically demonstrate that NeoRL achieves the optimal average cost while incurring the least regret.
