Zhifeng Hao

Temporal Latent Variable Structural Causal Model for Causal Discovery under External Interferences

Nov 13, 2025

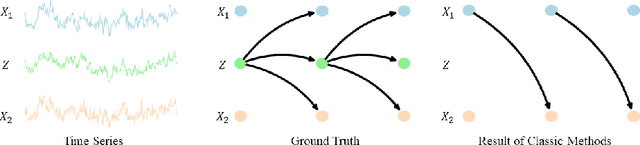

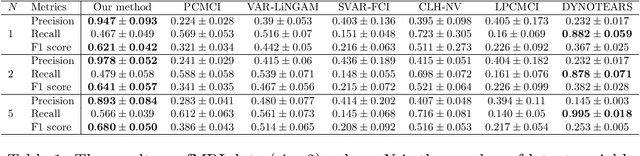

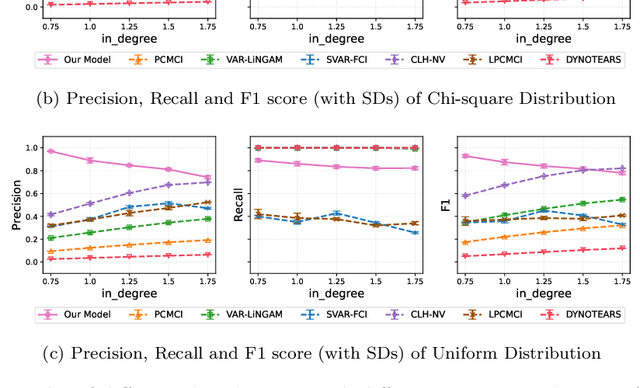

Abstract: Inferring causal relationships from observed data is an important task, yet it becomes challenging when the data is subject to various external interferences. Most of these interferences are the additional effects of external factors on observed variables. Since these external factors are often unknown, we introduce latent variables to represent these unobserved factors that affect the observed data. Specifically, to capture the causal strength and adjacency information, we propose a new temporal latent variable structural causal model, incorporating causal strength and adjacency coefficients that represent the causal relationships between variables. Considering that expert knowledge can provide information about unknown interferences in certain scenarios, we develop a method that facilitates the incorporation of prior knowledge into parameter learning based on Variational Inference, to guide the model estimation. Experimental results demonstrate the stability and accuracy of our proposed method.

Identification of Causal Direction under an Arbitrary Number of Latent Confounders

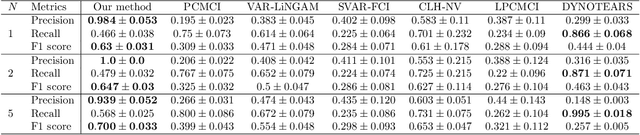

Oct 26, 2025

Abstract: Recovering causal structure in the presence of latent variables is an important but challenging task. While many methods have been proposed to handle it, most of them require strict and/or untestable assumptions on the causal structure. In real-world scenarios, observed variables may be affected by multiple latent variables simultaneously, which, generally speaking, cannot be handled by these methods. In this paper, we consider the linear, non-Gaussian case, and make use of the joint higher-order cumulant matrix of the observed variables constructed in a specific way. We show that, surprisingly, causal asymmetry between two observed variables can be directly seen from the rank deficiency properties of such higher-order cumulant matrices, even in the presence of an arbitrary number of latent confounders. Identifiability results are established, and the corresponding identification methods do not even involve iterative procedures. Experimental results demonstrate the effectiveness and asymptotic correctness of our proposed method.

Emotion Transfer with Enhanced Prototype for Unseen Emotion Recognition in Conversation

Aug 27, 2025

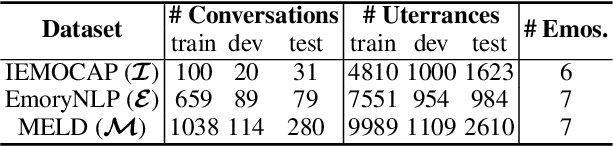

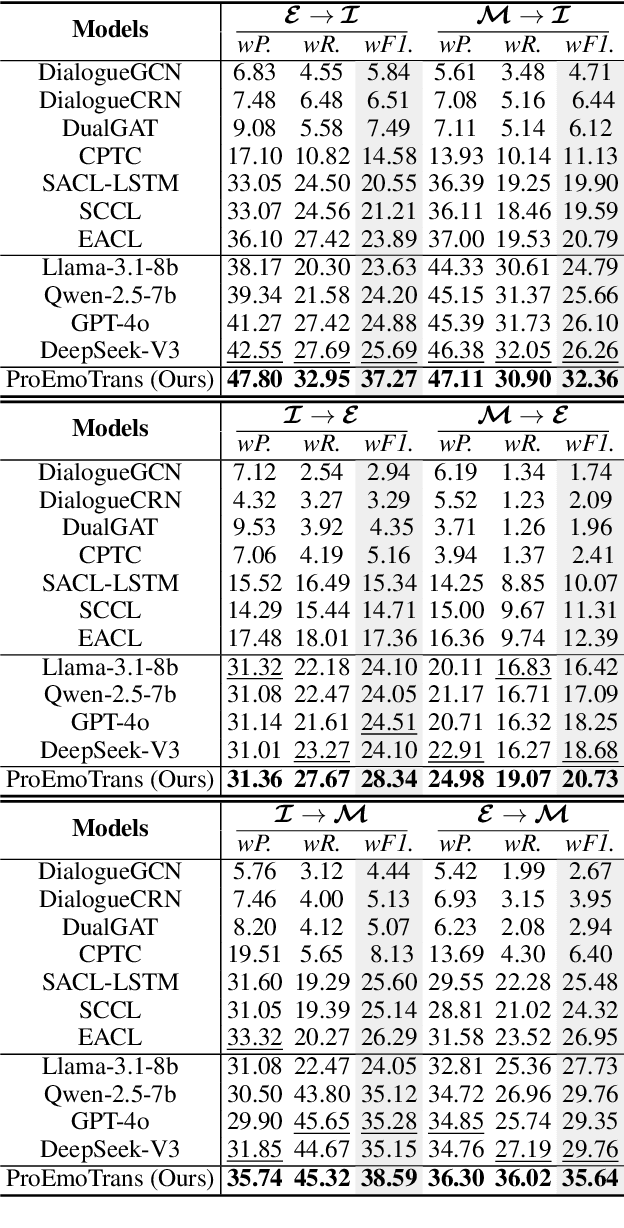

Abstract: Current Emotion Recognition in Conversation (ERC) research follows a closed-domain assumption. However, there is no clear consensus on emotion classification in psychology, which presents a challenge for models when it comes to recognizing previously unseen emotions in real-world applications. To bridge this gap, we introduce the Unseen Emotion Recognition in Conversation (UERC) task for the first time and propose ProEmoTrans, a solid prototype-based emotion transfer framework. This prototype-based approach shows promise but still faces key challenges: First, implicit expressions complicate emotion definition, which we address by proposing an LLM-enhanced description approach. Second, utterance encoding in long conversations is difficult, which we tackle with a proposed parameter-free mechanism for efficient encoding and overfitting prevention. Finally, the Markovian flow nature of emotions is hard to transfer, which we address with an improved Attention Viterbi Decoding (AVD) method to transfer seen emotion transitions to unseen emotions. Extensive experiments on three datasets show that our method serves as a strong baseline for preliminary exploration in this new area.

Dialogues Aspect-based Sentiment Quadruple Extraction via Structural Entropy Minimization Partitioning

Aug 07, 2025

Abstract: Dialogues Aspect-based Sentiment Quadruple Extraction (DiaASQ) aims to extract all target-aspect-opinion-sentiment quadruples from a given multi-round, multi-participant dialogue. Existing methods typically learn word relations across entire dialogues, assuming a uniform distribution of sentiment elements. However, we find that dialogues often contain multiple semantically independent sub-dialogues without clear dependencies between them. Therefore, learning word relationships across the entire dialogue inevitably introduces additional noise into the extraction process. To address this, our method focuses on partitioning dialogues into semantically independent sub-dialogues. Achieving completeness while minimizing these sub-dialogues presents a significant challenge. Simply partitioning based on reply relationships is ineffective. Instead, we propose utilizing a structural entropy minimization algorithm to partition the dialogues. This approach aims to preserve relevant utterances while distinguishing irrelevant ones as much as possible. Furthermore, we introduce a two-step framework for quadruple extraction: first extracting individual sentiment elements at the utterance level, then matching quadruples at the sub-dialogue level. Extensive experiments demonstrate that our approach achieves state-of-the-art performance in DiaASQ with much lower computational costs.

Horizontal and Vertical Federated Causal Structure Learning via Higher-order Cumulants

Jul 09, 2025

Abstract: Federated causal discovery aims to uncover the causal relationships between entities while protecting data privacy, which has significant importance and numerous applications in real-world scenarios. Existing federated causal structure learning methods primarily focus on horizontal federated settings. However, in practical situations, different clients may not necessarily contain data on the same variables. In a single client, the incomplete set of variables can easily lead to spurious causal relationships, thereby affecting the information transmitted to other clients. To address this issue, we comprehensively consider causal structure learning methods under both horizontal and vertical federated settings. We provide the identification theories and methods for learning causal structure in the horizontal and vertical federated settings via higher-order cumulants. Specifically, we first aggregate higher-order cumulant information from all participating clients to construct global cumulant estimates. These global estimates are then used for recursive source identification, ultimately yielding a global causal strength matrix. Our approach not only enables the reconstruction of causal graphs but also facilitates the estimation of causal strength coefficients. Our algorithm demonstrates superior performance in experiments conducted on both synthetic data and real-world data.
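The aggregation step mentioned in this abstract can be illustrated with a minimal sketch for the horizontal setting only, where every client holds the same two variables. This is not the authors' algorithm: the function names `client_summary` and `global_cumulant` are hypothetical, and the choice of the third-order cross-cumulant cum(a, a, b) is an assumption made for illustration. Each client shares only sums of raw moments, and the server combines them into a pooled cumulant estimate without seeing raw samples.

```python
import numpy as np

def client_summary(x):
    """Raw-moment sums one client would share (x has shape [n_samples, 2])."""
    a, b = x[:, 0], x[:, 1]
    return {
        "n": len(x),
        "a": a.sum(), "b": b.sum(),
        "aa": (a * a).sum(), "ab": (a * b).sum(),
        "aab": (a * a * b).sum(),
    }

def global_cumulant(summaries):
    """Pooled estimate of cum(a, a, b) = E[(a - E a)^2 (b - E b)]."""
    n = sum(s["n"] for s in summaries)
    # Global raw moments: add the per-client sums, then divide by the total count.
    m = {k: sum(s[k] for s in summaries) / n for k in ("a", "b", "aa", "ab", "aab")}
    # Third-order central moment expanded in terms of raw moments.
    return m["aab"] - m["b"] * m["aa"] - 2 * m["a"] * m["ab"] + 2 * m["a"] ** 2 * m["b"]

rng = np.random.default_rng(0)
# Three hypothetical clients with different sample sizes and non-Gaussian data.
clients = [rng.exponential(size=(n, 2)) for n in (200, 350, 500)]
estimate = global_cumulant([client_summary(x) for x in clients])

# Reference value computed on the pooled data, which the server never sees.
pooled = np.vstack(clients)
centered = pooled - pooled.mean(axis=0)
reference = np.mean(centered[:, 0] ** 2 * centered[:, 1])
```

Because third-order cumulants coincide with third-order central moments, `estimate` matches `reference` up to floating-point error. The vertical setting, where clients hold different variables, needs a different exchange and is not covered by this sketch.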

Running-time Analysis of $(\mu+\lambda)$ Evolutionary Combinatorial Optimization Based on Multiple-gain Estimation

Jul 03, 2025

Abstract: The running-time analysis of evolutionary combinatorial optimization is a fundamental topic in evolutionary computation. However, theoretical results regarding the $(\mu+\lambda)$ evolutionary algorithm (EA) for combinatorial optimization problems remain relatively scarce compared to those for simple pseudo-Boolean problems. This paper proposes a multiple-gain model to analyze the running time of EAs for combinatorial optimization problems. The proposed model is an improved version of the average gain model, which is a fitness-difference drift approach under the sigma-algebra condition to estimate the running time of evolutionary numerical optimization. The improvement yields a framework for estimating the expected first hitting time of a stochastic process in both average-case and worst-case scenarios. It also introduces novel running-time results of evolutionary combinatorial optimization, including two tighter time complexity upper bounds than the known results in the case of $(\mu+\lambda)$ EA for the knapsack problem with favorably correlated weights, a closed-form expression of the time complexity upper bound in the case of $(\mu+\lambda)$ EA for general $k$-MAX-SAT problems, and a tighter time complexity upper bound than the known results in the case of $(\mu+\lambda)$ EA for the traveling salesperson problem. Experimental results indicate that the practical running time aligns with the theoretical results, verifying that the multiple-gain model is an effective tool for running-time analysis of $(\mu+\lambda)$ EA for combinatorial optimization problems.

An Experimental Approach for Running-Time Estimation of Multi-objective Evolutionary Algorithms in Numerical Optimization

Jul 03, 2025

Abstract: Multi-objective evolutionary algorithms (MOEAs) have become essential tools for solving multi-objective optimization problems (MOPs), making their running time analysis crucial for assessing algorithmic efficiency and guiding practical applications. While significant theoretical advances have been achieved for combinatorial optimization, existing studies for numerical optimization primarily rely on algorithmic or problem simplifications, limiting their applicability to real-world scenarios. To address this gap, we propose an experimental approach for estimating upper bounds on the running time of MOEAs in numerical optimization without simplification assumptions. Our approach employs an average gain model that characterizes algorithmic progress through the Inverted Generational Distance metric. To handle the stochastic nature of MOEAs, we use statistical methods to estimate the probabilistic distribution of gains. Recognizing that gain distributions in numerical optimization exhibit irregular patterns with varying densities across different regions, we introduce an adaptive sampling method that dynamically adjusts sampling density to ensure accurate surface fitting for running time estimation. We conduct comprehensive experiments on five representative MOEAs (NSGA-II, MOEA/D, AR-MOEA, AGEMOEA-II, and PREA) using the ZDT and DTLZ benchmark suites. The results demonstrate the effectiveness of our approach in estimating upper bounds on the running time without requiring algorithmic or problem simplifications. Additionally, we provide a web-based implementation to facilitate broader adoption of our methodology. This work provides a practical complement to theoretical research on MOEAs in numerical optimization.

Causal-aware Large Language Models: Enhancing Decision-Making Through Learning, Adapting and Acting

May 30, 2025

Abstract: Large language models (LLMs) have shown great potential in decision-making due to the vast amount of knowledge stored within the models. However, these pre-trained models often lack reasoning abilities and are difficult to adapt to new environments, further hindering their application to complex real-world tasks. To address these challenges, inspired by the human cognitive process, we propose Causal-aware LLMs, which integrate the structural causal model (SCM) into the decision-making process to model, update, and utilize structured knowledge of the environment in a "learning-adapting-acting" paradigm. Specifically, in the learning stage, we first utilize an LLM to extract the environment-specific causal entities and their causal relations to initialize a structured causal model of the environment. Subsequently, in the adapting stage, we update the structured causal model through external feedback about the environment, using the idea of causal intervention. Finally, in the acting stage, Causal-aware LLMs exploit structured causal knowledge for more efficient policy-making through the reinforcement learning agent. The above processes are performed iteratively to learn causal knowledge, ultimately enabling the causal-aware LLMs to achieve a more accurate understanding of the environment and make more efficient decisions. Experimental results across 22 diverse tasks within the open-world game "Crafter" validate the effectiveness of our proposed method.

PrePrompt: Predictive prompting for class incremental learning

May 13, 2025

Abstract: Class Incremental Learning (CIL) based on pre-trained models offers a promising direction for open-world continual learning. Existing methods typically rely on correlation-based strategies, where an image's classification feature is used as a query to retrieve the most related key prompts and select the corresponding value prompts for training. However, these approaches face an inherent limitation: fitting the entire feature space of all tasks with only a few trainable prompts is fundamentally challenging. We propose Predictive Prompting (PrePrompt), a novel CIL framework that circumvents correlation-based limitations by leveraging pre-trained models' natural classification ability to predict task-specific prompts. Specifically, PrePrompt decomposes CIL into a two-stage prediction framework: task-specific prompt prediction followed by label prediction. While theoretically appealing, this framework risks bias toward recent classes due to missing historical data for older classifier calibration. PrePrompt then mitigates this by incorporating feature translation, dynamically balancing stability and plasticity. Experiments across multiple benchmarks demonstrate PrePrompt's superiority over state-of-the-art prompt-based CIL methods. The code will be released upon acceptance.

Causal View of Time Series Imputation: Some Identification Results on Missing Mechanism

May 12, 2025

Abstract: Time series imputation is one of the most challenging problems and has broad applications in various fields like health care and the Internet of Things. Existing methods mainly aim to model the temporally latent dependencies and the generation process from the observed time series data. In real-world scenarios, different types of missing mechanisms, like MAR (Missing At Random) and MNAR (Missing Not At Random), can occur in time series data. However, existing methods often overlook the differences among the aforementioned missing mechanisms and use a single model for time series imputation, which can easily lead to misleading results due to mechanism mismatch. In this paper, we propose a framework for the time series imputation problem by exploring Different Missing Mechanisms (DMM in short) and tailoring solutions accordingly. Specifically, we first analyze the data generation processes with temporal latent states and missing cause variables for different mechanisms. Subsequently, we model these generation processes via variational inference and estimate prior distributions of latent variables via a normalizing flow-based neural architecture. Furthermore, we establish identifiability results under the nonlinear independent component analysis framework to show that latent variables are identifiable. Experimental results show that our method surpasses existing time series imputation techniques across various datasets with different missing mechanisms, demonstrating its effectiveness in real-world applications.
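The distinction between MAR and MNAR drawn in this abstract can be made concrete with a small toy simulation. This is only an illustrative sketch, not the DMM framework: the function `make_masks`, the logistic missingness model, and the `strength` parameter are assumptions introduced here. Channel 0 is always observed, and channel 1 goes missing either as a function of channel 0 (MAR) or as a function of its own hidden value (MNAR).

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def make_masks(series, rng, strength=2.0):
    """series has shape [T, 2]; channel 0 is always observed.

    Returns two boolean masks for channel 1 (True means missing).
    """
    driver, target = series[:, 0], series[:, 1]
    u = rng.random(len(series))
    # MAR: missingness probability depends only on the fully observed channel.
    mar = u < sigmoid(strength * driver - 1.0)
    # MNAR: missingness probability depends on the value that gets hidden.
    mnar = u < sigmoid(strength * target - 1.0)
    return mar, mnar

rng = np.random.default_rng(0)
# Toy data: two independent standard-normal channels (no temporal structure).
series = rng.standard_normal((5000, 2))
mar, mnar = make_masks(series, rng)

# Mean of channel 1 computed from the values that remain observed.
observed_mean_mar = series[~mar, 1].mean()
observed_mean_mnar = series[~mnar, 1].mean()
```

In this toy setup the two channels are independent, so the observed mean of channel 1 under the MAR mask stays close to the true mean of zero, while under the MNAR mask large values are hidden preferentially and the observed mean is pulled clearly below zero. This is one simple way to see why a single imputation model can be misled when the mechanism is mismatched.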
