Yu-Xiang Wang

University of California Santa Barbara

ConvexBench: Can LLMs Recognize Convex Functions?

Feb 01, 2026

Abstract:Convex analysis is a modern branch of mathematics with many applications. As Large Language Models (LLMs) start to automate research-level math and sciences, it is important for LLMs to demonstrate the ability to understand and reason with convexity. We introduce ConvexBench, a scalable and mechanically verifiable benchmark for testing whether LLMs can identify the convexity of a symbolic objective under deep functional composition. Experiments on frontier LLMs reveal a sharp compositional reasoning gap: performance degrades rapidly with increasing depth, dropping from an F1-score of $1.0$ at depth $2$ to approximately $0.2$ at depth $100$. Inspection of models' reasoning traces indicates two failure modes: parsing failure and lazy reasoning. To address these limitations, we propose an agentic divide-and-conquer framework that (i) offloads parsing to an external tool to construct an abstract syntax tree (AST) and (ii) enforces recursive reasoning over each intermediate sub-expression with focused context. This framework reliably mitigates deep-composition failures, achieving substantial performance improvement at large depths (e.g., F1-score $= 1.0$ at depth $100$).
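The idea of parsing with an external tool and then reasoning recursively over each sub-expression can be illustrated with a minimal sketch. It assumes Python's standard `ast` module as the parser and a small hypothetical rule table (`ATOMS`) built from standard composition rules of convex analysis. It is not the benchmark's or the framework's actual implementation, and the rules are only sufficient conditions, so `"unknown"` means that these rules fail to certify convexity or concavity, not that the function is non-convex.

```python
import ast

# Hypothetical rule table: (curvature, monotonicity) of a few scalar atoms.
ATOMS = {
    "exp": ("convex", "increasing"),
    "log": ("concave", "increasing"),
    "abs": ("convex", "none"),
    "square": ("convex", "none"),
}

# Negation swaps convex and concave and leaves the other labels alone.
FLIP = {"convex": "concave", "concave": "convex",
        "affine": "affine", "unknown": "unknown"}


def add(a, b):
    """Curvature of a sum: affine is neutral, equal labels are preserved."""
    if a == "affine":
        return b
    if b == "affine":
        return a
    return a if a == b else "unknown"


def compose(outer, mono, inner):
    """Curvature of f(g(x)) for an atom f with curvature `outer`."""
    if inner == "affine":
        return outer  # composition with an affine map preserves curvature
    if mono == "increasing" and inner == outer:
        return outer  # e.g. convex increasing f applied to convex g
    return "unknown"


def curvature(node):
    """Recursively label each sub-expression of the parsed AST."""
    if isinstance(node, ast.Expression):
        return curvature(node.body)
    if isinstance(node, (ast.Name, ast.Constant)):
        return "affine"
    if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
        return FLIP[curvature(node.operand)]
    if isinstance(node, ast.BinOp) and isinstance(node.op, ast.Add):
        return add(curvature(node.left), curvature(node.right))
    if isinstance(node, ast.BinOp) and isinstance(node.op, ast.Sub):
        return add(curvature(node.left), FLIP[curvature(node.right)])
    if (isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
            and node.func.id in ATOMS and len(node.args) == 1):
        outer, mono = ATOMS[node.func.id]
        return compose(outer, mono, curvature(node.args[0]))
    return "unknown"


def classify(expr):
    """Parse a symbolic expression string and return its certified curvature."""
    return curvature(ast.parse(expr, mode="eval"))
```

For example, `classify("exp(abs(x) + square(x))")` is certified convex because each intermediate sub-expression is labeled before its parent, while `classify("abs(exp(x))")` returns `"unknown"` under this rule table because `abs` is not monotone.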

Private-RAG: Answering Multiple Queries with LLMs while Keeping Your Data Private

Nov 10, 2025

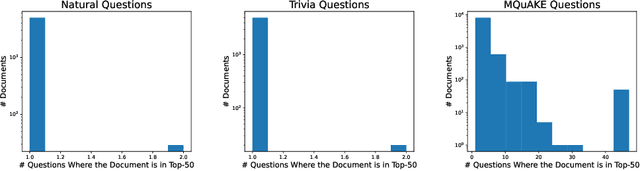

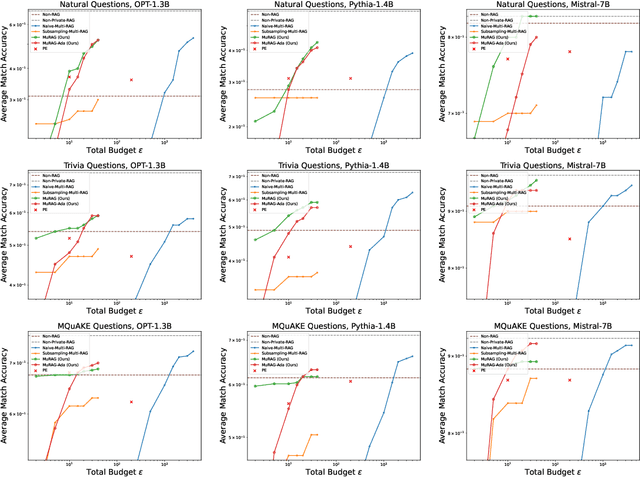

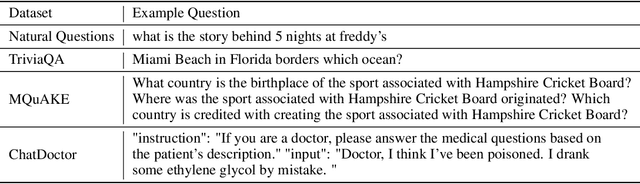

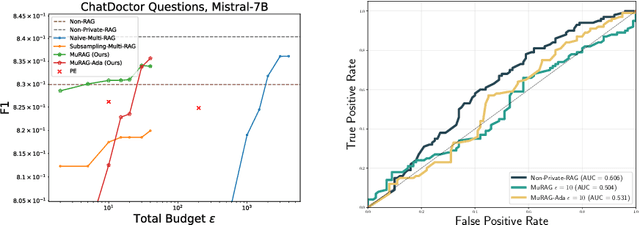

Abstract:Retrieval-augmented generation (RAG) enhances large language models (LLMs) by retrieving documents from an external corpus at inference time. When this corpus contains sensitive information, however, unprotected RAG systems are at risk of leaking private information. Prior work has introduced differential privacy (DP) guarantees for RAG, but only in single-query settings, which fall short of realistic usage. In this paper, we study the more practical multi-query setting and propose two DP-RAG algorithms. The first, MURAG, leverages an individual privacy filter so that the accumulated privacy loss only depends on how frequently each document is retrieved rather than the total number of queries. The second, MURAG-ADA, further improves utility by privately releasing query-specific thresholds, enabling more precise selection of relevant documents. Our experiments across multiple LLMs and datasets demonstrate that the proposed methods scale to hundreds of queries within a practical DP budget ($\varepsilon\approx10$), while preserving meaningful utility.

Stable Minima of ReLU Neural Networks Suffer from the Curse of Dimensionality: The Neural Shattering Phenomenon

Jun 25, 2025

Abstract:We study the implicit bias of flatness / low (loss) curvature and its effects on generalization in two-layer overparameterized ReLU networks with multivariate inputs -- a problem well motivated by the minima stability and edge-of-stability phenomena in gradient-descent training. Existing work either requires interpolation or focuses only on univariate inputs. This paper presents new and somewhat surprising theoretical results for multivariate inputs. In two natural settings, (1) the generalization gap for flat solutions and (2) the mean-squared error (MSE) in nonparametric function estimation by stable minima, we prove upper and lower bounds which establish that, while flatness does imply generalization, the resulting rates of convergence necessarily deteriorate exponentially as the input dimension grows. This gives an exponential separation between flat solutions and low-norm solutions (i.e., weight decay), which are known not to suffer from the curse of dimensionality. In particular, our minimax lower bound construction, based on a novel packing argument with boundary-localized ReLU neurons, reveals how flat solutions can exploit a kind of "neural shattering" where neurons rarely activate, but with high weight magnitudes. This leads to poor performance in high dimensions. We corroborate these theoretical findings with extensive numerical simulations. To the best of our knowledge, our analysis provides the first systematic explanation for why flat minima may fail to generalize in high dimensions.

Adapting to Linear Separable Subsets with Large-Margin in Differentially Private Learning

May 30, 2025

Abstract:This paper studies the problem of differentially private empirical risk minimization (DP-ERM) for binary linear classification. We obtain an efficient $(\varepsilon,\delta)$-DP algorithm with an empirical zero-one risk bound of $\tilde{O}\left(\frac{1}{\gamma^2\varepsilon n} + \frac{|S_{\mathrm{out}}|}{\gamma n}\right)$ where $n$ is the number of data points, $S_{\mathrm{out}}$ is an arbitrary subset of data one can remove and $\gamma$ is the margin of linear separation of the remaining data points (after $S_{\mathrm{out}}$ is removed). Here, $\tilde{O}(\cdot)$ hides only logarithmic terms. In the agnostic case, we improve the existing results when the number of outliers is small. Our algorithm is highly adaptive because it does not require knowing the margin parameter $\gamma$ or outlier subset $S_{\mathrm{out}}$. We also derive a utility bound for the advanced private hyperparameter tuning algorithm.

Adaptive Estimation and Learning under Temporal Distribution Shift

May 21, 2025

Abstract:In this paper, we study the problem of estimation and learning under temporal distribution shift. Consider an observation sequence of length $n$, which is a noisy realization of a time-varying groundtruth sequence. Our focus is to develop methods to estimate the groundtruth at the final time-step while providing sharp point-wise estimation error rates. We show that, without prior knowledge of the level of temporal shift, a wavelet soft-thresholding estimator provides an optimal estimation error bound for the groundtruth. Our proposed estimation method generalizes the existing work of Mazzetto and Upfal (2023) by establishing a connection between the sequence's non-stationarity level and the sparsity in the wavelet-transformed domain. Our theoretical findings are validated by numerical experiments. Additionally, we apply the estimator to derive sparsity-aware excess risk bounds for binary classification under distribution shift and to develop computationally efficient training objectives. As a final contribution, we draw parallels between our results and the classical signal processing problem of total-variation denoising (Mammen and van de Geer, 1997; Tibshirani, 2014), uncovering novel optimal algorithms for this task.
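To make the term "wavelet soft-thresholding" concrete, here is a minimal sketch using an orthonormal Haar transform in pure Python. The Haar basis, the function names, and the single fixed threshold `lam` are assumptions chosen for illustration; the paper's estimator, its wavelet family, and its threshold choice may differ.

```python
import math


def soft_threshold(c, lam):
    """Shrink a coefficient toward zero by lam, zeroing it if |c| <= lam."""
    return math.copysign(max(abs(c) - lam, 0.0), c)


def haar_forward(x):
    """Orthonormal Haar transform; len(x) must be a power of two."""
    approx, details = list(x), []
    while len(approx) > 1:
        pairs = list(zip(approx[0::2], approx[1::2]))
        # Detail coefficients capture local differences at this scale.
        details.append([(a - b) / math.sqrt(2) for a, b in pairs])
        # Approximation coefficients capture local averages.
        approx = [(a + b) / math.sqrt(2) for a, b in pairs]
    return approx, details  # details[0] is the finest scale


def haar_inverse(approx, details):
    """Invert haar_forward, rebuilding from the coarsest scale to the finest."""
    approx = list(approx)
    for level in reversed(details):
        rebuilt = []
        for s, w in zip(approx, level):
            rebuilt += [(s + w) / math.sqrt(2), (s - w) / math.sqrt(2)]
        approx = rebuilt
    return approx


def denoise(x, lam):
    """Soft-threshold all detail coefficients and reconstruct the sequence."""
    approx, details = haar_forward(x)
    shrunk = [[soft_threshold(w, lam) for w in level] for level in details]
    return haar_inverse(approx, shrunk)
```

In this sketch, an estimate of the groundtruth at the final time-step would simply be the last entry, `denoise(x, lam)[-1]`. A sequence with few changes has few nonzero detail coefficients, which is the kind of sparsity that thresholding exploits.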

Adapting to Online Distribution Shifts in Deep Learning: A Black-Box Approach

Apr 09, 2025

Abstract:We study the well-motivated problem of online distribution shift in which the data arrive in batches and the distribution of each batch can change arbitrarily over time. Since the shifts can be large or small, abrupt or gradual, the length of the relevant historical data to learn from may vary over time, which poses a major challenge in designing algorithms that can automatically adapt to the best "attention span" while remaining computationally efficient. We propose a meta-algorithm that takes any network architecture and any Online Learner (OL) algorithm as input and produces a new algorithm which provably enhances the performance of the given OL under non-stationarity. Our algorithm is efficient (it requires maintaining only $O(\log(T))$ OL instances) and adaptive (it automatically chooses OL instances with the ideal "attention" length at every timestamp). Experiments on various real-world datasets across text and image modalities show that our method consistently improves the accuracy of user-specified OL algorithms for classification tasks. Key novel algorithmic ingredients include a multi-resolution instance design inspired by wavelet theory and a cross-validation-through-time technique. Both could be of independent interest.

Purifying Approximate Differential Privacy with Randomized Post-processing

Mar 27, 2025

Abstract:We propose a framework to convert $(\varepsilon, \delta)$-approximate Differential Privacy (DP) mechanisms into $(\varepsilon, 0)$-pure DP mechanisms, a process we call "purification". This algorithmic technique leverages randomized post-processing with calibrated noise to eliminate the $\delta$ parameter while preserving utility. By combining the tighter utility bounds and computational efficiency of approximate DP mechanisms with the stronger guarantees of pure DP, our approach achieves the best of both worlds. We illustrate the applicability of this framework in various settings, including Differentially Private Empirical Risk Minimization (DP-ERM), data-dependent DP mechanisms such as Propose-Test-Release (PTR), and query release tasks. To the best of our knowledge, this is the first work to provide a systematic method for transforming approximate DP into pure DP while maintaining competitive accuracy and computational efficiency.

Dynamic Pricing with Adversarially-Censored Demands

Feb 10, 2025

Abstract:We study an online dynamic pricing problem where the potential demand at each time period $t=1,2,\ldots, T$ is stochastic and dependent on the price. However, a perishable inventory is imposed at the beginning of each time $t$, censoring the potential demand if it exceeds the inventory level. To address this problem, we introduce a pricing algorithm based on the optimistic estimates of derivatives. We show that our algorithm achieves $\tilde{O}(\sqrt{T})$ optimal regret even with adversarial inventory series. Our findings advance the state-of-the-art in online decision-making problems with censored feedback, offering a theoretically optimal solution against adversarial observations.

A Proximal Operator for Inducing 2:4-Sparsity

Jan 29, 2025

Abstract:Recent hardware advancements in AI accelerators and GPUs allow sparse matrix multiplications to be computed efficiently, especially when 2 out of 4 consecutive weights are set to zero. However, this so-called 2:4 sparsity usually comes at the cost of decreased model accuracy. We derive a regularizer that exploits the local correlation of features to find better sparsity masks in trained models. We minimize the regularizer jointly with a local squared loss by deriving the proximal operator, for which we show that it has an efficient solution in the 2:4-sparse case. After optimizing the mask, we use masked-gradient updates to further minimize the local squared loss. We illustrate our method on toy problems and apply it to pruning entire large language models up to 70B parameters. On models up to 13B we improve over previous state-of-the-art algorithms, whilst on 70B models we match their performance.
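The 2:4 pattern itself is easy to show in code. The sketch below is a plain magnitude-based projection, assumed here only as a simple baseline: in each group of 4 consecutive weights it keeps the 2 largest in absolute value and zeroes the rest. It is not the regularizer or proximal operator derived in the paper, and the function name `project_2_4` is hypothetical.

```python
def project_2_4(weights):
    """Zero the 2 smallest-magnitude entries in each group of 4 consecutive weights."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    out = []
    for i in range(0, len(weights), 4):
        group = weights[i:i + 4]
        # Indices of the two largest-magnitude weights in this group.
        keep = sorted(range(4), key=lambda j: abs(group[j]), reverse=True)[:2]
        out += [w if j in keep else 0.0 for j, w in enumerate(group)]
    return out
```

Every group of four in the result has at most two nonzero entries, which is the structure that sparse matrix-multiplication hardware expects.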

Joint Pricing and Resource Allocation: An Optimal Online-Learning Approach

Jan 29, 2025

Abstract:We study an online learning problem on dynamic pricing and resource allocation, where we make joint pricing and inventory decisions to maximize the overall net profit. We consider the stochastic dependence of demands on the price, which complicates the resource allocation process and introduces significant non-convexity and non-smoothness to the problem. To solve this problem, we develop an efficient algorithm that utilizes a "Lower-Confidence Bound (LCB)" meta-strategy over multiple OCO agents. Our algorithm achieves $\tilde{O}(\sqrt{Tmn})$ regret (for $m$ suppliers and $n$ consumers), which is optimal with respect to the time horizon $T$. Our results illustrate an effective integration of statistical learning methodologies with complex operations research problems.
