Jiashuo Jiang

Ask, Clarify, Optimize: Human-LLM Agent Collaboration for Smarter Inventory Control

Dec 31, 2025

Abstract:Inventory management remains a challenge for many small and medium-sized businesses that lack the expertise to deploy advanced optimization methods. This paper investigates whether Large Language Models (LLMs) can help bridge this gap. We show that employing LLMs as direct, end-to-end solvers incurs a significant "hallucination tax": a performance gap arising from the model's inability to perform grounded stochastic reasoning. To address this, we propose a hybrid agentic framework that strictly decouples semantic reasoning from mathematical calculation. In this architecture, the LLM functions as an intelligent interface, eliciting parameters from natural language and interpreting results while automatically calling rigorous algorithms to build the optimization engine. To evaluate this interactive system against the ambiguity and inconsistency of real-world managerial dialogue, we introduce the Human Imitator, a fine-tuned "digital twin" of a boundedly rational manager that enables scalable, reproducible stress-testing. Our empirical analysis reveals that the hybrid agentic framework reduces total inventory costs by 32.1% relative to an interactive baseline using GPT-4o as an end-to-end solver. Moreover, we find that providing perfect ground-truth information alone is insufficient to improve GPT-4o's performance, confirming that the bottleneck is fundamentally computational rather than informational. Our results position LLMs not as replacements for operations research, but as natural-language interfaces that make rigorous, solver-based policies accessible to non-experts.
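The separation of parameter elicitation from calculation described in this abstract can be illustrated with a minimal sketch. The abstract does not say which algorithms the framework calls, so the classical single-period newsvendor formula is used here purely as a stand-in; the function name `newsvendor_order_quantity`, the `elicited` dictionary, and all numeric values are hypothetical.

```python
from statistics import NormalDist


def newsvendor_order_quantity(mean_demand, std_demand, unit_cost, price, salvage=0.0):
    """Classical newsvendor order quantity under normally distributed demand.

    This is a textbook stand-in for the "rigorous algorithm" side of a hybrid
    system, not the method used in the paper.
    """
    underage = price - unit_cost   # margin lost per unit of unmet demand
    overage = unit_cost - salvage  # loss per unsold unit
    if underage <= 0 or overage <= 0:
        raise ValueError("need salvage < unit_cost < price")
    critical_ratio = underage / (underage + overage)
    # Order up to the critical-ratio quantile of the demand distribution.
    return NormalDist(mean_demand, std_demand).inv_cdf(critical_ratio)


# Hypothetical parameters that a language-model front end might extract
# from a manager's free-text description; the solver never sees the text.
elicited = {"mean_demand": 100.0, "std_demand": 20.0, "unit_cost": 6.0, "price": 10.0}
order_qty = newsvendor_order_quantity(**elicited)
```

The point of the sketch is the division of labour: the dictionary is the only hand-off between the language side and the numerical side, so the order quantity is computed by a deterministic formula and not generated as text.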

A Lyapunov Drift-Plus-Penalty Method Tailored for Reinforcement Learning with Queue Stability

Jun 04, 2025

Abstract:With the proliferation of Internet of Things (IoT) devices, the demand for addressing complex optimization challenges has intensified. The Lyapunov Drift-Plus-Penalty algorithm is a widely adopted approach for ensuring queue stability, and some research has preliminarily explored its integration with reinforcement learning (RL). In this paper, we investigate the adaptation of the Lyapunov Drift-Plus-Penalty algorithm for RL applications and, through rigorous theoretical analysis, derive an effective method for combining the two under a set of common and reasonable conditions. Unlike existing approaches that directly merge the two frameworks, our proposed algorithm, the Lyapunov drift-plus-penalty method tailored for reinforcement learning with queue stability (LDPTRLQ), offers theoretical superiority by effectively balancing the greedy optimization of Lyapunov Drift-Plus-Penalty with the long-term perspective of RL. Simulation results for multiple problems demonstrate that LDPTRLQ outperforms baseline methods based on the Lyapunov drift-plus-penalty method and on RL, corroborating the validity of our theoretical derivations. The results also demonstrate that our proposed algorithm outperforms other benchmarks in terms of compatibility and stability.
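For readers unfamiliar with the greedy rule that this abstract contrasts with RL, the following is a minimal sketch of the classical drift-plus-penalty decision for a single queue. It is not LDPTRLQ; the action set, the trade-off weight `V`, the Bernoulli arrival model, and all function names are assumptions made for illustration.

```python
import random


def drift_plus_penalty_action(queue, actions, V):
    """Greedy per-slot rule: minimize V * penalty - queue * service.

    The arrival term of the drift bound does not depend on the action,
    so it is dropped from the minimization.
    """
    return min(actions, key=lambda a: V * a["penalty"] - queue * a["service"])


def simulate(steps=10_000, V=10.0, arrival_prob=0.5, seed=0):
    """Run the rule on one queue with Bernoulli arrivals (hypothetical setup)."""
    rng = random.Random(seed)
    actions = [
        {"service": 0.0, "penalty": 0.0},  # idle
        {"service": 1.0, "penalty": 1.0},  # serve one unit
        {"service": 2.0, "penalty": 3.0},  # serve two units at higher cost
    ]
    queue = total_penalty = total_queue = 0.0
    for _ in range(steps):
        action = drift_plus_penalty_action(queue, actions, V)
        arrival = 1.0 if rng.random() < arrival_prob else 0.0
        # Standard queue update: backlog cannot go negative.
        queue = max(queue + arrival - action["service"], 0.0)
        total_penalty += action["penalty"]
        total_queue += queue
    return total_penalty / steps, total_queue / steps


avg_penalty, avg_queue = simulate()
```

A larger `V` makes the rule tolerate a longer backlog before paying to serve, which is the penalty-versus-stability trade-off that the per-slot rule resolves myopically.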

Adaptive Resolving Methods for Reinforcement Learning with Function Approximations

May 17, 2025

Abstract:Reinforcement learning (RL) problems are fundamental in online decision-making and have been instrumental in finding an optimal policy for Markov decision processes (MDPs). Function approximations are usually deployed to handle large or infinite state-action spaces. In our work, we consider RL problems with function approximation and develop a new algorithm to solve them efficiently. Our algorithm is based on the linear programming (LP) reformulation and re-solves the LP at each iteration as new data arrive. Such a re-solving scheme enables our algorithm to achieve an instance-dependent sample complexity guarantee: more precisely, given $N$ data points, the output of our algorithm enjoys an instance-dependent $\tilde{O}(1/N)$ suboptimality gap. In comparison to the $O(1/\sqrt{N})$ worst-case guarantee established in the previous literature, our instance-dependent guarantee is tighter when the underlying instance is favorable, and the numerical experiments also demonstrate the efficient empirical performance of our algorithm.

Adaptive Bidding Policies for First-Price Auctions with Budget Constraints under Non-stationarity

May 05, 2025

Abstract:We study how a budget-constrained bidder should learn to adaptively bid in repeated first-price auctions to maximize her cumulative payoff. This problem arises from the recent industry-wide shift from second-price auctions to first-price auctions in display advertising, which renders truthful bidding (i.e., always bidding one's private value) no longer optimal. We propose a simple dual-gradient-descent-based bidding policy that maintains a dual variable for the budget constraint as the bidder consumes her budget. In the analysis, we consider two settings regarding the bidder's knowledge of her private values in the future: (i) an uninformative setting where the distributional knowledge (which can be non-stationary) is entirely unknown to the bidder, and (ii) an informative setting where a prediction of the budget allocation is available in advance. We characterize the performance loss (or regret) relative to an optimal policy with complete information on the stochasticity. For the uninformative setting, we show that the regret is $\tilde{O}(\sqrt{T})$ plus a variation term that reflects the non-stationarity of the value distributions, and this is of optimal order. We then show that we can get rid of the variation term with the help of the prediction; specifically, the regret is $\tilde{O}(\sqrt{T})$ plus the prediction error term in the informative setting.

Efficiently Solving Discounted MDPs with Predictions on Transition Matrices

Feb 21, 2025

Abstract:We study infinite-horizon Discounted Markov Decision Processes (DMDPs) under a generative model. Motivated by the Algorithm with Advice framework (Mitzenmacher and Vassilvitskii, 2022), we propose a novel framework to investigate how a prediction on the transition matrix can enhance the sample efficiency in solving DMDPs and improve sample complexity bounds. We focus on DMDPs with $N$ state-action pairs and discount factor $\gamma$. First, we provide an impossibility result: without prior knowledge of the prediction accuracy, no sampling policy can compute an $\epsilon$-optimal policy with a sample complexity bound better than $\tilde{O}((1-\gamma)^{-3} N\epsilon^{-2})$, which matches the state-of-the-art minimax sample complexity bound with no prediction. As a complement, we propose an algorithm based on minimax optimization techniques that leverages the prediction on the transition matrix. Our algorithm achieves a sample complexity bound depending on the prediction error, and the bound is uniformly better than $\tilde{O}((1-\gamma)^{-4} N \epsilon^{-2})$, the previous best result derived from convex optimization methods. These theoretical findings are further supported by our numerical experiments.

Online Scheduling for LLM Inference with KV Cache Constraints

Feb 10, 2025

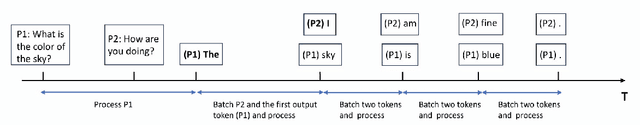

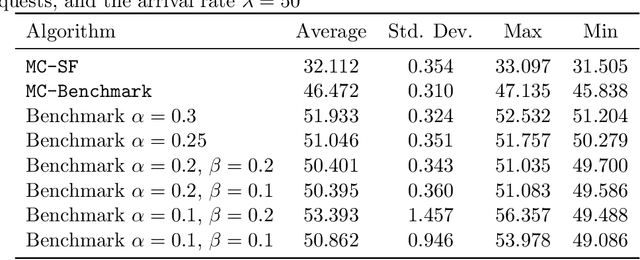

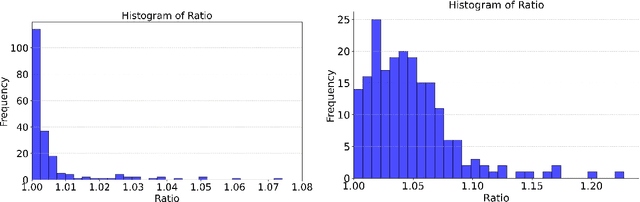

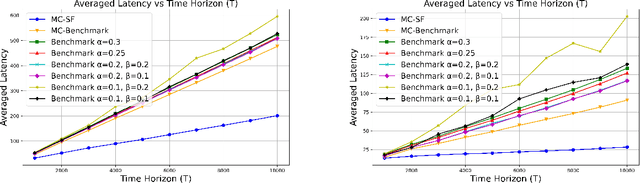

Abstract:Large Language Model (LLM) inference, where a trained model generates text one word at a time in response to user prompts, is a computationally intensive process requiring efficient scheduling to optimize latency and resource utilization. A key challenge in LLM inference is the management of the Key-Value (KV) cache, which reduces redundant computations but introduces memory constraints. In this work, we model LLM inference with KV cache constraints theoretically and propose novel batching and scheduling algorithms that minimize inference latency while effectively managing the KV cache's memory. We analyze both semi-online and fully online scheduling models, and our results are threefold. First, we provide a polynomial-time algorithm that achieves exact optimality in terms of average latency in the semi-online prompt arrival model. Second, in the fully online case with a stochastic prompt arrival, we introduce an efficient online scheduling algorithm with constant regret. Third, we prove that no algorithm (deterministic or randomized) can achieve a constant competitive ratio in fully online adversarial settings. Our empirical evaluations on a public LLM inference dataset, using the Llama-70B model on A100 GPUs, show that our approach significantly outperforms benchmark algorithms used currently in practice, achieving lower latency while reducing energy consumption. Overall, our results offer a path toward more sustainable and cost-effective LLM deployment.

Joint Pricing and Resource Allocation: An Optimal Online-Learning Approach

Jan 29, 2025

Abstract:We study an online learning problem on dynamic pricing and resource allocation, where we make joint pricing and inventory decisions to maximize the overall net profit. We consider the stochastic dependence of demands on the price, which complicates the resource allocation process and introduces significant non-convexity and non-smoothness to the problem. To solve this problem, we develop an efficient algorithm that utilizes a "Lower-Confidence Bound (LCB)" meta-strategy over multiple OCO agents. Our algorithm achieves $\tilde{O}(\sqrt{Tmn})$ regret (for $m$ suppliers and $n$ consumers), which is optimal with respect to the time horizon $T$. Our results illustrate an effective integration of statistical learning methodologies with complex operations research problems.

Learning to Price with Resource Constraints: From Full Information to Machine-Learned Prices

Jan 24, 2025

Abstract:We study the dynamic pricing problem with knapsack constraints, addressing the challenge of balancing exploration and exploitation under resource constraints. We introduce three algorithms tailored to different informational settings: a Boundary Attracted Re-solve Method for full information, an online learning algorithm for scenarios with no prior information, and an estimate-then-select re-solve algorithm that leverages machine-learned informed prices with a known upper bound on the estimation error. The Boundary Attracted Re-solve Method achieves logarithmic regret without requiring the non-degeneracy condition, while the online learning algorithm attains an optimal $O(\sqrt{T})$ regret. Our estimate-then-select approach bridges the gap between these settings, providing improved regret bounds when reliable offline data is available. Numerical experiments validate the effectiveness and robustness of our algorithms across various scenarios. This work advances the understanding of online resource allocation and dynamic pricing, offering practical solutions adaptable to different informational structures.

Regret Minimization and Statistical Inference in Online Decision Making with High-dimensional Covariates

Nov 10, 2024

Abstract:This paper investigates regret minimization, statistical inference, and their interplay in high-dimensional online decision-making based on the sparse linear context bandit model. We integrate the $\varepsilon$-greedy bandit algorithm for decision-making with a hard thresholding algorithm for estimating sparse bandit parameters and introduce an inference framework based on a debiasing method using inverse propensity weighting. Under a margin condition, our method achieves either $O(T^{1/2})$ regret or classical $O(T^{1/2})$-consistent inference, indicating an unavoidable trade-off between exploration and exploitation. If a diverse covariate condition holds, we demonstrate that a pure-greedy bandit algorithm, i.e., exploration-free, combined with a debiased estimator based on average weighting can simultaneously achieve optimal $O(\log T)$ regret and $O(T^{1/2})$-consistent inference. We also show that a simple sample mean estimator can provide valid inference for the optimal policy's value. Numerical simulations and experiments on Warfarin dosing data validate the effectiveness of our methods.

Reinforcement Learning with Intrinsically Motivated Feedback Graph for Lost-sales Inventory Control

Jun 26, 2024

Abstract:Reinforcement learning (RL) has proven to perform well and to be general-purpose in inventory control (IC). However, further improvement of RL algorithms in the IC domain is impeded by two limitations of online experience. First, online experience is expensive to acquire in real-world applications. Given the low sample efficiency of RL algorithms, it would take extensive time to train the RL policy to convergence. Second, online experience may not reflect the true demand due to the lost-sales phenomenon typical in IC, which makes the learning process more challenging. To address the above challenges, we propose a decision framework that combines reinforcement learning with feedback graph (RLFG) and intrinsically motivated exploration (IME) to boost sample efficiency. In particular, we first take advantage of the inherent properties of lost-sales IC problems and design a feedback graph (FG) specifically for these problems to generate abundant side experiences that aid RL updates. Then we conduct a rigorous theoretical analysis of how the designed FG reduces the sample complexity of RL methods. Based on the theoretical insights, we design an intrinsic reward that directs the RL agent to explore regions of the state-action space with more side experiences, further exploiting the FG's power. Experimental results demonstrate that our method greatly improves the sample efficiency of applying RL in IC. Our code is available at https://anonymous.4open.science/r/RLIMFG4IC-811D/
