Yongxin Guo

Multi-Physics: A Comprehensive Benchmark for Multimodal LLMs Reasoning on Chinese Multi-Subject Physics Problems

Sep 19, 2025

Abstract: While multimodal LLMs (MLLMs) demonstrate remarkable reasoning progress, their application in specialized scientific domains like physics reveals significant gaps in current evaluation benchmarks. Specifically, existing benchmarks often lack fine-grained subject coverage, neglect the step-by-step reasoning process, and are predominantly English-centric, failing to systematically evaluate the role of visual information. Therefore, we introduce Multi-Physics for Chinese physics reasoning, a comprehensive benchmark that includes 5 difficulty levels, featuring 1,412 image-associated, multiple-choice questions spanning 11 high-school physics subjects. We employ a dual evaluation framework to evaluate 20 different MLLMs, analyzing both final answer accuracy and the step-by-step integrity of their chain-of-thought. Furthermore, we systematically study the impact of difficulty level and visual information by comparing the model performance before and after changing the input mode. Our work not only provides a fine-grained resource for the community but also offers a robust methodology for dissecting the multimodal reasoning process of state-of-the-art MLLMs. Our dataset and code have been open-sourced: https://github.com/luozhongze/Multi-Physics.

Adaptively Distilled ControlNet: Accelerated Training and Superior Sampling for Medical Image Synthesis

Jul 31, 2025

Abstract: Medical image annotation is constrained by privacy concerns and labor-intensive labeling, significantly limiting the performance and generalization of segmentation models. While mask-controllable diffusion models excel in synthesis, they struggle with precise lesion-mask alignment. We propose Adaptively Distilled ControlNet, a task-agnostic framework that accelerates training and optimization through dual-model distillation. Specifically, during training, a teacher model, conditioned on mask-image pairs, regularizes a mask-only student model via predicted noise alignment in parameter space, further enhanced by adaptive regularization based on lesion-background ratios. During sampling, only the student model is used, enabling privacy-preserving medical image generation. Comprehensive evaluations on two distinct medical datasets demonstrate state-of-the-art performance: TransUNet improves mDice/mIoU by 2.4%/4.2% on KiTS19, while SANet achieves 2.6%/3.5% gains on Polyps, highlighting its effectiveness and superiority. Code is available at GitHub.

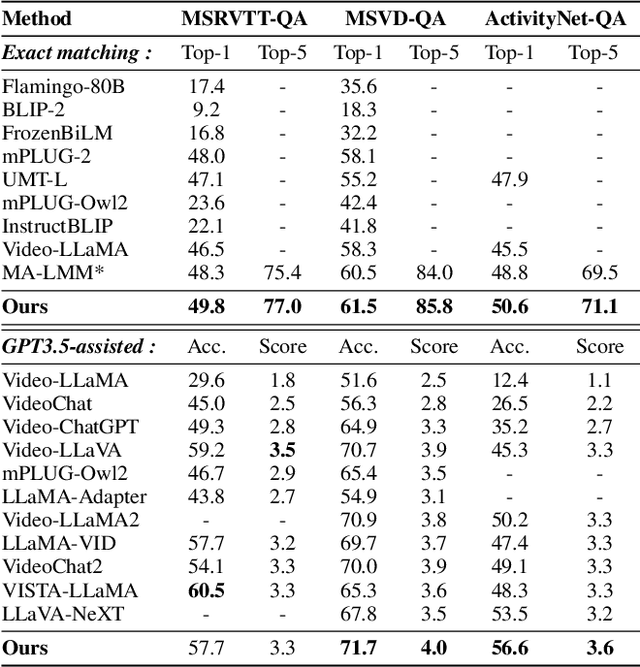

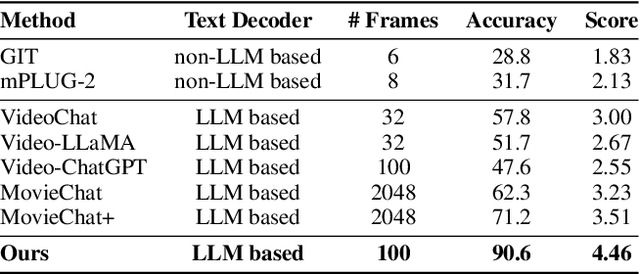

Watch and Listen: Understanding Audio-Visual-Speech Moments with Multimodal LLM

May 23, 2025

Abstract: Humans naturally understand moments in a video by integrating visual and auditory cues. For example, localizing a scene in the video like "A scientist passionately speaks on wildlife conservation as dramatic orchestral music plays, with the audience nodding and applauding" requires simultaneous processing of visual, audio, and speech signals. However, existing models often struggle to effectively fuse and interpret audio information, limiting their capacity for comprehensive video temporal understanding. To address this, we present TriSense, a triple-modality large language model designed for holistic video temporal understanding through the integration of visual, audio, and speech modalities. Central to TriSense is a Query-Based Connector that adaptively reweights modality contributions based on the input query, enabling robust performance under modality dropout and allowing flexible combinations of available inputs. To support TriSense's multimodal capabilities, we introduce TriSense-2M, a high-quality dataset of over 2 million curated samples generated via an automated pipeline powered by fine-tuned LLMs. TriSense-2M includes long-form videos and diverse modality combinations, facilitating broad generalization. Extensive experiments across multiple benchmarks demonstrate the effectiveness of TriSense and its potential to advance multimodal video analysis. Code and dataset will be publicly released.

Noise-Consistent Siamese-Diffusion for Medical Image Synthesis and Segmentation

May 09, 2025

Abstract: Deep learning has revolutionized medical image segmentation, yet its full potential remains constrained by the paucity of annotated datasets. While diffusion models have emerged as a promising approach for generating synthetic image-mask pairs to augment these datasets, they paradoxically suffer from the same data scarcity challenges they aim to mitigate. Traditional mask-only models frequently yield low-fidelity images due to their inability to adequately capture morphological intricacies, which can critically compromise the robustness and reliability of segmentation models. To alleviate this limitation, we introduce Siamese-Diffusion, a novel dual-component model comprising Mask-Diffusion and Image-Diffusion. During training, a Noise Consistency Loss is introduced between these components to enhance the morphological fidelity of Mask-Diffusion in the parameter space. During sampling, only Mask-Diffusion is used, ensuring diversity and scalability. Comprehensive experiments demonstrate the superiority of our method. Siamese-Diffusion boosts SANet's mDice and mIoU by 3.6% and 4.4% on Polyps, while UNet improves by 1.52% and 1.64% on ISIC2018. Code is available at GitHub.

FedMABA: Towards Fair Federated Learning through Multi-Armed Bandits Allocation

Oct 26, 2024

Abstract: The increasing concern for data privacy has driven the rapid development of federated learning (FL), a privacy-preserving collaborative paradigm. However, the statistical heterogeneity among clients in FL results in inconsistent performance of the server model across various clients. The server model may show favoritism towards certain clients while performing poorly for others, heightening the challenge of fairness. In this paper, we reconsider the inconsistency in client performance distribution and introduce the concept of adversarial multi-armed bandit to optimize the proposed objective with explicit constraints on performance disparities. Practically, we propose a novel multi-armed bandit-based allocation FL algorithm (FedMABA) to mitigate performance unfairness among diverse clients with different data distributions. Extensive experiments in different Non-I.I.D. scenarios demonstrate the exceptional performance of FedMABA in enhancing fairness.

TRACE: Temporal Grounding Video LLM via Causal Event Modeling

Oct 08, 2024

Abstract: Video Temporal Grounding (VTG) is a crucial capability for video understanding models and plays a vital role in downstream tasks such as video browsing and editing. To effectively handle various tasks simultaneously and enable zero-shot prediction, there is a growing trend in employing video LLMs for VTG tasks. However, current video LLM-based methods rely exclusively on natural language generation, lacking the ability to model the clear structure inherent in videos, which restricts their effectiveness in tackling VTG tasks. To address this issue, this paper first formally introduces a causal event modeling framework, which represents videos as sequences of events and predicts the current event using previous events, video inputs, and textual instructions. Each event consists of three components: timestamps, salient scores, and textual captions. We then propose a novel task-interleaved video LLM called TRACE to effectively implement the causal event modeling framework in practice. TRACE processes visual frames, timestamps, salient scores, and text as distinct tasks, employing various encoders and decoding heads for each. Task tokens are arranged in an interleaved sequence according to the causal event modeling framework's formulation. Extensive experiments on various VTG tasks and datasets demonstrate the superior performance of TRACE compared to state-of-the-art video LLMs. Our model and code are available at https://github.com/gyxxyg/TRACE.

Enhancing Long Video Understanding via Hierarchical Event-Based Memory

Sep 10, 2024

Abstract: Recently, integrating visual foundation models into large language models (LLMs) to form video understanding systems has attracted widespread attention. Most of the existing models compress diverse semantic information within the whole video and feed it into LLMs for content comprehension. While this method excels in short video understanding, it may result in a blend of multiple event information in long videos due to coarse compression, which causes information redundancy. Consequently, the semantics of key events might be obscured within the vast amount of information, which hinders the model's understanding capabilities. To address this issue, we propose a Hierarchical Event-based Memory-enhanced LLM (HEM-LLM) for better understanding of long videos. Firstly, we design a novel adaptive sequence segmentation scheme to divide multiple events within long videos. In this way, we can perform individual memory modeling for each event to establish intra-event contextual connections, thereby reducing information redundancy. Secondly, while modeling the current event, we compress and inject the information of the previous event to enhance the long-term inter-event dependencies in videos. Finally, we perform extensive experiments on various video understanding tasks, and the results show that our model achieves state-of-the-art performance.

Innovative RIS Prototyping Enhancing Wireless Communication with Real-Time Spot Beam Tracking and OAM Wavefront Manipulation

Jul 28, 2024

Abstract: This paper presents a sophisticated reconfigurable metasurface architecture that introduces an advanced concept of flexible full-array space-time wavefront manipulation with enhanced dynamic capabilities. The practical 2-bit phase-shifting unit cell on the RIS is distinguished by its ability to maintain four stable phase states, each separated by $90^\circ$, and features an insertion loss of less than 0.6 dB across a bandwidth of 200 MHz. All reconfigurable units are equipped with meticulously designed control circuits, governed by an intelligent core composed of multiple Micro-Controller Units (MCUs), enabling rapid control response across the entire RIS array. Owing to the capability of each unit cell on the metasurface to independently switch states, the entire RIS is not limited to controlling general beams with specific directional patterns, but can also generate beams with more complex structures, including multi-focus 3D spot beams and vortex beams. This development substantially broadens its applicability across various industrial wireless transmission scenarios. Moreover, by leveraging the rapid-response space-time coding and the full-array independent programmability of the RIS prototype operating at 10.7 GHz, we have demonstrated that: 1) 3D spot beam scanning facilitates dynamic beam tracking and real-time communication in an indoor near-field scenario; 2) the rapid wavefront rotation of vortex beams enables precise modulation of signals within the Doppler domain, showcasing an innovative approach to wireless signal manipulation; 3) beam steering experiments for blocked users in outdoor far-field scenarios verify the beamforming capability of the RIS board.

Smart Sampling: Helping from Friendly Neighbors for Decentralized Federated Learning

Jul 05, 2024

Abstract: Federated Learning (FL) is gaining widespread interest for its ability to share knowledge while preserving privacy and reducing communication costs. Unlike Centralized FL, Decentralized FL (DFL) employs a network architecture that eliminates the need for a central server, allowing direct communication among clients and leading to significant communication resource savings. However, due to data heterogeneity, not all neighboring nodes contribute to enhancing the local client's model performance. In this work, we introduce AFIND+, a simple yet efficient algorithm for sampling and aggregating neighbors in DFL, with the aim of leveraging collaboration to improve clients' model performance. AFIND+ identifies helpful neighbors, adaptively adjusts the number of selected neighbors, and strategically aggregates the sampled neighbors' models based on their contributions. Numerical results on real-world datasets with diverse data partitions demonstrate that AFIND+ outperforms other sampling algorithms in DFL and is compatible with most existing DFL optimization algorithms.

Client2Vec: Improving Federated Learning by Distribution Shifts Aware Client Indexing

May 25, 2024

Abstract: Federated Learning (FL) is a privacy-preserving distributed machine learning paradigm. Nonetheless, the substantial distribution shifts among clients pose a considerable challenge to the performance of current FL algorithms. To mitigate this challenge, various methods have been proposed to enhance the FL training process. This paper endeavors to tackle the issue of data heterogeneity from another perspective -- by improving FL algorithms prior to the actual training stage. Specifically, we introduce the Client2Vec mechanism, which generates a unique client index for each client before the commencement of FL training. Subsequently, we leverage the generated client index to enhance the subsequent FL training process. To demonstrate the effectiveness of the proposed Client2Vec method, we conduct three case studies that assess the impact of the client index on the FL training process. These case studies encompass enhanced client sampling, model aggregation, and local training. Extensive experiments conducted on diverse datasets and model architectures show the efficacy of Client2Vec across all three case studies. Our code is available at https://github.com/LINs-lab/client2vec.
