Yin Huang

WearVox: An Egocentric Multichannel Voice Assistant Benchmark for Wearables

Dec 25, 2025

Abstract: Wearable devices such as AI glasses are transforming voice assistants into always-available, hands-free collaborators that integrate seamlessly with daily life, but they also introduce challenges like egocentric audio affected by motion and noise, rapid micro-interactions, and the need to distinguish device-directed speech from background conversations. Existing benchmarks largely overlook these complexities, focusing instead on clean or generic conversational audio. To bridge this gap, we present WearVox, the first benchmark designed to rigorously evaluate voice assistants in realistic wearable scenarios. WearVox comprises 3,842 multi-channel, egocentric audio recordings collected via AI glasses across five diverse tasks including Search-Grounded QA, Closed-Book QA, Side-Talk Rejection, Tool Calling, and Speech Translation, spanning a wide range of indoor and outdoor environments and acoustic conditions. Each recording is accompanied by rich metadata, enabling nuanced analysis of model performance under real-world constraints. We benchmark leading proprietary and open-source speech Large Language Models (SLLMs) and find that most real-time SLLMs achieve accuracies on WearVox ranging from 29% to 59%, with substantial performance degradation on noisy outdoor audio, underscoring the difficulty and realism of the benchmark. Additionally, we conduct a case study with two new SLLMs that perform inference with single-channel and multi-channel audio, demonstrating that multi-channel audio inputs significantly enhance model robustness to environmental noise and improve discrimination between device-directed and background speech. Our results highlight the critical importance of spatial audio cues for context-aware voice assistants and establish WearVox as a comprehensive testbed for advancing wearable voice AI research.

Parking Availability Prediction via Fusing Multi-Source Data with A Self-Supervised Learning Enhanced Spatio-Temporal Inverted Transformer

Sep 04, 2025

Abstract: The rapid growth of private car ownership has worsened the urban parking predicament, underscoring the need for accurate and effective parking availability prediction to support urban planning and management. To address key limitations in modeling spatio-temporal dependencies and exploiting multi-source data for parking availability prediction, this study proposes a novel approach with SST-iTransformer. The methodology leverages K-means clustering to establish parking cluster zones (PCZs), extracting and integrating traffic demand characteristics from various transportation modes (i.e., metro, bus, online ride-hailing, and taxi) associated with the targeted parking lots. Built on the vanilla iTransformer, SST-iTransformer integrates masking-reconstruction-based pretext tasks for self-supervised spatio-temporal representation learning, and features an innovative dual-branch attention mechanism: Series Attention captures long-term temporal dependencies via patching operations, while Channel Attention models cross-variate interactions through inverted dimensions. Extensive experiments using real-world data from Chengdu, China, demonstrate that SST-iTransformer outperforms baseline deep learning models (including Informer, Autoformer, Crossformer, and iTransformer), achieving state-of-the-art performance with the lowest mean squared error (MSE) and competitive mean absolute error (MAE). Comprehensive ablation studies quantitatively reveal the relative importance of different data sources: incorporating ride-hailing data provides the largest performance gains, followed by taxi, whereas fixed-route transit features (bus/metro) contribute marginally. Spatial correlation analysis further confirms that excluding historical data from correlated parking lots within PCZs leads to substantial performance degradation, underscoring the importance of modeling spatial dependencies.
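
The masking-reconstruction pretext task mentioned in this abstract can be illustrated with a small sketch. The abstract does not give the masking ratio, the mask value, or the loss, so `mask_ratio`, `mask_value`, the masked-only MSE, and the mean-value stand-in for a model are all assumptions made for illustration, not the authors' configuration.

```python
import random


def mask_series(series, mask_ratio=0.25, mask_value=0.0, seed=0):
    """Hide a random subset of time steps.

    Returns the masked copy and the sorted indices that were hidden.
    mask_ratio and mask_value are illustrative assumptions.
    """
    rng = random.Random(seed)
    n_masked = max(1, int(len(series) * mask_ratio))
    masked_idx = sorted(rng.sample(range(len(series)), n_masked))
    masked = list(series)
    for i in masked_idx:
        masked[i] = mask_value
    return masked, masked_idx


def reconstruction_mse(prediction, target, masked_idx):
    """Mean squared error computed only over the hidden positions."""
    return sum((prediction[i] - target[i]) ** 2 for i in masked_idx) / len(masked_idx)


# Hypothetical occupancy ratios for one parking lot over eight time steps.
occupancy = [0.42, 0.45, 0.51, 0.60, 0.72, 0.80, 0.78, 0.66]
masked, idx = mask_series(occupancy)

# Stand-in for a model: predict the mean of the visible values everywhere.
visible = [v for i, v in enumerate(occupancy) if i not in idx]
baseline = [sum(visible) / len(visible)] * len(occupancy)
loss = reconstruction_mse(baseline, occupancy, idx)
```

In a real pre-training loop the stand-in prediction would be replaced by the encoder's output, and the loss would drive gradient updates.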

ConfQA: Answer Only If You Are Confident

Jun 08, 2025

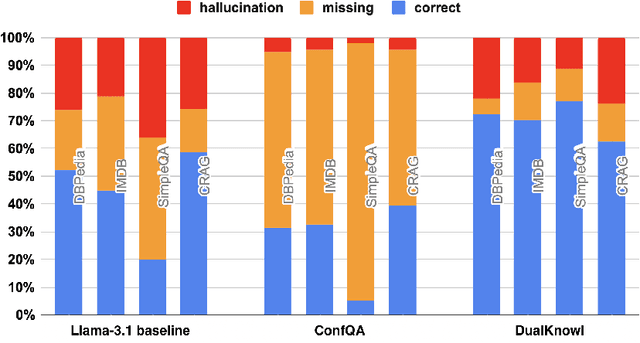

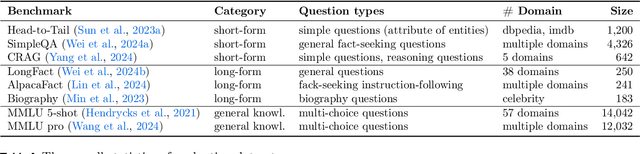

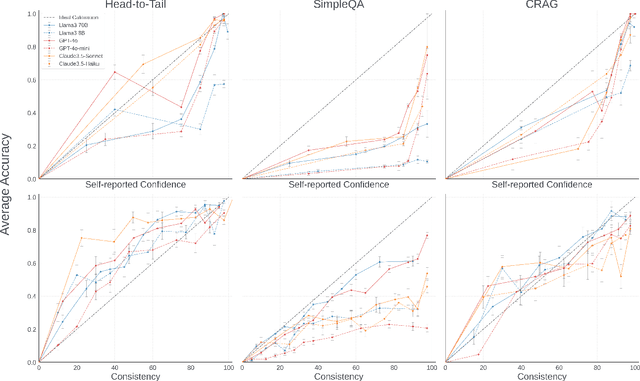

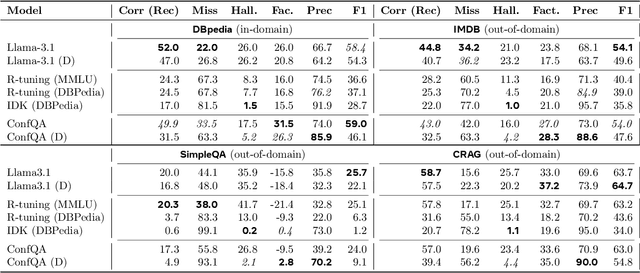

Abstract: Can we teach Large Language Models (LLMs) to refrain from hallucinating factual statements? In this paper we present a fine-tuning strategy that we call ConfQA, which can reduce hallucination rate from 20-40% to under 5% across multiple factuality benchmarks. The core idea is simple: when the LLM answers a question correctly, it is trained to continue with the answer; otherwise, it is trained to admit "I am unsure". But there are two key factors that make the training highly effective. First, we introduce a dampening prompt "answer only if you are confident" to explicitly guide the behavior, without which hallucination remains as high as 15%-25%. Second, we leverage simple factual statements, specifically attribute values from knowledge graphs, to help LLMs calibrate their confidence, resulting in robust generalization across domains and question types. Building on this insight, we propose the Dual Neural Knowledge framework, which seamlessly selects between internally parameterized neural knowledge and externally recorded symbolic knowledge based on ConfQA's confidence. The framework enables potential accuracy gains to beyond 95%, while reducing unnecessary external retrievals by over 30%.
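
The labeling rule described in this abstract (keep the answer when the model is right, otherwise train it to say "I am unsure", with the dampening prompt attached) can be sketched as follows. The function names, the prompt layout, and the exact-match correctness check are assumptions for illustration; the abstract does not say how correctness is judged.

```python
DAMPENER = "Answer only if you are confident."  # prompt wording from the abstract
UNSURE = "I am unsure"                           # abstention target from the abstract


def normalize(text):
    """Lowercase and collapse whitespace for a crude exact-match check."""
    return " ".join(text.lower().split())


def build_example(question, model_answer, gold_answer):
    """Build one (prompt, target) pair for fine-tuning.

    Assumption: correctness is judged by normalized exact match, a stand-in
    for whatever grader the authors actually use.
    """
    prompt = f"{question} {DAMPENER}"
    is_correct = normalize(model_answer) == normalize(gold_answer)
    target = model_answer if is_correct else UNSURE
    return {"prompt": prompt, "target": target}


examples = [
    build_example("Who wrote Hamlet?", "William Shakespeare", "william shakespeare"),
    build_example("Who wrote Hamlet?", "Christopher Marlowe", "William Shakespeare"),
]
```

The first example keeps the model's own answer as the target; the second is relabeled to the abstention string.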

Multimodal Federated Learning: A Survey through the Lens of Different FL Paradigms

May 27, 2025

Abstract: Multimodal Federated Learning (MFL) lies at the intersection of two pivotal research areas: leveraging complementary information from multiple modalities to improve downstream inference performance and enabling distributed training to enhance efficiency and preserve privacy. Despite the growing interest in MFL, there is currently no comprehensive taxonomy that organizes MFL through the lens of different Federated Learning (FL) paradigms. This perspective is important because multimodal data introduces distinct challenges across various FL settings. These challenges, including modality heterogeneity, privacy heterogeneity, and communication inefficiency, are fundamentally different from those encountered in traditional unimodal or non-FL scenarios. In this paper, we systematically examine MFL within the context of three major FL paradigms: horizontal FL (HFL), vertical FL (VFL), and hybrid FL. For each paradigm, we present the problem formulation, review representative training algorithms, and highlight the most prominent challenge introduced by multimodal data in distributed settings. We also discuss open challenges and provide insights for future research. By establishing this taxonomy, we aim to uncover the novel challenges posed by multimodal data from the perspective of different FL paradigms and to offer a new lens through which to understand and advance the development of MFL.

Multi-Scale Target-Aware Representation Learning for Fundus Image Enhancement

May 03, 2025

Abstract: High-quality fundus images provide essential anatomical information for clinical screening and ophthalmic disease diagnosis. Yet, due to hardware limitations, operational variability, and patient compliance, fundus images often suffer from low resolution and low signal-to-noise ratio. Recent years have witnessed promising progress in fundus image enhancement. However, existing works usually focus on restoring structural details or global characteristics of fundus images, lacking a unified image enhancement framework to recover comprehensive multi-scale information. Moreover, few methods pinpoint the target of image enhancement, e.g., lesions, which is crucial for medical image-based diagnosis. To address these challenges, we propose a multi-scale target-aware representation learning framework (MTRL-FIE) for efficient fundus image enhancement. Specifically, we propose a multi-scale feature encoder (MFE) that employs wavelet decomposition to embed both low-frequency structural information and high-frequency details. Next, we design a structure-preserving hierarchical decoder (SHD) to fuse multi-scale feature embeddings for real fundus image restoration. SHD integrates hierarchical fusion and group attention mechanisms to achieve adaptive feature fusion while retaining local structural smoothness. Meanwhile, a target-aware feature aggregation (TFA) module is used to enhance pathological regions and reduce artifacts. Experimental results on multiple fundus image datasets demonstrate the effectiveness and generalizability of MTRL-FIE for fundus image enhancement. Compared to state-of-the-art methods, MTRL-FIE achieves superior enhancement performance with a more lightweight architecture. Furthermore, our approach generalizes to other ophthalmic image processing tasks without supervised fine-tuning, highlighting its potential for clinical applications.
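
To make the wavelet decomposition step concrete, here is a minimal one-level 2D Haar transform in plain Python. The abstract does not name the wavelet family or normalization, so the Haar basis and the averaging convention (dividing by 2) are assumptions; the sketch only shows how an image splits into one low-frequency band and three high-frequency detail bands.

```python
def haar_1d(values):
    """One Haar level: pairwise averages (low-pass) and half-differences (high-pass)."""
    low = [(values[i] + values[i + 1]) / 2 for i in range(0, len(values), 2)]
    high = [(values[i] - values[i + 1]) / 2 for i in range(0, len(values), 2)]
    return low, high


def transform_columns(block):
    """Apply haar_1d down each column and return (low, high) as row-major lists."""
    cols = [haar_1d(list(col)) for col in zip(*block)]
    low = [list(row) for row in zip(*(c[0] for c in cols))]
    high = [list(row) for row in zip(*(c[1] for c in cols))]
    return low, high


def haar_2d(image):
    """One 2D Haar level for an image with even height and width.

    Returns four subbands, named by (filter along rows, filter along columns):
    ll (low, low), lh (low, high), hl (high, low), hh (high, high).
    """
    row_low, row_high = [], []
    for row in image:
        lo, hi = haar_1d(row)
        row_low.append(lo)
        row_high.append(hi)
    ll, lh = transform_columns(row_low)
    hl, hh = transform_columns(row_high)
    return ll, lh, hl, hh


# Piecewise-constant toy image: all detail bands should be zero.
image = [
    [10, 10, 20, 20],
    [10, 10, 20, 20],
    [30, 30, 40, 40],
    [30, 30, 40, 40],
]
ll, lh, hl, hh = haar_2d(image)
```

For this toy input the `ll` band holds the 2x2 block averages, while the three detail bands are zero because the image has no variation inside any 2x2 block.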

A Self-Supervised Transformer for Unusable Shared Bike Detection

May 02, 2025

Abstract: The rapid expansion of bike-sharing systems (BSS) has greatly improved urban "last-mile" connectivity, yet large-scale deployments face escalating operational challenges, particularly in detecting faulty bikes. Existing detection approaches either rely on static model-based thresholds that overlook dynamic spatiotemporal (ST) usage patterns or employ supervised learning methods that struggle with label scarcity and class imbalance. To address these limitations, this paper proposes a novel Self-Supervised Transformer (SSTransformer) framework for automatically detecting unusable shared bikes, leveraging ST features extracted from GPS trajectories and trip records. The model incorporates a self-supervised pre-training strategy to enhance its feature extraction capabilities, followed by fine-tuning for efficient status recognition. In the pre-training phase, the Transformer encoder learns generalized representations of bike movement via a self-supervised objective; in the fine-tuning phase, the encoder is adapted to a downstream binary classification task. Comprehensive experiments on a real-world dataset of 10,730 bikes (1,870 unusable, 8,860 normal) from Chengdu, China, demonstrate that SSTransformer significantly outperforms traditional machine learning, ensemble learning, and deep learning baselines, achieving the best accuracy (97.81%), precision (0.8889), and F1-score (0.9358). This work highlights the effectiveness of a self-supervised Transformer on ST data for capturing complex anomalies in BSS, paving the way toward more reliable and scalable maintenance solutions for shared mobility.

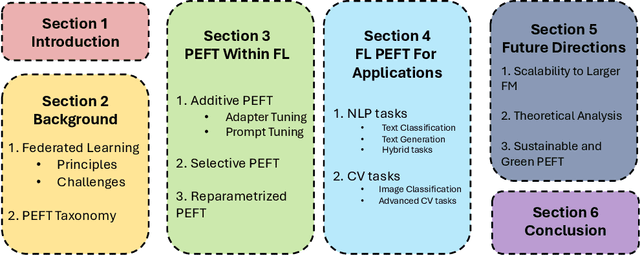

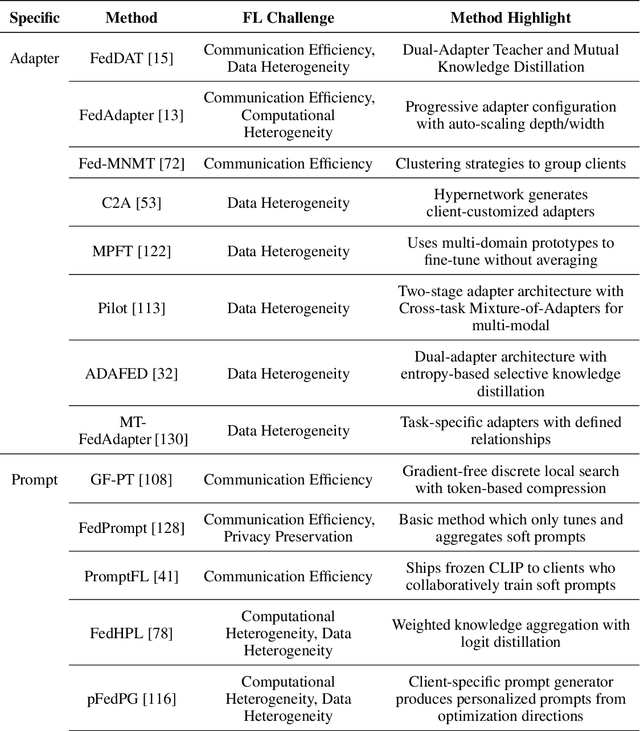

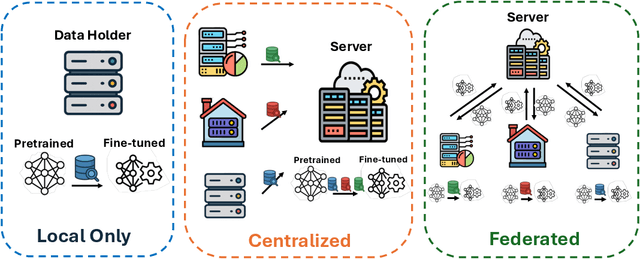

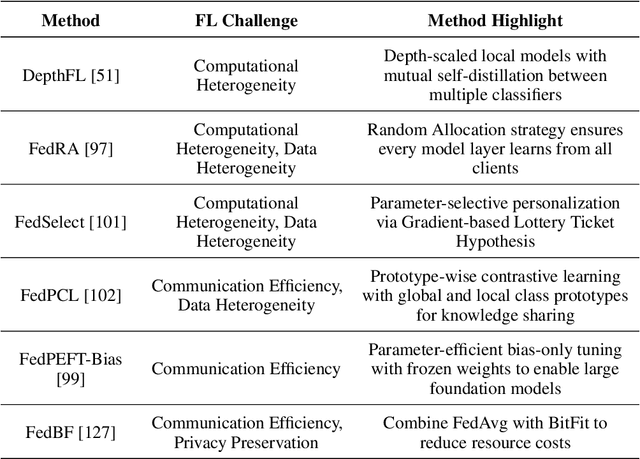

A Survey on Parameter-Efficient Fine-Tuning for Foundation Models in Federated Learning

Apr 29, 2025

Abstract: Foundation models have revolutionized artificial intelligence by providing robust, versatile architectures pre-trained on large-scale datasets. However, adapting these massive models to specific downstream tasks requires fine-tuning, which can be prohibitively expensive in computational resources. Parameter-Efficient Fine-Tuning (PEFT) methods address this challenge by selectively updating only a small subset of parameters. Meanwhile, Federated Learning (FL) enables collaborative model training across distributed clients without sharing raw data, making it ideal for privacy-sensitive applications. This survey provides a comprehensive review of the integration of PEFT techniques within federated learning environments. We systematically categorize existing approaches into three main groups: Additive PEFT (which introduces new trainable parameters), Selective PEFT (which fine-tunes only subsets of existing parameters), and Reparameterized PEFT (which transforms model architectures to enable efficient updates). For each category, we analyze how these methods address the unique challenges of federated settings, including data heterogeneity, communication efficiency, computational constraints, and privacy concerns. We further organize the literature based on application domains, covering both natural language processing and computer vision tasks. Finally, we discuss promising research directions, including scaling to larger foundation models, theoretical analysis of federated PEFT methods, and sustainable approaches for resource-constrained environments.
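
A rough sketch of why PEFT helps communication efficiency in federated settings: with a low-rank (LoRA-style) adapter, each client sends only the adapter parameters, and the server aggregates them with sample-weighted averaging (FedAvg). The layer sizes, rank, and client updates below are hypothetical, and plain FedAvg over flattened adapter parameters is one simple aggregation choice, not a method attributed to the survey.

```python
def full_param_count(d_out, d_in):
    """Parameters in a dense weight matrix of shape (d_out, d_in)."""
    return d_out * d_in


def low_rank_param_count(d_out, d_in, rank):
    """Parameters in a rank-r update B @ A, with B: (d_out, r) and A: (r, d_in)."""
    return rank * (d_out + d_in)


def fedavg(client_updates):
    """Sample-weighted average of client parameter vectors.

    client_updates: list of (num_samples, params), where params is a flat
    list of floats of equal length across clients.
    """
    total = sum(n for n, _ in client_updates)
    size = len(client_updates[0][1])
    return [sum(n * p[i] for n, p in client_updates) / total for i in range(size)]


# Hypothetical 4096 x 4096 layer with a rank-8 adapter.
dense = full_param_count(4096, 4096)
adapter = low_rank_param_count(4096, 4096, 8)
fraction = adapter / dense

# Two hypothetical clients with 100 and 300 local samples.
aggregated = fedavg([(100, [1.0, 2.0]), (300, [3.0, 6.0])])
```

Here the adapter is well under one percent of the dense layer's parameters, which is the payload each client would upload per round under these assumptions.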

Learning the Optimal Path and DNN Partition for Collaborative Edge Inference

Oct 02, 2024

Abstract: Recent advancements in Deep Neural Networks (DNNs) have catalyzed the development of numerous intelligent mobile applications and services. However, they also introduce significant computational challenges for resource-constrained mobile devices. To address this, collaborative edge inference has been proposed. This method involves partitioning a DNN inference task into several subtasks and distributing these across multiple network nodes. Despite its potential, most current approaches presume known network parameters -- like node processing speeds and link transmission rates -- or rely on a fixed sequence of nodes for processing the DNN subtasks. In this paper, we tackle a more complex scenario where network parameters are unknown and must be learned, and multiple network paths are available for distributing inference tasks. Specifically, we explore the learning problem of selecting the optimal network path and assigning DNN layers to nodes along this path, considering potential security threats and the costs of switching paths. We begin by deriving structural insights from the DNN layer assignment with complete network information, which narrows down the decision space and provides crucial understanding of optimal assignments. We then cast the learning problem with incomplete network information as a novel adversarial group linear bandits problem with switching costs, featuring reward generation through a combined stochastic and adversarial process. We introduce a new bandit algorithm, B-EXPUCB, which combines elements of the classical blocked EXP3 and LinUCB algorithms, and demonstrate its sublinear regret. Extensive simulations confirm B-EXPUCB's superior performance in learning for collaborative edge inference over existing algorithms.
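
For readers unfamiliar with the building blocks named in this abstract, the following is a minimal sketch of a blocked EXP3 learner: an arm (here standing in for a network path) is sampled once per block and held for the whole block, which caps the number of switches. This is not B-EXPUCB; it omits the LinUCB component and the layer assignment entirely, and `reward_fn`, `block_len`, and `gamma` are illustrative assumptions.

```python
import math
import random


def blocked_exp3(reward_fn, n_arms, n_rounds, block_len, gamma=0.1, seed=0):
    """EXP3 with arm choices held fixed within blocks.

    reward_fn(arm, t) must return a reward in [0, 1].
    Returns the final sampling distribution and the number of arm switches.
    """
    rng = random.Random(seed)
    weights = [1.0] * n_arms
    switches = 0
    prev_arm = None
    t = 0
    while t < n_rounds:
        total = sum(weights)
        # Mix the weight-proportional distribution with uniform exploration.
        probs = [(1 - gamma) * w / total + gamma / n_arms for w in weights]
        arm = rng.choices(range(n_arms), weights=probs)[0]
        if prev_arm is not None and arm != prev_arm:
            switches += 1
        prev_arm = arm
        # Play the same arm for the whole block and average its rewards.
        steps = min(block_len, n_rounds - t)
        block_reward = sum(reward_fn(arm, t + s) for s in range(steps)) / steps
        t += steps
        # Importance-weighted estimate, then exponential weight update.
        estimate = block_reward / probs[arm]
        weights[arm] *= math.exp(gamma * estimate / n_arms)
    total = sum(weights)
    final_probs = [(1 - gamma) * w / total + gamma / n_arms for w in weights]
    return final_probs, switches


# Toy environment: path 2 always pays 1.0, the others pay nothing.
probs, switches = blocked_exp3(lambda arm, t: 1.0 if arm == 2 else 0.0,
                               n_arms=3, n_rounds=2000, block_len=10)
```

With 2000 rounds and blocks of 10, the learner makes 200 selections, so it can switch at most 199 times regardless of how rewards behave.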

Leverage Multi-source Traffic Demand Data Fusion with Transformer Model for Urban Parking Prediction

May 02, 2024

Abstract: The escalation in urban private car ownership has worsened the urban parking predicament, necessitating effective parking availability prediction for urban planning and management. However, the existing prediction methods suffer from low prediction accuracy owing to the lack of spatial-temporal correlation features related to parking volume, and neglect flow patterns and correlations between similar parking lots within certain areas. To address these challenges, this study proposes a parking availability prediction framework integrating spatial-temporal deep learning with multi-source data fusion, encompassing traffic demand data from multiple sources (e.g., metro, bus, taxi services), and parking lot data. The framework is based on the Transformer as the spatial-temporal deep learning model and leverages K-means clustering to establish parking cluster zones, extracting and integrating traffic demand characteristics from various transportation modes (i.e., metro, bus, online ride-hailing, and taxi) connected to parking lots. Real-world empirical data was used to verify the effectiveness of the proposed method compared with different machine learning, deep learning, and traditional statistical models for predicting parking availability. Experimental results reveal that, with the proposed pipeline, the developed Transformer model outperforms other models in terms of various metrics, e.g., Mean Squared Error (MSE), Mean Absolute Error (MAE), and Mean Absolute Percentage Error (MAPE). By fusing multi-source demand data with spatial-temporal deep learning techniques, this approach offers the potential to develop parking availability prediction systems that furnish more accurate and timely information to both drivers and urban planners, thereby fostering more efficient and sustainable urban mobility.
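
The three evaluation metrics named in this abstract have standard definitions, sketched below in plain Python. The sample values are hypothetical counts of available spaces, not data from the paper, and raising an error on zero ground-truth values is one possible way to handle MAPE's undefined case.

```python
def mse(y_true, y_pred):
    """Mean Squared Error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    """Mean Absolute Error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mape(y_true, y_pred):
    """Mean Absolute Percentage Error, in percent.

    Undefined when any true value is zero, so that case raises ValueError.
    """
    if any(t == 0 for t in y_true):
        raise ValueError("MAPE is undefined when a true value is zero")
    return 100 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical available-space counts and predictions for four time steps.
y_true = [100, 80, 50, 20]
y_pred = [90, 84, 55, 25]

scores = {"MSE": mse(y_true, y_pred),
          "MAE": mae(y_true, y_pred),
          "MAPE": mape(y_true, y_pred)}
```

Note that MAPE weights the error at the nearly full lot (20 spaces) much more heavily than the same absolute error at an emptier lot, which is one reason papers often report it alongside MSE and MAE.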

Speaker Diaphragm Excursion Prediction: deep attention and online adaptation

May 11, 2023

Abstract: Speaker protection algorithms leverage playback signal properties to prevent over-excursion while maintaining maximum loudness, especially on mobile phones with tiny loudspeakers. This paper proposes efficient deep learning (DL) solutions to accurately model and predict the nonlinear excursion, which is challenging for conventional solutions. Firstly, we build the experiment and pre-processing pipeline, where the feedback current and voltage are sampled as input, and a laser is employed to measure the excursion as ground truth. Secondly, an FFTNet model is proposed to explore the dominant low-frequency and other unknown harmonics, and is compared to a baseline ConvNet model. In addition, batch normalization (BN) re-estimation is designed to explore online adaptation, and INT8 quantization based on the AI Model Efficiency Toolkit (AIMET; AIMET is a product of Qualcomm Innovation Center, Inc.) is applied to further reduce the complexity. The proposed algorithm is verified on two speakers and three typical deployment scenarios, and more than 99% of the residual DC is less than 0.1 mm, much better than traditional solutions.
