Xiaoping Zhang

Wavelet-guided Misalignment-aware Network for Visible-Infrared Object Detection

Jul 27, 2025

Abstract: Visible-infrared object detection aims to enhance the detection robustness by exploiting the complementary information of visible and infrared image pairs. However, its performance is often limited by frequent misalignments caused by resolution disparities, spatial displacements, and modality inconsistencies. To address this issue, we propose the Wavelet-guided Misalignment-aware Network (WMNet), a unified framework designed to adaptively address different cross-modal misalignment patterns. WMNet incorporates wavelet-based multi-frequency analysis and modality-aware fusion mechanisms to improve the alignment and integration of cross-modal features. By jointly exploiting low and high-frequency information and introducing adaptive guidance across modalities, WMNet alleviates the adverse effects of noise, illumination variation, and spatial misalignment. Furthermore, it enhances the representation of salient target features while suppressing spurious or misleading information, thereby promoting more accurate and robust detection. Extensive evaluations on the DVTOD, DroneVehicle, and M3FD datasets demonstrate that WMNet achieves state-of-the-art performance on misaligned cross-modal object detection tasks, confirming its effectiveness and practical applicability.
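To make "wavelet-based multi-frequency analysis" concrete, here is a minimal sketch of a single-level 2D Haar decomposition, which splits an image into one low-frequency band and three high-frequency detail bands. It is a generic illustration and not WMNet's implementation: the abstract does not say which wavelet or how many levels are used, and the function name `haar_dwt2` is hypothetical.

```python
import numpy as np

def haar_dwt2(image):
    """Single-level 2D Haar transform (assumes even height and width).

    Returns four half-resolution bands: ll (low-frequency approximation)
    and lh, hl, hh (high-frequency details).
    """
    x = np.asarray(image, dtype=float)
    s = np.sqrt(2.0)
    # Step 1: sums and differences of horizontally adjacent pixel pairs.
    lo = (x[:, 0::2] + x[:, 1::2]) / s
    hi = (x[:, 0::2] - x[:, 1::2]) / s
    # Step 2: sums and differences of vertically adjacent pairs.
    ll = (lo[0::2, :] + lo[1::2, :]) / s  # smooth content
    lh = (lo[0::2, :] - lo[1::2, :]) / s  # changes across rows
    hl = (hi[0::2, :] + hi[1::2, :]) / s  # changes across columns
    hh = (hi[0::2, :] - hi[1::2, :]) / s  # diagonal changes
    return ll, lh, hl, hh
```

Because the transform is orthonormal, the total energy of the four bands equals that of the input, so nothing is lost by processing low and high frequencies separately.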

PLPHP: Per-Layer Per-Head Vision Token Pruning for Efficient Large Vision-Language Models

Feb 20, 2025

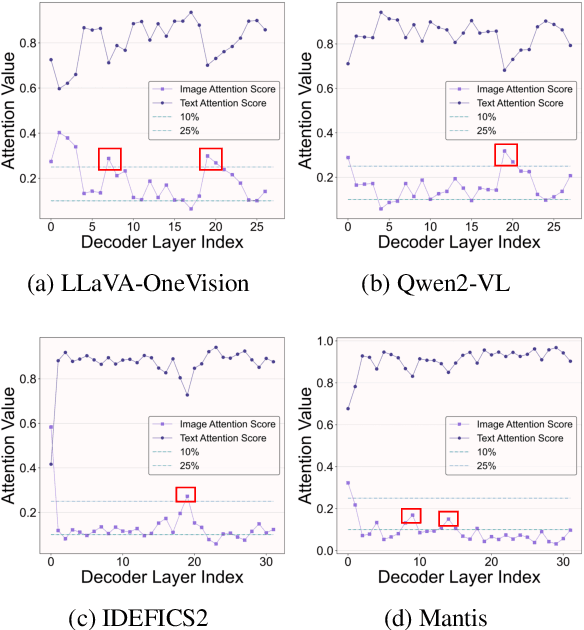

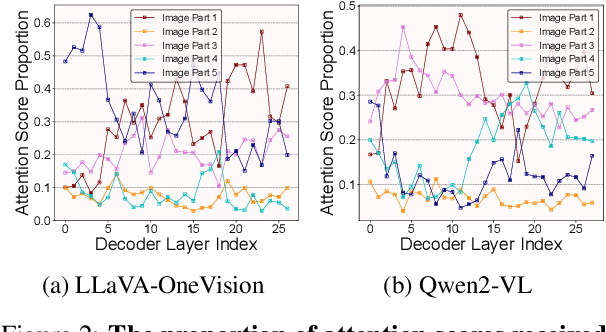

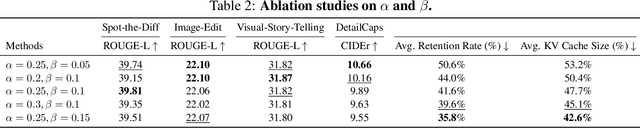

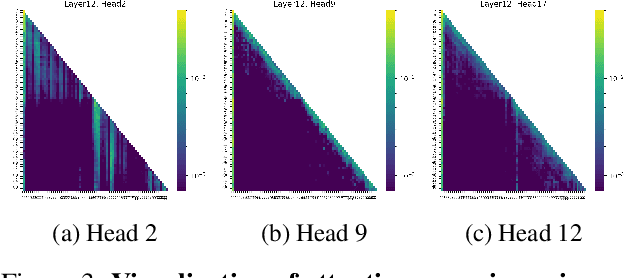

Abstract: Large Vision-Language Models (LVLMs) have demonstrated remarkable capabilities across a range of multimodal tasks. However, their inference efficiency is constrained by the large number of visual tokens processed during decoding. To address this challenge, we propose Per-Layer Per-Head Vision Token Pruning (PLPHP), a two-level fine-grained pruning method comprising Layer-Level Retention Rate Allocation and Head-Level Vision Token Pruning. Motivated by the Vision Token Re-attention phenomenon across decoder layers, we dynamically adjust token retention rates layer by layer. Layers that exhibit stronger attention to visual information preserve more vision tokens, while layers with lower vision attention are aggressively pruned. Furthermore, PLPHP applies pruning at the attention head level, enabling different heads within the same layer to independently retain critical context. Experiments on multiple benchmarks demonstrate that PLPHP delivers an 18% faster decoding speed and reduces the Key-Value Cache (KV Cache) size by over 50%, all at the cost of a 0.46% average performance drop, while also achieving notable performance improvements in multi-image tasks. These results highlight the effectiveness of fine-grained token pruning and contribute to advancing the efficiency and scalability of LVLMs. Our source code will be made publicly available.
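The two-level idea described above can be sketched as follows. This is an illustration under stated assumptions, not the PLPHP algorithm: the abstract does not give the allocation rule, so the linear mapping, the `low`/`high` bounds, and both function names are hypothetical.

```python
import numpy as np

def retention_rate(vision_attn_mass, low=0.2, high=0.8):
    """Layer level: map the share of attention a layer puts on vision
    tokens (in [0, 1]) to a retention rate in [low, high].
    The linear rule and the bounds are assumptions for illustration."""
    mass = float(np.clip(vision_attn_mass, 0.0, 1.0))
    return low + (high - low) * mass

def prune_per_head(attn, rate):
    """Head level: attn has shape (num_heads, num_vision_tokens).
    Each head keeps its own top-k vision tokens by attention score.
    Returns a boolean keep-mask of the same shape."""
    num_heads, num_tokens = attn.shape
    k = max(1, int(round(rate * num_tokens)))
    top = np.argsort(-attn, axis=1)[:, :k]      # per-head top-k indices
    keep = np.zeros(attn.shape, dtype=bool)
    np.put_along_axis(keep, top, True, axis=1)  # mark retained tokens
    return keep
```

A layer that attends more to vision tokens gets a higher rate and therefore keeps more of them, and two heads in the same layer may retain different tokens.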

Human-Robot Cooperative Piano Playing with Learning-Based Real-Time Music Accompaniment

Sep 18, 2024

Abstract: Recent advances in machine learning have paved the way for the development of musical and entertainment robots. However, human-robot cooperative instrument playing remains a challenge, particularly due to the intricate motor coordination and temporal synchronization it requires. In this paper, we propose a theoretical framework for human-robot cooperative piano playing based on non-verbal cues. First, we present a music improvisation model that employs a recurrent neural network (RNN) to predict appropriate chord progressions based on the human's melodic input. Second, we propose a behavior-adaptive controller to facilitate seamless temporal synchronization, allowing the cobot to generate harmonious acoustics. The collaboration takes into account the bidirectional information flow between the human and robot. We have developed an entropy-based system to assess the quality of cooperation by analyzing the impact of different communication modalities during human-robot collaboration. Experiments demonstrate that our RNN-based improvisation can achieve a 93% accuracy rate. Meanwhile, with the MPC adaptive controller, the robot can respond to the human teammate in homophony performances with real-time accompaniment. Our framework has been validated as effective in allowing humans and robots to work collaboratively in the artistic piano-playing task.

Pi-ViMo: Physiology-inspired Robust Vital Sign Monitoring using mmWave Radars

Mar 24, 2023

Abstract: Continuous monitoring of human vital signs using non-contact mmWave radars is attractive due to their ability to penetrate garments and operate under different lighting conditions. Unfortunately, most prior research requires subjects to stay at a fixed distance from radar sensors and to remain still during monitoring. These restrictions limit the applications of radar vital sign monitoring in real-life scenarios. In this paper, we address these limitations and present "Pi-ViMo", a non-contact Physiology-inspired Robust Vital Sign Monitoring system, using mmWave radars. We first derive a multi-scattering point model for the human body, and introduce coherent combining of multiple scatterings to enhance the quality of estimated chest-wall movements. It enables vital sign estimations of subjects at any location in a radar's field of view. We then propose a template matching method to extract human vital signs by adopting physical models of respiration and cardiac activities. The proposed method is capable of separating respiration and heartbeat in the presence of micro-level random body movements (RBM) when a subject is at any location within the field of view of a radar. Experiments in a radar testbed show average respiration rate errors of 6% and heart rate errors of 11.9% for stationary subjects, and average errors of 13.5% for respiration rate and 13.6% for heart rate for subjects under different RBMs.

Audio-Visual Quality Assessment for User Generated Content: Database and Method

Mar 04, 2023

Abstract: With the explosive increase of User Generated Content (UGC), UGC video quality assessment (VQA) has become increasingly important for improving users' Quality of Experience (QoE). However, most existing UGC VQA studies focus only on the visual distortions of videos, ignoring that the user's QoE also depends on the accompanying audio signals. In this paper, we conduct the first study to address the problem of UGC audio and video quality assessment (AVQA). Specifically, we construct the first UGC AVQA database, named the SJTU-UAV database, which includes 520 in-the-wild UGC audio and video (A/V) sequences, and conduct a user study to obtain the mean opinion scores of the A/V sequences. The content of the SJTU-UAV database is then analyzed from both the audio and video aspects to show the database characteristics. We also design a family of AVQA models, which fuse popular VQA methods and audio features via a support vector regressor (SVR). We validate the effectiveness of the proposed models on three databases. The experimental results show that with the help of audio signals, the VQA models can evaluate the perceptual quality more accurately. The database will be released to facilitate further research.

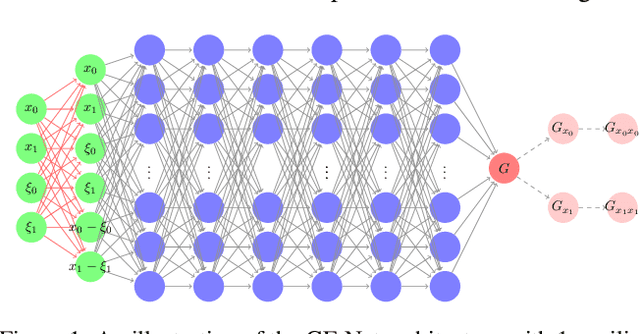

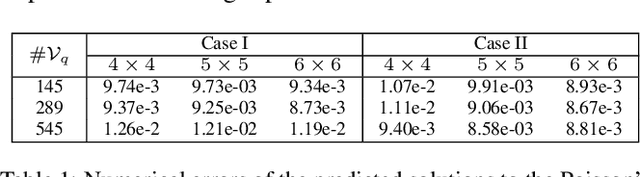

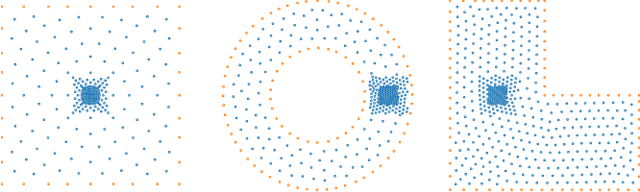

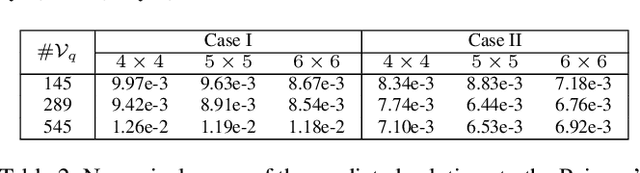

Learning Green's Functions of Linear Reaction-Diffusion Equations with Application to Fast Numerical Solver

May 23, 2021

Abstract: Partial differential equations are often used to model various physical phenomena, such as heat diffusion, wave propagation, fluid dynamics, elasticity, electrodynamics and image processing, and many analytic approaches and traditional numerical methods have been developed and widely used for their solution. Inspired by the rapidly growing impact of deep learning on scientific and engineering research, in this paper we propose a novel neural network, GF-Net, for learning the Green's functions of linear reaction-diffusion equations in an unsupervised fashion. The proposed method overcomes the challenges of finding the Green's functions of the equations on arbitrary domains by utilizing a physics-informed approach and the symmetry of the Green's function. As a consequence, it leads to an efficient way of solving the target equations under different boundary conditions and sources. We also demonstrate the effectiveness of the proposed approach by experiments in square, annular and L-shaped domains.
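The reason a known Green's function gives a fast solver can be seen in a small sketch. It uses the closed-form Green's function of the 1D problem -u'' = f on (0, 1) with u(0) = u(1) = 0, a pure-diffusion special case chosen for illustration; GF-Net instead learns the function for domains where no closed form is available. The function names and the trapezoid quadrature are assumptions made here, not taken from the paper.

```python
import numpy as np

def green_1d(x, y):
    """Closed-form Green's function of -u'' = f on (0, 1), u(0) = u(1) = 0.
    It is symmetric: G(x, y) == G(y, x)."""
    return np.where(x <= y, x * (1.0 - y), y * (1.0 - x))

def solve_with_green(f, n=201):
    """Evaluate u(x) = integral of G(x, y) f(y) dy on a uniform grid,
    using trapezoid quadrature. Returns (grid, u)."""
    y = np.linspace(0.0, 1.0, n)
    G = green_1d(y[:, None], y[None, :])  # (n, n) kernel matrix
    w = np.full(n, y[1] - y[0])           # trapezoid weights
    w[0] *= 0.5
    w[-1] *= 0.5
    return y, G @ (w * f(y))              # one mat-vec per source term
```

Once the kernel matrix is available, each new source term `f` costs only a single matrix-vector product, for example `x, u = solve_with_green(lambda y: np.ones_like(y))`.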

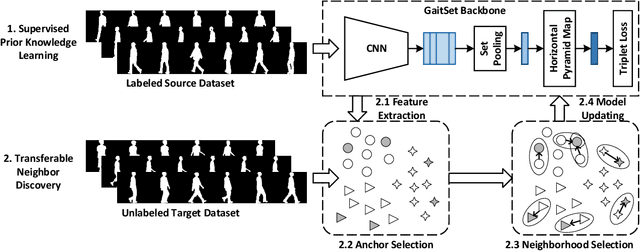

TraND: Transferable Neighborhood Discovery for Unsupervised Cross-domain Gait Recognition

Feb 09, 2021

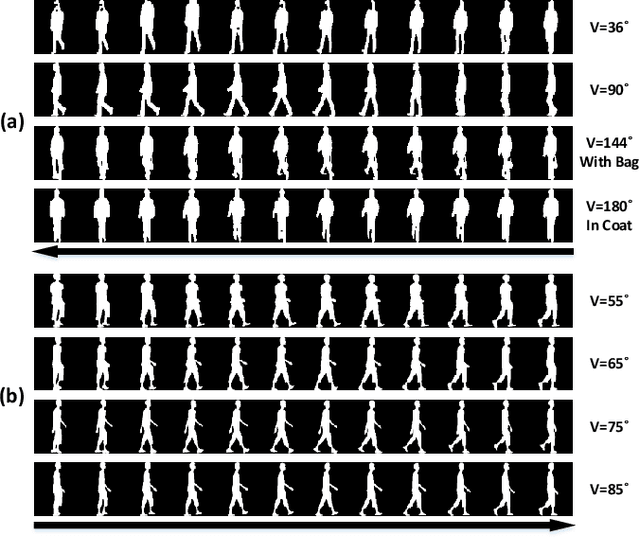

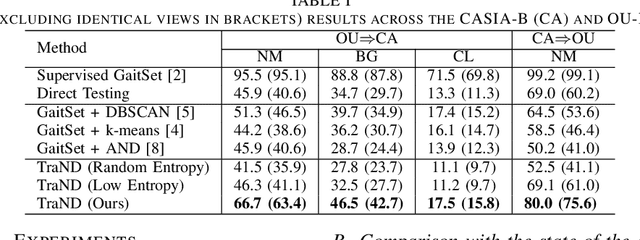

Abstract: Gait, i.e., the movement pattern of human limbs during locomotion, is a promising biometric for the identification of persons. Despite significant improvement in gait recognition with deep learning, existing studies still neglect a more practical but challenging scenario: unsupervised cross-domain gait recognition, which aims to learn a model on a labeled dataset and then adapt it to an unlabeled dataset. Due to the domain shift and class gap, directly applying a model trained on one source dataset to other target datasets usually yields very poor results. Therefore, this paper proposes a Transferable Neighborhood Discovery (TraND) framework to bridge the domain gap for unsupervised cross-domain gait recognition. To learn effective prior knowledge for gait representation, we first adopt a backbone network pre-trained on the labeled source data in a supervised manner. Then we design an end-to-end trainable approach to automatically discover the confident neighborhoods of unlabeled samples in the latent space. During training, the class consistency indicator is adopted to select confident neighborhoods of samples based on their entropy measurements. Moreover, we explore a high-entropy-first neighbor selection strategy, which can effectively transfer prior knowledge to the target domain. Our method achieves state-of-the-art results on two public datasets, i.e., CASIA-B and OU-LP.

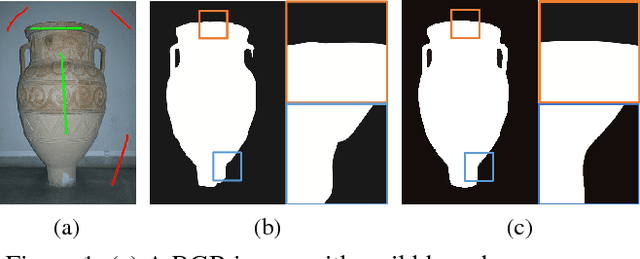

Interactive Binary Image Segmentation with Edge Preservation

Sep 10, 2018

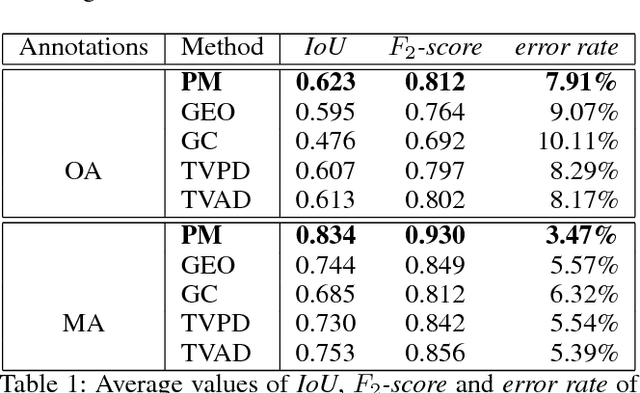

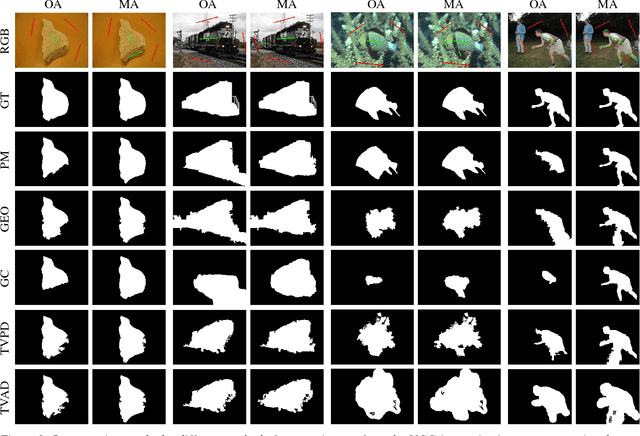

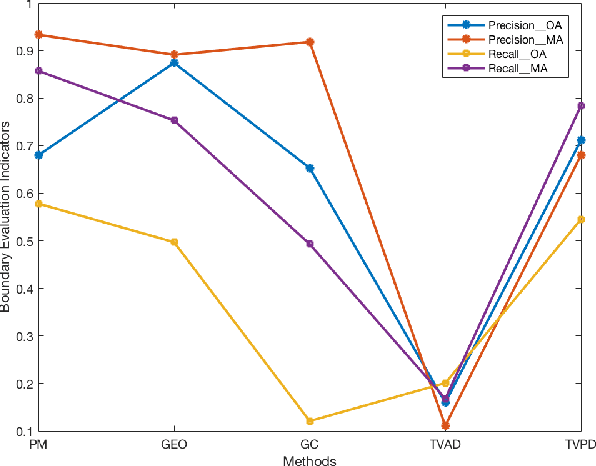

Abstract: Binary image segmentation plays an important role in computer vision and has been widely used in many applications such as image and video editing, object extraction, and photo composition. In this paper, we propose a novel interactive binary image segmentation method based on the Markov Random Field (MRF) framework and the fast bilateral solver (FBS) technique. Specifically, we employ the geodesic distance component to build the unary term. To ensure both computational efficiency and responsiveness for interactive segmentation, superpixels are used instead of pixels in computing geodesic distances. Furthermore, we take a bilateral affinity approach for the pairwise term in order to preserve edge information and denoise. Through the alternating direction strategy, the MRF energy minimization problem is divided into two subproblems, which can then be easily solved by steepest gradient descent (SGD) and FBS respectively. Experimental results on the VGG interactive image segmentation dataset show that the proposed algorithm outperforms several state-of-the-art ones and, in particular, achieves satisfactory edge-smooth segmentation results even when the foreground and background color appearances are hard to distinguish.
