Fumiya Iida

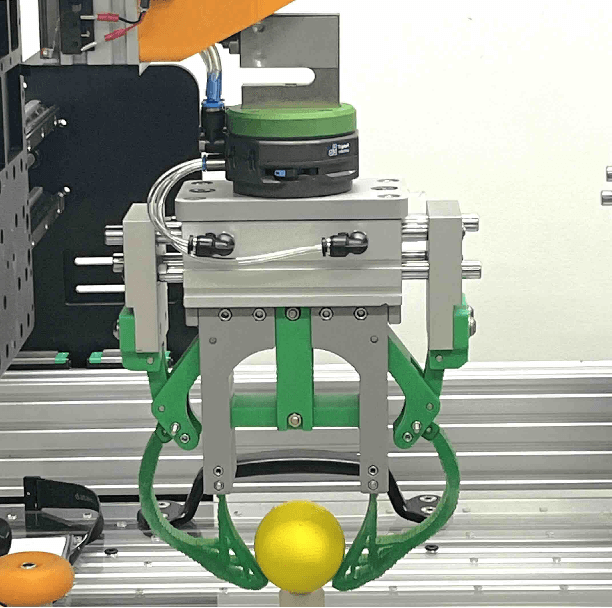

Self-Closing Suction Grippers for Industrial Grasping via Form-Flexible Design

Jul 02, 2025

Abstract:Shape-morphing robots have shown benefits in industrial grasping. We propose form-flexible grippers for adaptive grasping. The design is based on the hybrid jamming and suction mechanism, which deforms to handle objects that vary significantly in size from the aperture, including both larger and smaller parts. Compared with traditional grippers, the gripper achieves self-closing to form an airtight seal. Under a vacuum, a wide range of grasping is realized through the passive morphing mechanism at the interface that harmonizes pressure and flow rate. This hybrid gripper showcases the capability to securely grasp an egg, as small as 54.5% of its aperture, while achieving a maximum load-to-mass ratio of 94.3.

Auditory-Tactile Congruence for Synthesis of Adaptive Pain Expressions in RoboPatients

Jun 13, 2025

Abstract:Misdiagnosis can lead to delayed treatments and harm. Robotic patients offer a controlled way to train and evaluate clinicians in rare, subtle, or complex cases, reducing diagnostic errors. We present RoboPatient, a medical robotic simulator aimed at multimodal pain synthesis based on haptic and auditory feedback during palpation-based training scenarios. The robopatient functions as an adaptive intermediary, capable of synthesizing plausible pain expressions (vocal and facial) in response to tactile stimuli generated during palpation. Using an abdominal phantom, the robopatient captures and processes haptic input via an internal palpation-to-pain mapping model. To evaluate perceptual congruence between palpation and the corresponding auditory output, we conducted a study involving 7680 trials across 20 participants, where they evaluated pain intensity through sound. Results show that amplitude and pitch significantly influence agreement with the robot's pain expressions, irrespective of pain sounds. Stronger palpation forces elicited stronger agreement, aligning with psychophysical patterns. The study revealed two key dimensions: pitch and amplitude are central to how people perceive pain sounds, with pitch being the most influential cue. These acoustic features shape how well the sound matches the applied force during palpation, impacting perceived realism. This approach lays the groundwork for high-fidelity robotic patients in clinical education and diagnostic simulation.

Palpation Alters Auditory Pain Expressions with Gender-Specific Variations in Robopatients

Jun 13, 2025

Abstract:Diagnostic errors remain a major cause of preventable deaths, particularly in resource-limited regions. Medical training simulators, including robopatients, play a vital role in reducing these errors by mimicking real patients for procedural training such as palpation. However, generating multimodal feedback, especially auditory pain expressions, remains challenging due to the complex relationship between palpation behavior and sound. The high-dimensional nature of pain sounds makes exploration challenging with conventional methods. This study introduces a novel experimental paradigm for pain expressivity in robopatients where they dynamically generate auditory pain expressions in response to palpation force, by co-optimizing human feedback using machine learning. Using Proximal Policy Optimization (PPO), a reinforcement learning (RL) technique optimized for continuous adaptation, our robot iteratively refines pain sounds based on real-time human feedback. This robot initializes randomized pain responses to palpation forces, and the RL agent learns to adjust these sounds to align with human preferences. The results demonstrated that the system adapts to an individual's palpation forces and sound preferences and captures a broad spectrum of pain intensity, from mild discomfort to acute distress, through RL-guided exploration of the auditory pain space. The study further showed that pain sound perception exhibits saturation at lower forces with gender-specific thresholds. These findings highlight the system's potential to enhance abdominal palpation training by offering a controllable and immersive simulation platform.

The Morphology-Control Trade-Off: Insights into Soft Robotic Efficiency

Mar 20, 2025

Abstract:Soft robotics holds transformative potential for enabling adaptive and adaptable systems in dynamic environments. However, the interplay between morphological and control complexities and their collective impact on task performance remains poorly understood. Therefore, in this study, we investigate these trade-offs across tasks of differing difficulty levels using four widely used morphological complexity metrics and control complexity measured by FLOPs. We investigate how these factors jointly influence task performance through evolutionary robot experiments. Results show that optimal performance depends on the alignment between morphology and control: simpler morphologies and lightweight controllers suffice for easier tasks, while harder tasks demand higher complexities in both dimensions. In addition, a clear trade-off can be observed between the morphological and control complexities that achieve the same task performance. Moreover, we propose a sensitivity analysis to expose the task-specific contributions of individual morphological metrics. Our study establishes a framework for investigating the relationships between morphology, control, and task performance, advancing the development of task-specific robotic designs that balance computational efficiency with adaptability. This study contributes to the practical application of soft robotics in real-world scenarios by providing actionable insights.

Embodied Manipulation with Past and Future Morphologies through an Open Parametric Hand Design

Oct 24, 2024

Abstract:A human-shaped robotic hand offers unparalleled versatility and fine motor skills, enabling it to perform a broad spectrum of tasks with precision, power and robustness. Across the paleontological record and animal kingdom we see a wide range of alternative hand and actuation designs. Understanding the morphological design space and the resulting emergent behaviors can not only aid our understanding of dexterous manipulation and its evolution, but also assist design optimization, achieving and eventually surpassing human capabilities. Exploration of hand embodiment has to date been limited by the inaccessibility of customizable hands in the real world, and by the reality gap in simulation of complex interactions. We introduce an open parametric design which integrates techniques for simplified customization, fabrication, and control with design features to maximize behavioral diversity. Non-linear rolling joints, anatomical tendon routing, and a low degree-of-freedom, modulating actuation system enable rapid production of single-piece 3D printable hands without compromising dexterous behaviors. To demonstrate this, we evaluated the design's low-level behavior range and stability, showing variable stiffness over two orders of magnitude. Additionally, we fabricated three hand designs: human, mirrored human with two thumbs, and aye-aye hands. Manipulation tests evaluate the variation in each hand's proficiency at handling diverse objects, and demonstrate emergent behaviors unique to each design. Overall, we shed light on new possible designs for robotic hands, provide a design space to compare and contrast different hand morphologies and structures, and share a practical and open-source design for exploring embodied manipulation.

Human-Robot Cooperative Piano Playing with Learning-Based Real-Time Music Accompaniment

Sep 18, 2024

Abstract:Recent advances in machine learning have paved the way for the development of musical and entertainment robots. However, human-robot cooperative instrument playing remains a challenge, particularly due to the intricate motor coordination and temporal synchronization. In this paper, we propose a theoretical framework for human-robot cooperative piano playing based on non-verbal cues. First, we present a music improvisation model that employs a recurrent neural network (RNN) to predict appropriate chord progressions based on the human's melodic input. Second, we propose a behavior-adaptive controller to facilitate seamless temporal synchronization, allowing the cobot to generate harmonious acoustics. The collaboration takes into account the bidirectional information flow between the human and robot. We have developed an entropy-based system to assess the quality of cooperation by analyzing the impact of different communication modalities during human-robot collaboration. Experiments demonstrate that our RNN-based improvisation can achieve a 93% accuracy rate. Meanwhile, with the MPC adaptive controller, the robot could respond to the human teammate in homophony performances with real-time accompaniment. Our designed framework has been validated to be effective in allowing humans and robots to work collaboratively in the artistic piano-playing task.

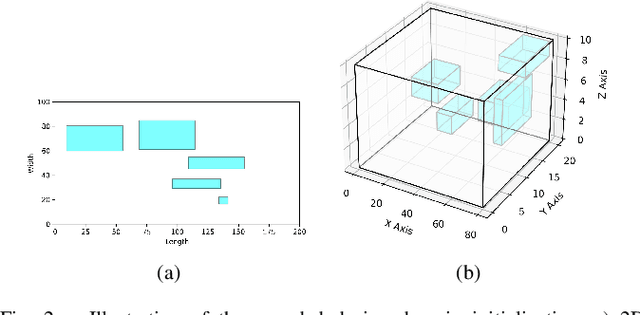

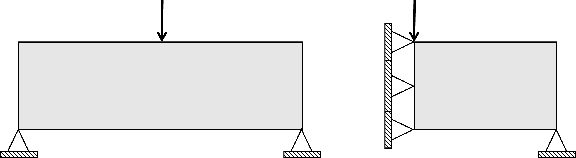

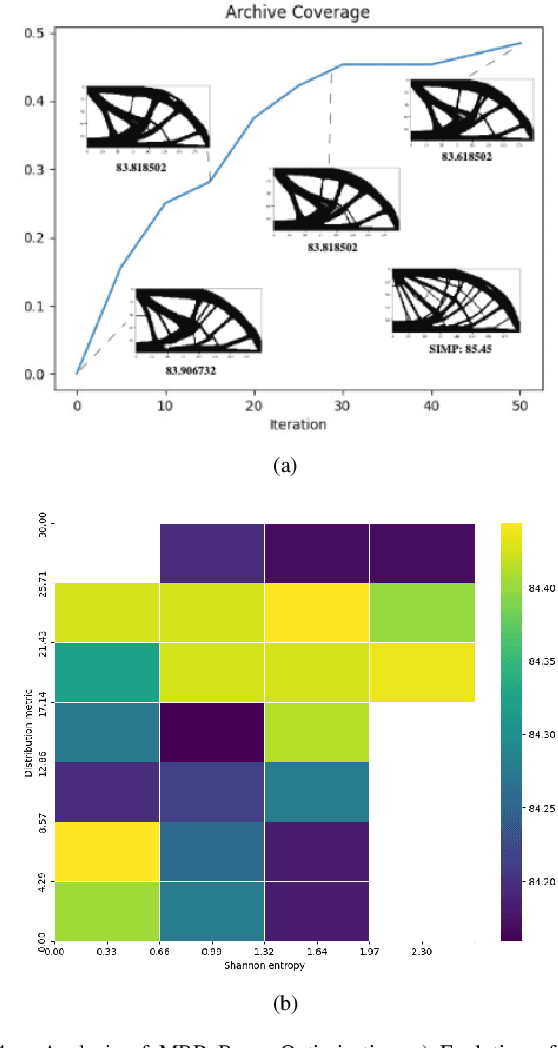

A 'MAP' to find high-performing soft robot designs: Traversing complex design spaces using MAP-elites and Topology Optimization

Jul 10, 2024

Abstract:Soft robotics has emerged as the standard solution for grasping deformable objects, and has proven invaluable for mobile robotic exploration in extreme environments. However, despite this growth, there are no widely adopted computational design tools that produce quality, manufacturable designs. To advance beyond the diminishing returns of heuristic bio-inspiration, the field needs efficient tools to explore the complex, non-linear design spaces present in soft robotics, and find novel high-performing designs. In this work, we investigate a hierarchical design optimization methodology which combines the strengths of topology optimization and quality diversity optimization to generate diverse and high-performance soft robots by evolving the design domain. The method embeds variably sized void regions within the design domain and evolves their size and position, to facilitate a richer exploration of the design space and find a diverse set of high-performing soft robots. We demonstrate its efficacy on both benchmark topology optimization problems and soft robotic design problems, and show the method enhances grasp performance when applied to soft grippers. Our method provides a new framework to design parts in complex design domains, both soft and rigid.

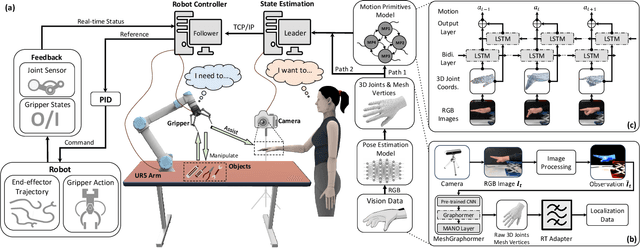

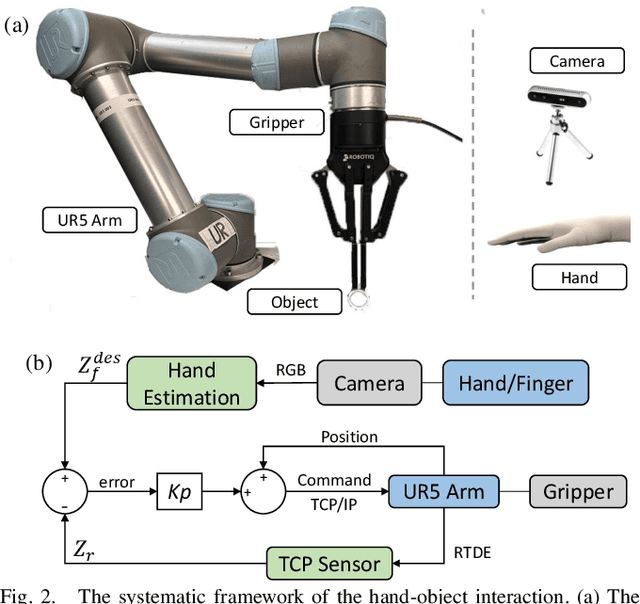

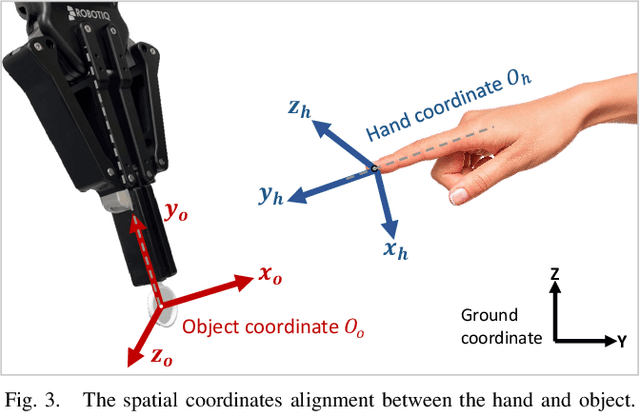

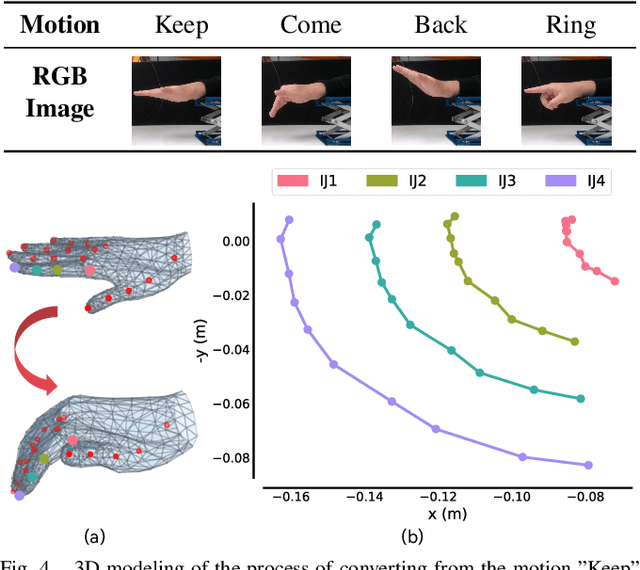

Real-Time Dynamic Robot-Assisted Hand-Object Interaction via Motion Primitives

May 29, 2024

Abstract:Advances in artificial intelligence (AI) have been propelling the evolution of human-robot interaction (HRI) technologies. However, significant challenges remain in achieving seamless interactions, particularly in tasks requiring physical contact with humans. These challenges arise from the need for accurate real-time perception of human actions, adaptive control algorithms for robots, and the effective coordination between human and robotic movements. In this paper, we propose an approach to enhancing physical HRI with a focus on dynamic robot-assisted hand-object interaction (HOI). Our methodology integrates hand pose estimation, adaptive robot control, and motion primitives to facilitate human-robot collaboration. Specifically, we employ a transformer-based algorithm to perform real-time 3D modeling of human hands from single RGB images, based on which a motion primitives model (MPM) is designed to translate human hand motions into robotic actions. The robot's action implementation is dynamically fine-tuned using the continuously updated 3D hand models. Experimental validations, including a ring-wearing task, demonstrate the system's effectiveness in adapting to real-time movements and assisting in precise task executions.

Fin-QD: A Computational Design Framework for Soft Grippers: Integrating MAP-Elites and High-fidelity FEM

Nov 21, 2023

Abstract:Computational design can unlock the full potential of soft robotics, which has the drawback of being highly nonlinear in material, structure, and contact. To date, considerable research interest has been shown in individual soft fingers, but the frame design space (how each soft finger is assembled) remains largely unexplored. Computational design remains challenging for a finger-based soft gripper that must successfully grip multiple geometrically distinct object types. Including the design space for the gripper frame can bring huge difficulties for conventional optimisation algorithms and fitness calculation methods due to the exponential growth of the high-dimensional design space. This work proposes an automated computational design optimisation framework that generates gripper diversity to individually grasp geometrically distinct object types based on a quality-diversity approach. This work first discusses a significantly large design space (28 design parameters) for a finger-based soft gripper, including the rarely explored design space of finger arrangement that is converted to various configurations to arrange individual soft fingers. Then, a contact-based Finite Element Modelling (FEM) is proposed in SOFA to output high-fidelity grasping data for fitness evaluation and feature measurements. Finally, diverse gripper designs are obtained from the framework while considering features such as the volume and workspace of grippers. This work bridges the gap of computationally exploring the vast design space of finger-based soft grippers while grasping a large number of geometrically distinct object types with a simple control scheme.
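For readers unfamiliar with quality-diversity search, the following is a minimal, generic sketch of the MAP-Elites loop that the abstract above builds on. It is not the authors' framework: the two-parameter design, the toy `evaluate` function (standing in for the FEM simulation), the feature descriptors, and all names and constants are assumptions chosen only for illustration.

```python
import random


def evaluate(design):
    """Toy stand-in for a simulator (assumption): returns (fitness, features)."""
    x, y = design
    fitness = -((x - 0.5) ** 2 + (y - 0.5) ** 2)  # higher is better
    features = (x, y)  # hypothetical descriptors, each in [0, 1]
    return fitness, features


def to_cell(features, bins):
    """Map continuous features in [0, 1] to a discrete archive cell."""
    return tuple(min(int(f * bins), bins - 1) for f in features)


def map_elites(iterations=2000, bins=5, n_random=50, seed=0):
    """Keep the best design found so far (the elite) in each feature cell."""
    rng = random.Random(seed)
    archive = {}  # cell -> (fitness, design)
    for i in range(iterations):
        if archive and i >= n_random:
            # Mutate a randomly chosen elite, clamping genes to [0, 1].
            _, parent = rng.choice(list(archive.values()))
            child = tuple(
                min(1.0, max(0.0, g + rng.gauss(0.0, 0.1))) for g in parent
            )
        else:
            # Bootstrap the archive with random designs.
            child = (rng.random(), rng.random())
        fitness, features = evaluate(child)
        cell = to_cell(features, bins)
        # Insert if the cell is empty or the child beats the current elite.
        if cell not in archive or fitness > archive[cell][0]:
            archive[cell] = (fitness, child)
    return archive


if __name__ == "__main__":
    elites = map_elites()
    print(f"{len(elites)} cells filled out of 25")
```

The result is an archive of diverse designs, one elite per feature cell, rather than a single optimum. In a gripper setting the features might be quantities such as volume and workspace, as the abstract mentions, with fitness coming from a grasp simulation.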

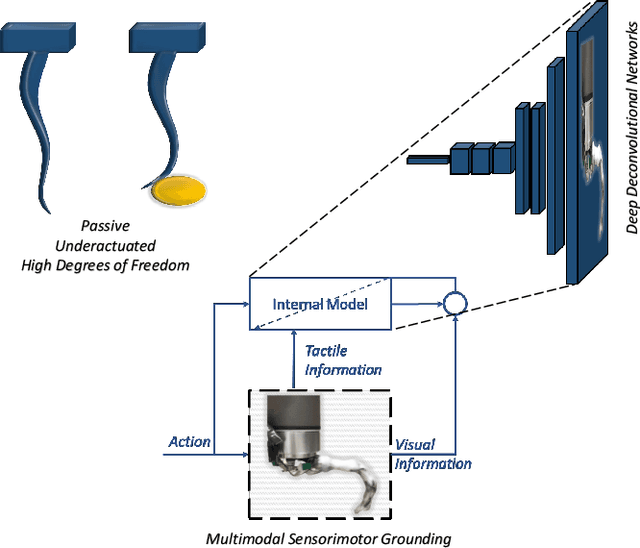

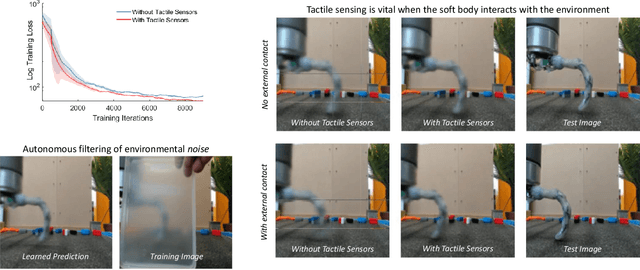

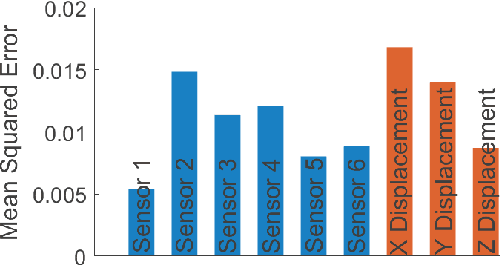

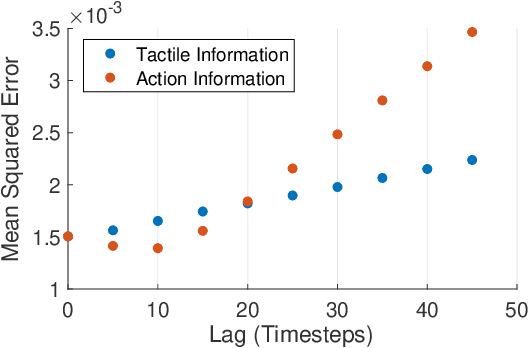

Multimodal Sensor Fusion for Learning Rich Models for Interacting Soft Robots

May 09, 2022

Abstract:Soft robots are typically approximated as low-dimensional systems, especially when learning-based methods are used. This leads to models that are limited in their capability to predict the large number of deformation modes and interactions that a soft robot can have. In this work, we present a deep-learning methodology to learn high-dimensional visual models of a soft robot combining multimodal sensorimotor information. The models are learned in an end-to-end fashion, thereby requiring no intermediate sensor processing or grounding of data. The capabilities and advantages of such a modelling approach are shown on a soft anthropomorphic finger with embedded soft sensors. We also show how such an approach can be extended to develop higher-level cognitive functions like identification of the self and the external environment and acquiring object manipulation skills. This work is a step towards the integration of soft robotics and developmental robotics architectures to create the next generation of intelligent soft robots.
