Mingqi Yuan

Continual Policy Distillation from Distributed Reinforcement Learning Teachers

Jan 30, 2026

Abstract: Continual Reinforcement Learning (CRL) aims to develop lifelong learning agents to continuously acquire knowledge across diverse tasks while mitigating catastrophic forgetting. This requires efficiently managing the stability-plasticity dilemma and leveraging prior experience to rapidly generalize to novel tasks. While various enhancement strategies for both aspects have been proposed, achieving scalable performance by directly applying RL to sequential task streams remains challenging. In this paper, we propose a novel teacher-student framework that decouples CRL into two independent processes: training single-task teacher models through distributed RL and continually distilling them into a central generalist model. This design is motivated by the observation that RL excels at solving single tasks, while policy distillation -- a relatively stable supervised learning process -- is well aligned with large foundation models and multi-task learning. Moreover, a mixture-of-experts (MoE) architecture and a replay-based approach are employed to enhance the plasticity and stability of the continual policy distillation process. Extensive experiments on the Meta-World benchmark demonstrate that our framework enables efficient continual RL, recovering over 85% of teacher performance while constraining task-wise forgetting to within 10%.
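
The distillation step described in this abstract can be pictured with a small sketch. It is an illustration under stated assumptions, not the paper's implementation: actions are treated as discrete so the loss is a plain KL divergence between teacher and student action distributions, and the replay mechanism is reduced to mixing a fixed fraction of earlier-task samples into each batch. The function names `distillation_loss` and `mix_with_replay` and the `replay_fraction` parameter are hypothetical.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of action logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def distillation_loss(teacher_logits, student_logits):
    """Mean KL(teacher || student) over a batch of per-state action logits."""
    pairs = list(zip(teacher_logits, student_logits))
    return sum(kl_divergence(softmax(t), softmax(s)) for t, s in pairs) / len(pairs)


def mix_with_replay(current_batch, replay_buffer, replay_fraction, rng):
    """Append samples drawn from earlier tasks to the current task's batch.

    Hypothetical helper: the replay share is an assumed knob, not a value
    taken from the paper.
    """
    n_replay = min(len(replay_buffer), round(replay_fraction * len(current_batch)))
    return list(current_batch) + rng.sample(replay_buffer, n_replay)


# Toy data: each sample is (teacher_logits, student_logits) for one state.
rng = random.Random(0)
old_task = [([2.0, 0.0, -1.0], [1.5, 0.2, -0.5]) for _ in range(8)]
new_task = [([0.0, 3.0, 0.0], [0.5, 0.5, 0.5]) for _ in range(4)]

batch = mix_with_replay(new_task, old_task, replay_fraction=0.5, rng=rng)
loss = distillation_loss([t for t, _ in batch], [s for _, s in batch])
print(f"batch size = {len(batch)}, distillation loss = {loss:.4f}")
```

In a real system the student logits would come from the generalist network and the loss would be minimized by gradient descent; here they are fixed numbers so the sketch stays dependency-free.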

PvP: Data-Efficient Humanoid Robot Learning with Proprioceptive-Privileged Contrastive Representations

Dec 15, 2025

Abstract: Achieving efficient and robust whole-body control (WBC) is essential for enabling humanoid robots to perform complex tasks in dynamic environments. Despite the success of reinforcement learning (RL) in this domain, its sample inefficiency remains a significant challenge due to the intricate dynamics and partial observability of humanoid robots. To address this limitation, we propose PvP, a Proprioceptive-Privileged contrastive learning framework that leverages the intrinsic complementarity between proprioceptive and privileged states. PvP learns compact and task-relevant latent representations without requiring hand-crafted data augmentations, enabling faster and more stable policy learning. To support systematic evaluation, we develop SRL4Humanoid, the first unified and modular framework that provides high-quality implementations of representative state representation learning (SRL) methods for humanoid robot learning. Extensive experiments on the LimX Oli robot across velocity tracking and motion imitation tasks demonstrate that PvP significantly improves sample efficiency and final performance compared to baseline SRL methods. Our study further provides practical insights into integrating SRL with RL for humanoid WBC, offering valuable guidance for data-efficient humanoid robot learning.

Gait-Adaptive Perceptive Humanoid Locomotion with Real-Time Under-Base Terrain Reconstruction

Dec 08, 2025

Abstract: For full-size humanoid robots, even with recent advances in reinforcement learning-based control, achieving reliable locomotion on complex terrains, such as long staircases, remains challenging. In such settings, limited perception, ambiguous terrain cues, and insufficient adaptation of gait timing can cause even a single misplaced or mistimed step to result in rapid loss of balance. We introduce a perceptive locomotion framework that merges terrain sensing, gait regulation, and whole-body control into a single reinforcement learning policy. A downward-facing depth camera mounted under the base observes the support region around the feet, and a compact U-Net reconstructs a dense egocentric height map from each frame in real time, operating at the same frequency as the control loop. The perceptual height map, together with proprioceptive observations, is processed by a unified policy that produces joint commands and a global stepping-phase signal, allowing gait timing and whole-body posture to be adapted jointly to the commanded motion and local terrain geometry. We further adopt a single-stage successive teacher-student training scheme for efficient policy learning and knowledge transfer. Experiments conducted on a 31-DoF, 1.65 m humanoid robot demonstrate robust locomotion in both simulation and real-world settings, including forward and backward stair ascent and descent, as well as crossing a 46 cm gap. Project Page: https://ga-phl.github.io/

Plasticine: Accelerating Research in Plasticity-Motivated Deep Reinforcement Learning

Apr 24, 2025

Abstract: Developing lifelong learning agents is crucial for artificial general intelligence. However, deep reinforcement learning (RL) systems often suffer from plasticity loss, where neural networks gradually lose their ability to adapt during training. Despite its significance, this field lacks unified benchmarks and evaluation protocols. We introduce Plasticine, the first open-source framework for benchmarking plasticity optimization in deep RL. Plasticine provides single-file implementations of over 13 mitigation methods, 10 evaluation metrics, and learning scenarios with increasing non-stationarity levels from standard to open-ended environments. This framework enables researchers to systematically quantify plasticity loss, evaluate mitigation strategies, and analyze plasticity dynamics across different contexts. Our documentation, examples, and source code are available at https://github.com/RLE-Foundation/Plasticine.

ULTHO: Ultra-Lightweight yet Efficient Hyperparameter Optimization in Deep Reinforcement Learning

Mar 08, 2025

Abstract: Hyperparameter optimization (HPO) is a billion-dollar problem in machine learning that significantly impacts training efficiency and model performance. However, achieving efficient and robust HPO in deep reinforcement learning (RL) is consistently challenging due to its high non-stationarity and computational cost. To tackle this problem, existing approaches attempt to adapt common HPO techniques (e.g., population-based training or Bayesian optimization) to the RL scenario. However, they remain sample-inefficient and computationally expensive, which limits their applicability. In this paper, we propose ULTHO, an ultra-lightweight yet powerful framework for fast HPO in deep RL within single runs. Specifically, we formulate the HPO process as a multi-armed bandit with clustered arms (MABC) and link it directly to long-term return optimization. ULTHO also provides a quantified and statistical perspective to filter the HPs efficiently. We test ULTHO on benchmarks including ALE, Procgen, MiniGrid, and PyBullet. Extensive experiments demonstrate that ULTHO can achieve superior performance with a simple architecture, contributing to the development of advanced and automated RL systems.
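
To make the bandit framing concrete, the sketch below treats each hyperparameter as a cluster and each candidate value as an arm, and runs one standard UCB1 bandit per cluster. This is an assumption-laden illustration: UCB1 stands in for whatever selection rule ULTHO actually uses, and the `UCB1` class, the `toy_return` function, and the candidate values are all hypothetical.

```python
import math


class UCB1:
    """Standard UCB1 bandit over the candidate values of one hyperparameter."""

    def __init__(self, candidates, c=2.0):
        self.candidates = list(candidates)
        self.counts = [0] * len(self.candidates)
        self.means = [0.0] * len(self.candidates)
        self.c = c  # exploration coefficient (assumed value)

    def select(self):
        # Try every arm once before applying the confidence bound.
        for i, n in enumerate(self.counts):
            if n == 0:
                return i
        t = sum(self.counts)
        scores = [
            m + math.sqrt(self.c * math.log(t) / n)
            for m, n in zip(self.means, self.counts)
        ]
        return max(range(len(scores)), key=scores.__getitem__)

    def update(self, index, ret):
        # Incremental running mean of the returns observed for this arm.
        self.counts[index] += 1
        self.means[index] += (ret - self.means[index]) / self.counts[index]


def toy_return(config):
    """Hypothetical stand-in for the return of one training interval."""
    lr_score = {1e-4: 0.1, 3e-4: 0.7, 1e-3: 0.2}[config["learning_rate"]]
    clip_score = {0.1: 0.1, 0.2: 0.3, 0.3: 0.1}[config["clip_range"]]
    return lr_score + clip_score


# One bandit per cluster (hyperparameter); arms are its candidate values.
bandits = {
    "learning_rate": UCB1([1e-4, 3e-4, 1e-3]),
    "clip_range": UCB1([0.1, 0.2, 0.3]),
}

for _ in range(2000):
    choice = {name: bandit.select() for name, bandit in bandits.items()}
    config = {name: bandits[name].candidates[i] for name, i in choice.items()}
    ret = toy_return(config)
    for name, i in choice.items():
        bandits[name].update(i, ret)

most_pulled = {
    name: b.candidates[max(range(len(b.counts)), key=b.counts.__getitem__)]
    for name, b in bandits.items()
}
print(most_pulled)
```

Because all selection happens inside a single loop, the sketch mirrors the "within single runs" idea: no separate training runs are launched per configuration.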

RLeXplore: Accelerating Research in Intrinsically-Motivated Reinforcement Learning

May 29, 2024

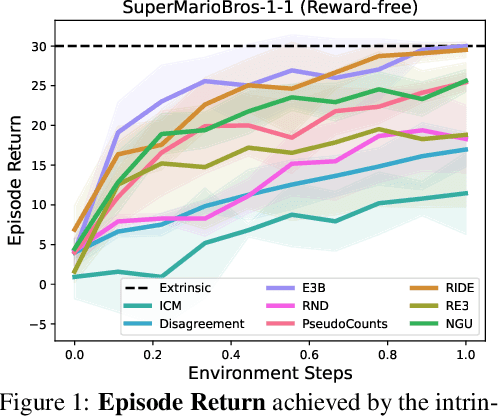

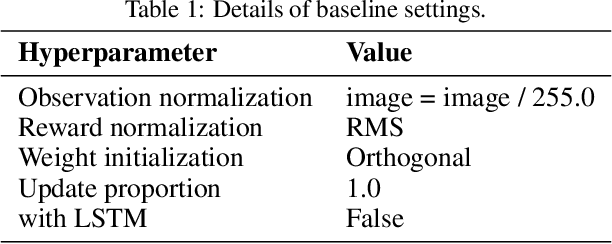

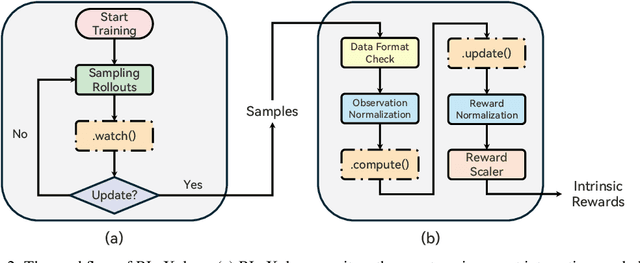

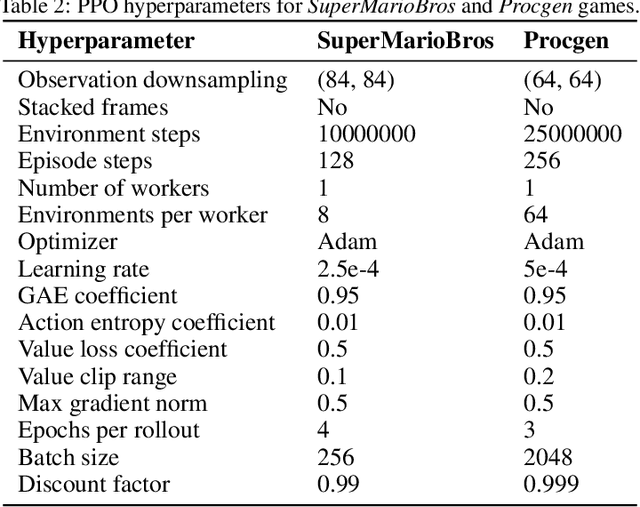

Abstract: Extrinsic rewards can effectively guide reinforcement learning (RL) agents in specific tasks. However, extrinsic rewards frequently fall short in complex environments due to the significant human effort needed for their design and annotation. This limitation underscores the necessity for intrinsic rewards, which offer auxiliary and dense signals and can enable agents to learn in an unsupervised manner. Although various intrinsic reward formulations have been proposed, their implementation and optimization details are insufficiently explored and lack standardization, thereby hindering research progress. To address this gap, we introduce RLeXplore, a unified, highly modularized, and plug-and-play framework offering reliable implementations of eight state-of-the-art intrinsic reward algorithms. Furthermore, we conduct an in-depth study that identifies critical implementation details and establishes well-justified standard practices in intrinsically-motivated RL. The source code for RLeXplore is available at https://github.com/RLE-Foundation/RLeXplore.

Real-Time Dynamic Robot-Assisted Hand-Object Interaction via Motion Primitives

May 29, 2024

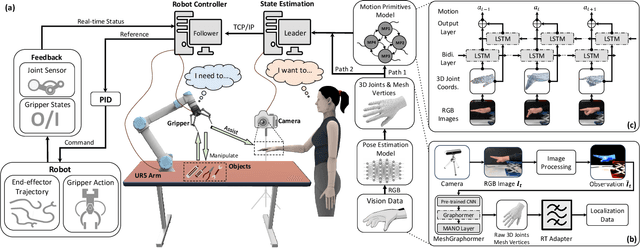

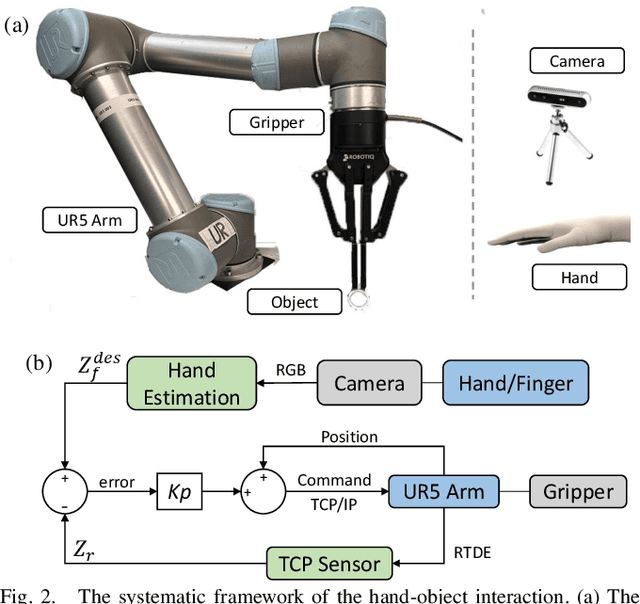

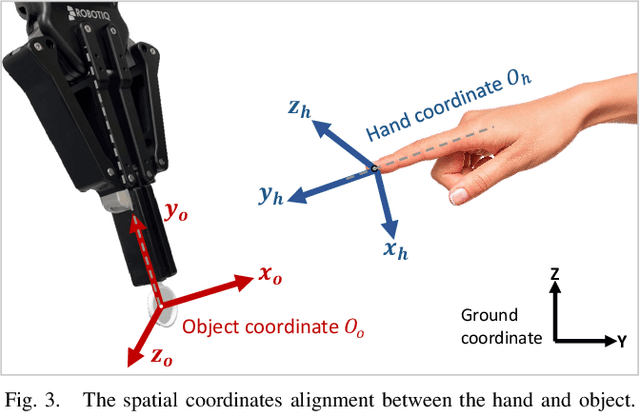

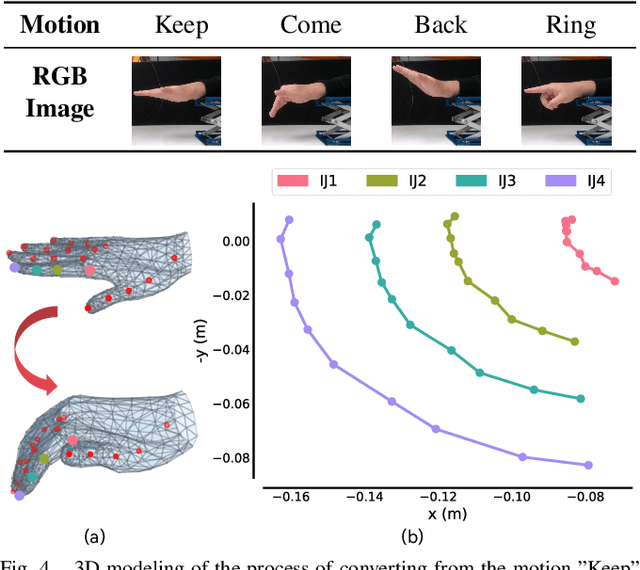

Abstract: Advances in artificial intelligence (AI) have been propelling the evolution of human-robot interaction (HRI) technologies. However, significant challenges remain in achieving seamless interactions, particularly in tasks requiring physical contact with humans. These challenges arise from the need for accurate real-time perception of human actions, adaptive control algorithms for robots, and the effective coordination between human and robotic movements. In this paper, we propose an approach to enhancing physical HRI with a focus on dynamic robot-assisted hand-object interaction (HOI). Our methodology integrates hand pose estimation, adaptive robot control, and motion primitives to facilitate human-robot collaboration. Specifically, we employ a transformer-based algorithm to perform real-time 3D modeling of human hands from single RGB images, based on which a motion primitives model (MPM) is designed to translate human hand motions into robotic actions. The robot's action implementation is dynamically fine-tuned using the continuously updated 3D hand models. Experimental validations, including a ring-wearing task, demonstrate the system's effectiveness in adapting to real-time movements and assisting in precise task executions.

RLLTE: Long-Term Evolution Project of Reinforcement Learning

Sep 28, 2023

Abstract: We present RLLTE: a long-term evolution, extremely modular, and open-source framework for reinforcement learning (RL) research and application. Beyond delivering top-notch algorithm implementations, RLLTE also serves as a toolkit for developing algorithms. More specifically, RLLTE decouples the RL algorithms completely from the exploitation-exploration perspective, providing a large number of components to accelerate algorithm development and evolution. In particular, RLLTE is the first RL framework to build a complete and rich ecosystem, which includes model training, evaluation, deployment, benchmark hub, and large language model (LLM)-empowered copilot. RLLTE is expected to set standards for RL engineering practice and to stimulate both industry and academia.

Automatic Intrinsic Reward Shaping for Exploration in Deep Reinforcement Learning

Jan 26, 2023

Abstract: We present AIRS: Automatic Intrinsic Reward Shaping that intelligently and adaptively provides high-quality intrinsic rewards to enhance exploration in reinforcement learning (RL). More specifically, AIRS selects a shaping function from a predefined set based on the estimated task return in real-time, providing reliable exploration incentives and alleviating the biased objective problem. Moreover, we develop an intrinsic reward toolkit to provide efficient and reliable implementations of diverse intrinsic reward approaches. We test AIRS on various tasks of Procgen games and DeepMind Control Suite. Extensive simulations demonstrate that AIRS can outperform the benchmarking schemes and achieve superior performance with a simple architecture.

Tackling Visual Control via Multi-View Exploration Maximization

Nov 28, 2022

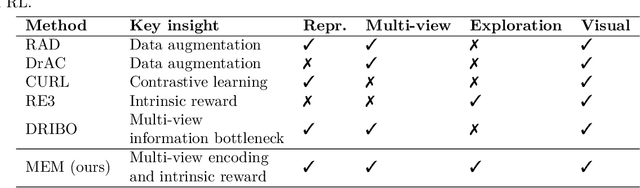

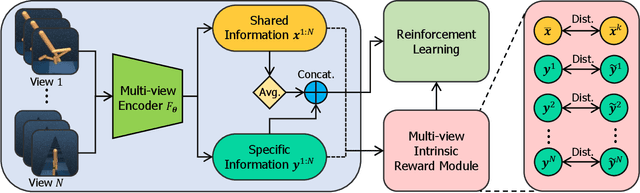

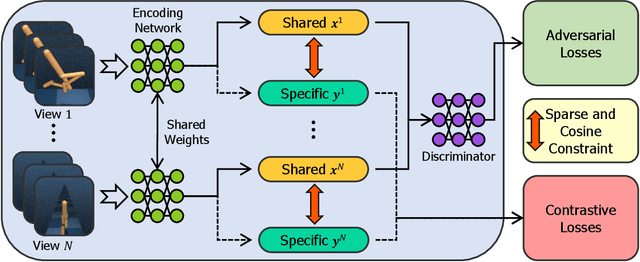

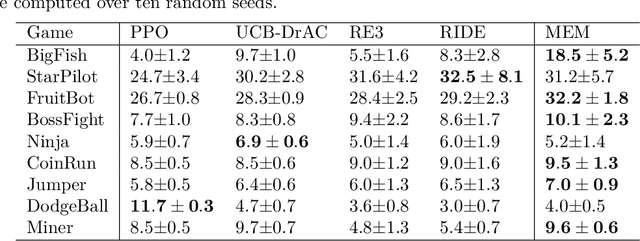

Abstract: We present MEM: Multi-view Exploration Maximization for tackling complex visual control tasks. To the best of our knowledge, MEM is the first approach that combines multi-view representation learning and intrinsic reward-driven exploration in reinforcement learning (RL). More specifically, MEM first extracts the specific and shared information of multi-view observations to form high-quality features before performing RL on the learned features, enabling the agent to fully comprehend the environment and yield better actions. Furthermore, MEM transforms the multi-view features into intrinsic rewards based on entropy maximization to encourage exploration. As a result, MEM can significantly improve the sample efficiency and generalization ability of the RL agent, facilitating solving real-world problems with high-dimensional observations and sparse-reward spaces. We evaluate MEM on various tasks from DeepMind Control Suite and Procgen games. Extensive simulation results demonstrate that MEM can achieve superior performance and outperform the benchmarking schemes with a simple architecture and higher efficiency.
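
One common way to turn features into an entropy-driven intrinsic reward is a k-nearest-neighbor estimate: a state whose feature vector lies far from its neighbors is considered novel and receives a larger bonus. The sketch below shows that generic estimator only; whether MEM uses this exact form is an assumption, and the function name `knn_intrinsic_rewards` and the choice of `k` are hypothetical.

```python
import math


def knn_intrinsic_rewards(features, k=3):
    """Reward each feature vector by log(1 + distance to its k-th nearest neighbor).

    A generic particle-based entropy bonus, used here as an assumed stand-in
    for the entropy-maximization reward mentioned in the abstract.
    """
    rewards = []
    for i, x in enumerate(features):
        dists = sorted(math.dist(x, y) for j, y in enumerate(features) if j != i)
        if not dists:
            rewards.append(0.0)  # a single sample has no neighbors to compare with
            continue
        kth = dists[min(k, len(dists)) - 1]
        rewards.append(math.log(1.0 + kth))
    return rewards


# Four tightly clustered feature vectors and one outlier.
features = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1), (5.0, 5.0)]
rewards = knn_intrinsic_rewards(features, k=2)
for f, r in zip(features, rewards):
    print(f, round(r, 3))
```

The outlier receives the largest bonus, which is the behavior that pushes an agent toward rarely visited regions of feature space.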