Huijiang Wang

Self-Closing Suction Grippers for Industrial Grasping via Form-Flexible Design

Jul 02, 2025

Abstract:Shape-morphing robots have shown benefits in industrial grasping. We propose form-flexible grippers for adaptive grasping. The design is based on a hybrid jamming and suction mechanism, which deforms to handle objects that differ significantly in size from the aperture, including both larger and smaller parts. Unlike traditional grippers, this gripper self-closes to form an airtight seal. Under vacuum, a wide grasping range is achieved through a passive morphing mechanism at the interface that harmonizes pressure and flow rate. The hybrid gripper can securely grasp an egg as small as 54.5% of its aperture, while achieving a maximum load-to-mass ratio of 94.3.

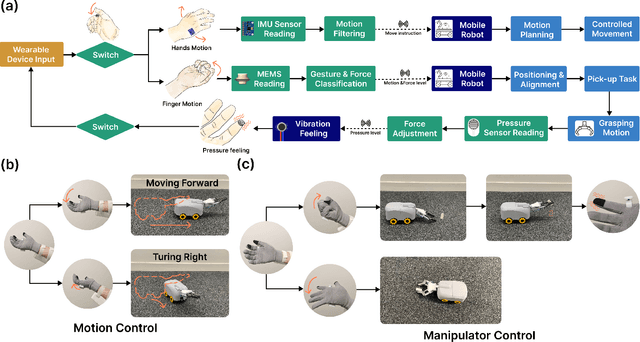

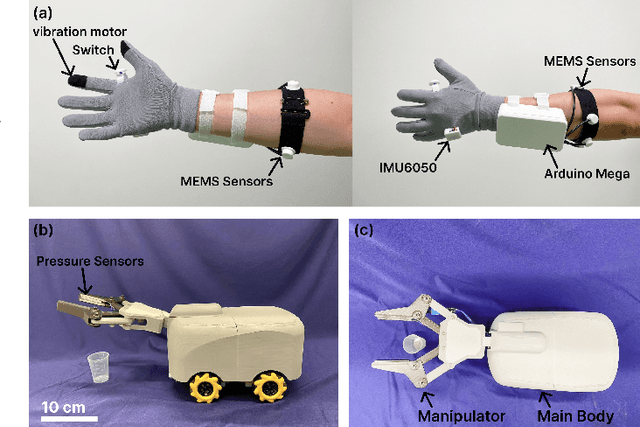

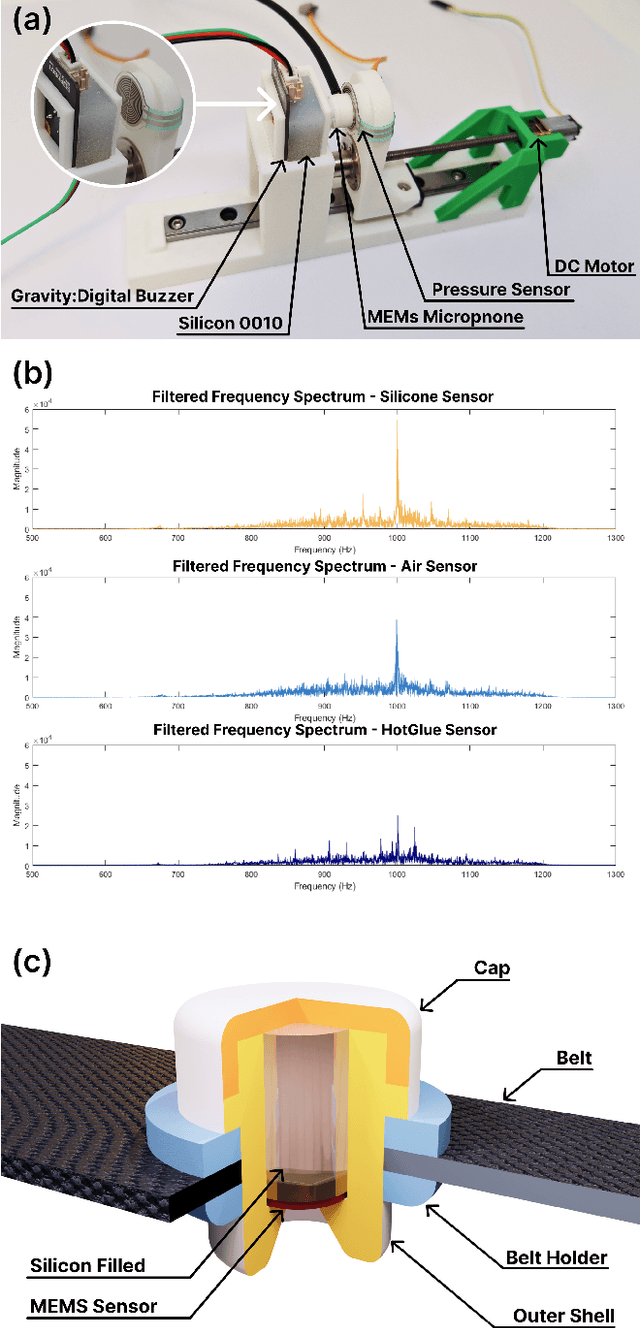

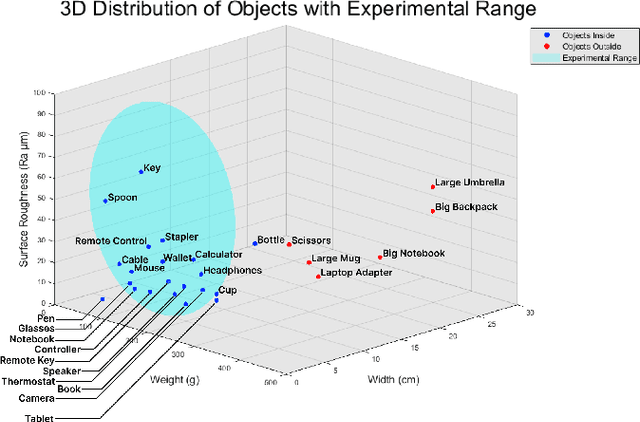

Multi-Sensor Fusion-Based Mobile Manipulator Remote Control for Intelligent Smart Home Assistance

Apr 17, 2025

Abstract:This paper proposes a wearable-controlled mobile manipulator system for intelligent smart home assistance, integrating MEMS capacitive microphones, IMU sensors, vibration motors, and pressure feedback to enhance human-robot interaction. The wearable device captures forearm muscle activity and converts it into real-time control signals for mobile manipulation. Using a CNN-LSTM model, the wearable device achieves an offline classification accuracy of 88.33% across six distinct movement-force classes for hand gestures, while real-world experiments involving five participants yield a practical accuracy of 83.33% with an average system response time of 1.2 seconds. In navigation and grasping tasks performed in human-robot synergy, the robot achieved a 98% task success rate with an average trajectory deviation of only 3.6 cm. Finally, the wearable-controlled mobile manipulator system achieved a 93.3% gripping success rate, a transfer success rate of 95.6%, and a full-task success rate of 91.1% during object grasping and transfer tests, in which a total of 9 object-texture combinations were evaluated. The results of these three experiments validate the effectiveness of MEMS-based wearable sensing combined with multi-sensor fusion for reliable and intuitive control of assistive robots in smart home scenarios.

Human-Robot Cooperative Piano Playing with Learning-Based Real-Time Music Accompaniment

Sep 18, 2024

Abstract:Recent advances in machine learning have paved the way for the development of musical and entertainment robots. However, human-robot cooperative instrument playing remains a challenge, particularly due to the intricate motor coordination and temporal synchronization. In this paper, we propose a theoretical framework for human-robot cooperative piano playing based on non-verbal cues. First, we present a music improvisation model that employs a recurrent neural network (RNN) to predict appropriate chord progressions based on the human's melodic input. Second, we propose a behavior-adaptive controller to facilitate seamless temporal synchronization, allowing the cobot to generate harmonious acoustics. The collaboration takes into account the bidirectional information flow between the human and robot. We have developed an entropy-based system to assess the quality of cooperation by analyzing the impact of different communication modalities during human-robot collaboration. Experiments demonstrate that our RNN-based improvisation can achieve a 93% accuracy rate. Meanwhile, with the MPC adaptive controller, the robot could respond to the human teammate in homophony performances with real-time accompaniment. Our designed framework has been validated to be effective in allowing humans and robots to work collaboratively in the artistic piano-playing task.

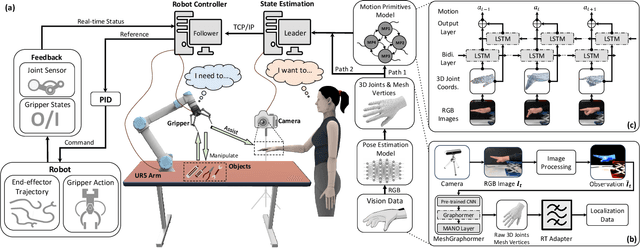

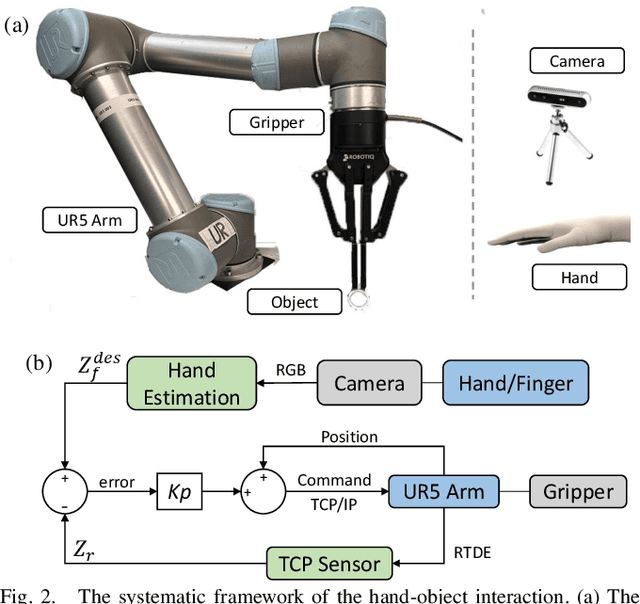

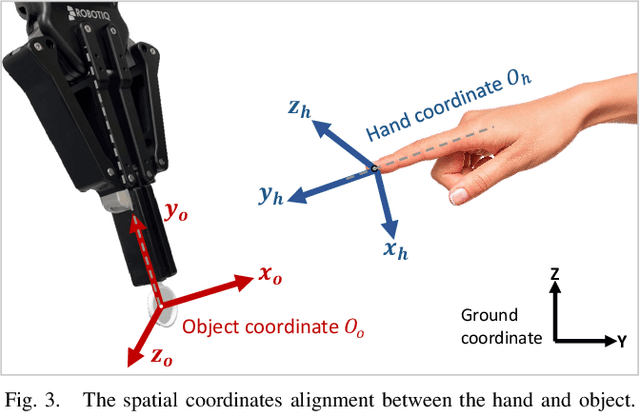

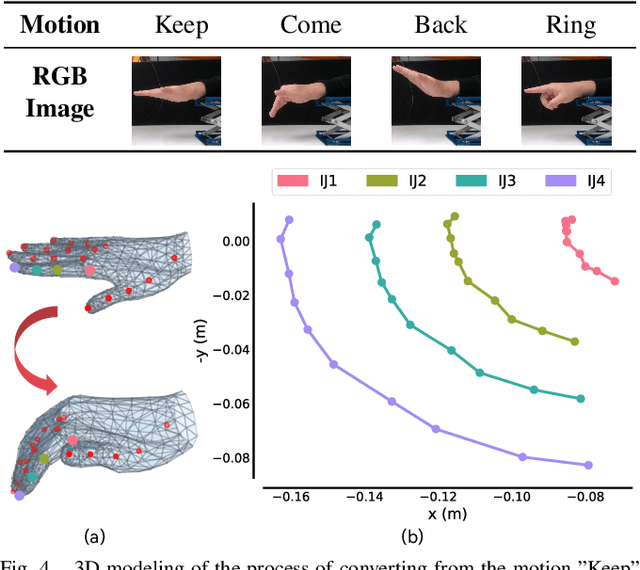

Real-Time Dynamic Robot-Assisted Hand-Object Interaction via Motion Primitives

May 29, 2024

Abstract:Advances in artificial intelligence (AI) have been propelling the evolution of human-robot interaction (HRI) technologies. However, significant challenges remain in achieving seamless interactions, particularly in tasks requiring physical contact with humans. These challenges arise from the need for accurate real-time perception of human actions, adaptive control algorithms for robots, and the effective coordination between human and robotic movements. In this paper, we propose an approach to enhancing physical HRI with a focus on dynamic robot-assisted hand-object interaction (HOI). Our methodology integrates hand pose estimation, adaptive robot control, and motion primitives to facilitate human-robot collaboration. Specifically, we employ a transformer-based algorithm to perform real-time 3D modeling of human hands from single RGB images, based on which a motion primitives model (MPM) is designed to translate human hand motions into robotic actions. The robot's action implementation is dynamically fine-tuned using the continuously updated 3D hand models. Experimental validations, including a ring-wearing task, demonstrate the system's effectiveness in adapting to real-time movements and assisting in precise task executions.

DGRC: An Effective Fine-tuning Framework for Distractor Generation in Chinese Multi-choice Reading Comprehension

May 29, 2024

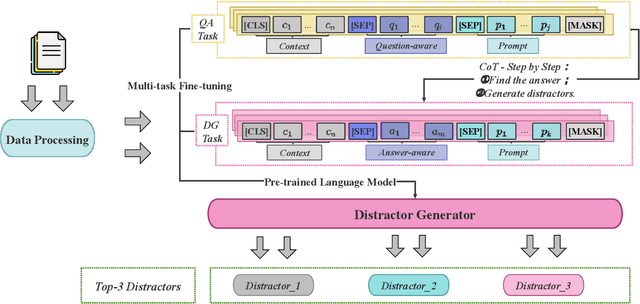

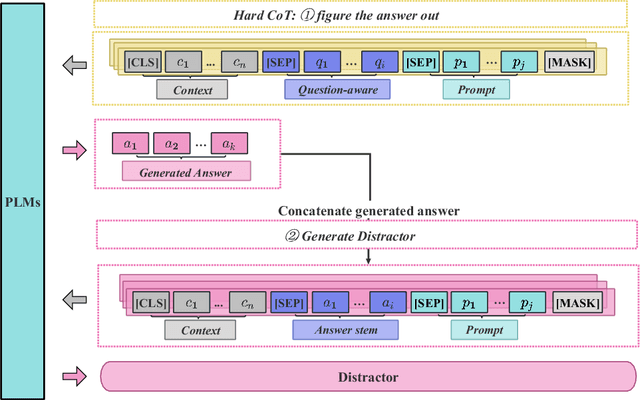

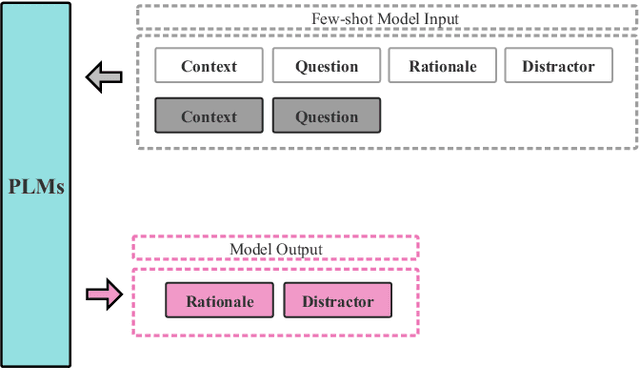

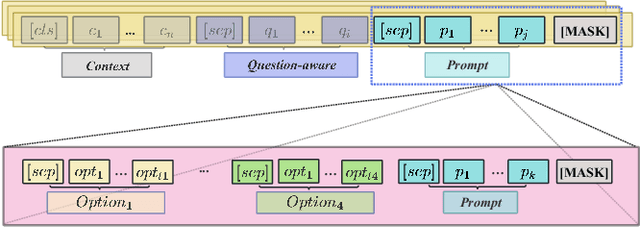

Abstract:When evaluating a learner's knowledge proficiency, the multiple-choice question is an efficient and widely used format in standardized tests. Nevertheless, generating these questions, particularly plausible distractors (incorrect options), poses a considerable challenge. Generally, distractor generation can be classified into cloze-style distractor generation (CDG) and natural questions distractor generation (NQDG). In contrast to CDG, utilizing pre-trained language models (PLMs) for NQDG presents three primary challenges: (1) PLMs are typically trained to generate "correct" content, like answers, while rarely trained to generate "plausible" content, like distractors; (2) PLMs often struggle to produce content that aligns well with specific knowledge and the style of exams; (3) NQDG requires the model to produce longer, context-sensitive, and question-relevant distractors. In this study, we introduce a fine-tuning framework named DGRC for NQDG in Chinese multi-choice reading comprehension from authentic examinations. DGRC comprises three major components: hard chain-of-thought, multi-task learning, and generation mask patterns. The experimental results demonstrate that DGRC significantly enhances generation performance, achieving a more than 2.5-fold improvement in BLEU scores.
