Wyatt Ubellacker

Flow-Based Synthesis of Reactive Tests for Discrete Decision-Making Systems with Temporal Logic Specifications

Apr 15, 2024

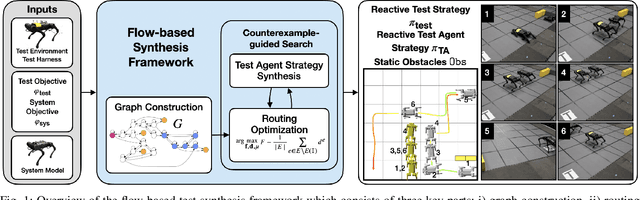

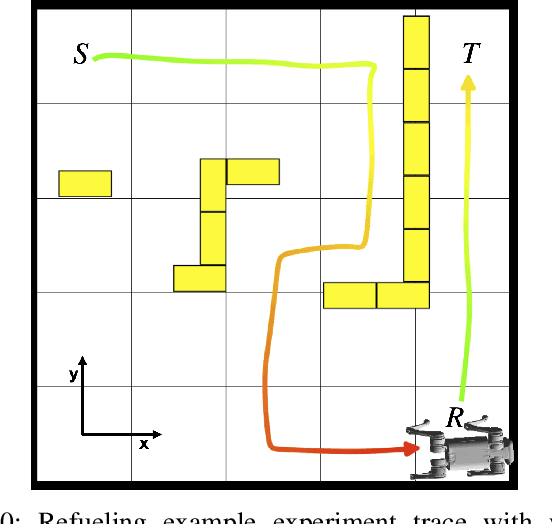

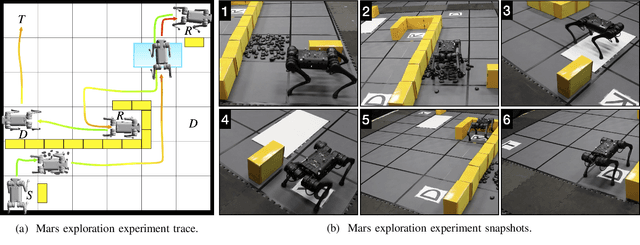

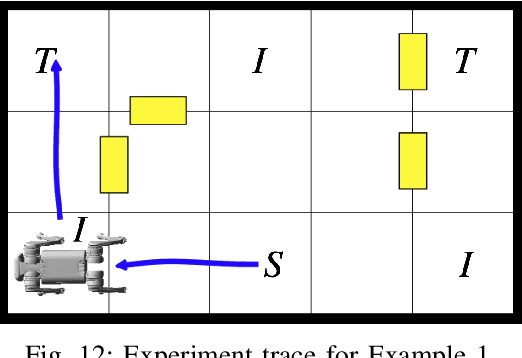

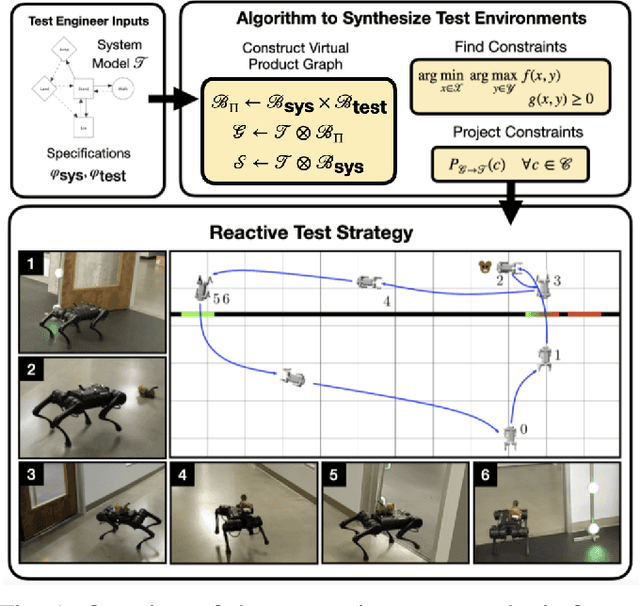

Abstract: Designing tests to evaluate if a given autonomous system satisfies complex specifications is challenging due to the complexity of these systems. This work proposes a flow-based approach for reactive test synthesis from temporal logic specifications, enabling the synthesis of test environments consisting of static and reactive obstacles and dynamic test agents. The temporal logic specifications describe desired test behavior, including system requirements as well as a test objective that is not revealed to the system. The synthesized test strategy places restrictions on system actions in reaction to the system state. The tests are minimally restrictive and accomplish the test objective while ensuring realizability of the system's objective without aiding it (semi-cooperative setting). Automata theory and flow networks are leveraged to formulate a mixed-integer linear program (MILP) to synthesize the test strategy. For a dynamic test agent, the agent strategy is synthesized for a GR(1) specification constructed from the solution of the MILP. If the specification is unrealizable by the dynamics of the test agent, a counterexample-guided approach is used to re-solve the MILP until a strategy is found. This flow-based, reactive test synthesis is conducted offline and is agnostic to the system controller. Finally, the resulting test strategy is demonstrated in simulation and experimentally on a pair of quadrupedal robots for a variety of specifications.
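As a rough illustration of why flow networks suit this kind of problem, the sketch below computes a maximum flow on a small unit-capacity graph. It is not the MILP from the paper: the graph, the node names, and the `max_flow` helper are hypothetical, and the algorithm is plain Edmonds-Karp. With unit capacities the flow value equals the number of edge-disjoint routes from start to goal, so a positive value means the system's objective is still reachable, and the value itself is the minimum number of edges a test environment would have to block to cut the goal off.

```python
from collections import deque


def max_flow(capacity, source, sink):
    """Edmonds-Karp maximum flow on a dict-of-dicts capacity graph."""
    # Build residual capacities, adding reverse edges with capacity 0.
    residual = {u: dict(nbrs) for u, nbrs in capacity.items()}
    for u, nbrs in capacity.items():
        for v in nbrs:
            residual.setdefault(v, {}).setdefault(u, 0)
    residual.setdefault(source, {})

    flow = 0
    while True:
        # Breadth-first search for a shortest augmenting path.
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v, cap in residual[u].items():
                if cap > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            return flow  # no augmenting path remains

        # Find the bottleneck capacity along the path.
        bottleneck = float("inf")
        v = sink
        while parent[v] is not None:
            bottleneck = min(bottleneck, residual[parent[v]][v])
            v = parent[v]

        # Push the bottleneck amount along the path.
        v = sink
        while parent[v] is not None:
            u = parent[v]
            residual[u][v] -= bottleneck
            residual[v][u] += bottleneck
            v = u
        flow += bottleneck


# Hypothetical transition graph: every edge is one system action (capacity 1).
graph = {
    "start": {"a": 1, "b": 1},
    "a": {"goal": 1, "b": 1},
    "b": {"goal": 1},
}
routes = max_flow(graph, "start", "goal")
```

Here `routes` counts the edge-disjoint ways to reach `goal`; removing an edge from `graph` and recomputing shows how a restriction changes reachability.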

Safety-critical Control of Quadrupedal Robots with Rolling Arms for Autonomous Inspection of Complex Environments

Dec 12, 2023

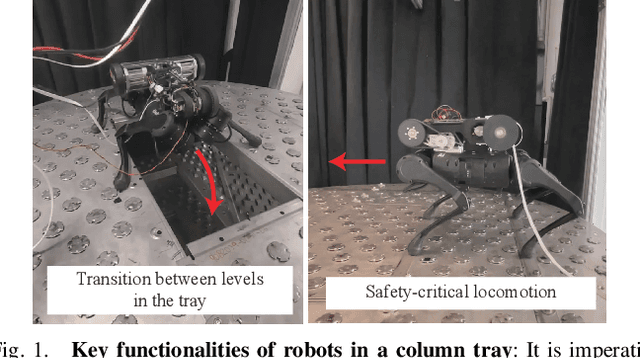

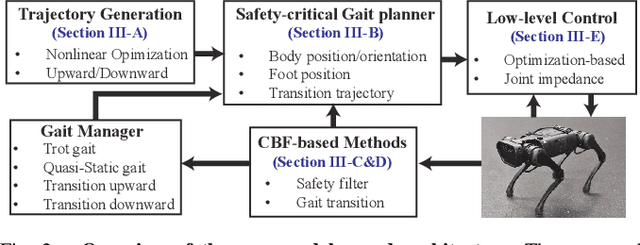

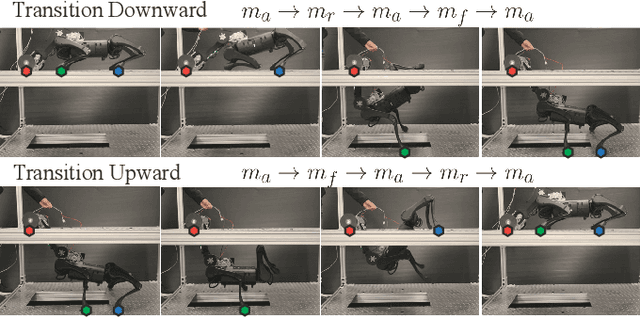

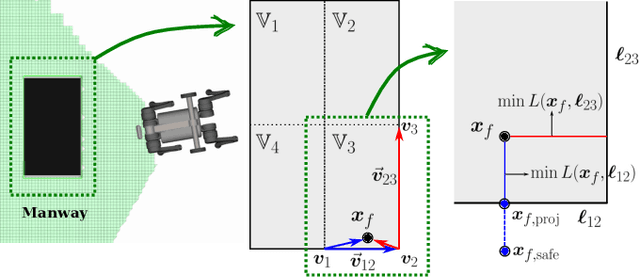

Abstract: This paper presents a safety-critical control framework tailored for quadruped robots equipped with a roller arm, particularly when performing locomotive tasks such as autonomous robotic inspection in complex, multi-tiered environments. In this study, we consider the problem of operating a quadrupedal robot in distillation columns, locomoting on column trays and transitioning between these trays with a roller arm. To address this problem, our framework encompasses the following key elements: 1) Trajectory generation for seamless transitions between columns, 2) Foothold re-planning in regions deemed unsafe, 3) Safety-critical control incorporating control barrier functions, 4) Gait transitions based on safety levels, and 5) A low-level controller. Our comprehensive framework, comprising these components, enables autonomous and safe locomotion across multiple layers. We incorporate reduced-order and full-body models to ensure safety, integrating safety-critical control and footstep re-planning approaches. We validate the effectiveness of our proposed framework through practical experiments involving a quadruped robot equipped with a roller arm, successfully navigating and transitioning between different levels within the column tray structure.

Probabilistic Guarantees for Nonlinear Safety-Critical Optimal Control

Mar 11, 2023

Abstract: Leveraging recent developments in black-box risk-aware verification, we provide three algorithms that generate probabilistic guarantees on (1) optimality of solutions, (2) recursive feasibility, and (3) maximum controller runtimes for general nonlinear safety-critical finite-time optimal controllers. These methods forgo the usual, perhaps restrictive, assumptions required for typical theoretical guarantees, e.g., terminal set calculation for recursive feasibility in Nonlinear Model Predictive Control, or convexification of optimal controllers to ensure optimality. Furthermore, we show that these methods can be applied directly to hardware systems to generate controller guarantees on their respective systems.

Synthesizing Reactive Test Environments for Autonomous Systems: Testing Reach-Avoid Specifications with Multi-Commodity Flows

Oct 19, 2022

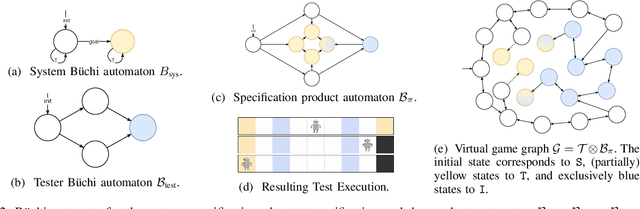

Abstract: We study automated test generation for verifying discrete decision-making modules in autonomous systems. We utilize linear temporal logic to encode the requirements on the system under test in the system specification, and the behavior that we want to observe during the test is given as the test specification, which is unknown to the system. First, we use the specifications and their corresponding non-deterministic Büchi automata to generate the specification product automaton. Second, a virtual product graph representing the high-level interaction between the system and the test environment is constructed, modeling the product automaton encoding the system, the test environment, and specifications. The main result of this paper is an optimization problem, framed as a multi-commodity network flow problem, that solves for constraints on the virtual product graph which can then be projected to the test environment. Therefore, the result of the optimization problem is reactive test synthesis that ensures that the system meets the test specifications along with satisfying the system specifications. This framework is illustrated in simulation on grid world examples, and demonstrated on hardware with the Unitree A1 quadruped, wherein dynamic locomotion behaviors are verified in the context of reactive test environments.

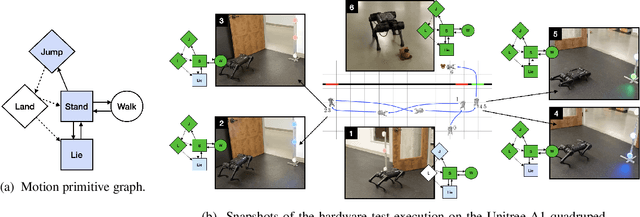

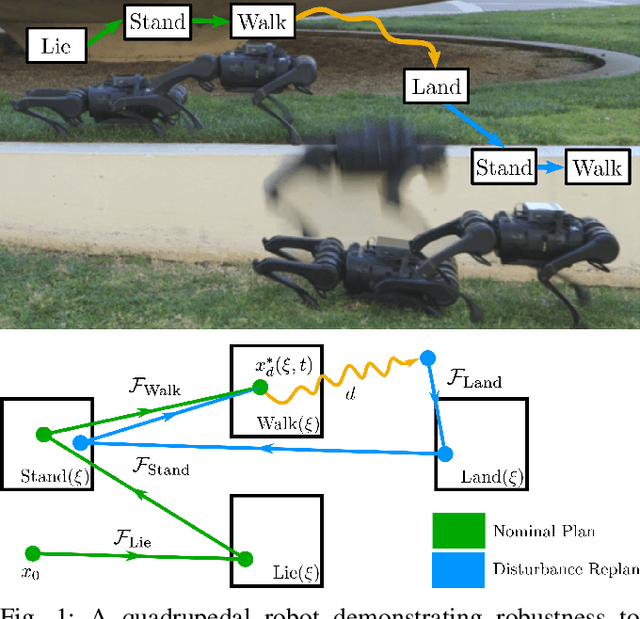

Robust Locomotion on Legged Robots through Planning on Motion Primitive Graphs

Sep 15, 2022

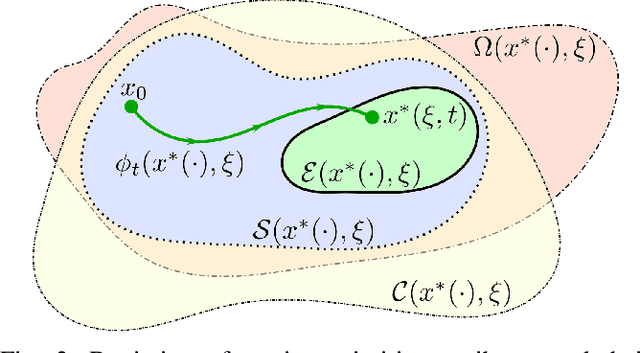

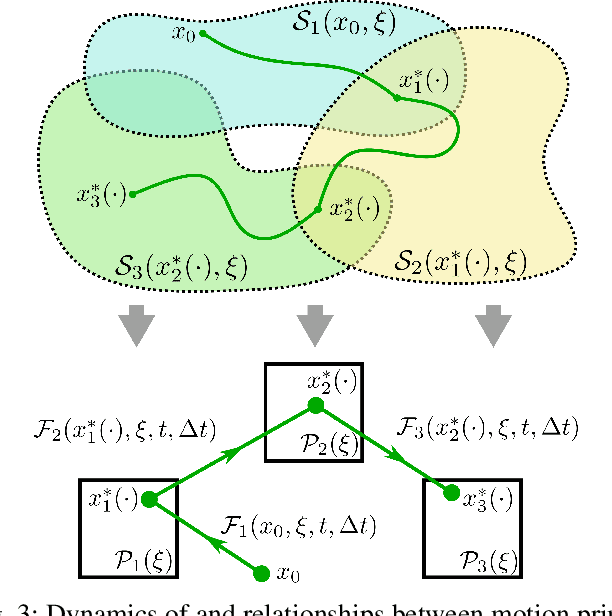

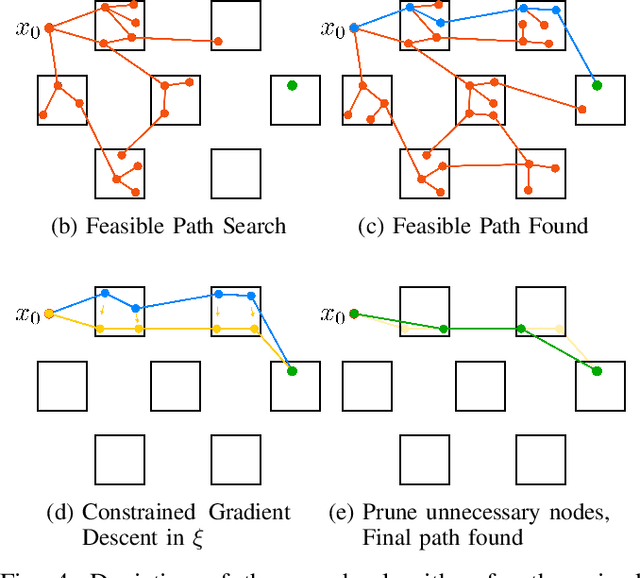

Abstract: The functional demands of robotic systems often require completing various tasks or behaviors under the effect of disturbances or uncertain environments. Of increasing interest is the autonomy for dynamic robots, such as multirotors, motor vehicles, and legged platforms. Here, disturbances and environmental conditions can have significant impact on the successful performance of the individual dynamic behaviors, referred to as "motion primitives". Despite this, robustness can be achieved by switching to and transitioning through suitable motion primitives. This paper contributes such a method by presenting an abstraction of the motion primitive dynamics and a corresponding "motion primitive transfer function". From this, a mixed discrete and continuous "motion primitive graph" is constructed, and an algorithm capable of online search of this graph is detailed. The result is a framework capable of realizing holistic robustness on dynamic systems. This is experimentally demonstrated for a set of motion primitives on a quadrupedal robot, subject to various environmental and intentional disturbances.

Self-Supervised Online Learning for Safety-Critical Control using Stereo Vision

Mar 02, 2022

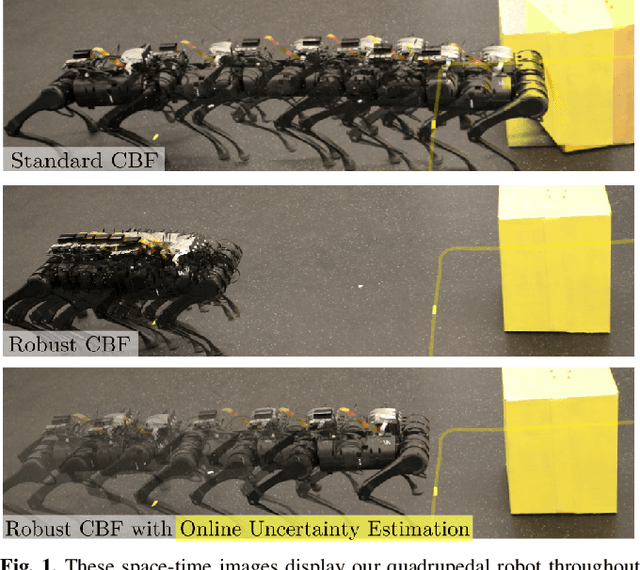

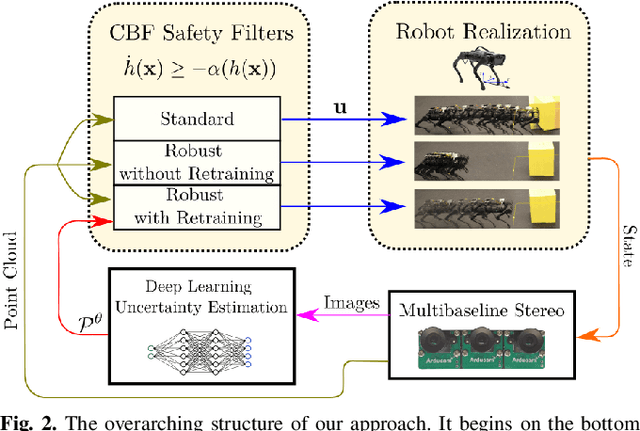

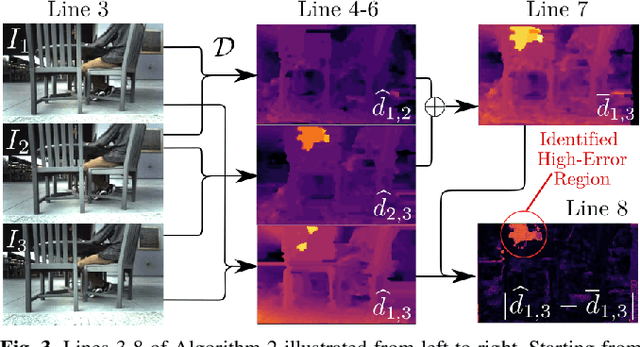

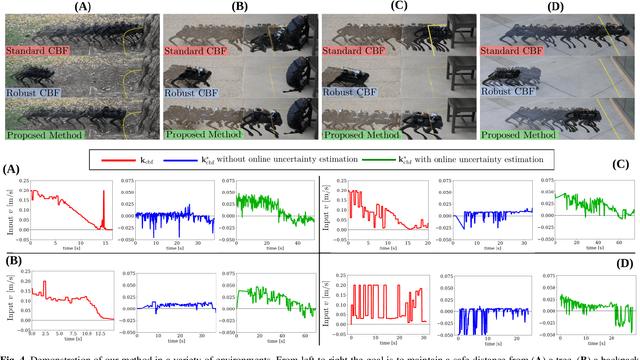

Abstract: With the increasing prevalence of complex vision-based sensing methods for use in obstacle identification and state estimation, characterizing environment-dependent measurement errors has become a difficult and essential part of modern robotics. This paper presents a self-supervised learning approach to safety-critical control. In particular, the uncertainty associated with stereo vision is estimated and adapted online to new visual environments, and this estimate is leveraged in a safety-critical controller in a robust fashion. To this end, we propose an algorithm that exploits the structure of stereo vision to learn an uncertainty estimate without the need for ground-truth data. We then robustify existing Control Barrier Function-based controllers to provide safety in the presence of this uncertainty estimate. We demonstrate the efficacy of our method on a quadrupedal robot in a variety of environments. Without our method, safety is violated. With offline training alone, we observe that the robot is safe but overly conservative. With our online method, the quadruped remains safe and conservatism is reduced.

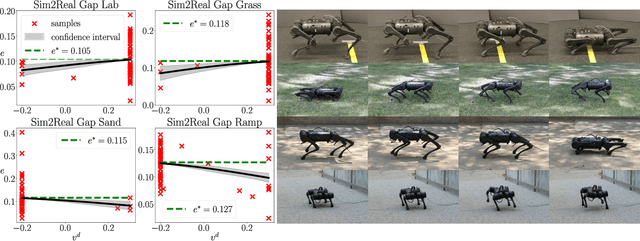

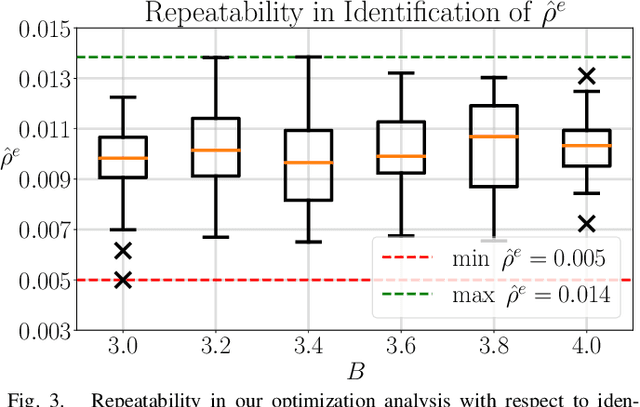

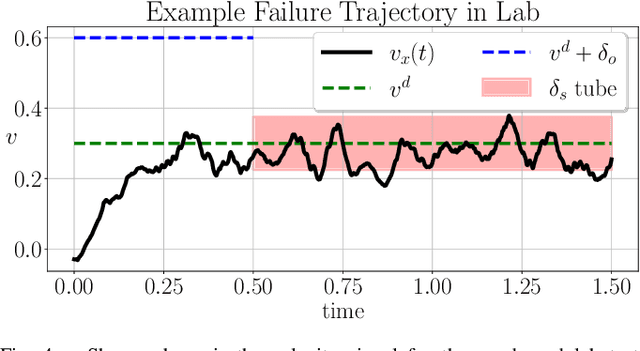

Test and Evaluation of Quadrupedal Walking Gaits through Sim2Real Gap Quantification

Jan 04, 2022

Abstract: In this letter, the authors propose a two-step approach to evaluate and verify a true system's capacity to satisfy its operational objective. Specifically, whenever the system objective has a quantifiable measure of satisfaction (e.g., a signal temporal logic specification, a barrier function, etc.), the authors develop two separate optimization problems solvable via a Bayesian Optimization procedure detailed within. This dual approach has the added benefit of quantifying the Sim2Real Gap between a system simulator and its hardware counterpart. Our contributions are twofold. First, we show repeatability with respect to our outlined optimization procedure in solving these optimization problems. Second, we show that the same procedure can discriminate between different environments by identifying the Sim2Real Gap between a simulator and its hardware counterpart operating in different environments.

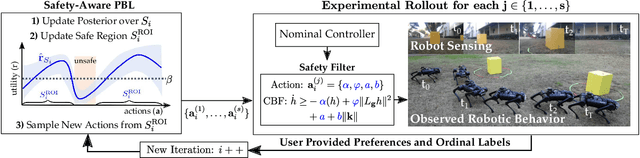

Safety-Aware Preference-Based Learning for Safety-Critical Control

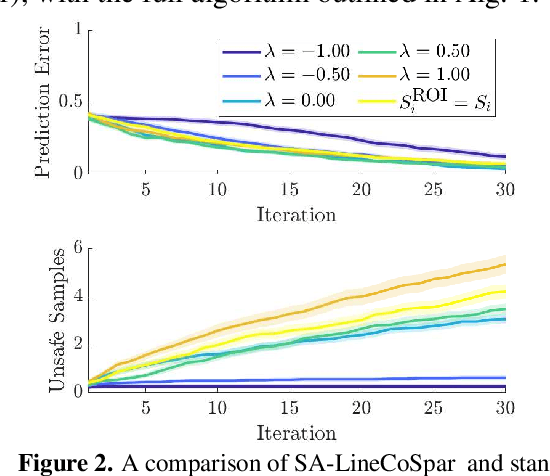

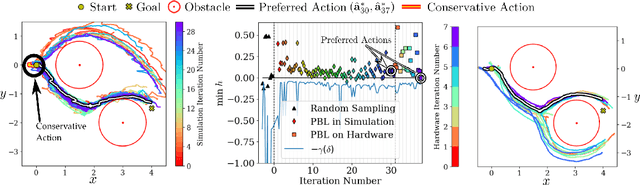

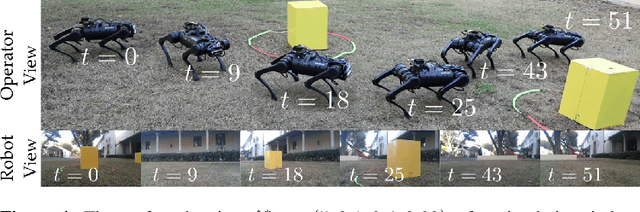

Dec 15, 2021

Abstract: Bringing dynamic robots into the wild requires a tenuous balance between performance and safety. Yet controllers designed to provide robust safety guarantees often result in conservative behavior, and tuning these controllers to find the ideal trade-off between performance and safety typically requires domain expertise or a carefully constructed reward function. This work presents a design paradigm for systematically achieving behaviors that balance performance and robust safety by integrating safety-aware Preference-Based Learning (PBL) with Control Barrier Functions (CBFs). Fusing these concepts -- safety-aware learning and safety-critical control -- gives a robust means to achieve safe behaviors on complex robotic systems in practice. We demonstrate the capability of this design paradigm to achieve safe and performant perception-based autonomous operation of a quadrupedal robot both in simulation and experimentally on hardware.

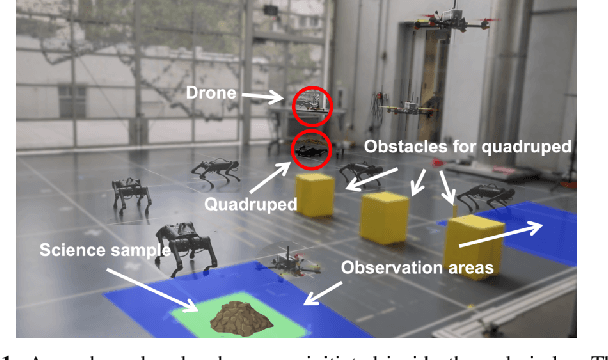

Mixed Observable RRT: Multi-Agent Mission-Planning in Partially Observable Environments

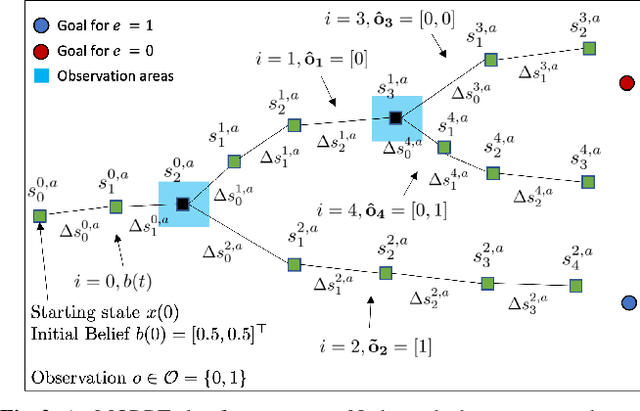

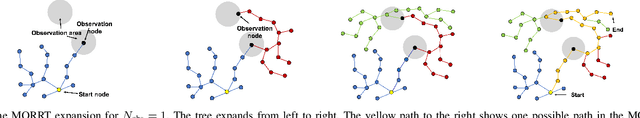

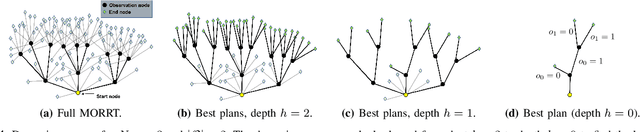

Oct 03, 2021

Abstract: This paper considers centralized mission-planning for a heterogeneous multi-agent system with the aim of locating a hidden target. We propose a mixed observable setting, consisting of a fully observable state-space and a partially observable environment, using a hidden Markov model. First, we construct rapidly exploring random trees (RRTs) to introduce the mixed observable RRT for finding plausible mission plans giving way-points for each agent. Leveraging this construction, we present a path-selection strategy based on a dynamic programming approach, which accounts for the uncertainty from partial observations and minimizes the expected cost. Finally, we combine the high-level plan with model predictive controllers to evaluate the approach on an experimental setup consisting of a quadruped robot and a drone. It is shown that agents are able to make intelligent decisions to explore the area efficiently and to locate the target through collaborative actions.

Model-Free Safety-Critical Control for Robotic Systems

Sep 19, 2021

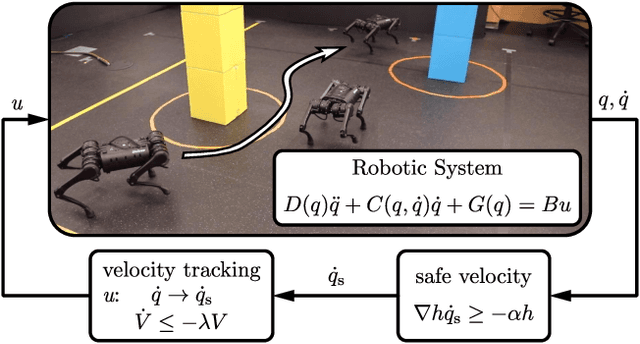

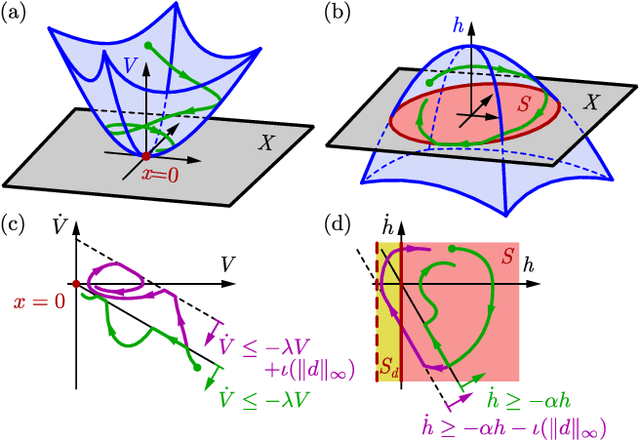

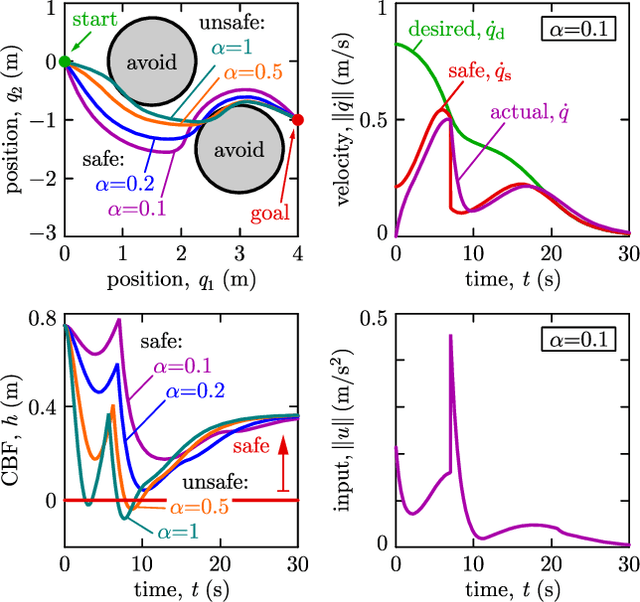

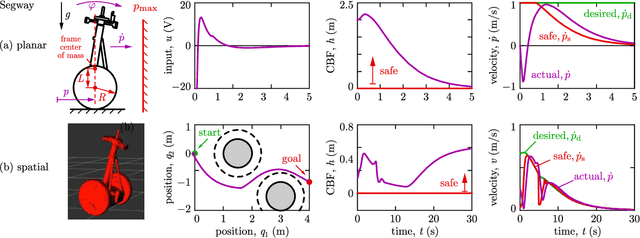

Abstract: This paper presents a framework for the safety-critical control of robotic systems, when safety is defined on safe regions in the configuration space. To maintain safety, we synthesize a safe velocity based on control barrier function theory without relying on a -- potentially complicated -- high-fidelity dynamical model of the robot. Then, we track the safe velocity with a tracking controller. This culminates in model-free safety-critical control. We prove theoretical safety guarantees for the proposed method. Finally, we demonstrate that this approach is application-agnostic. We execute an obstacle avoidance task with a Segway in high-fidelity simulation, as well as with a Drone and a Quadruped in hardware experiments.
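The idea of synthesizing a safe velocity from a control barrier function can be sketched in a few lines. The example below is an illustrative assumption rather than the paper's controller: it treats the robot as a 2D point with a single circular obstacle, uses the barrier h(x) = ||x - c|| - r, and minimally modifies a desired velocity so that ∇h · v ≥ -αh holds. The function name `safe_velocity` and the parameter `alpha` are hypothetical. With one linear constraint, the underlying quadratic program has the closed-form projection used here.

```python
import math


def safe_velocity(position, v_desired, obstacle_center, obstacle_radius, alpha=1.0):
    """Return the velocity closest to v_desired satisfying grad_h . v >= -alpha * h.

    Assumes a 2D point robot, one circular obstacle, and position != obstacle_center.
    """
    dx = position[0] - obstacle_center[0]
    dy = position[1] - obstacle_center[1]
    dist = math.hypot(dx, dy)

    h = dist - obstacle_radius          # barrier value: positive outside the obstacle
    grad = (dx / dist, dy / dist)       # gradient of h, a unit vector

    lhs = grad[0] * v_desired[0] + grad[1] * v_desired[1]
    rhs = -alpha * h
    if lhs >= rhs:
        return v_desired                # desired velocity already satisfies the constraint

    # Project onto the constraint boundary; grad has unit norm, so no division is needed.
    correction = rhs - lhs
    return (v_desired[0] + correction * grad[0],
            v_desired[1] + correction * grad[1])


# Robot at (2, 0), obstacle of radius 1 at the origin, commanded straight at the obstacle.
v_safe = safe_velocity((2.0, 0.0), (-3.0, 0.0), (0.0, 0.0), 1.0)
```

In this example the approach speed is reduced so that it does not exceed `alpha * h`, while a velocity tangential to the obstacle would pass through unchanged. A separate tracking controller would then follow `v_safe`.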
