Richard M. Murray

Flow-Based Synthesis of Reactive Tests for Discrete Decision-Making Systems with Temporal Logic Specifications

Apr 15, 2024

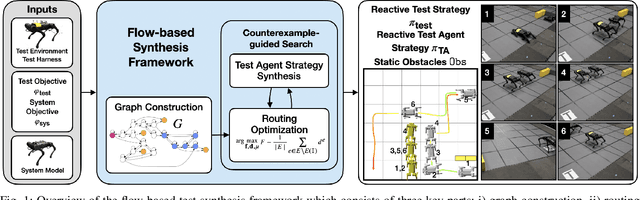

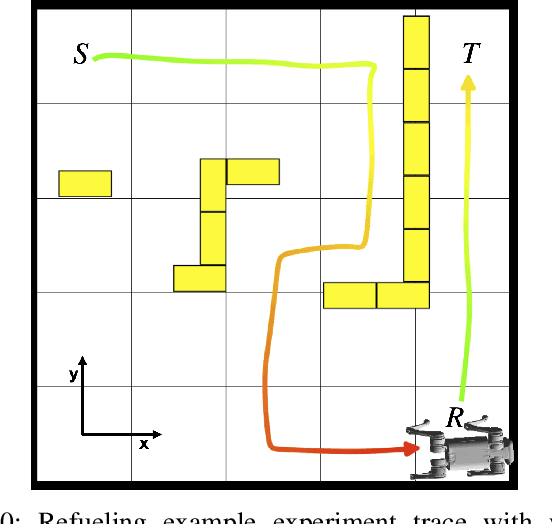

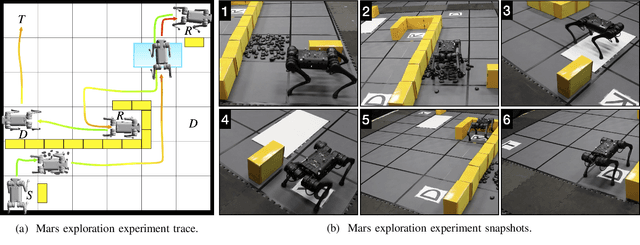

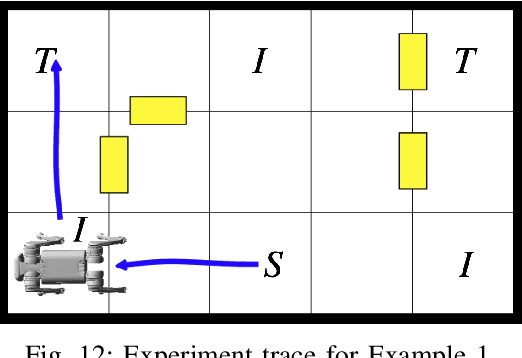

Abstract:Designing tests to evaluate if a given autonomous system satisfies complex specifications is challenging due to the complexity of these systems. This work proposes a flow-based approach for reactive test synthesis from temporal logic specifications, enabling the synthesis of test environments consisting of static and reactive obstacles and dynamic test agents. The temporal logic specifications describe desired test behavior, including system requirements as well as a test objective that is not revealed to the system. The synthesized test strategy places restrictions on system actions in reaction to the system state. The tests are minimally restrictive and accomplish the test objective while ensuring realizability of the system's objective without aiding it (semi-cooperative setting). Automata theory and flow networks are leveraged to formulate a mixed-integer linear program (MILP) to synthesize the test strategy. For a dynamic test agent, the agent strategy is synthesized for a GR(1) specification constructed from the solution of the MILP. If the specification is unrealizable by the dynamics of the test agent, a counterexample-guided approach is used to resolve the MILP until a strategy is found. This flow-based, reactive test synthesis is conducted offline and is agnostic to the system controller. Finally, the resulting test strategy is demonstrated in simulation and experimentally on a pair of quadrupedal robots for a variety of specifications.

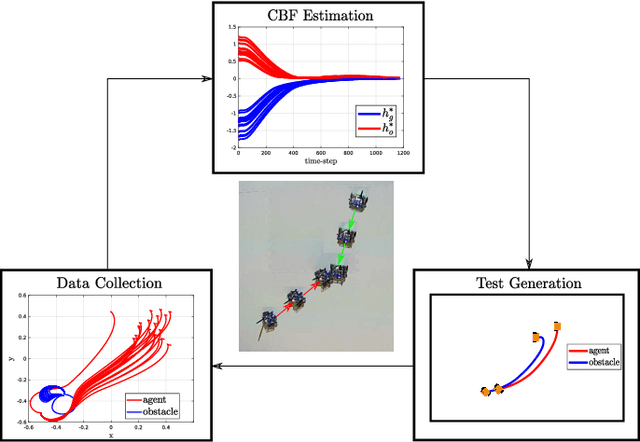

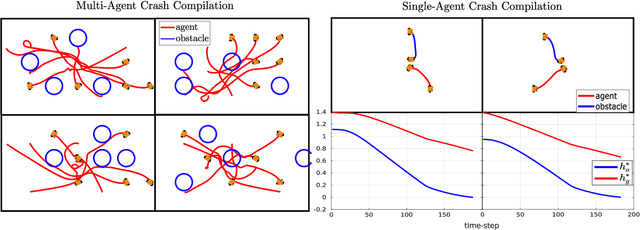

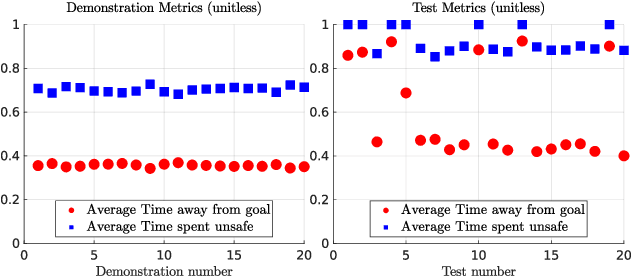

Barrier-Based Test Synthesis for Safety-Critical Systems Subject to Timed Reach-Avoid Specifications

Jan 23, 2023Abstract:We propose an adversarial, time-varying test-synthesis procedure for safety-critical systems without requiring specific knowledge of the underlying controller steering the system. From a broader test and evaluation context, determination of difficult tests of system behavior is important as these tests would elucidate problematic system phenomena before these mistakes can engender problematic outcomes, e.g. loss of human life in autonomous cars, costly failures for airplane systems, etc. Our approach builds on existing, simulation-based work in the test and evaluation literature by offering a controller-agnostic test-synthesis procedure that provides a series of benchmark tests with which to determine controller reliability. To achieve this, our approach codifies the system objective as a timed reach-avoid specification. Then, by coupling control barrier functions with this class of specifications, we construct an instantaneous difficulty metric whose minimizer corresponds to the most difficult test at that system state. We use this instantaneous difficulty metric in a game-theoretic fashion, to produce an adversarial, time-varying test-synthesis procedure that does not require specific knowledge of the system's controller, but can still provably identify realizable and maximally difficult tests of system behavior. Finally, we develop this test-synthesis procedure for both continuous and discrete-time systems and showcase our test-synthesis procedure on simulated and hardware examples.

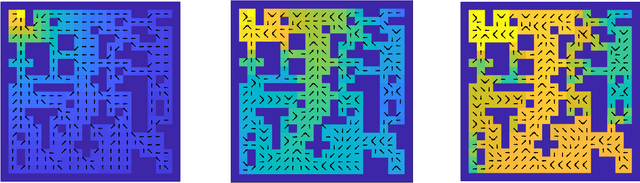

Synthesizing Reactive Test Environments for Autonomous Systems: Testing Reach-Avoid Specifications with Multi-Commodity Flows

Oct 19, 2022

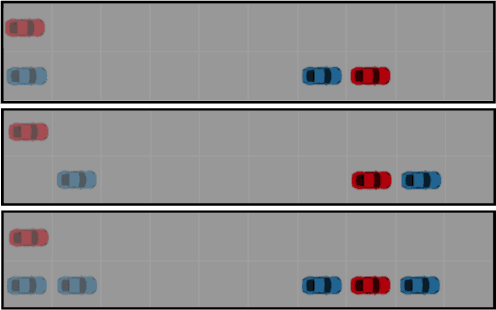

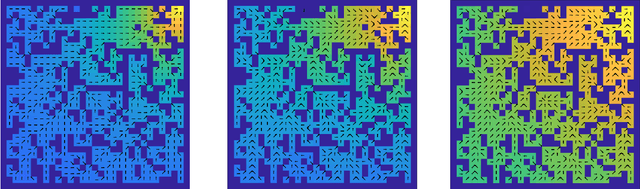

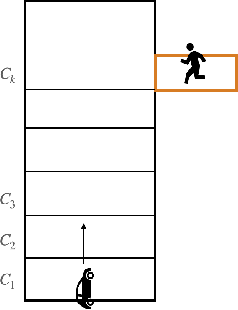

Abstract:We study automated test generation for verifying discrete decision-making modules in autonomous systems. We utilize linear temporal logic to encode the requirements on the system under test in the system specification and the behavior that we want to observe during the test is given as the test specification which is unknown to the system. First, we use the specifications and their corresponding non-deterministic B\"uchi automata to generate the specification product automaton. Second, a virtual product graph representing the high-level interaction between the system and the test environment is constructed modeling the product automaton encoding the system, the test environment, and specifications. The main result of this paper is an optimization problem, framed as a multi-commodity network flow problem, that solves for constraints on the virtual product graph which can then be projected to the test environment. Therefore, the result of the optimization problem is reactive test synthesis that ensures that the system meets the test specifications along with satisfying the system specifications. This framework is illustrated in simulation on grid world examples, and demonstrated on hardware with the Unitree A1 quadruped, wherein dynamic locomotion behaviors are verified in the context of reactive test environments.

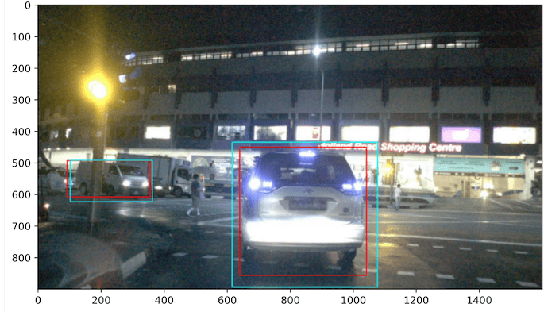

Evaluation Metrics for Object Detection for Autonomous Systems

Oct 19, 2022

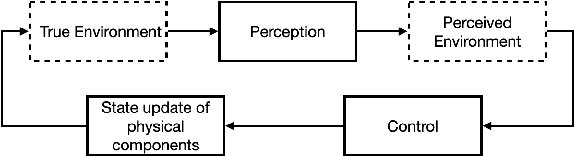

Abstract:This paper studies the evaluation of learning-based object detection models in conjunction with model-checking of formal specifications defined on an abstract model of an autonomous system and its environment. In particular, we define two metrics -- \emph{proposition-labeled} and \emph{class-labeled} confusion matrices -- for evaluating object detection, and we incorporate these metrics to compute the satisfaction probability of system-level safety requirements. While confusion matrices have been effective for comparative evaluation of classification and object detection models, our framework fills two key gaps. First, we relate the performance of object detection to formal requirements defined over downstream high-level planning tasks. In particular, we provide empirical results that show that the choice of a good object detection algorithm, with respect to formal requirements on the overall system, significantly depends on the downstream planning and control design. Secondly, unlike the traditional confusion matrix, our metrics account for variations in performance with respect to the distance between the ego and the object being detected. We demonstrate this framework on a car-pedestrian example by computing the satisfaction probabilities for safety requirements formalized in Linear Temporal Logic (LTL).

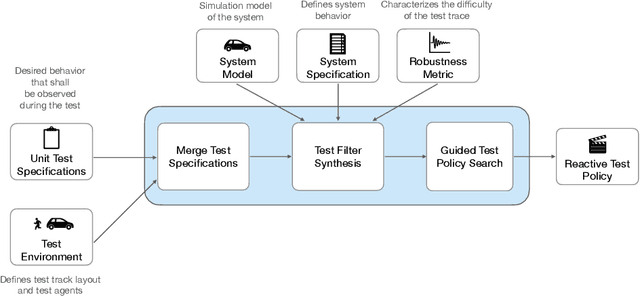

Towards Better Test Coverage: Merging Unit Tests for Autonomous Systems

Apr 06, 2022

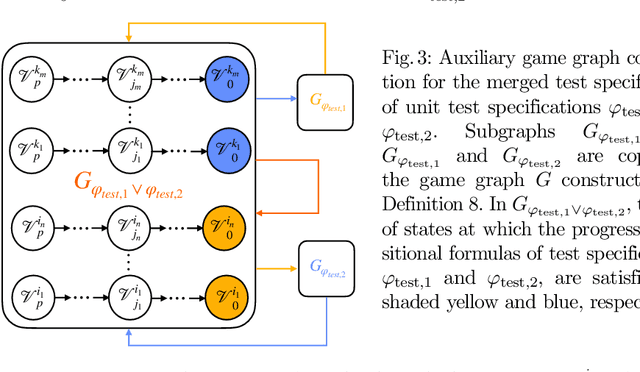

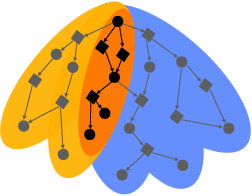

Abstract:We present a framework for merging unit tests for autonomous systems. Typically, it is intractable to test an autonomous system for every scenario in its operating environment. The question of whether it is possible to design a single test for multiple requirements of the system motivates this work. First, we formally define three attributes of a test: a test specification that characterizes behaviors observed in a test execution, a test environment, and a test policy. Using the merge operator from contract-based design theory, we provide a formalism to construct a merged test specification from two unit test specifications. Temporal constraints on the merged test specification guarantee that non-trivial satisfaction of both unit test specifications is necessary for a successful merged test execution. We assume that the test environment remains the same across the unit tests and the merged test. Given a test specification and a test environment, we synthesize a test policy filter using a receding horizon approach, and use the test policy filter to guide a search procedure (e.g. Monte-Carlo Tree Search) to find a test policy that is guaranteed to satisfy the test specification. This search procedure finds a test policy that maximizes a pre-defined robustness metric for the test while the filter guarantees a test policy for satisfying the test specification. We prove that our algorithm is sound. Furthermore, the receding horizon approach to synthesizing the filter ensures that our algorithm is scalable. Finally, we show that merging unit tests is impactful for designing efficient test campaigns to achieve similar levels of coverage in fewer test executions. We illustrate our framework on two self-driving examples in a discrete-state setting.

* Conference Paper

Risk-Averse Decision Making Under Uncertainty

Sep 09, 2021

Abstract:A large class of decision making under uncertainty problems can be described via Markov decision processes (MDPs) or partially observable MDPs (POMDPs), with application to artificial intelligence and operations research, among others. Traditionally, policy synthesis techniques are proposed such that a total expected cost or reward is minimized or maximized. However, optimality in the total expected cost sense is only reasonable if system behavior in the large number of runs is of interest, which has limited the use of such policies in practical mission-critical scenarios, wherein large deviations from the expected behavior may lead to mission failure. In this paper, we consider the problem of designing policies for MDPs and POMDPs with objectives and constraints in terms of dynamic coherent risk measures, which we refer to as the constrained risk-averse problem. For MDPs, we reformulate the problem into a infsup problem via the Lagrangian framework and propose an optimization-based method to synthesize Markovian policies. For MDPs, we demonstrate that the formulated optimization problems are in the form of difference convex programs (DCPs) and can be solved by the disciplined convex-concave programming (DCCP) framework. We show that these results generalize linear programs for constrained MDPs with total discounted expected costs and constraints. For POMDPs, we show that, if the coherent risk measures can be defined as a Markov risk transition mapping, an infinite-dimensional optimization can be used to design Markovian belief-based policies. For stochastic finite-state controllers (FSCs), we show that the latter optimization simplifies to a (finite-dimensional) DCP and can be solved by the DCCP framework. We incorporate these DCPs in a policy iteration algorithm to design risk-averse FSCs for POMDPs.

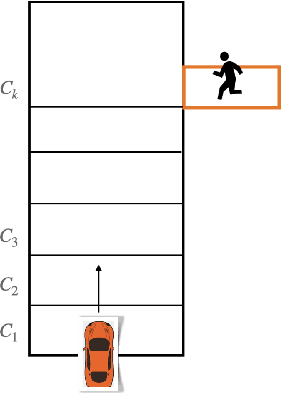

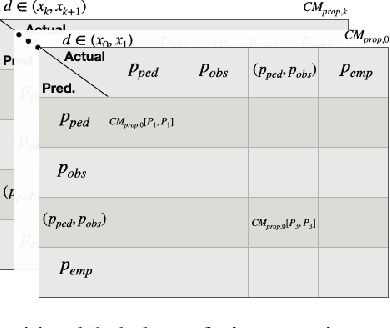

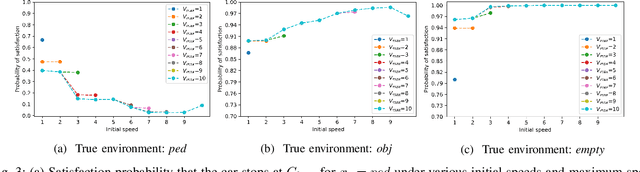

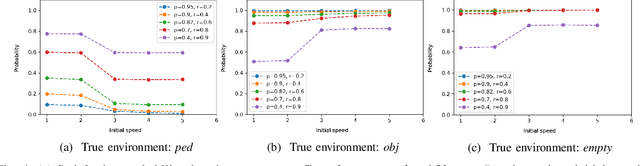

Leveraging Classification Metrics for Quantitative System-Level Analysis with Temporal Logic Specifications

May 16, 2021

Abstract:In many autonomy applications, performance of perception algorithms is important for effective planning and control. In this paper, we introduce a framework for computing the probability of satisfaction of formal system specifications given a confusion matrix, a statistical average performance measure for multi-class classification. We define the probability of satisfaction of a linear temporal logic formula given a specific initial state of the agent and true state of the environment. Then, we present an algorithm to construct a Markov chain that represents the system behavior under the composition of the perception and control components such that the probability of the temporal logic formula computed over the Markov chain is consistent with the probability that the temporal logic formula is satisfied by our system. We illustrate this approach on a simple example of a car with pedestrian on the sidewalk environment, and compute the probability of satisfaction of safety requirements for varying parameters of the vehicle. We also illustrate how satisfaction probability changes with varied precision and recall derived from the confusion matrix. Based on our results, we identify several opportunities for future work in developing quantitative system-level analysis that incorporates perception models.

Limits of Probabilistic Safety Guarantees when Considering Human Uncertainty

Mar 25, 2021

Abstract:When autonomous robots interact with humans, such as during autonomous driving, explicit safety guarantees are crucial in order to avoid potentially life-threatening accidents. Many data-driven methods have explored learning probabilistic bounds over human agents' trajectories (i.e. confidence tubes that contain trajectories with probability $\delta$), which can then be used to guarantee safety with probability $1-\delta$. However, almost all existing works consider $\delta \geq 0.001$. The purpose of this paper is to argue that (1) in safety-critical applications, it is necessary to provide safety guarantees with $\delta < 10^{-8}$, and (2) current learning-based methods are ill-equipped to compute accurate confidence bounds at such low $\delta$. Using human driving data (from the highD dataset), as well as synthetically generated data, we show that current uncertainty models use inaccurate distributional assumptions to describe human behavior and/or require infeasible amounts of data to accurately learn confidence bounds for $\delta \leq 10^{-8}$. These two issues result in unreliable confidence bounds, which can have dangerous implications if deployed on safety-critical systems.

Constrained Risk-Averse Markov Decision Processes

Dec 04, 2020

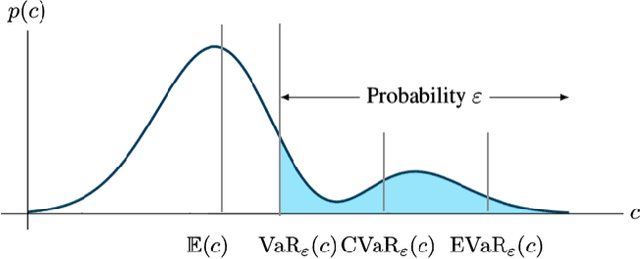

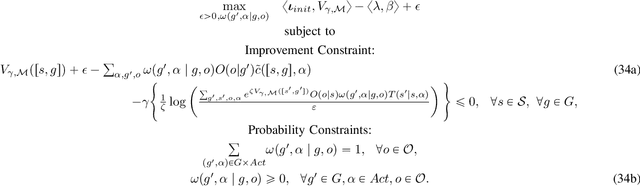

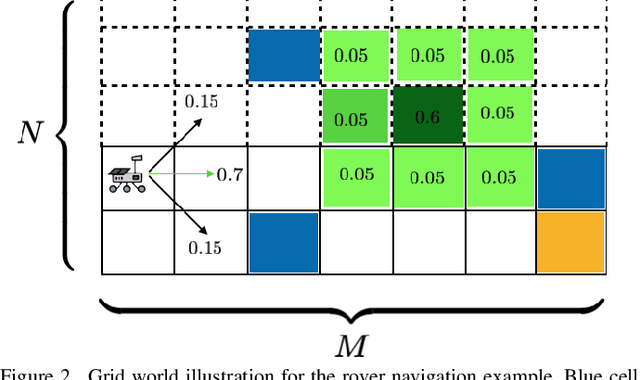

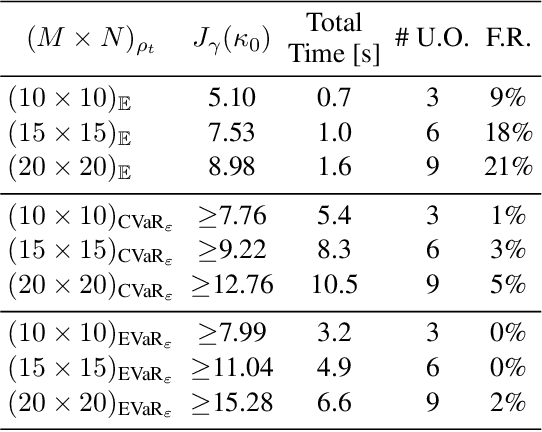

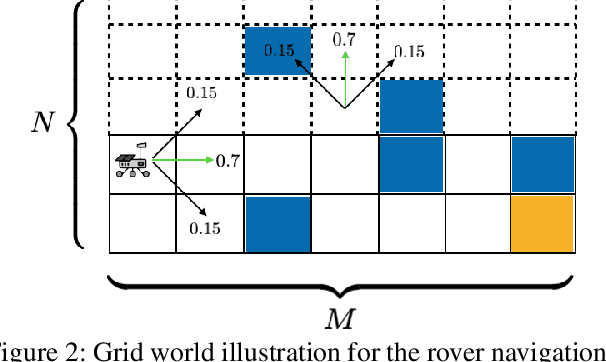

Abstract:We consider the problem of designing policies for Markov decision processes (MDPs) with dynamic coherent risk objectives and constraints. We begin by formulating the problem in a Lagrangian framework. Under the assumption that the risk objectives and constraints can be represented by a Markov risk transition mapping, we propose an optimization-based method to synthesize Markovian policies that lower-bound the constrained risk-averse problem. We demonstrate that the formulated optimization problems are in the form of difference convex programs (DCPs) and can be solved by the disciplined convex-concave programming (DCCP) framework. We show that these results generalize linear programs for constrained MDPs with total discounted expected costs and constraints. Finally, we illustrate the effectiveness of the proposed method with numerical experiments on a rover navigation problem involving conditional-value-at-risk (CVaR) and entropic-value-at-risk (EVaR) coherent risk measures.

Formal Test Synthesis for Safety-Critical Autonomous Systems based on Control Barrier Functions

Apr 08, 2020

Abstract:The prolific rise in autonomous systems has led to questions regarding their safe instantiation in real-world scenarios. Failures in safety-critical contexts such as human-robot interactions or even autonomous driving can ultimately lead to loss of life. In this context, this paper aims to provide a method by which one can algorithmically test and evaluate an autonomous system. Given a black-box autonomous system with some operational specifications, we construct a minimax problem based on control barrier functions to generate a family of test parameters designed to optimally evaluate whether the system can satisfy the specifications. To illustrate our results, we utilize the Robotarium as a case study for an autonomous system that claims to satisfy waypoint navigation and obstacle avoidance simultaneously. We demonstrate that the proposed test synthesis framework systematically finds those sequences of events (tests) that identify points of system failure.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge