Wenxin Li

Pareto-guided Pipeline for Distilling Featherweight AI Agents in Mobile MOBA Games

Feb 07, 2026

Abstract: Recent advances in game AI have demonstrated the feasibility of training agents that surpass top-tier human professionals in complex environments such as Honor of Kings (HoK), a leading mobile multiplayer online battle arena (MOBA) game. However, deploying such powerful agents on mobile devices remains a major challenge. On one hand, the intricate multi-modal state representation and hierarchical action space of HoK demand large, sophisticated policy networks that are inherently difficult to compress into lightweight forms. On the other hand, production deployment requires high-frequency inference under strict energy and latency constraints on mobile platforms. To the best of our knowledge, bridging large-scale game AI and practical on-device deployment has not been systematically studied. In this work, we propose a Pareto optimality guided pipeline and design a high-efficiency student architecture search space tailored for mobile execution, enabling systematic exploration of the trade-off between performance and efficiency. Experimental results demonstrate that the distilled model achieves remarkable efficiency, including a $12.4\times$ faster inference speed (under 0.5ms per frame) and a $15.6\times$ improvement in energy efficiency (under 0.5mAh per game), while retaining a 40.32% win rate against the original teacher model.
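The performance/efficiency trade-off described above can be illustrated with a minimal Pareto-front filter. This is only a sketch under stated assumptions, not the pipeline from the paper: the `Candidate` type, the candidate names, and all latency and win-rate numbers are hypothetical placeholders.

```python
from typing import List, NamedTuple


class Candidate(NamedTuple):
    """Hypothetical student model summary (all values are made up)."""
    name: str
    latency_ms: float  # per-frame inference latency, lower is better
    win_rate: float    # win rate against the teacher, higher is better


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if `a` is no worse than `b` on both axes and strictly better on one."""
    no_worse = a.latency_ms <= b.latency_ms and a.win_rate >= b.win_rate
    strictly_better = a.latency_ms < b.latency_ms or a.win_rate > b.win_rate
    return no_worse and strictly_better


def pareto_front(cands: List[Candidate]) -> List[Candidate]:
    """Keep only candidates that no other candidate dominates."""
    return [c for c in cands if not any(dominates(o, c) for o in cands)]


candidates = [
    Candidate("s0", 0.3, 0.30),
    Candidate("s1", 0.5, 0.40),
    Candidate("s2", 0.6, 0.38),  # slower and weaker than s1, so dominated
    Candidate("s3", 1.2, 0.45),
]
front = pareto_front(candidates)
print([c.name for c in front])
```

Only the non-dominated candidates remain, so a deployment budget (for example a latency cap) can then be applied to the front rather than to the full search space.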

Decoupling Return-to-Go for Efficient Decision Transformer

Jan 22, 2026

Abstract: The Decision Transformer (DT) has established a powerful sequence modeling approach to offline reinforcement learning. It conditions its action predictions on Return-to-Go (RTG), using it both to distinguish trajectory quality during training and to guide action generation at inference. In this work, we identify a critical redundancy in this design: feeding the entire sequence of RTGs into the Transformer is theoretically unnecessary, as only the most recent RTG affects action prediction. We show through experiments that this redundancy can impair DT's performance. To resolve this, we propose the Decoupled DT (DDT). DDT simplifies the architecture by processing only observation and action sequences through the Transformer, using the latest RTG to guide the action prediction. This streamlined approach not only improves performance but also reduces computational cost. Our experiments show that DDT significantly outperforms DT and establishes competitive performance against state-of-the-art DT variants across multiple offline RL tasks.
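To make the RTG quantity concrete, the sketch below computes undiscounted returns-to-go from a reward list and then separates the inputs the way the abstract describes: the observation/action history forms the sequence, and only the latest RTG is kept as a conditioning value. The function names and the toy trajectory are assumptions for illustration; this is data preparation only, not the DDT architecture.

```python
from typing import List, Sequence, Tuple


def returns_to_go(rewards: Sequence[float]) -> List[float]:
    """RTG at step t is the sum of rewards from t to the end of the trajectory."""
    rtg = [0.0] * len(rewards)
    running = 0.0
    for t in range(len(rewards) - 1, -1, -1):
        running += rewards[t]
        rtg[t] = running
    return rtg


def decoupled_inputs(
    observations: Sequence[str],
    actions: Sequence[str],
    rewards: Sequence[float],
) -> Tuple[List[Tuple[str, str]], float]:
    """Return the (observation, action) sequence and only the most recent RTG."""
    rtg = returns_to_go(rewards)
    sequence = list(zip(observations, actions))
    return sequence, rtg[-1]


# Toy trajectory with hypothetical values.
obs = ["o0", "o1", "o2"]
acts = ["a0", "a1", "a2"]
rews = [1.0, 0.0, 2.0]

sequence, latest_rtg = decoupled_inputs(obs, acts, rews)
print(returns_to_go(rews), latest_rtg)
```

In a standard DT input, all three RTG values would be interleaved with the observation and action tokens; here the sequence carries no RTG tokens at all.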

Adapting Rules of Official International Mahjong for Online Players

Jan 13, 2026

Abstract: As a traditional game played worldwide, Official International Mahjong can be played and promoted online through remote devices instead of requiring face-to-face interaction. However, online players have fragmented playtime and no fixed set of opponents, in contrast to offline players, who face fixed opponents over multiple rounds of play. Therefore, the rules designed for offline players need to be modified to ensure the fairness of online single-round play. Specifically, we employ a world champion AI to engage in self-play competitions and conduct statistical data analysis. Our study reveals the first-mover advantage and issues in the subgoal scoring settings. Based on our findings, we propose rule adaptations to make the game more suitable for the online environment, such as introducing compensatory points for the first-mover advantage and refining the scores of subgoals for different tile patterns. Compared with the traditional method of rotating positions over multiple rounds to balance first-mover advantage, our compensatory points mechanism in each round is more convenient for online players. Furthermore, we implement the revised Mahjong game online, which is open for online players. This work is an initial attempt to use data from AI systems to evaluate Official International Mahjong's game balance and develop a revised version of the traditional game better adapted for online players.

Mosaic: Unlocking Long-Context Inference for Diffusion LLMs via Global Memory Planning and Dynamic Peak Taming

Jan 10, 2026

Abstract: Diffusion-based large language models (dLLMs) have emerged as a promising paradigm, utilizing simultaneous denoising to enable global planning and iterative refinement. While these capabilities are particularly advantageous for long-context generation, deploying such models faces a prohibitive memory capacity barrier stemming from severe system inefficiencies. We identify that existing inference systems are ill-suited for this paradigm: unlike autoregressive models constrained by the cumulative KV-cache, dLLMs are bottlenecked by transient activations recomputed at every step. Furthermore, general-purpose memory reuse mechanisms lack the global visibility to adapt to dLLMs' dynamic memory peaks, which toggle between logits and FFNs. To address these mismatches, we propose Mosaic, a memory-efficient inference system that shifts from local, static management to a global, dynamic paradigm. Mosaic integrates a mask-only logits kernel to eliminate redundancy, a lazy chunking optimizer driven by an online heuristic search to adaptively mitigate dynamic peaks, and a global memory manager to resolve fragmentation via virtual addressing. Extensive evaluations demonstrate that Mosaic achieves an average 2.71$\times$ reduction in the memory peak-to-average ratio and increases the maximum inference sequence length supportable on identical hardware by 15.89-32.98$\times$. This scalability is achieved without compromising accuracy or speed; in fact, latency is reduced by 4.12%-23.26%.

HLSMAC: A New StarCraft Multi-Agent Challenge for High-Level Strategic Decision-Making

Sep 16, 2025

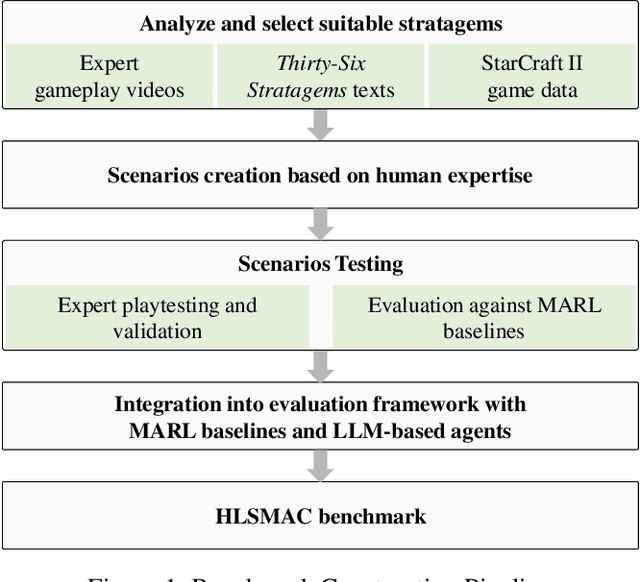

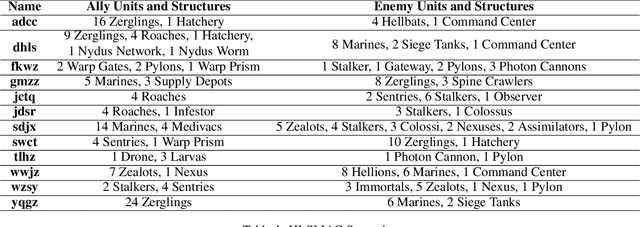

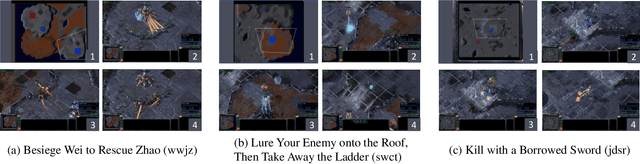

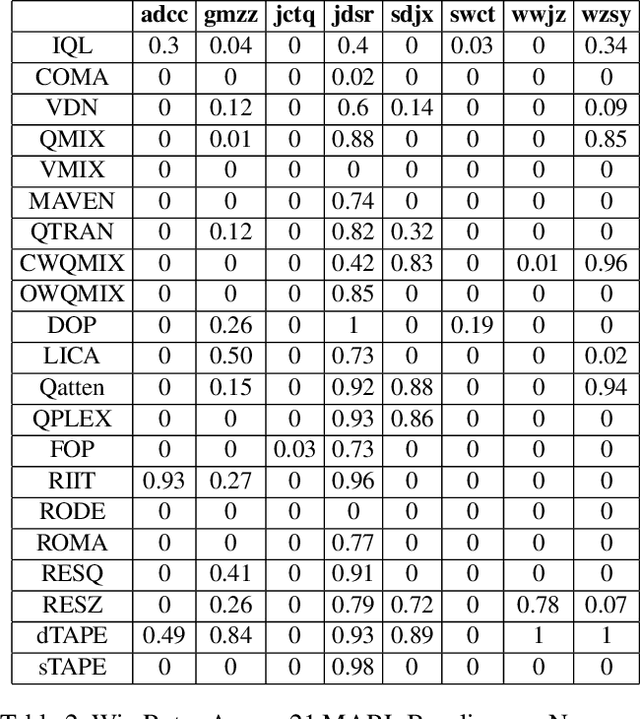

Abstract: Benchmarks are crucial for assessing multi-agent reinforcement learning (MARL) algorithms. While StarCraft II-related environments have driven significant advances in MARL, existing benchmarks like SMAC focus primarily on micromanagement, limiting comprehensive evaluation of high-level strategic intelligence. To address this, we introduce HLSMAC, a new cooperative MARL benchmark with 12 carefully designed StarCraft II scenarios based on classical stratagems from the Thirty-Six Stratagems. Each scenario corresponds to a specific stratagem and is designed to challenge agents with diverse strategic elements, including tactical maneuvering, timing coordination, and deception, thereby opening up avenues for evaluating high-level strategic decision-making capabilities. We also propose novel metrics across multiple dimensions beyond conventional win rate, such as ability utilization and advancement efficiency, to assess agents' overall performance within the HLSMAC environment. We integrate state-of-the-art MARL algorithms and LLM-based agents with our benchmark and conduct comprehensive experiments. The results demonstrate that HLSMAC serves as a robust testbed for advancing multi-agent strategic decision-making.

Mxplainer: Explain and Learn Insights by Imitating Mahjong Agents

Jun 17, 2025

Abstract: People need to internalize the skills of AI agents to improve their own capabilities. Our paper focuses on Mahjong, a multiplayer game involving imperfect information and requiring effective long-term decision-making amidst randomness and hidden information. Through the efforts of AI researchers, several impressive Mahjong AI agents have already achieved performance levels comparable to those of professional human players; however, these agents are often treated as black boxes from which few insights can be gleaned. This paper introduces Mxplainer, a parameterized search algorithm that can be converted into an equivalent neural network to learn the parameters of black-box agents. Experiments conducted on AI and human player data demonstrate that the learned parameters provide human-understandable insights into these agents' characteristics and play styles. In addition to analyzing the learned parameters, we also showcase how our search-based framework can locally explain the decision-making processes of black-box agents for most Mahjong game states.

InterMT: Multi-Turn Interleaved Preference Alignment with Human Feedback

May 29, 2025

Abstract: As multimodal large models (MLLMs) continue to advance across challenging tasks, a key question emerges: What essential capabilities are still missing? A critical aspect of human learning is continuous interaction with the environment -- not limited to language, but also involving multimodal understanding and generation. To move closer to human-level intelligence, models must similarly support multi-turn, multimodal interaction. In particular, they should comprehend interleaved multimodal contexts and respond coherently in ongoing exchanges. In this work, we present an initial exploration through InterMT -- the first preference dataset for multi-turn multimodal interaction, grounded in real human feedback. In this exploration, we particularly emphasize the importance of human oversight, introducing expert annotations to guide the process, motivated by the fact that current MLLMs lack such complex interactive capabilities. InterMT captures human preferences at both global and local levels across nine sub-dimensions, and consists of 15.6k prompts, 52.6k multi-turn dialogue instances, and 32.4k human-labeled preference pairs. To compensate for the lack of capability for multi-modal understanding and generation, we introduce an agentic workflow that leverages tool-augmented MLLMs to construct multi-turn QA instances. To further this goal, we introduce InterMT-Bench to assess the ability of MLLMs in assisting judges with multi-turn, multimodal tasks. We demonstrate the utility of InterMT through applications such as judge moderation and further reveal the multi-turn scaling law of judge models. We hope that open-sourcing our data can help facilitate further research on aligning current MLLMs to the next step. Our project website can be found at https://pku-intermt.github.io .

Business Process Text Sketch Automation Generation Using Large Language Model

Sep 03, 2023

Abstract: Business Process Management (BPM) is gaining increasing attention as it has the potential to cut costs while boosting output and quality. Business process document generation is a crucial stage in BPM. However, due to a shortage of datasets, data-driven deep learning techniques struggle to deliver the expected results. We propose an approach to transform Conditional Process Trees (CPTs) into Business Process Text Sketches (BPTSs) using Large Language Models (LLMs). The traditional prompting approach (Few-shot In-Context Learning) tries to get the correct answer in one go, and it can find the pattern of transforming simple CPTs into BPTSs, but for closed-domain CPTs and CPTs with complex hierarchy, traditional prompts perform poorly, with low correctness. Drawing inspiration from the divide-and-conquer strategy, we suggest breaking down a difficult CPT into a number of basic CPTs and then solving each one in turn. We chose 100 process trees with depths ranging from 2 to 5 at random, as well as CPTs with many nodes, many degrees of selection, and cyclic nesting. Experiments show that our method can achieve a correct rate of 93.42%, which is 45.17% better than traditional prompting methods. Our proposed method provides a solution for business process document generation in the absence of datasets, and it may also make it possible to provide a large number of datasets for the process model extraction (PME) domain.

Evolutionary Game-Theoretical Analysis for General Multiplayer Asymmetric Games

Jun 22, 2022

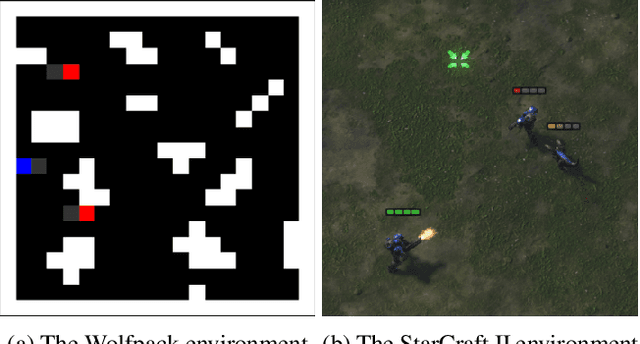

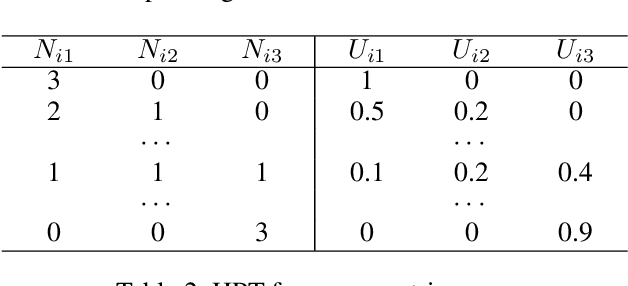

Abstract: Evolutionary game theory has been a successful tool to combine classical game theory with learning-dynamical descriptions in multiagent systems. Given some symmetric structure among the interacting players, many studies have focused on using a simplified heuristic payoff table as input to analyse the dynamics of interactions. Nevertheless, even for the state-of-the-art method, there are two limitations. First, there is inaccuracy when analysing the simplified payoff table. Second, no existing work is able to deal with 2-population multiplayer asymmetric games. In this paper, we fill the gap between the heuristic payoff table and dynamic analysis without any inaccuracy. In addition, we propose a general framework for $m$ versus $n$ 2-population multiplayer asymmetric games. Then, we compare our method with the state-of-the-art in some classic games. Finally, to illustrate our method, we perform empirical game-theoretical analysis on Wolfpack as well as StarCraft II, both of which involve complex multiagent interactions.
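As background for the kind of dynamics analysed here, the following is a minimal sketch of two-population replicator dynamics for a two-player bimatrix game, integrated with a plain Euler step. It is a textbook simplification, not the $m$ versus $n$ framework from the paper; the function name, the step size `dt`, and the matching-pennies payoffs are assumptions chosen for illustration.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def replicator_step(
    x: List[float], y: List[float], A: Matrix, B: Matrix, dt: float = 0.01
) -> Tuple[List[float], List[float]]:
    """One Euler step of two-population replicator dynamics.

    x, y: strategy distributions of the row and column populations.
    A[i][j], B[i][j]: payoffs to the row and column player for profile (i, j).
    """
    n, m = len(x), len(y)
    # Expected payoff of each pure strategy against the other population.
    fx = [sum(A[i][j] * y[j] for j in range(m)) for i in range(n)]
    fy = [sum(B[i][j] * x[i] for i in range(n)) for j in range(m)]
    # Population-average payoffs.
    avg_x = sum(x[i] * fx[i] for i in range(n))
    avg_y = sum(y[j] * fy[j] for j in range(m))
    # Strategies that beat the average grow; those below it shrink.
    new_x = [x[i] + dt * x[i] * (fx[i] - avg_x) for i in range(n)]
    new_y = [y[j] + dt * y[j] * (fy[j] - avg_y) for j in range(m)]
    return new_x, new_y


# Matching pennies: a zero-sum asymmetric game used here as a toy example.
A = [[1.0, -1.0], [-1.0, 1.0]]
B = [[-1.0, 1.0], [1.0, -1.0]]

fixed_x, fixed_y = replicator_step([0.5, 0.5], [0.5, 0.5], A, B)
moved_x, moved_y = replicator_step([0.6, 0.4], [0.5, 0.5], A, B)
print(fixed_x, fixed_y)
print(moved_x, moved_y)
```

The uniform mixed profile is a rest point of the dynamics, while perturbing the row population shifts the column population toward its better reply.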

A Note on Optimizing the Ratio of Monotone Supermodular Functions

Dec 16, 2020

Abstract: We show that for the problem of minimizing (or maximizing) the ratio of two supermodular functions, no bounded approximation ratio can be achieved via a polynomial number of queries, if the two supermodular functions are both monotone non-decreasing or non-increasing.
