Weiling Chen

Omne-R1: Learning to Reason with Memory for Multi-hop Question Answering

Aug 24, 2025

Abstract:This paper introduces Omne-R1, a novel approach designed to enhance multi-hop question answering capabilities on schema-free knowledge graphs by integrating advanced reasoning models. Our method employs a multi-stage training workflow, including two reinforcement learning phases and one supervised fine-tuning phase. We address the challenge of limited suitable knowledge graphs and QA data by constructing domain-independent knowledge graphs and auto-generating QA pairs. Experimental results show significant improvements in answering multi-hop questions, with notable performance gains on more complex 3+ hop questions. Our proposed training framework demonstrates strong generalization abilities across diverse knowledge domains.
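To make the term "multi-hop" concrete, here is a minimal sketch of answering a question that requires chaining several facts in a schema-free graph stored as free-form triples. The triples, the `find_path` helper, and the breadth-first search are illustrative assumptions only; the abstract does not describe Omne-R1's retrieval or reasoning procedure at this level.

```python
from collections import deque

# Hypothetical toy graph: (head, relation, tail) triples with free-form relation names.
TRIPLES = [
    ("Alice", "works_at", "Acme"),
    ("Acme", "headquartered_in", "Berlin"),
    ("Berlin", "capital_of", "Germany"),
]


def build_index(triples):
    """Map each head entity to its outgoing (relation, tail) edges."""
    index = {}
    for head, relation, tail in triples:
        index.setdefault(head, []).append((relation, tail))
    return index


def find_path(triples, start, goal, max_hops=4):
    """Breadth-first search for the shortest chain of triples from start to goal.

    Returns the list of triples traversed, or None if the goal is not
    reachable within max_hops hops.
    """
    index = build_index(triples)
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        entity, path = queue.popleft()
        if entity == goal:
            return path
        if len(path) >= max_hops:
            continue  # do not expand beyond the hop budget
        for relation, tail in index.get(entity, []):
            if tail not in visited:
                visited.add(tail)
                queue.append((tail, path + [(entity, relation, tail)]))
    return None
```

Calling `find_path(TRIPLES, "Alice", "Germany")` walks three edges, which corresponds to a 3-hop question such as "In which country is Alice's employer headquartered?".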

Structural Similarity in Deep Features: Image Quality Assessment Robust to Geometrically Disparate Reference

Dec 27, 2024

Abstract:Image Quality Assessment (IQA) with references plays an important role in optimizing and evaluating computer vision tasks. Traditional methods assume that all pixels of the reference and test images are fully aligned. Such Aligned-Reference IQA (AR-IQA) approaches fail to address many real-world problems with various geometric deformations between the two images. Although significant effort has been made to attack the Geometrically-Disparate-Reference IQA (GDR-IQA) problem, it has been addressed in a task-dependent fashion, for example, by dedicated designs for image super-resolution and retargeting, or by assuming the geometric distortions to be small enough to be countered by translation-robust filters or by explicit image registration. Here we rethink this problem and propose a unified, non-training-based Deep Structural Similarity (DeepSSIM) approach to address the above problems in a single framework, which assesses structural similarity of deep features in a simple but efficient way and uses an attention calibration strategy to alleviate attention deviation. The proposed method, without application-specific design, achieves state-of-the-art performance on AR-IQA datasets and meanwhile shows strong robustness to various GDR-IQA test cases. Interestingly, our tests also show the effectiveness of DeepSSIM as an optimization tool for training image super-resolution, enhancement and restoration, implying an even wider generalizability. Source code will be made public after the review is completed.
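As a rough illustration of what "structural similarity of features" can mean, the sketch below applies the classic SSIM formula to global statistics of flattened feature channels and averages over channels. This is not the DeepSSIM algorithm: the feature extractor, the attention calibration step, and the exact statistics the authors use are not given in the abstract. The function names and the constants `c1` and `c2` (the usual SSIM defaults for a dynamic range of 1) are assumptions.

```python
def ssim_global(x, y, c1=1e-4, c2=9e-4):
    """Classic SSIM computed from global statistics of two equal-length sequences.

    c1 and c2 are stabilizing constants; the defaults follow the common
    (0.01 * L)^2 and (0.03 * L)^2 choice with dynamic range L = 1 (an assumption).
    """
    n = len(x)
    if n == 0 or n != len(y):
        raise ValueError("inputs must be non-empty and of equal length")
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


def feature_ssim(channels_x, channels_y):
    """Average the global SSIM over corresponding flattened feature channels."""
    if not channels_x or len(channels_x) != len(channels_y):
        raise ValueError("both inputs need the same non-zero number of channels")
    scores = [ssim_global(cx, cy) for cx, cy in zip(channels_x, channels_y)]
    return sum(scores) / len(scores)
```

Because only per-channel means, variances, and covariances enter the score, no pixel-wise alignment is assumed inside a channel beyond the ordering of the flattened values; how the actual method handles geometric disparity is not specified here.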

Long Term Memory: The Foundation of AI Self-Evolution

Oct 21, 2024

Abstract:Large language models (LLMs) like GPTs, trained on vast datasets, have demonstrated impressive capabilities in language understanding, reasoning, and planning, achieving human-level performance in various tasks. Most studies focus on enhancing these models by training on ever-larger datasets to build more powerful foundation models. While training stronger models is important, enabling models to evolve during inference is equally crucial, a process we refer to as AI self-evolution. Unlike large-scale training, self-evolution may rely on limited data or interactions. Inspired by the columnar organization of the human cerebral cortex, we hypothesize that AI models could develop cognitive abilities and build internal representations through iterative interactions with their environment. To achieve this, models need long-term memory (LTM) to store and manage processed interaction data. LTM supports self-evolution by representing diverse experiences across environments and agents. In this report, we explore AI self-evolution and its potential to enhance models during inference. We examine LTM's role in lifelong learning, allowing models to evolve based on accumulated interactions. We outline the structure of LTM and the systems needed for effective data retention and representation. We also classify approaches for building personalized models with LTM data and show how these models achieve self-evolution through interaction. Using LTM, our multi-agent framework OMNE achieved first place on the GAIA benchmark, demonstrating LTM's potential for AI self-evolution. Finally, we present a roadmap for future research, emphasizing the importance of LTM for advancing AI technology and its practical applications.

Dual-Perspective Knowledge Enrichment for Semi-Supervised 3D Object Detection

Jan 10, 2024

Abstract:Semi-supervised 3D object detection is a promising yet under-explored direction to reduce data annotation costs, especially for cluttered indoor scenes. A few prior works, such as SESS and 3DIoUMatch, attempt to solve this task by utilizing a teacher model to generate pseudo-labels for unlabeled samples. However, the availability of unlabeled samples in the 3D domain is relatively limited compared to its 2D counterpart due to the greater effort required to collect 3D data. Moreover, the loose consistency regularization in SESS and restricted pseudo-label selection strategy in 3DIoUMatch lead to either low-quality supervision or a limited amount of pseudo labels. To address these issues, we present a novel Dual-Perspective Knowledge Enrichment approach named DPKE for semi-supervised 3D object detection. Our DPKE enriches the knowledge of limited training data, particularly unlabeled data, from two perspectives: data-perspective and feature-perspective. Specifically, from the data-perspective, we propose a class-probabilistic data augmentation method that augments the input data with additional instances based on the varying distribution of class probabilities. Our DPKE achieves feature-perspective knowledge enrichment by designing a geometry-aware feature matching method that regularizes feature-level similarity between object proposals from the student and teacher models. Extensive experiments on the two benchmark datasets demonstrate that our DPKE achieves superior performance over existing state-of-the-art approaches under various label ratio conditions. The source code will be made available to the public.

Geometry-based spherical JND modeling for 360$^\circ$ display

Mar 07, 2023

Abstract:360$^\circ$ videos have received widespread attention due to their realistic and immersive experiences for users. To date, how to accurately model user perception on 360$^\circ$ displays is still a challenging issue. In this paper, we exploit the visual characteristics of 360$^\circ$ projection and display and extend the popular just noticeable difference (JND) model to spherical JND (SJND). First, we propose a quantitative 2D-JND model by jointly considering spatial contrast sensitivity, luminance adaptation and texture masking effect. In particular, our model introduces an entropy-based region classification and utilizes different parameters for different types of regions for better modeling performance. Second, we extend our 2D-JND model to SJND by jointly exploiting latitude projection and field of view during 360$^\circ$ display. With this operation, SJND reflects the characteristics of both the human visual system and the 360$^\circ$ display. Third, our SJND model is more consistent with user perceptions in subjective tests and also shows more tolerance to distortion at lower bit rates during 360$^\circ$ video compression. To further examine the effectiveness of our SJND model, we embed it in Versatile Video Coding (VVC) compression. Compared with state-of-the-art methods, our SJND-VVC framework significantly reduces the bit rate with negligible loss in visual quality.
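The role of latitude in a 360$^\circ$ projection can be illustrated with the equirectangular format, where rows near the poles are stretched horizontally and each pixel covers a sphere area proportional to the cosine of its latitude. The sketch below computes that per-row factor. It assumes an equirectangular layout and a hypothetical helper name `erp_row_weights`; it is not the SJND formula, which the abstract does not state.

```python
import math


def erp_row_weights(height):
    """Relative sphere area covered by a pixel in each row of an equirectangular image.

    Row centers are mapped to latitudes in (-pi/2, pi/2); the weight is
    cos(latitude), so rows at the equator get about 1 and polar rows approach 0.
    """
    if height <= 0:
        raise ValueError("height must be positive")
    return [
        math.cos((row + 0.5 - height / 2) * math.pi / height)
        for row in range(height)
    ]
```

A visibility threshold defined on the 2D image could, for example, be scaled by such a factor so that over-sampled polar rows are treated differently from equatorial rows; whether and how the paper does this is not stated in the abstract.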

A Correction-Based Dynamic Enhancement Framework towards Underwater Detection

Feb 06, 2023

Abstract:To improve underwater object detection performance, image enhancement is often used as a pre-processing step. However, most existing enhancement methods tend to pursue the visual quality of an image instead of providing effective help for detection tasks. In fact, image enhancement algorithms should be optimized with the goal of utility improvement. In this paper, to adapt to underwater detection tasks, we propose a lightweight dynamic enhancement algorithm that uses a contribution dictionary to guide low-level corrections. Dynamic solutions are designed to capture differences in detection preferences. In addition, the algorithm can balance the inconsistency between the contribution of correction operations and their time complexity. Experimental results in real underwater object detection tasks show the superiority of our proposed method in both generalization and real-time performance.

Saliency-Aware Spatio-Temporal Artifact Detection for Compressed Video Quality Assessment

Jan 03, 2023

Abstract:Compressed videos often exhibit visually annoying artifacts, known as Perceivable Encoding Artifacts (PEAs), which dramatically degrade video visual quality. Subjective and objective measures capable of identifying and quantifying various types of PEAs are critical in improving visual quality. In this paper, we investigate the influence of four spatial PEAs (i.e. blurring, blocking, bleeding, and ringing) and two temporal PEAs (i.e. flickering and floating) on video quality. For spatial artifacts, we propose a visual saliency model with a low computational cost and higher consistency with human visual perception. For temporal artifacts, the self-attention-based TimeSFormer is improved to detect them. Based on the six types of PEAs, a quality metric called Saliency-Aware Spatio-Temporal Artifacts Measurement (SSTAM) is proposed. Experimental results demonstrate that the proposed method outperforms state-of-the-art metrics. We believe that SSTAM will be beneficial for optimizing video coding techniques.

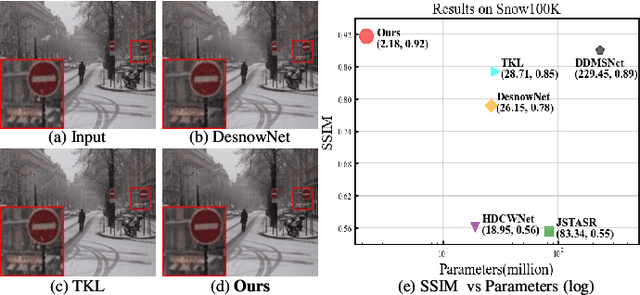

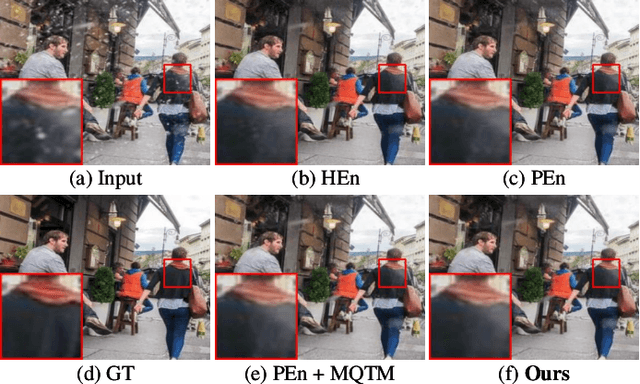

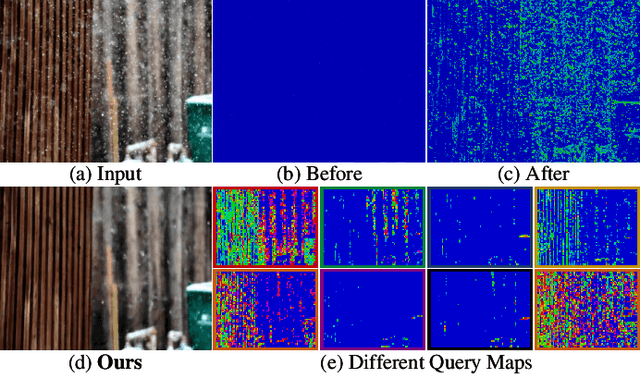

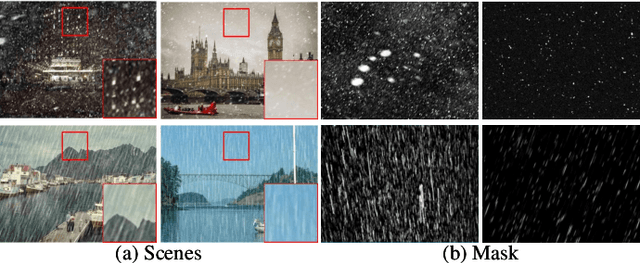

LMQFormer: A Laplace-Prior-Guided Mask Query Transformer for Lightweight Snow Removal

Oct 12, 2022

Abstract:Snow removal aims to locate snow areas and recover clean images without repairing traces. Unlike the regularity and semitransparency of rain, snow with various patterns and degradations seriously occludes the background. As a result, state-of-the-art snow removal methods usually retain a large parameter size. In this paper, we propose a lightweight but highly efficient snow removal network called Laplace Mask Query Transformer (LMQFormer). Firstly, we present a Laplace-VQVAE to generate a coarse mask as prior knowledge of snow. Instead of using the mask in the dataset, we aim at reducing both the information entropy of snow and the computational cost of recovery. Secondly, we design a Mask Query Transformer (MQFormer) to remove snow with the coarse mask, where we use two parallel encoders and a hybrid decoder to learn extensive snow features under lightweight requirements. Thirdly, we develop a Duplicated Mask Query Attention (DMQA) that converts the coarse mask into a specific number of queries, which constrain the attention areas of MQFormer with reduced parameters. Experimental results on popular datasets demonstrate the efficiency of our proposed model, which achieves state-of-the-art snow removal quality with significantly reduced parameters and the lowest running time.

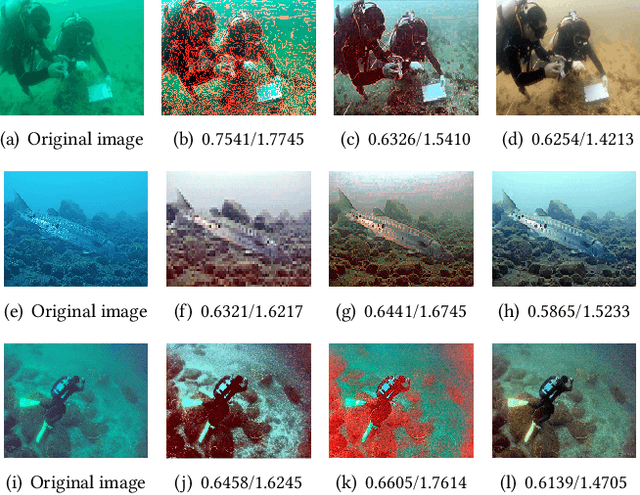

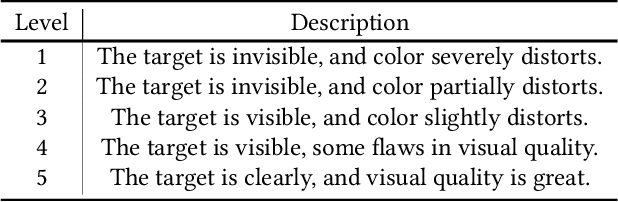

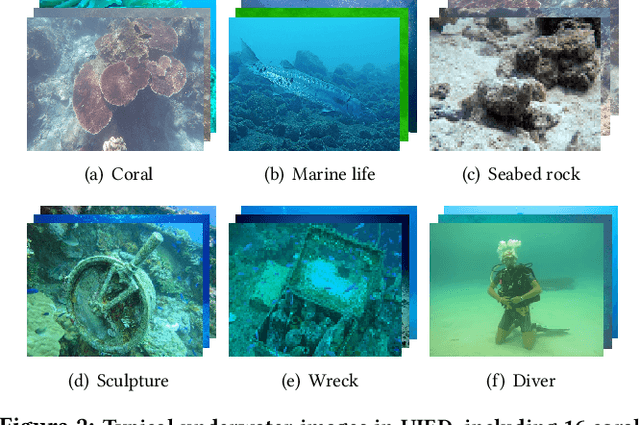

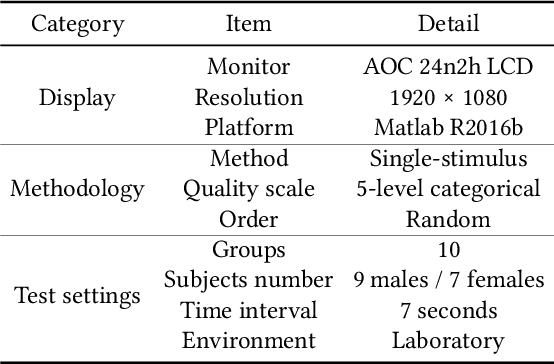

UIF: An Objective Quality Assessment for Underwater Image Enhancement

May 19, 2022

Abstract:Due to the complex and volatile lighting environment, underwater imaging can be readily impaired by light scattering, warping, and noise. To improve the visual quality, Underwater Image Enhancement (UIE) techniques have been widely studied. Recent efforts have also been made to evaluate and compare UIE performance with subjective and objective methods. However, subjective evaluation is time-consuming and uneconomical for all images, while existing objective methods have limited capabilities for the newly developed UIE approaches based on deep learning. To fill this gap, we propose an Underwater Image Fidelity (UIF) metric for objective evaluation of enhanced underwater images. By exploiting the statistical features of these images, we extract naturalness-related, sharpness-related, and structure-related features. Among them, the naturalness-related and sharpness-related features evaluate the visual improvement of enhanced images; the structure-related feature indicates structural similarity between images before and after UIE. Then, we employ support vector regression to fuse the above three features into a final UIF metric. In addition, we have established a large-scale UIE database with subjective scores, namely the Underwater Image Enhancement Database (UIED), which is utilized as a benchmark to compare all objective metrics. Experimental results confirm that the proposed UIF outperforms a variety of underwater and general-purpose image quality metrics.

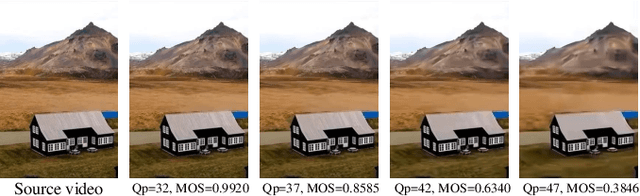

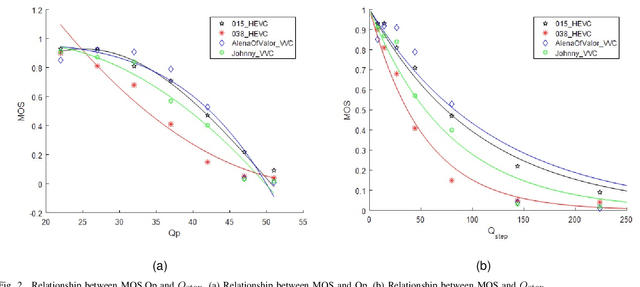

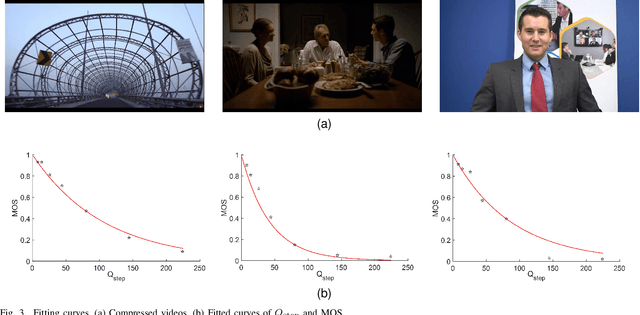

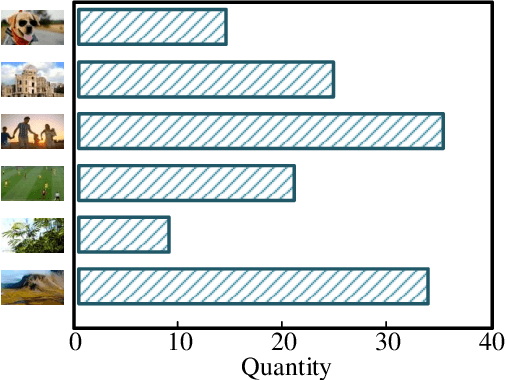

Deep Quality Assessment of Compressed Videos: A Subjective and Objective Study

May 07, 2022

Abstract:In the video coding process, the perceived quality of a compressed video is evaluated by full-reference quality evaluation metrics. However, it is difficult to obtain reference videos with perfect quality. To solve this problem, it is critical to design no-reference compressed video quality assessment algorithms, which assist in measuring the quality of experience on the server side and in resource allocation on the network side. Convolutional Neural Networks (CNNs) have shown their advantage in Video Quality Assessment (VQA) with promising successes in recent years. A large-scale quality database is very important for learning accurate and powerful compressed video quality metrics. In this work, a semi-automatic labeling method is adopted to build a large-scale compressed video quality database, which allows us to label a large number of compressed videos with a manageable human workload. The resulting Compressed Video quality database with Semi-Automatic Ratings (CVSAR) is so far the largest compressed video quality database. We train a no-reference compressed video quality assessment model with a 3D CNN for SpatioTemporal Feature Extraction and Evaluation (STFEE). Experimental results demonstrate that the proposed method outperforms state-of-the-art metrics and achieves promising generalization performance in cross-database tests. The CVSAR database and STFEE model will be made publicly available to facilitate reproducible research.
