Rongfu Lin

UIF: An Objective Quality Assessment for Underwater Image Enhancement

May 19, 2022

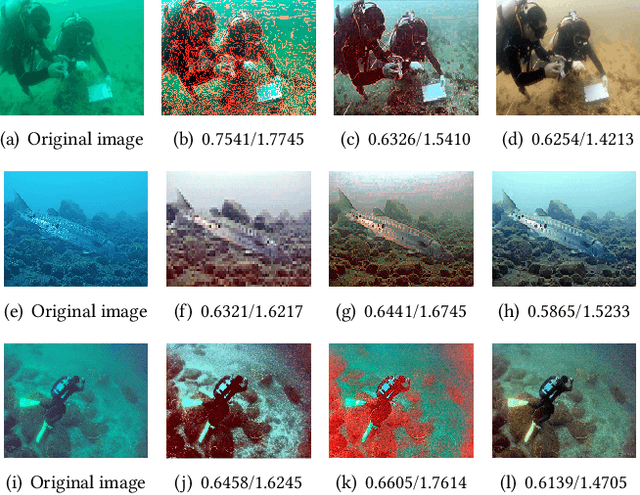

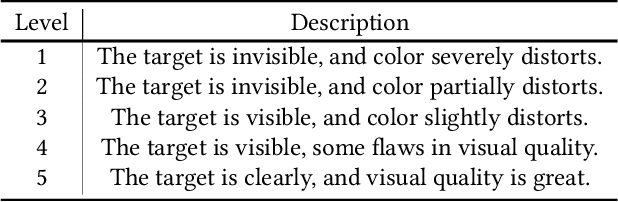

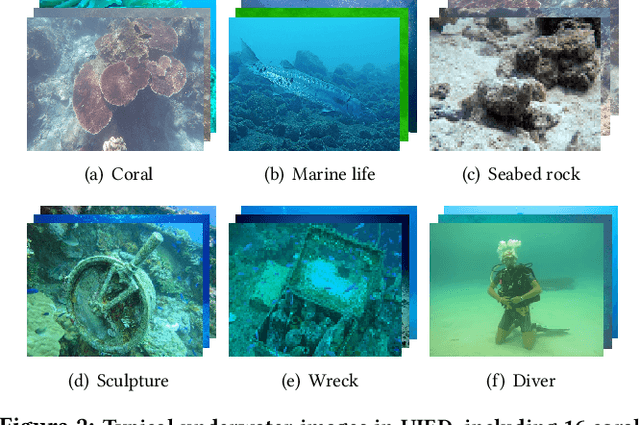

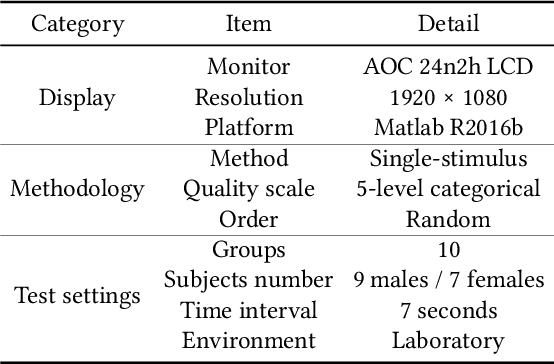

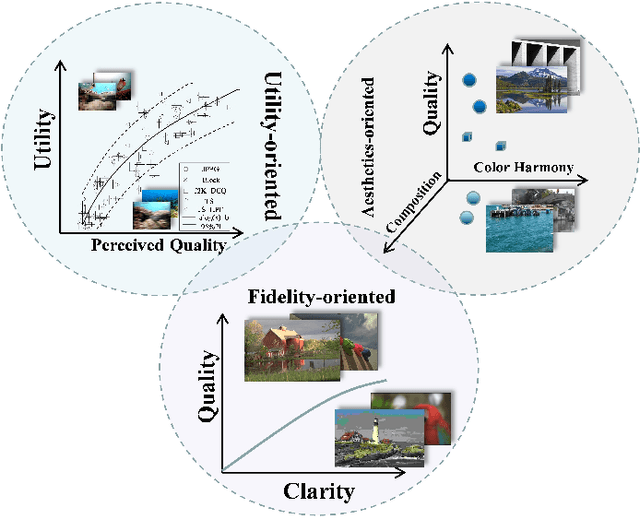

Abstract: Due to the complex and volatile lighting environment, underwater imaging is readily impaired by light scattering, warping, and noise. To improve visual quality, Underwater Image Enhancement (UIE) techniques have been widely studied. Recent efforts have also been devoted to evaluating and comparing UIE performance with subjective and objective methods. However, subjective evaluation is time-consuming and too costly to apply to all images, while existing objective methods have limited capability for newly developed UIE approaches based on deep learning. To fill this gap, we propose an Underwater Image Fidelity (UIF) metric for the objective evaluation of enhanced underwater images. By exploiting the statistical features of these images, we extract naturalness-related, sharpness-related, and structure-related features. Among them, the naturalness-related and sharpness-related features evaluate the visual improvement of enhanced images, while the structure-related feature indicates the structural similarity between images before and after UIE. We then employ support vector regression to fuse these three features into a final UIF metric. In addition, we have established a large-scale UIE database with subjective scores, namely the Underwater Image Enhancement Database (UIED), which serves as a benchmark for comparing all objective metrics. Experimental results confirm that the proposed UIF outperforms a variety of underwater and general-purpose image quality metrics.
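The fusion step described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation. It assumes scikit-learn's `SVR` with an RBF kernel, illustrative hyperparameters (`C`, `epsilon`), and synthetic stand-in data in place of real naturalness, sharpness, and structure features and real subjective scores. The helper name `fit_fusion_model` is hypothetical.

```python
import numpy as np
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR


def fit_fusion_model(features, subjective_scores):
    """Fit an SVR that maps per-image feature vectors to subjective scores.

    features: array of shape (n_images, 3); the three columns stand in for
              naturalness, sharpness, and structure features (assumption).
    subjective_scores: array of shape (n_images,).
    """
    # Kernel and hyperparameters are illustrative, not taken from the paper.
    model = make_pipeline(
        StandardScaler(),
        SVR(kernel="rbf", C=1.0, epsilon=0.1),
    )
    model.fit(features, subjective_scores)
    return model


# Synthetic stand-in data: NOT real image features or opinion scores.
rng = np.random.default_rng(0)
features = rng.uniform(0.0, 1.0, size=(200, 3))
scores = (
    0.4 * features[:, 0]
    + 0.3 * features[:, 1]
    + 0.3 * features[:, 2]
    + rng.normal(0.0, 0.02, size=200)
)

# Train on the first 150 images, predict a fused score for the remaining 50.
model = fit_fusion_model(features[:150], scores[:150])
predicted = model.predict(features[150:])
```

In practice the feature columns would come from the actual feature extractors and the targets from a database with subjective scores such as UIED. Standardizing the features before the SVR is a common choice, assumed here rather than stated in the abstract.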

Utility-Oriented Underwater Image Quality Assessment Based on Transfer Learning

May 07, 2022

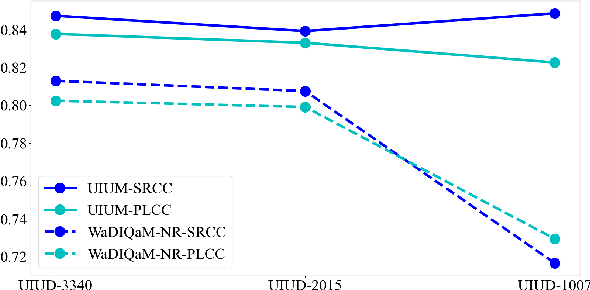

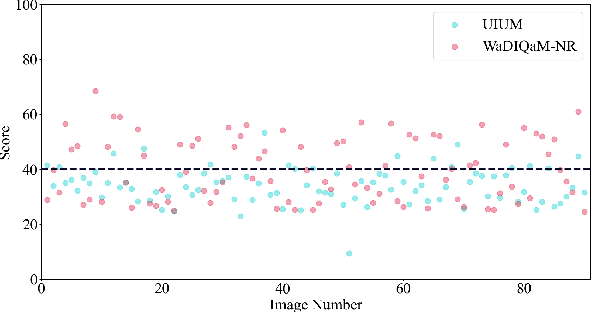

Abstract: The widespread use of image applications has greatly promoted vision-based tasks, in which Image Quality Assessment (IQA) has become an increasingly significant issue. For user enjoyment in multimedia systems, IQA exploits image fidelity and aesthetics to characterize user experience, while for other tasks such as the popular task of object recognition, there is a low correlation between utility and perception. In such cases, fidelity-based and aesthetics-based IQA methods cannot be directly applied. To address this issue, this paper proposes a utility-oriented IQA for object recognition. In particular, we begin our research in the scenario of underwater fish detection, a critical task that has not yet been fully addressed. Based on this task, we build an Underwater Image Utility Database (UIUD) and a learning-based Underwater Image Utility Measure (UIUM). Inspired by the top-down design of fidelity-based IQA, we exploit deep models of object recognition and transfer their features to our UIUM. Experiments validate that the proposed transfer-learning-based UIUM achieves promising performance in the recognition task. We envision that our research provides insights to bridge research in IQA and computer vision.
