Tiesong Zhao

Event-based Visual Deformation Measurement

Feb 16, 2026

Abstract:Visual Deformation Measurement (VDM) aims to recover dense deformation fields by tracking surface motion from camera observations. Traditional image-based methods rely on minimal inter-frame motion to constrain the correspondence search space, which limits their applicability to highly dynamic scenes or necessitates high-speed cameras at the cost of prohibitive storage and computational overhead. We propose an event-frame fusion framework that exploits events for temporally dense motion cues and frames for spatially dense, precise estimation. Revisiting the solid elastic modeling prior, we propose an Affine Invariant Simplicial (AIS) framework. It partitions the deformation field into linearized sub-regions with a low-parametric representation, effectively mitigating motion ambiguities arising from sparse and noisy events. To speed up parameter searching and reduce error accumulation, a neighborhood-greedy optimization strategy is introduced, which enables well-converged sub-regions to guide their poorly converged neighbors and effectively suppresses local error accumulation in long-term dense tracking. To evaluate the proposed method, a benchmark dataset with temporally aligned event streams and frames is established, encompassing over 120 sequences spanning diverse deformation scenarios. Experimental results show that our method outperforms the state-of-the-art baseline by 1.6% in survival rate. Remarkably, it achieves this using only 18.9% of the data storage and processing resources of high-speed video methods.
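As a rough illustration of what a "linearized sub-region with a low-parametric representation" can look like, the sketch below fits the six-parameter affine map that carries one 2D triangle (a simplex) onto its deformed counterpart. This is not the authors' AIS implementation: the function names `affine_from_triangle` and `apply_affine` and the sample coordinates are hypothetical, and the abstract does not state how the sub-regions are actually parameterized or optimized.

```python
# Minimal sketch, assuming each sub-region is a 2D triangle whose motion
# is approximated by one affine map x -> A x + t (six parameters).
# All names here are illustrative, not taken from the paper.

def affine_from_triangle(src, dst):
    """Return (A, t) such that A @ src[i] + t == dst[i] for the 3 vertices."""
    (x0, y0), (x1, y1), (x2, y2) = src
    (u0, v0), (u1, v1), (u2, v2) = dst

    # Edge matrix E of the source triangle (columns are edge vectors).
    e11, e12 = x1 - x0, x2 - x0
    e21, e22 = y1 - y0, y2 - y0
    det = e11 * e22 - e12 * e21
    if abs(det) < 1e-12:
        raise ValueError("source triangle is degenerate")

    # Inverse of E.
    i11, i12 = e22 / det, -e12 / det
    i21, i22 = -e21 / det, e11 / det

    # Edge matrix F of the deformed triangle.
    f11, f12 = u1 - u0, u2 - u0
    f21, f22 = v1 - v0, v2 - v0

    # A = F * E^{-1}, then t = dst[0] - A * src[0].
    a11 = f11 * i11 + f12 * i21
    a12 = f11 * i12 + f12 * i22
    a21 = f21 * i11 + f22 * i21
    a22 = f21 * i12 + f22 * i22
    tx = u0 - (a11 * x0 + a12 * y0)
    ty = v0 - (a21 * x0 + a22 * y0)
    return ((a11, a12), (a21, a22)), (tx, ty)


def apply_affine(A, t, point):
    """Map one 2D point through x -> A x + t."""
    x, y = point
    return (A[0][0] * x + A[0][1] * y + t[0],
            A[1][0] * x + A[1][1] * y + t[1])


# Hypothetical example: a unit right triangle stretched and shifted.
src = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
dst = [(1.0, 1.0), (3.0, 1.0), (1.0, 4.0)]
A, t = affine_from_triangle(src, dst)
inner = apply_affine(A, t, (0.5, 0.5))  # displacement of an interior point
```

Because every interior point of the triangle follows the same six parameters, a handful of reliable correspondences is enough to constrain the whole sub-region, which is one plausible reading of why such a representation helps with sparse, noisy events.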

OmniVaT: Single Domain Generalization for Multimodal Visual-Tactile Learning

Jan 01, 2026

Abstract:Visual-tactile learning (VTL) enables embodied agents to perceive the physical world by integrating visual (VIS) and tactile (TAC) sensors. However, VTL still suffers from modality discrepancies between VIS and TAC images, as well as domain gaps caused by non-standardized tactile sensors and inconsistent data collection procedures. We formulate these challenges as a new task, termed single domain generalization for multimodal VTL (SDG-VTL). In this paper, we propose an OmniVaT framework that, for the first time, successfully addresses this task. On the one hand, OmniVaT integrates a multimodal fractional Fourier adapter (MFFA) to map VIS and TAC embeddings into a unified embedding-frequency space, thereby effectively mitigating the modality gap without multi-domain training data or careful cross-modal fusion strategies. On the other hand, it also incorporates a discrete tree generation (DTG) module that obtains diverse and reliable multimodal fractional representations through a hierarchical tree structure, thereby enhancing its adaptivity to fluctuating domain shifts in unseen domains. Extensive experiments demonstrate the superior cross-domain generalization performance of OmniVaT on the SDG-VTL task.

Structural Similarity in Deep Features: Image Quality Assessment Robust to Geometrically Disparate Reference

Dec 27, 2024

Abstract:Image Quality Assessment (IQA) with references plays an important role in optimizing and evaluating computer vision tasks. Traditional methods assume that all pixels of the reference and test images are fully aligned. Such Aligned-Reference IQA (AR-IQA) approaches fail to address many real-world problems with various geometric deformations between the two images. Although significant effort has been made to attack the Geometrically-Disparate-Reference IQA (GDR-IQA) problem, it has been addressed in a task-dependent fashion, for example, by dedicated designs for image super-resolution and retargeting, or by assuming the geometric distortions are small enough to be countered by translation-robust filters or by explicit image registration. Here we rethink this problem and propose a unified, non-training-based Deep Structural Similarity (DeepSSIM) approach to address the above problems in a single framework, which assesses structural similarity of deep features in a simple but efficient way and uses an attention calibration strategy to alleviate attention deviation. The proposed method, without application-specific design, achieves state-of-the-art performance on AR-IQA datasets while showing strong robustness to various GDR-IQA test cases. Interestingly, our test also shows the effectiveness of DeepSSIM as an optimization tool for training image super-resolution, enhancement and restoration, implying an even wider generalizability. Source code will be made public after the review is completed.

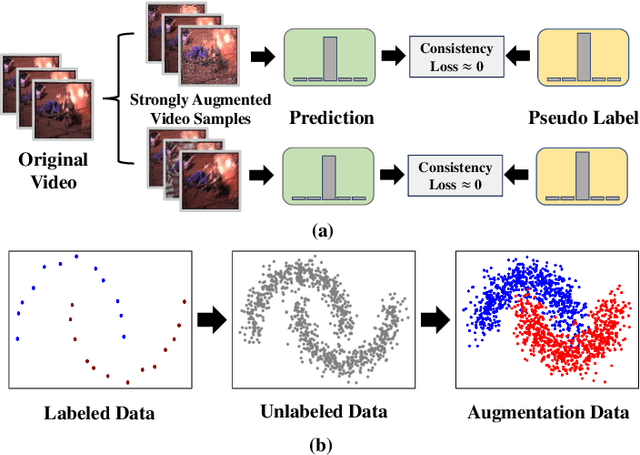

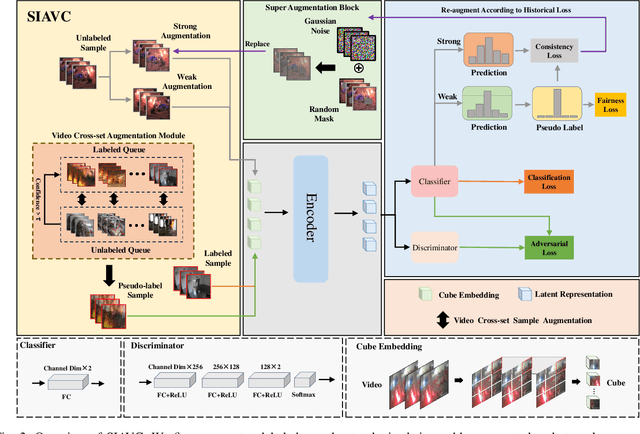

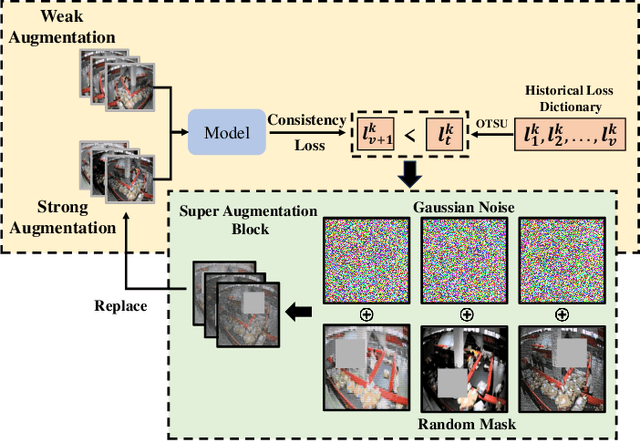

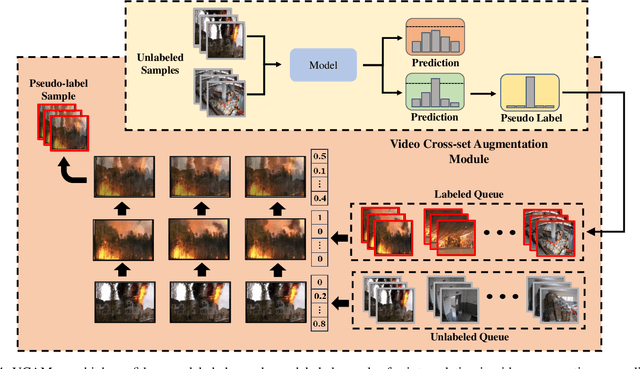

SIAVC: Semi-Supervised Framework for Industrial Accident Video Classification

May 23, 2024

Abstract:Semi-supervised learning suffers from the imbalance of labeled and unlabeled training data in the video surveillance scenario. In this paper, we propose a new semi-supervised learning method called SIAVC for industrial accident video classification. Specifically, we design a video augmentation module called the Super Augmentation Block (SAB). SAB adds Gaussian noise and randomly masks video frames according to historical loss on the unlabeled data for model optimization. Then, we propose a Video Cross-set Augmentation Module (VCAM) to generate diverse pseudo-label samples from the high-confidence unlabeled samples, which alleviates the mismatch of sampling experience and provides high-quality training data. Additionally, we construct a new industrial accident surveillance video dataset with frame-level annotation, namely ECA9, to evaluate our proposed method. Compared with state-of-the-art semi-supervised learning methods, SIAVC demonstrates outstanding video classification performance, achieving 88.76% and 89.13% accuracy on the ECA9 and Fire Detection datasets, respectively. The source code and the constructed dataset ECA9 will be released at https://github.com/AlchemyEmperor/SIAVC.

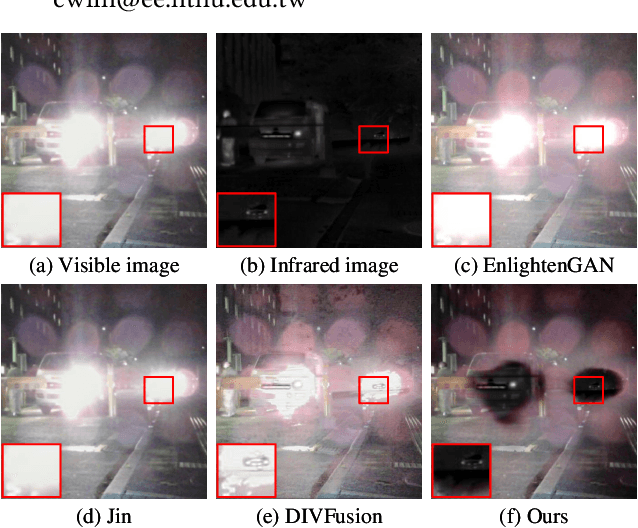

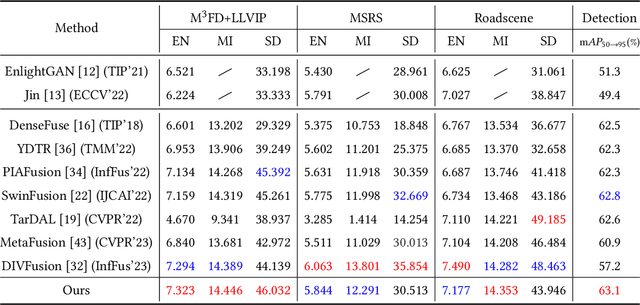

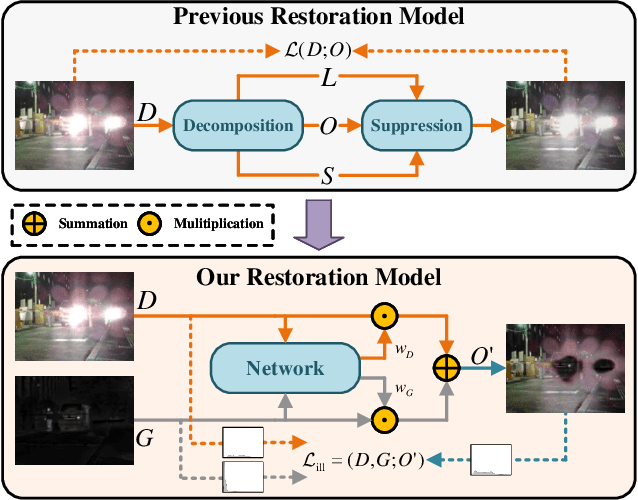

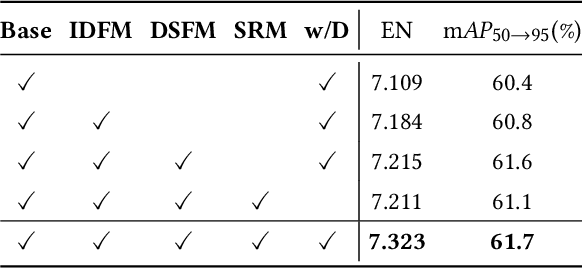

Beyond Night Visibility: Adaptive Multi-Scale Fusion of Infrared and Visible Images

Mar 02, 2024

Abstract:In addition to low light, night images suffer degradation from light effects (e.g., glare and floodlight). However, existing nighttime visibility enhancement methods generally focus on low-light regions, neglecting or even amplifying the light effects. To address this issue, we propose an Adaptive Multi-scale Fusion network (AMFusion) with infrared and visible images, which designs fusion rules according to different illumination regions. First, we separately fuse spatial and semantic features from infrared and visible images, where the former are used for the adjustment of light distribution and the latter are used for the improvement of detection accuracy. Thereby, we obtain an image free of low light and light effects, which improves the performance of nighttime object detection. Second, we utilize detection features extracted by a pre-trained backbone to guide the fusion of semantic features. To this end, we design a Detection-guided Semantic Fusion Module (DSFM) to bridge the domain gap between detection and semantic features. Third, we propose a new illumination loss to constrain the fused image to normal light intensity. Experimental results demonstrate the superiority of AMFusion with better visual quality and detection accuracy. The source code will be released after the peer review process.

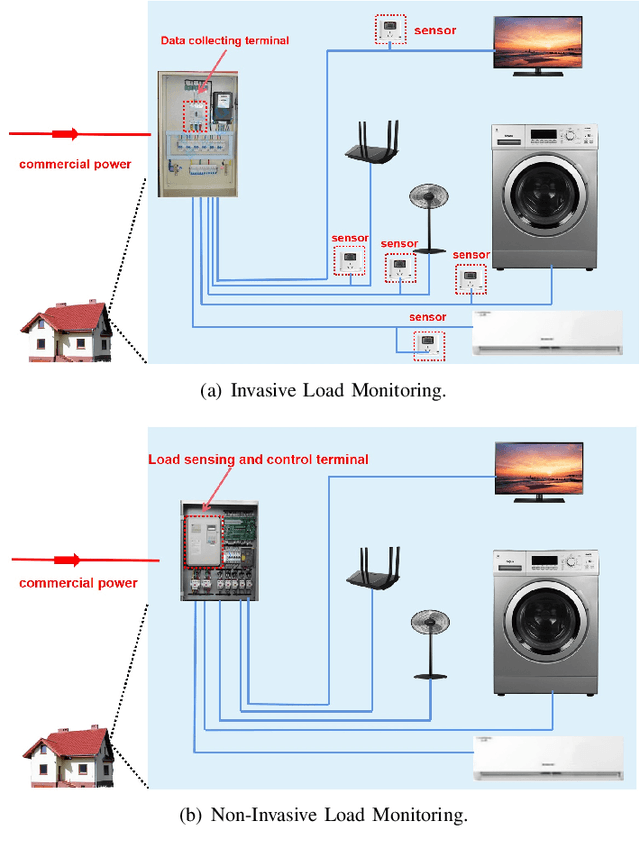

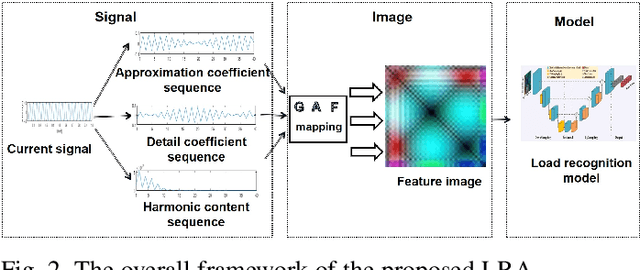

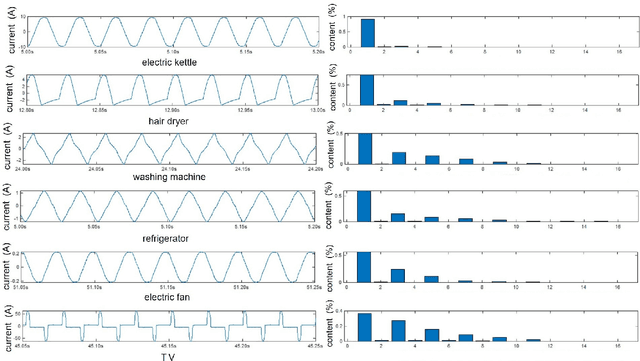

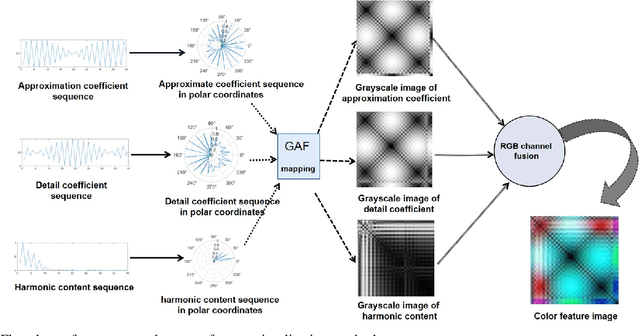

Non-Intrusive Electric Load Monitoring Approach Based on Current Feature Visualization for Smart Energy Management

Aug 08, 2023

Abstract:The state-of-the-art smart city has been calling for economical yet efficient energy management over large-scale networks, especially for the electric power system. It is a critical issue to monitor, analyze and control the electric loads of all users in the system. In this paper, we employ popular AI computer vision techniques to design a non-invasive load monitoring method for smart electric energy management. First of all, we utilize both signal transforms (including wavelet transform and discrete Fourier transform) and Gramian Angular Field (GAF) methods to map one-dimensional current signals onto two-dimensional color feature images. Second, we propose to recognize all electric loads from color feature images using a U-shape deep neural network with multi-scale feature extraction and attention mechanism. Third, we design our method as a cloud-based, non-invasive monitoring of all users, thereby saving energy cost during electric power system control. Experimental results on both public and our private datasets demonstrate that our method achieves performance superior to that of its peers, and thus supports efficient energy management over the large-scale Internet of Things (IoT).
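For readers unfamiliar with the Gramian Angular Field step, the following sketch shows the standard summation variant (GASF): the signal is rescaled to [-1, 1], each sample is encoded as an angle, and the image entry at (i, j) is the cosine of the summed angles. It is a generic illustration, not the authors' pipeline; the function name and the toy signal are hypothetical, and the abstract does not say which GAF variant or color mapping the paper uses.

```python
import math

# Minimal sketch of a Gramian Angular Summation Field (GASF).
# Assumption: a plain min-max rescaling to [-1, 1]; the paper's actual
# preprocessing of current signals is not described in the abstract.

def gramian_angular_summation_field(signal):
    """Map a 1D sequence of length n to an n x n matrix of cos(phi_i + phi_j)."""
    lo, hi = min(signal), max(signal)
    if hi == lo:
        raise ValueError("signal must not be constant")

    # Rescale to [-1, 1] so that arccos is defined for every sample.
    scaled = [2.0 * (v - lo) / (hi - lo) - 1.0 for v in signal]
    # Clamp to guard against tiny floating-point overshoot.
    scaled = [max(-1.0, min(1.0, v)) for v in scaled]

    # Polar encoding: each sample becomes an angle in [0, pi].
    phi = [math.acos(v) for v in scaled]

    # Pairwise angular sums form the 2D feature image.
    return [[math.cos(a + b) for b in phi] for a in phi]


# Hypothetical toy signal standing in for sampled current values.
image = gramian_angular_summation_field([0.0, 1.0, 2.0])
```

The resulting matrix is symmetric, and its main diagonal, cos(2 phi_i), retains the rescaled sample values up to sign, so the temporal ordering of the signal is preserved along the diagonal of the image.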

Geometry-based spherical JND modeling for 360° display

Mar 07, 2023

Abstract:360° videos have received widespread attention due to their realistic and immersive experiences for users. To date, how to accurately model user perception on 360° displays is still a challenging issue. In this paper, we exploit the visual characteristics of 360° projection and display and extend the popular just noticeable difference (JND) model to spherical JND (SJND). First, we propose a quantitative 2D-JND model by jointly considering spatial contrast sensitivity, luminance adaptation and texture masking effect. In particular, our model introduces an entropy-based region classification and utilizes different parameters for different types of regions for better modeling performance. Second, we extend our 2D-JND model to SJND by jointly exploiting latitude projection and field of view during 360° display. With this operation, SJND reflects the characteristics of both the human visual system and the 360° display. Third, our SJND model is more consistent with user perception in subjective tests and also tolerates more distortion at lower bit rates during 360° video compression. To further examine the effectiveness of our SJND model, we embed it in Versatile Video Coding (VVC) compression. Compared with state-of-the-art methods, our SJND-VVC framework significantly reduces the bit rate with negligible loss in visual quality.

Saliency-Aware Spatio-Temporal Artifact Detection for Compressed Video Quality Assessment

Jan 03, 2023

Abstract:Compressed videos often exhibit visually annoying artifacts, known as Perceivable Encoding Artifacts (PEAs), which dramatically degrade video visual quality. Subjective and objective measures capable of identifying and quantifying various types of PEAs are critical in improving visual quality. In this paper, we investigate the influence of four spatial PEAs (i.e., blurring, blocking, bleeding, and ringing) and two temporal PEAs (i.e., flickering and floating) on video quality. For spatial artifacts, we propose a visual saliency model with a low computational cost and higher consistency with human visual perception. For temporal artifacts, we improve the self-attention-based TimeSFormer to detect them. Based on the six types of PEAs, a quality metric called Saliency-Aware Spatio-Temporal Artifacts Measurement (SSTAM) is proposed. Experimental results demonstrate that the proposed method outperforms state-of-the-art metrics. We believe that SSTAM will be beneficial for optimizing video coding techniques.

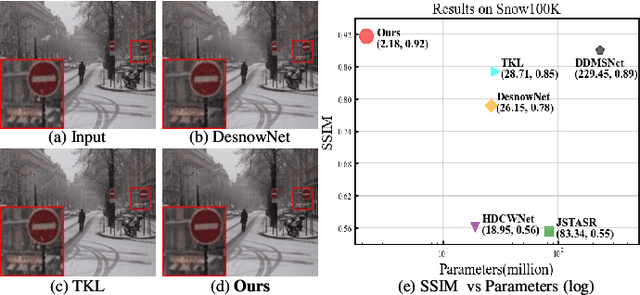

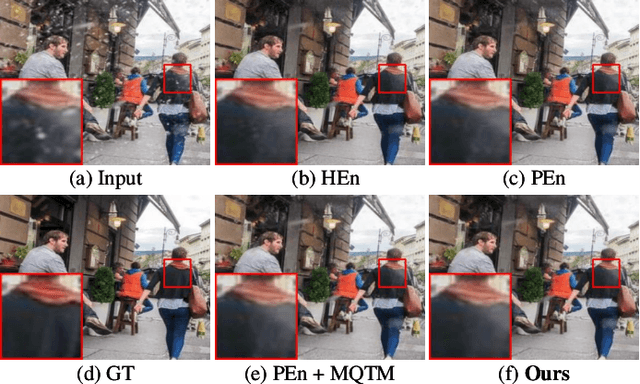

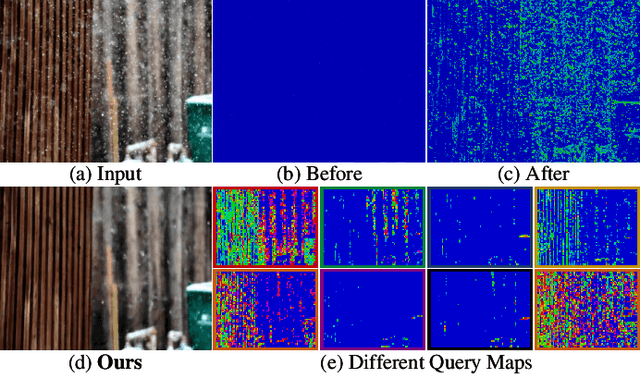

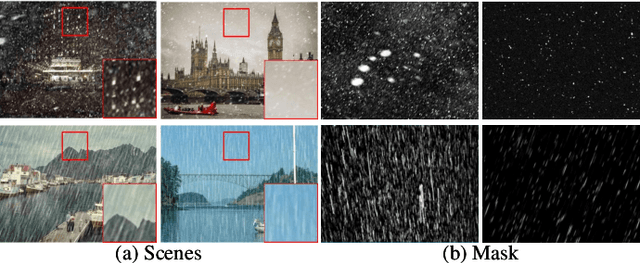

LMQFormer: A Laplace-Prior-Guided Mask Query Transformer for Lightweight Snow Removal

Oct 12, 2022

Abstract:Snow removal aims to locate snow areas and recover clean images without repairing traces. Unlike the regularity and semitransparency of rain, snow with various patterns and degradations seriously occludes the background. As a result, state-of-the-art snow removal methods usually retain a large number of parameters. In this paper, we propose a lightweight but highly efficient snow removal network called Laplace Mask Query Transformer (LMQFormer). Firstly, we present a Laplace-VQVAE to generate a coarse mask as prior knowledge of snow. Instead of using the mask in the dataset, we aim at reducing both the information entropy of snow and the computational cost of recovery. Secondly, we design a Mask Query Transformer (MQFormer) to remove snow with the coarse mask, where we use two parallel encoders and a hybrid decoder to learn extensive snow features under lightweight requirements. Thirdly, we develop a Duplicated Mask Query Attention (DMQA) that converts the coarse mask into a specific number of queries, which constrain the attention areas of MQFormer with reduced parameters. Experimental results on popular datasets demonstrate the efficiency of our proposed model, which achieves state-of-the-art snow removal quality with significantly reduced parameters and the lowest running time.

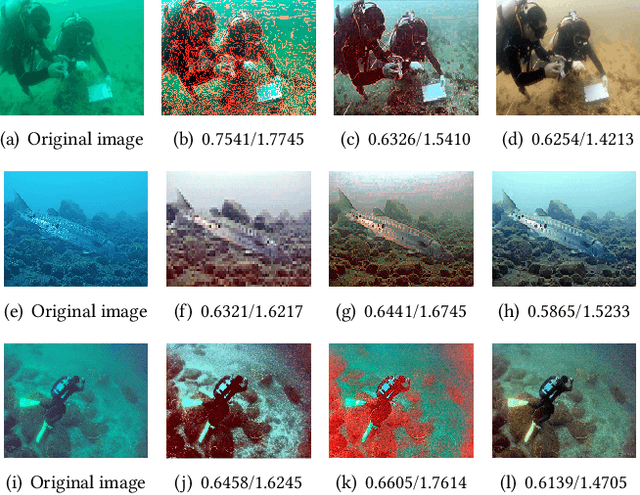

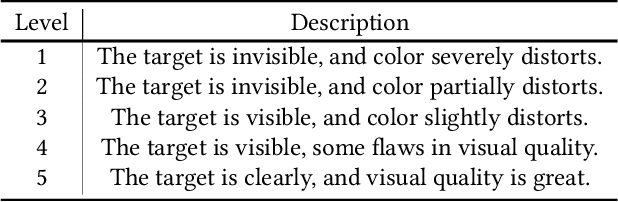

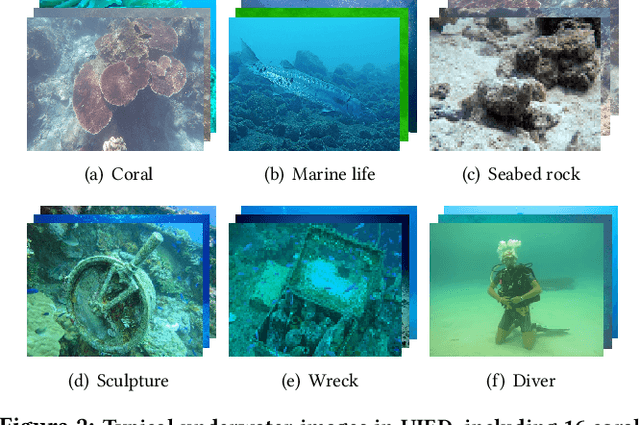

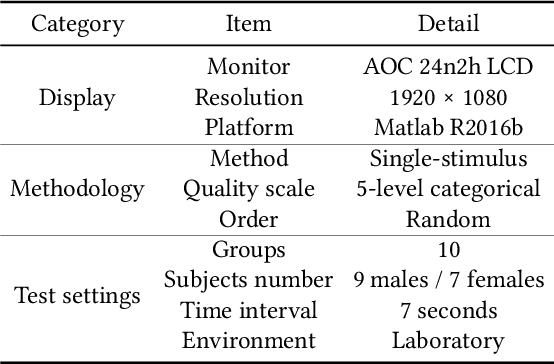

UIF: An Objective Quality Assessment for Underwater Image Enhancement

May 19, 2022

Abstract:Due to the complex and volatile lighting environment, underwater imaging can be readily impaired by light scattering, warping, and noise. To improve the visual quality, Underwater Image Enhancement (UIE) techniques have been widely studied. Recent efforts have also been devoted to evaluating and comparing UIE performance with subjective and objective methods. However, subjective evaluation is time-consuming and uneconomical for all images, while existing objective methods have limited capabilities for the newly developed UIE approaches based on deep learning. To fill this gap, we propose an Underwater Image Fidelity (UIF) metric for objective evaluation of enhanced underwater images. By exploiting the statistical features of these images, we extract naturalness-related, sharpness-related, and structure-related features. Among them, the naturalness-related and sharpness-related features evaluate the visual improvement of enhanced images; the structure-related feature indicates structural similarity between images before and after UIE. Then, we employ support vector regression to fuse the above three features into a final UIF metric. In addition, we have established a large-scale UIE database with subjective scores, namely the Underwater Image Enhancement Database (UIED), which is utilized as a benchmark to compare all objective metrics. Experimental results confirm that the proposed UIF outperforms a variety of underwater and general-purpose image quality metrics.
