Wei Ren

Graph Unlearning: Efficient Node Removal in Graph Neural Networks

Sep 05, 2025

Abstract:With increasing concerns about privacy attacks and potential sensitive information leakage, researchers have actively explored methods to efficiently remove sensitive training data and reduce privacy risks in graph neural network (GNN) models. Node unlearning has emerged as a promising technique for protecting the privacy of sensitive nodes by efficiently removing specific training node information from GNN models. However, existing node unlearning methods either impose restrictions on the GNN structure or do not effectively utilize the graph topology for node unlearning. Some methods even compromise the graph's topology, making it challenging to achieve a satisfactory performance-complexity trade-off. To address these issues and achieve efficient unlearning for training node removal in GNNs, we propose three novel node unlearning methods: Class-based Label Replacement, Topology-guided Neighbor Mean Posterior Probability, and Class-consistent Neighbor Node Filtering. Among these methods, Topology-guided Neighbor Mean Posterior Probability and Class-consistent Neighbor Node Filtering effectively leverage the topological features of the graph, resulting in more effective node unlearning. To validate the superiority of our proposed methods in node unlearning, we conducted experiments on three benchmark datasets. The evaluation criteria included model utility, unlearning utility, and unlearning efficiency. The experimental results demonstrate the utility and efficiency of the proposed methods and illustrate their superiority compared to state-of-the-art node unlearning methods. Overall, the proposed methods efficiently remove sensitive training nodes and protect the privacy information of sensitive nodes in GNNs. The findings contribute to enhancing the privacy and security of GNN models and provide valuable insights into the field of node unlearning.

PLK-Calib: Single-shot and Target-less LiDAR-Camera Extrinsic Calibration using Plücker Lines

Mar 11, 2025

Abstract:Accurate LiDAR-Camera (LC) calibration is challenging but crucial for autonomous systems and robotics. In this paper, we propose two single-shot and target-less algorithms to estimate the calibration parameters between LiDAR and camera using line features. The first algorithm constructs line-to-line constraints by defining points-to-line projection errors and minimizes the projection error. The second algorithm (PLK-Calib) utilizes the co-perpendicular and co-parallel geometric properties of lines in Plücker (PLK) coordinates, and decouples the rotation and translation into two constraints, enabling more accurate estimates. Our degeneracy analysis and Monte Carlo simulation indicate that three nonparallel line pairs are the minimal requirement to estimate the extrinsic parameters. Furthermore, we collect an LC calibration dataset with varying extrinsics under three different scenarios and use it to evaluate the performance of our proposed algorithms.
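
As background for the Plücker representation mentioned in this abstract, here is a minimal sketch in Python. It shows only the textbook construction of a line as a direction/moment pair and how a rigid transform acts on it; it does not reproduce the paper's constraints or solver. The helper names (`plucker_from_points`, `transform_line`) and the example values are assumptions made for illustration.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Line = Tuple[Vec3, Vec3]  # (direction d, moment m)


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def matvec(R: Sequence[Vec3], v: Vec3) -> Vec3:
    return (dot(R[0], v), dot(R[1], v), dot(R[2], v))


def plucker_from_points(p: Vec3, q: Vec3) -> Line:
    """Line through p and q: direction d = q - p, moment m = p x d."""
    d = sub(q, p)
    return d, cross(p, d)


def transform_line(R: Sequence[Vec3], t: Vec3, line: Line) -> Line:
    """Apply x' = R x + t to a line: d' = R d, m' = R m + t x (R d)."""
    d, m = line
    d_new = matvec(R, d)  # depends on the rotation only
    m_new = add(matvec(R, m), cross(t, d_new))  # translation enters here
    return d_new, m_new


# Hypothetical example: a line parallel to the y-axis through (1, 0, 0).
line = plucker_from_points((1.0, 0.0, 0.0), (1.0, 1.0, 0.0))
R_z90 = ((0.0, -1.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0))  # 90 deg about z
t = (0.0, 0.0, 2.0)
moved = transform_line(R_z90, t, line)
```

The transformed direction depends only on the rotation, while the translation appears only in the moment. This is one standard reason line-based formulations can treat rotation and translation separately; whether the paper exploits it in exactly this form is not stated in the abstract.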

NeRF-VIO: Map-Based Visual-Inertial Odometry with Initialization Leveraging Neural Radiance Fields

Mar 11, 2025

Abstract:A prior map serves as a foundational reference for localization in context-aware applications such as augmented reality (AR). Providing valuable contextual information about the environment, the prior map is a vital tool for mitigating drift. In this paper, we propose a map-based visual-inertial localization algorithm (NeRF-VIO) with initialization using neural radiance fields (NeRF). Our algorithm utilizes a multilayer perceptron model and redefines the loss function as the geodesic distance on \(SE(3)\), ensuring the invariance of the initialization model under a frame change within \(\mathfrak{se}(3)\). The evaluation demonstrates that our model outperforms existing NeRF-based initialization solutions in both accuracy and efficiency. By integrating a two-stage update mechanism within a multi-state constraint Kalman filter (MSCKF) framework, the state of NeRF-VIO is constrained by both captured images from an onboard camera and rendered images from a pre-trained NeRF model. The proposed algorithm is validated using a real-world AR dataset; the results indicate that our two-stage update pipeline outperforms MSCKF across all data sequences.

Large Language Models Merging for Enhancing the Link Stealing Attack on Graph Neural Networks

Dec 08, 2024

Abstract:Graph Neural Networks (GNNs), specifically designed to process graph data, have achieved remarkable success in various applications. Link stealing attacks on graph data pose a significant privacy threat, as attackers aim to extract sensitive relationships between nodes (entities), potentially leading to academic misconduct, fraudulent transactions, or other malicious activities. Previous studies have primarily focused on single datasets and did not explore cross-dataset attacks, let alone attacks that leverage the combined knowledge of multiple attackers. However, we find that an attacker can combine the data knowledge of multiple attackers to create a more effective attack model, which we refer to as a cross-dataset attack. Moreover, if knowledge can be extracted with the help of Large Language Models (LLMs), the attack capability will be more significant. In this paper, we propose a novel link stealing attack method that takes advantage of cross-dataset attacks and LLMs. The LLM is applied to process datasets with different data structures in cross-dataset attacks. Each attacker fine-tunes the LLM on their specific dataset to generate a tailored attack model. We then introduce a novel model merging method to integrate the parameters of these attacker-specific models effectively. The result is a merged attack model with superior generalization capabilities, enabling effective attacks not only on the attackers' datasets but also on previously unseen (out-of-domain) datasets. We conducted extensive experiments on four datasets to demonstrate the effectiveness of our method. Additional experiments with three different GNN and LLM architectures further illustrate the generality of our approach.

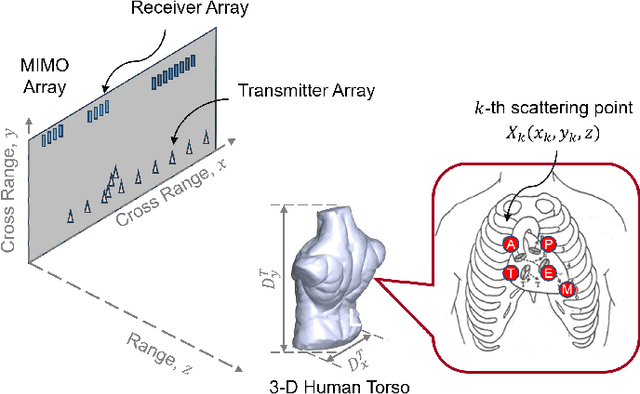

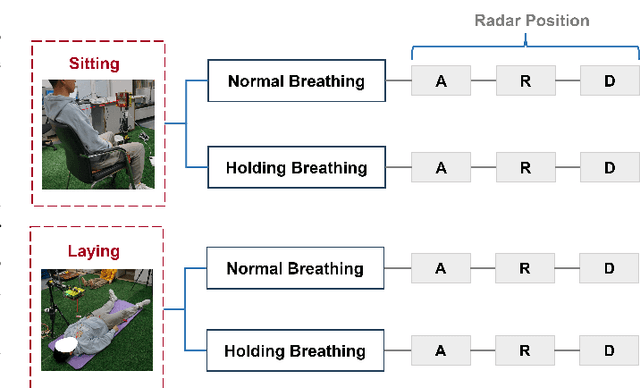

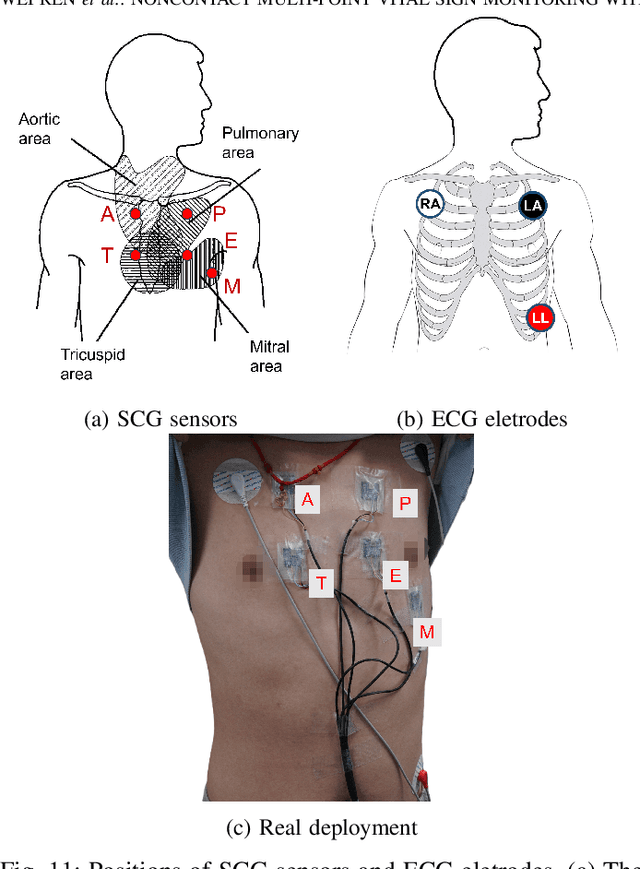

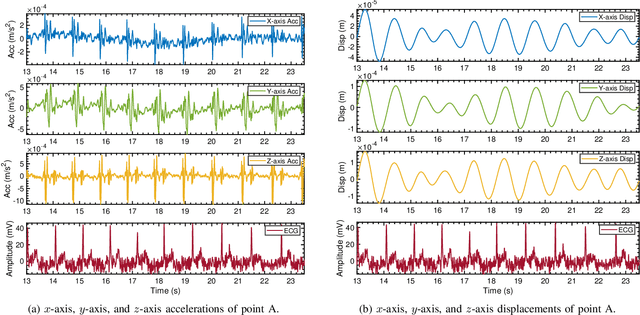

Noncontact Multi-Point Vital Sign Monitoring with mmWave MIMO Radar

Nov 14, 2024

Abstract:Multi-point vital sign monitoring is essential for providing detailed insights into physiological changes. Traditional single-sensor approaches are inadequate for capturing multi-point vibrations. Existing contact-based solutions, while addressing this need, can cause discomfort and skin allergies, whereas noncontact optical and acoustic methods are highly susceptible to light interference and environmental noise. In this paper, we aim to develop a noncontact, multi-point vital sign monitoring technique using MIMO radar, focused on physically differentiating and precisely measuring chest-wall surface vibrations at multiple points induced by cardiopulmonary mechanical activity. The primary challenges in developing such a technique involve developing algorithms to extract and separate entangled signals, as well as establishing a reliable method for validating detection accuracy. To address these challenges, we introduce MultiVital, a wireless system that leverages mmWave multiple-input multiple-output (MIMO) radar for synchronous multi-point vital sign monitoring. It integrates two reference modalities: five-channel seismocardiography (SCG) sensors and a one-channel electrocardiogram (ECG) electrode, enabling comprehensive radar-based research and performance validation across multiple physiological metrics. Additionally, we have developed a multi-modal signal processing framework, consisting of a radar signal processing module, an SCG calibration module, and a spatial alignment scheme. To evaluate the radar signal processing module, we conducted mathematical derivation and simulation. The experimental results indicate that the noncontact MultiVital system achieves multi-point synchronous monitoring with high precision, highly consistent with the results from reference modalities.

Ideal-LLM: Integrating Dual Encoders and Language-Adapted LLM for Multilingual Speech-to-Text

Sep 17, 2024

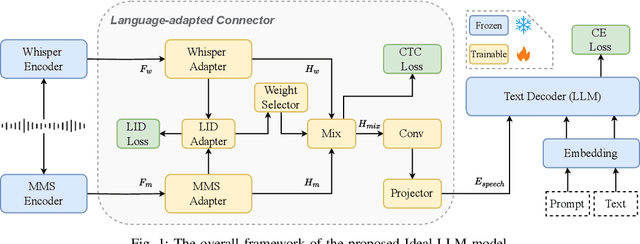

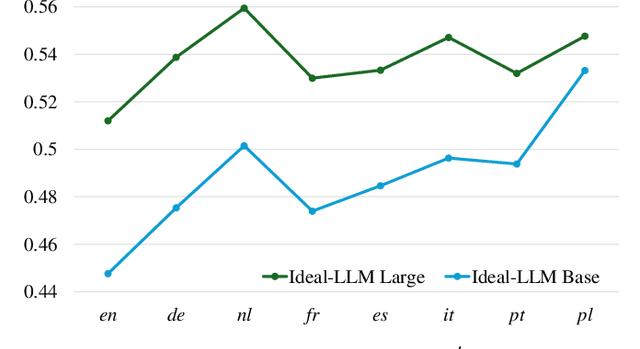

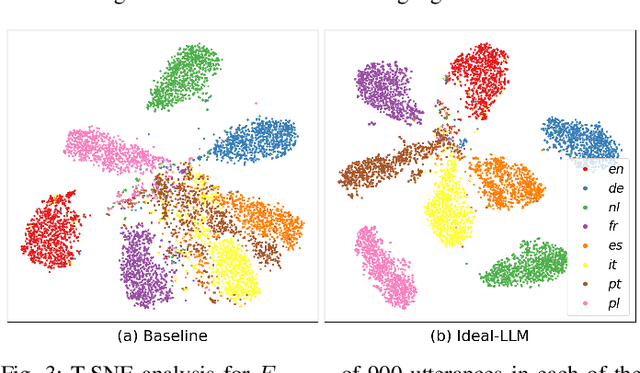

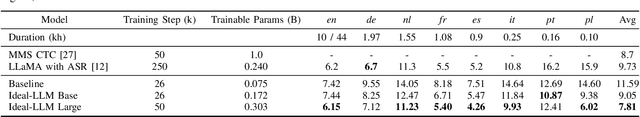

Abstract:Integrating audio encoders with LLMs through connectors has enabled these models to process and comprehend audio modalities, significantly enhancing speech-to-text tasks, including automatic speech recognition (ASR) and automatic speech translation (AST). However, these methods often overlook the critical aspect of language adaptation in multilingual settings, relying instead on multilingual data without adequately addressing language differences. To address this gap, we propose the Ideal-LLM model, which employs dual multilingual encoders to enrich language feature information and utilizes a language-adapted connector to target the adaptation of each language specifically. By leveraging the complementary strengths of Whisper and MMS encoders, our approach ensures richer multilingual representations. Additionally, the language-adapted connector enhances modal transformation via a language weight selector tailored for each language. Experimental results demonstrate that Ideal-LLM significantly improves ASR performance, achieving a 32.6% relative reduction in average word error rate compared to the standard speech encoder integrated with LLMs, and yields an average BLEU score of 36.78 on the AST task.

Zonotope-based Symbolic Controller Synthesis for Linear Temporal Logic Specifications

May 02, 2024

Abstract:This paper studies the controller synthesis problem for nonlinear control systems under linear temporal logic (LTL) specifications using zonotope techniques. A local-to-global control strategy is proposed for the desired specification expressed as an LTL formula. First, a novel approach is developed to divide the state space into finite zonotopes and constrained zonotopes, which are called cells and allowed to intersect with the neighbor cells. Second, from the intersection relation, a graph among all cells is generated to verify the realization of the accepting path for the LTL formula. The realization verification determines if there is a need for the control design, and also results in finite local LTL formulas. Third, once the accepting path is realized, a novel abstraction-based method is derived for the controller design. In particular, we only focus on the cells from the realization verification and approximate each cell thanks to properties of zonotopes. Based on local symbolic models and local LTL formulas, an iterative synthesis algorithm is proposed to design all local abstract controllers, whose existence and combination establish the global controller for the LTL formula. Finally, the proposed framework is illustrated via a path planning problem of mobile robots.

TIER: Text-Image Encoder-based Regression for AIGC Image Quality Assessment

Jan 11, 2024

Abstract:Recently, AIGC image quality assessment (AIGCIQA), which aims to assess the quality of AI-generated images (AIGIs) from a human perception perspective, has emerged as a new topic in computer vision. Unlike common image quality assessment tasks where images are derived from original ones distorted by noise, blur, compression, etc., in AIGCIQA tasks, images are typically generated by generative models using text prompts. Considerable efforts have been made in the past years to advance AIGCIQA. However, most existing AIGCIQA methods regress predicted scores directly from individual generated images, overlooking the information contained in the text prompts of these images. This oversight partially limits the performance of these AIGCIQA methods. To address this issue, we propose a text-image encoder-based regression (TIER) framework. Specifically, we process the generated images and their corresponding text prompts as inputs, utilizing a text encoder and an image encoder to extract features from these text prompts and generated images, respectively. To demonstrate the effectiveness of our proposed TIER method, we conduct extensive experiments on several mainstream AIGCIQA databases, including AGIQA-1K, AGIQA-3K, and AIGCIQA2023. The experimental results indicate that our proposed TIER method outperforms the baselines in most cases.

Motion Control of Two Mobile Robots under Allowable Collisions

Nov 15, 2023

Abstract:This letter investigates the motion control problem of two mobile robots under allowable collisions. Here, the allowable collisions mean that the collisions do not damage the mobile robots. The occurrence of the collisions is discussed and the effects of the collisions on the mobile robots are analyzed to develop a hybrid model of each mobile robot under allowable collisions. Based on the effects of the collisions, we show the necessity of redesigning the motion control strategy for mobile robots. Furthermore, impulsive control techniques are applied to redesign the motion control strategy to guarantee the task accomplishment for each mobile robot. Finally, an example is used to illustrate the redesigned motion control strategy.

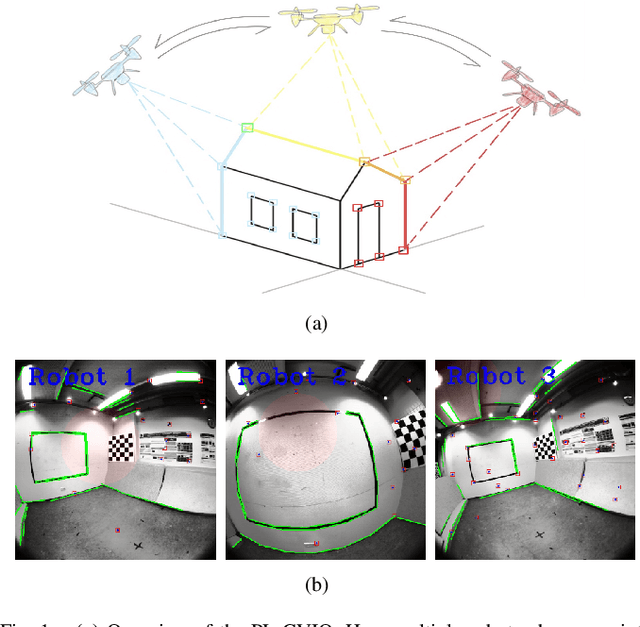

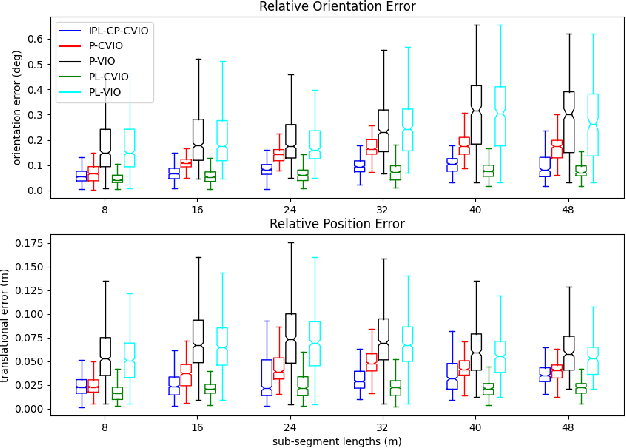

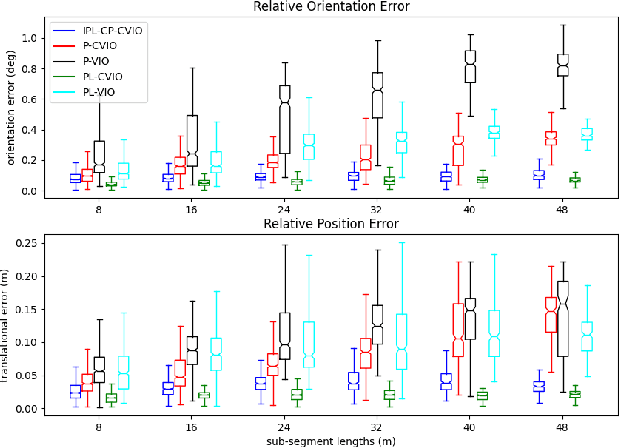

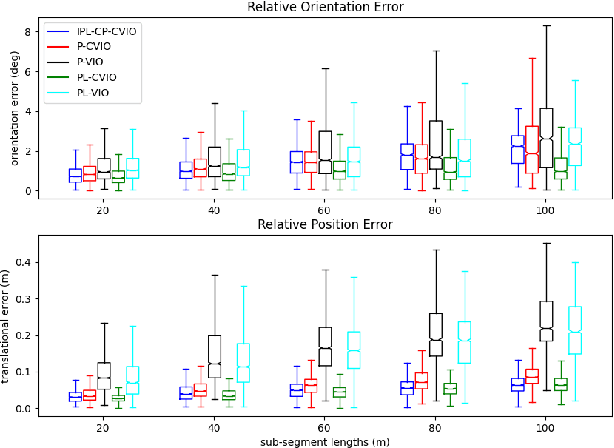

PL-CVIO: Point-Line Cooperative Visual-Inertial Odometry

Nov 09, 2023

Abstract:Low-feature environments are one of the main Achilles' heels of geometric computer vision (CV) algorithms. In most human-built scenes, which often have few features, lines can complement points. In this paper, we present a multi-robot cooperative visual-inertial navigation system (VINS) using both point and line features. By utilizing the covariance intersection (CI) update within the multi-state constraint Kalman filter (MSCKF) framework, each robot exploits not only its own point and line measurements, but also constraints of common point and common line features observed by its neighbors. The line features are parameterized and updated by utilizing the Closest Point representation. The proposed algorithm is validated extensively in both Monte-Carlo simulations and on a real-world dataset. The results show that the point-line cooperative visual-inertial odometry (PL-CVIO) outperforms the independent MSCKF and our previous work CVIO in both low-feature and rich-feature environments.
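
For readers unfamiliar with covariance intersection, the following is a minimal Python sketch of the generic CI fusion rule for two estimates whose cross-correlation is unknown. It is not the paper's MSCKF update: the 2x2 helpers, the function name `covariance_intersection`, the fixed weight `w`, and the example values are all assumptions for illustration. In practice `w` is usually chosen to minimize the trace or determinant of the fused covariance.

```python
from typing import List, Tuple

Mat2 = List[List[float]]
Vec2 = List[float]


def inv2(m: Mat2) -> Mat2:
    """Inverse of a 2x2 matrix."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


def scale2(m: Mat2, s: float) -> Mat2:
    return [[s * v for v in row] for row in m]


def add2(a: Mat2, b: Mat2) -> Mat2:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def matvec2(m: Mat2, v: Vec2) -> Vec2:
    return [row[0] * v[0] + row[1] * v[1] for row in m]


def covariance_intersection(x1: Vec2, P1: Mat2, x2: Vec2, P2: Mat2,
                            w: float) -> Tuple[Vec2, Mat2]:
    """Fuse (x1, P1) and (x2, P2) with weight w in [0, 1].

    P^-1 = w * P1^-1 + (1 - w) * P2^-1
    x    = P * (w * P1^-1 * x1 + (1 - w) * P2^-1 * x2)
    """
    if not 0.0 <= w <= 1.0:
        raise ValueError("w must lie in [0, 1]")
    info1 = scale2(inv2(P1), w)
    info2 = scale2(inv2(P2), 1.0 - w)
    P = inv2(add2(info1, info2))
    weighted = [u + v for u, v in zip(matvec2(info1, x1), matvec2(info2, x2))]
    return matvec2(P, weighted), P


# Hypothetical example: two estimates with identical covariance.
x_fused, P_fused = covariance_intersection(
    [1.0, 0.0], [[2.0, 0.0], [0.0, 2.0]],
    [3.0, 0.0], [[2.0, 0.0], [0.0, 2.0]],
    w=0.5,
)
```

With identical input covariances the fused covariance equals the input covariance instead of shrinking, as it would if the two estimates were assumed independent. That conservatism is what makes CI attractive when robots share features and their estimates may be correlated in unknown ways.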
