Yanyu Zhang

PLK-Calib: Single-shot and Target-less LiDAR-Camera Extrinsic Calibration using Plücker Lines

Mar 11, 2025

Abstract:Accurate LiDAR-Camera (LC) calibration is challenging but crucial for autonomous systems and robotics. In this paper, we propose two single-shot and target-less algorithms to estimate the calibration parameters between a LiDAR and a camera using line features. The first algorithm constructs line-to-line constraints by defining point-to-line projection errors and minimizes the projection error. The second algorithm (PLK-Calib) utilizes the co-perpendicular and co-parallel geometric properties of lines in Plücker (PLK) coordinates and decouples the rotation and translation into two constraints, enabling more accurate estimates. Our degeneracy analysis and Monte Carlo simulation indicate that three nonparallel line pairs are the minimal requirement for estimating the extrinsic parameters. Furthermore, we collect an LC calibration dataset with varying extrinsics under three different scenarios and use it to evaluate the performance of our proposed algorithms.
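As a rough illustration of why Plücker coordinates allow rotation and translation to be separated, the sketch below uses the textbook representation of a 3D line as a direction `d` and a moment `m = p × d`. Under a rigid transform `(R, t)`, the direction depends on `R` only, while `t` appears only in the moment. The function names are hypothetical, and this is generic line geometry under those assumptions, not the PLK-Calib algorithm itself.

```python
import math

def cross(a, b):
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def add(a, b):
    return tuple(x + y for x, y in zip(a, b))

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def matvec(R, v):
    """Multiply a 3x3 matrix (tuple of rows) by a 3-vector."""
    return tuple(dot(row, v) for row in R)

def rot_z(theta):
    """Rotation about the z-axis by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))

def plucker_from_points(p, q):
    """Plücker coordinates (direction d, moment m) of the line through p and q."""
    d = sub(q, p)
    m = cross(p, q)  # equals p x d, because p x p = 0
    return d, m

def transform_point(R, t, p):
    return add(matvec(R, p), t)

def transform_line(R, t, line):
    """Apply a rigid transform to a Plücker line.

    The direction is affected by R only; t enters only through the moment.
    """
    d, m = line
    d_new = matvec(R, d)
    m_new = add(matvec(R, m), cross(t, d_new))
    return d_new, m_new
```

Transforming the two defining points and rebuilding the line gives the same `(d, m)` as `transform_line`, which is a quick way to check the formulas numerically.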

NeRF-VIO: Map-Based Visual-Inertial Odometry with Initialization Leveraging Neural Radiance Fields

Mar 11, 2025

Abstract:A prior map serves as a foundational reference for localization in context-aware applications such as augmented reality (AR). Providing valuable contextual information about the environment, the prior map is a vital tool for mitigating drift. In this paper, we propose a map-based visual-inertial localization algorithm (NeRF-VIO) with initialization using neural radiance fields (NeRF). Our algorithm utilizes a multilayer perceptron model and redefines the loss function as the geodesic distance on \(SE(3)\), ensuring the invariance of the initialization model under a frame change within \(\mathfrak{se}(3)\). The evaluation demonstrates that our model outperforms existing NeRF-based initialization solutions in both accuracy and efficiency. By integrating a two-stage update mechanism within a multi-state constraint Kalman filter (MSCKF) framework, the state of NeRF-VIO is constrained by both captured images from an onboard camera and rendered images from a pre-trained NeRF model. The proposed algorithm is validated using a real-world AR dataset, and the results indicate that our two-stage update pipeline outperforms MSCKF across all data sequences.

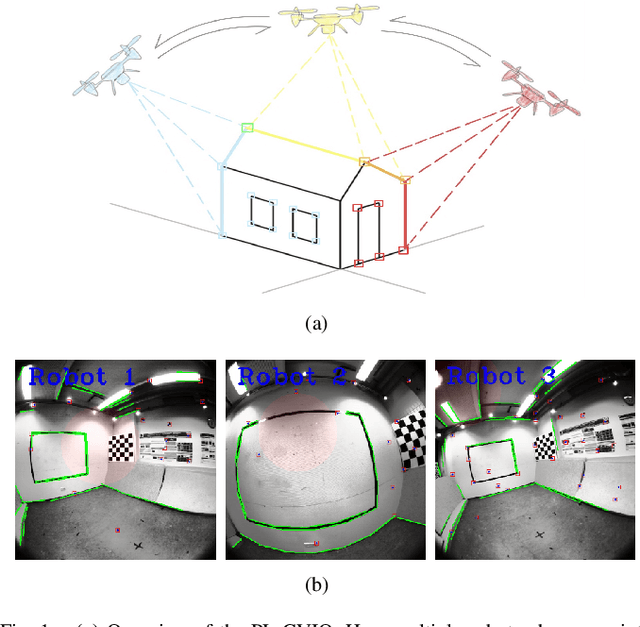

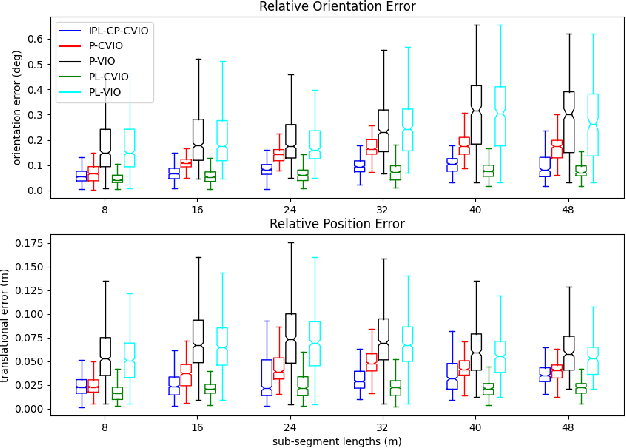

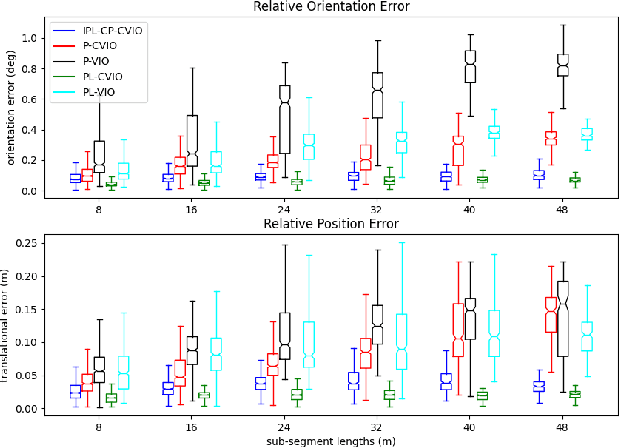

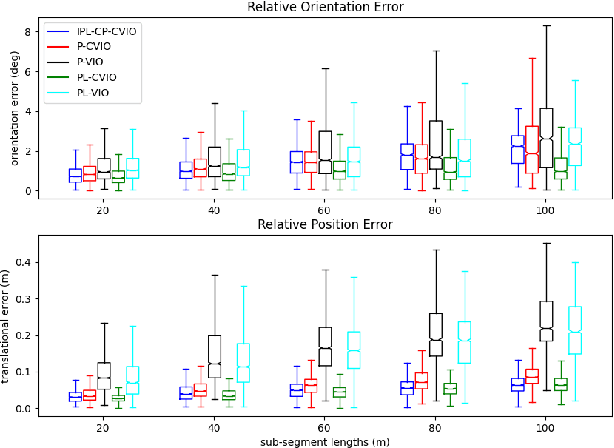

PL-CVIO: Point-Line Cooperative Visual-Inertial Odometry

Nov 09, 2023

Abstract:Low-feature environments are one of the main Achilles' heels of geometric computer vision (CV) algorithms. In human-built scenes, which often contain few point features, lines can complement points. In this paper, we present a multi-robot cooperative visual-inertial navigation system (VINS) using both point and line features. By utilizing the covariance intersection (CI) update within the multi-state constraint Kalman filter (MSCKF) framework, each robot exploits not only its own point and line measurements, but also constraints from common point and line features observed by its neighbors. The line features are parameterized and updated using the Closest Point representation. The proposed algorithm is validated extensively in both Monte Carlo simulations and a real-world dataset. The results show that the point-line cooperative visual-inertial odometry (PL-CVIO) outperforms the independent MSCKF and our previous work CVIO in both low-feature and rich-feature environments.
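For readers unfamiliar with covariance intersection, the sketch below shows the generic fusion rule for two estimates whose cross-correlation is unknown: the fused information matrix is a convex combination of the two input information matrices. It is a 2D toy version with hypothetical function names and a simple grid search over the weight `w`; it is not the CI update used inside the PL-CVIO filter.

```python
def inv2(M):
    """Inverse of a 2x2 matrix given as nested lists."""
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

def covariance_intersection(x1, P1, x2, P2, w):
    """Fuse (x1, P1) and (x2, P2) with weight w in [0, 1].

    P^-1 = w * P1^-1 + (1 - w) * P2^-1
    x    = P * (w * P1^-1 * x1 + (1 - w) * P2^-1 * x2)
    """
    I1, I2 = inv2(P1), inv2(P2)
    info = [[w * I1[i][j] + (1 - w) * I2[i][j] for j in range(2)]
            for i in range(2)]
    P = inv2(info)
    v = [w * sum(I1[i][j] * x1[j] for j in range(2))
         + (1 - w) * sum(I2[i][j] * x2[j] for j in range(2))
         for i in range(2)]
    x = [sum(P[i][j] * v[j] for j in range(2)) for i in range(2)]
    return x, P

def best_weight(P1, P2, steps=100):
    """Grid search for the weight that minimizes the trace of the fused covariance."""
    best_w, best_trace = 0.0, float("inf")
    for k in range(steps + 1):
        w = k / steps
        _, P = covariance_intersection([0.0, 0.0], P1, [0.0, 0.0], P2, w)
        trace = P[0][0] + P[1][1]
        if trace < best_trace:
            best_w, best_trace = w, trace
    return best_w
```

Unlike a standard Kalman update, this rule never claims more confidence than is justified when the two estimates share information, which is why it suits robots that exchange estimates of commonly observed features.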

Visual Navigation Using Sparse Optical Flow and Time-to-Transit

Nov 18, 2021

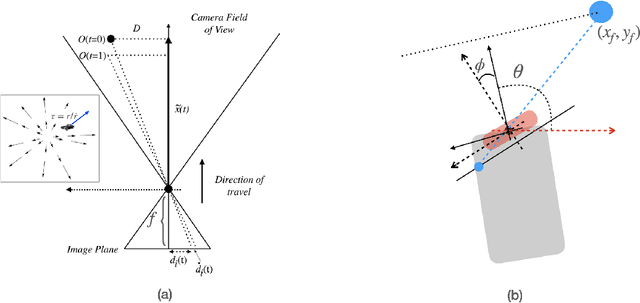

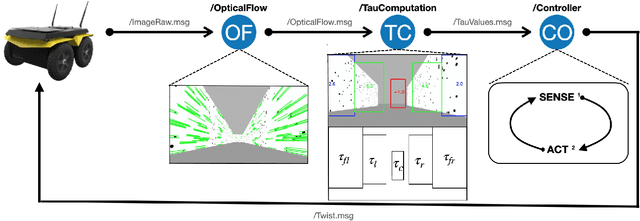

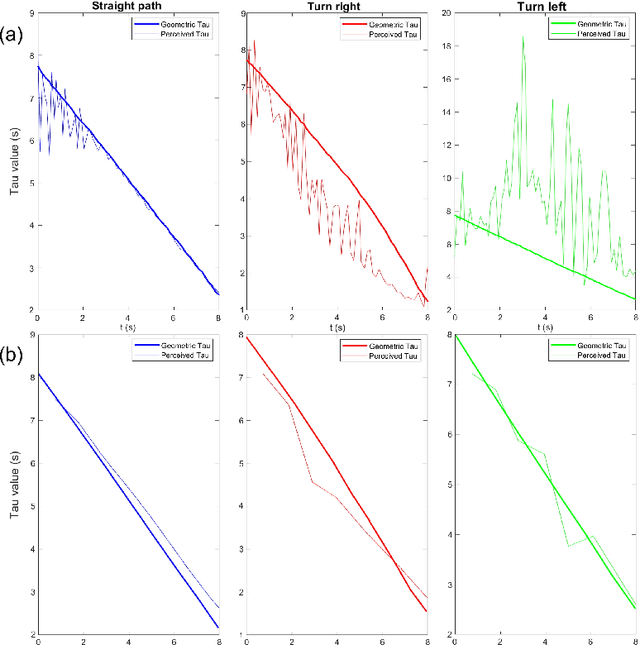

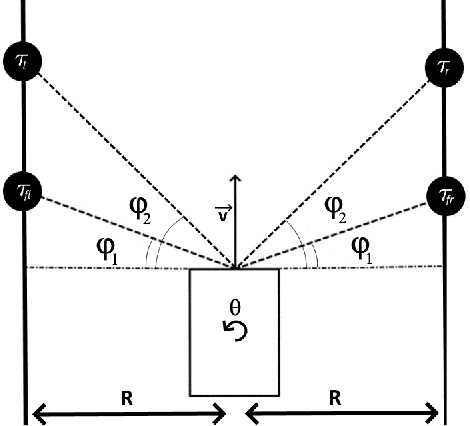

Abstract:Drawing inspiration from biology, we describe the way in which visual sensing with a monocular camera can provide a reliable signal for navigation of mobile robots. The work takes inspiration from a classic paper by Lee and Reddish (Nature, 1981, https://doi.org/10.1038/293293a0) in which they outline a behavioral strategy pursued by diving sea birds based on a visual cue called time-to-contact. A closely related concept of time-to-transit, tau, is defined, and it is shown that idealized steering laws based on monocular camera perceptions of tau can reliably and robustly steer a mobile vehicle within a wide variety of spaces in which features perceived to lie on walls and other objects in the environment provide adequate visual cues. The contribution of the paper is two-fold. It provides a simple theory of robust vision-based steering control. It goes on to show how the theory guides the implementation of robust visual navigation using ROS-Gazebo simulations as well as deployment and experiments with a camera-equipped Jackal robot. As far as we know, the experiments described below are the first to demonstrate visual navigation based on tau.
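To make the tau cue concrete, the sketch below assumes a pinhole camera translating along its optical axis at constant speed `v` past a static feature at lateral offset `X` and depth `Z`. The image coordinate is `x = f X / Z`, so `x / (dx/dt) = Z / v`, the time until the feature crosses the plane through the camera perpendicular to the optical axis. The function names and the finite-difference estimate are assumptions made for illustration; the paper's own steering laws are not reproduced here.

```python
def project(f, X, Z):
    """Pinhole projection of a point at lateral offset X and depth Z."""
    return f * X / Z

def time_to_transit(x_prev, x_curr, dt):
    """Estimate tau = x / (dx/dt) from two consecutive image coordinates.

    Coordinates are measured relative to the principal point. Returns
    infinity when the feature does not move in the image.
    """
    x_dot = (x_curr - x_prev) / dt
    if x_dot == 0.0:
        return float("inf")
    return x_curr / x_dot
```

Note that tau needs neither the focal length nor the metric depth of the feature, only its position and velocity in the image, which is what makes it usable with a single uncalibrated monocular camera. With a backward difference, the estimate lags the true value by one frame interval.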

Deep Reinforcement Learning with Mixed Convolutional Network

Oct 06, 2020

Abstract:Recent research has shown that models mapping raw pixels from a single front-facing camera directly to steering commands are surprisingly powerful. This paper presents a convolutional neural network (CNN) that plays CarRacing-v0 in OpenAI Gym using imitation learning. The dataset is generated by playing the game manually in Gym, and a data augmentation method expands it to four times its original size. We also read the true speed, four ABS sensors, steering wheel position, and gyroscope for each image and design a mixed model that combines the sensor input with the image input. After training, this model can automatically detect the boundaries of road features and drive the robot like a human. Compared with AlexNet and VGG16 on average reward in CarRacing-v0, our model achieves the best overall performance.

Intelligent Hotel ROS-based Service Robot

Sep 01, 2020

Abstract:With advances in artificial intelligence (AI) technology, many studies have examined how robots could replace human labor. In this paper, we present a ROS-based intelligent hotel robot that simplifies the check-in process. We use a Pioneer 3-DX robot and consider different environment settings. Equipped with a Hokuyo LiDAR and an Xbox Kinect camera, the robot can plan routes accurately and reach rooms on different floors. In addition, we add an intelligent voice system that serves as an assistant for customers.

5G Utility Pole Planner Using Google Street View and Mask R-CNN

Aug 26, 2020

Abstract:With advances in fifth-generation (5G) cellular network technology, many studies have examined how to build 5G networks for smart cities. Previous research has shown that street lighting poles and smart light poles can serve as 5G access points. To determine the positions of these points, this paper discusses a new way to identify poles based on Mask R-CNN, which extends Fast R-CNNs by employing recursive Bayesian filtering and performing proposal propagation and reuse. The dataset contains 3,000 high-resolution images from Google Maps. To make training faster, we used a very efficient GPU implementation of the convolution operation. We achieved a training error rate of 7.86% and a test error rate of 32.03%. Finally, we used the immune algorithm to place 5G poles in the smart cities.
