Chiara Boretti

Event-Based Eye Tracking. AIS 2024 Challenge Survey

Apr 17, 2024

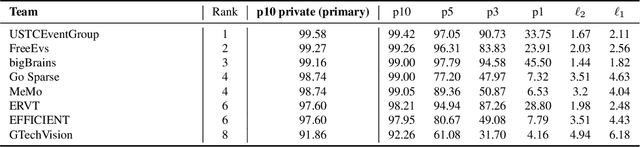

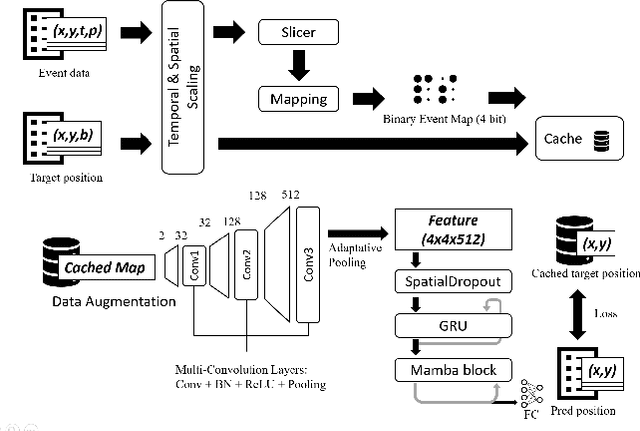

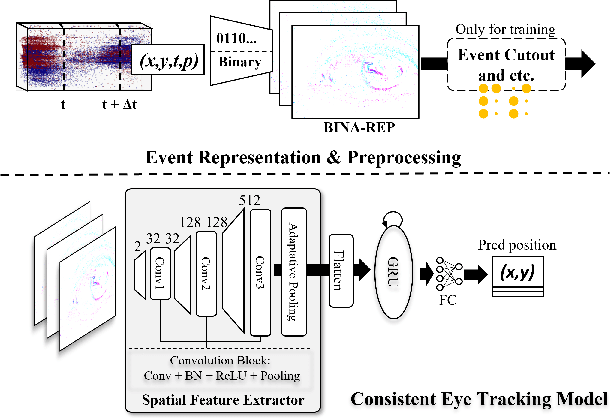

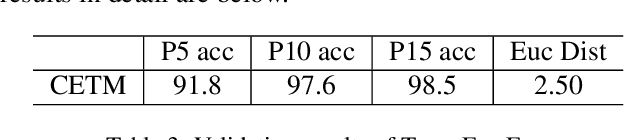

Abstract: This survey reviews the AIS 2024 Event-Based Eye Tracking (EET) Challenge. The task of the challenge focuses on processing eye movement recorded with event cameras and predicting the pupil center of the eye. The challenge emphasizes efficient eye tracking with event cameras to achieve a good trade-off between task accuracy and efficiency. During the challenge period, 38 participants registered for the Kaggle competition, and 8 teams submitted a challenge factsheet. The novel and diverse methods from the submitted factsheets are reviewed and analyzed in this survey to advance future event-based eye tracking research.

Visual Navigation Using Sparse Optical Flow and Time-to-Transit

Nov 18, 2021

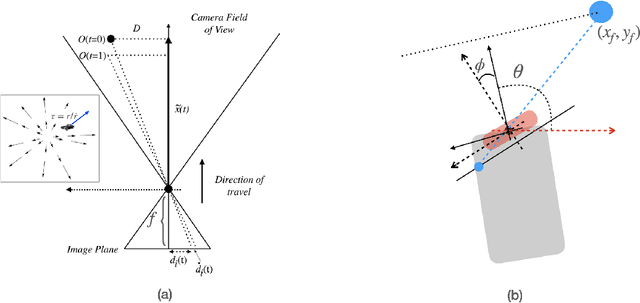

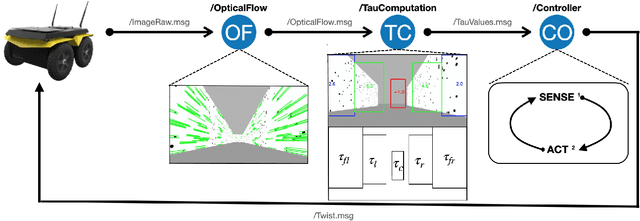

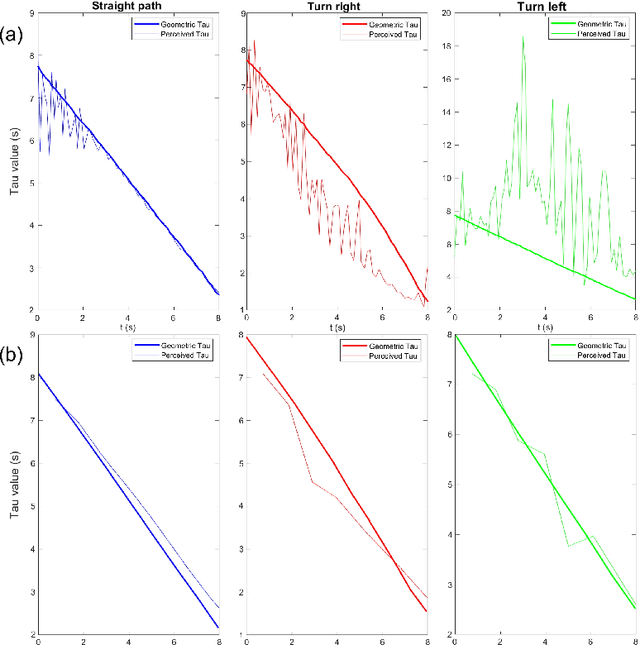

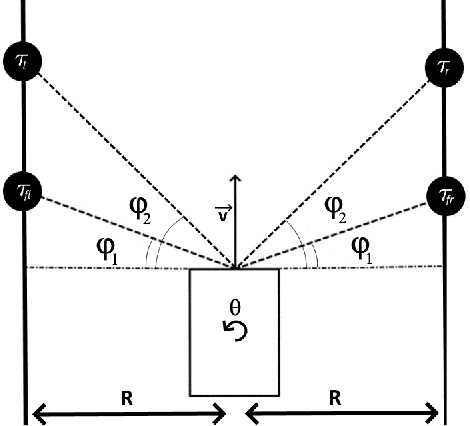

Abstract: Drawing inspiration from biology, we describe the way in which visual sensing with a monocular camera can provide a reliable signal for navigation of mobile robots. The work takes inspiration from a classic paper by Lee and Reddish (Nature, 1981, https://doi.org/10.1038/293293a0) in which they outline a behavioral strategy pursued by diving sea birds based on a visual cue called time-to-contact. A closely related concept of time-to-transit, tau, is defined, and it is shown that idealized steering laws based on monocular camera perceptions of tau can reliably and robustly steer a mobile vehicle within a wide variety of spaces in which features perceived to lie on walls and other objects in the environment provide adequate visual cues. The contribution of the paper is two-fold. It provides a simple theory of robust vision-based steering control. It goes on to show how the theory guides the implementation of robust visual navigation using ROS-Gazebo simulations as well as deployment and experiments with a camera-equipped Jackal robot. As far as we know, the experiments described below are the first to demonstrate visual navigation based on tau.
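
The abstract does not spell out how tau is computed, so the following is only a minimal sketch under a stated assumption: tau for a tracked feature is taken as the ratio of its horizontal image coordinate (measured from the optical axis) to its horizontal optical flow, which is the form commonly used in tau theory. The function name `time_to_transit`, the `eps` threshold, and the synthetic pinhole-camera values are all hypothetical and are not taken from the paper.

```python
import numpy as np

def time_to_transit(x, x_dot, eps=1e-9):
    """Assumed definition: tau = x / x_dot per feature.

    x     : horizontal image coordinates relative to the optical axis
    x_dot : horizontal optical flow of the same features
    Features with (near-)zero flow get NaN, since tau is undefined there.
    """
    x = np.atleast_1d(np.asarray(x, dtype=float))
    x_dot = np.atleast_1d(np.asarray(x_dot, dtype=float))
    tau = np.full_like(x, np.nan)
    moving = np.abs(x_dot) > eps
    tau[moving] = x[moving] / x_dot[moving]
    return tau

# Synthetic check: pinhole camera translating along its optical axis.
f, v = 500.0, 2.0            # focal length (pixels), forward speed (m/s); illustrative values
X = np.array([1.0, -1.5])    # lateral offsets of two static features (m)
Z = np.array([4.0, 6.0])     # depths of the same features (m)

x = f * X / Z                # perspective projection
x_dot = f * X * v / Z**2     # image velocity when depth shrinks at rate v

tau = time_to_transit(x, x_dot)
print(tau)                   # equals Z / v: time until each feature passes the camera plane
```

Under these assumptions tau reduces to depth divided by forward speed, which is why it can be read directly from sparse optical flow without knowing either quantity on its own.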
