Pierre Gentine

Power Ensemble Aggregation for Improved Extreme Event AI Prediction

Nov 14, 2025

Abstract:This paper addresses the critical challenge of improving predictions of climate extreme events, specifically heat waves, using machine learning methods. Our work is framed as a classification problem in which we try to predict whether surface air temperature will exceed its q-th local quantile within a specified timeframe. Our key finding is that aggregating ensemble predictions using a power mean significantly enhances the classifier's performance. By making a machine-learning-based weather forecasting model generative and applying this non-linear aggregation method, we achieve better accuracy in predicting extreme heat events than with the typical mean prediction from the same model. Our power aggregation method shows promise and adaptability, as its optimal performance varies with the quantile threshold chosen, demonstrating increased effectiveness when predicting higher extremes.
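As a rough illustration of the aggregation idea described in this abstract, the sketch below computes a generalized (power) mean over ensemble member outputs. The abstract does not specify what quantity each member produces or which exponent is used, so the function name `power_mean`, the exponent `p`, and the example values are all assumptions made for illustration only.

```python
import math


def power_mean(values, p):
    """Generalized (power) mean of strictly positive values.

    p = 1 gives the arithmetic mean, p -> 0 the geometric mean,
    and larger p weights the largest ensemble members more heavily.
    """
    if not values:
        raise ValueError("values must be non-empty")
    if any(v <= 0 for v in values):
        raise ValueError("this sketch assumes strictly positive values")
    n = len(values)
    if p == 0:
        # Limit case of the power mean: the geometric mean.
        return math.exp(sum(math.log(v) for v in values) / n)
    return (sum(v ** p for v in values) / n) ** (1.0 / p)


# Hypothetical per-member exceedance scores for one grid point.
ensemble_scores = [0.1, 0.2, 0.9]
mean_score = power_mean(ensemble_scores, 1)   # ordinary ensemble mean
power_score = power_mean(ensemble_scores, 4)  # emphasizes the extreme member
```

With an exponent above 1, a single member that signals an extreme pulls the aggregate up more than it would under the ordinary mean, which is one plausible reading of why a non-linear aggregation could help for rare events.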

PnP-DA: Towards Principled Plug-and-Play Integration of Variational Data Assimilation and Generative Models

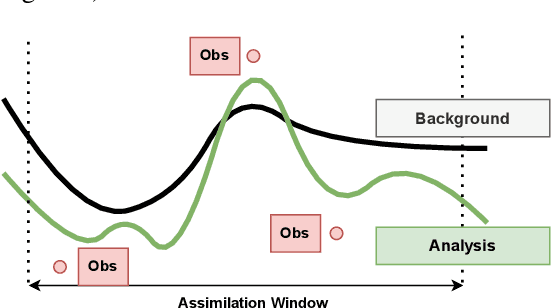

Aug 01, 2025

Abstract:Earth system modeling presents a fundamental challenge in scientific computing: capturing complex, multiscale nonlinear dynamics in computationally efficient models while minimizing forecast errors caused by necessary simplifications. Even the most powerful AI- or physics-based forecast systems suffer from gradual error accumulation. Data assimilation (DA) aims to mitigate these errors by optimally blending (noisy) observations with prior model forecasts, but conventional variational methods often assume Gaussian error statistics that fail to capture the true, non-Gaussian behavior of chaotic dynamical systems. We propose PnP-DA, a Plug-and-Play algorithm that alternates (1) a lightweight, gradient-based analysis update (using a Mahalanobis-distance misfit on new observations) with (2) a single forward pass through a pretrained generative prior conditioned on the background forecast via a conditional Wasserstein coupling. This strategy relaxes restrictive statistical assumptions and leverages rich historical data without requiring an explicit regularization functional, and it also avoids the need to backpropagate gradients through the complex neural network that encodes the prior during assimilation cycles. Experiments on standard chaotic testbeds demonstrate that this strategy consistently reduces forecast errors across a range of observation sparsities and noise levels, outperforming classical variational methods.
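The two-step alternation described in this abstract can be sketched in a few lines. This is only a toy under strong assumptions, not the authors' implementation: the observation operator is assumed to pick out individual state components, the observation error covariance is assumed diagonal, and the pretrained generative prior is replaced by a hypothetical stand-in (`placeholder_prior`) that simply pulls the state toward the background. All function names, the step size, and the iteration count are illustrative.

```python
def misfit_gradient(x, y, obs_idx, obs_var):
    """Gradient of 0.5 * (y - Hx)^T R^{-1} (y - Hx) with respect to x.

    Assumptions: H selects the components listed in obs_idx,
    and R is diagonal with variances obs_var.
    """
    grad = [0.0] * len(x)
    for k, i in enumerate(obs_idx):
        grad[i] = (x[i] - y[k]) / obs_var[k]
    return grad


def placeholder_prior(x, background, weight=0.5):
    """Hypothetical stand-in for the pretrained generative prior.

    A real prior would be a single forward pass through a neural network
    conditioned on the background; here we only blend toward it.
    """
    return [(1.0 - weight) * xi + weight * bi for xi, bi in zip(x, background)]


def pnp_da(background, y, obs_idx, obs_var, step=0.1, n_iter=50):
    """Alternate a gradient step on the observation misfit with a prior step."""
    x = list(background)
    for _ in range(n_iter):
        grad = misfit_gradient(x, y, obs_idx, obs_var)
        x = [xi - step * gi for xi, gi in zip(x, grad)]  # (1) analysis update
        x = placeholder_prior(x, background)             # (2) prior step
    return x


# Three-component state, only the first component is observed.
analysis = pnp_da([0.0, 0.0, 0.0], y=[1.0], obs_idx=[0], obs_var=[0.1])
```

Note that the prior step is applied as a black box: no gradient ever flows through it, which mirrors the abstract's point about avoiding backpropagation through the network that encodes the prior.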

Align-DA: Align Score-based Atmospheric Data Assimilation with Multiple Preferences

May 28, 2025

Abstract:Data assimilation (DA) aims to estimate the full state of a dynamical system by combining partial and noisy observations with a prior model forecast, commonly referred to as the background. In atmospheric applications, this problem is fundamentally ill-posed due to the sparsity of observations relative to the high-dimensional state space. Traditional methods address this challenge by simplifying background priors to regularize the solution, which are empirical and require continual tuning for application. Inspired by alignment techniques in text-to-image diffusion models, we propose Align-DA, which formulates DA as a generative process and uses reward signals to guide background priors, replacing manual tuning with data-driven alignment. Specifically, we train a score-based model in the latent space to approximate the background-conditioned prior, and align it using three complementary reward signals for DA: (1) assimilation accuracy, (2) forecast skill initialized from the assimilated state, and (3) physical adherence of the analysis fields. Experiments with multiple reward signals demonstrate consistent improvements in analysis quality across different evaluation metrics and observation-guidance strategies. These results show that preference alignment, implemented as a soft constraint, can automatically adapt complex background priors tailored to DA, offering a promising new direction for advancing the field.

Strictly Constrained Generative Modeling via Split Augmented Langevin Sampling

May 26, 2025

Abstract:Deep generative models hold great promise for representing complex physical systems, but their deployment is currently limited by the lack of guarantees on the physical plausibility of the generated outputs. Ensuring that known physical constraints are enforced is therefore critical when applying generative models to scientific and engineering problems. We address this limitation by developing a principled framework for sampling from a target distribution while rigorously satisfying physical constraints. Leveraging the variational formulation of Langevin dynamics, we propose Split Augmented Langevin (SAL), a novel primal-dual sampling algorithm that enforces constraints progressively through variable splitting, with convergence guarantees. While the method is developed theoretically for Langevin dynamics, we demonstrate its effective applicability to diffusion models. In particular, we use constrained diffusion models to generate physical fields satisfying energy and mass conservation laws. We apply our method to diffusion-based data assimilation on a complex physical system, where enforcing physical constraints substantially improves both forecast accuracy and the preservation of critical conserved quantities. We also demonstrate the potential of SAL for challenging feasibility problems in optimal control.

CausalDynamics: A large-scale benchmark for structural discovery of dynamical causal models

May 22, 2025

Abstract:Causal discovery for dynamical systems poses a major challenge in fields where active interventions are infeasible. Most methods used to investigate these systems and their associated benchmarks are tailored to deterministic, low-dimensional and weakly nonlinear time-series data. To address these limitations, we present CausalDynamics, a large-scale benchmark and extensible data generation framework to advance the structural discovery of dynamical causal models. Our benchmark consists of true causal graphs derived from thousands of coupled ordinary and stochastic differential equations as well as two idealized climate models. We perform a comprehensive evaluation of state-of-the-art causal discovery algorithms for graph reconstruction on systems with noisy, confounded, and lagged dynamics. CausalDynamics consists of a plug-and-play, build-your-own coupling workflow that enables the construction of a hierarchy of physical systems. We anticipate that our framework will facilitate the development of robust causal discovery algorithms that are broadly applicable across domains while addressing their unique challenges. We provide a user-friendly implementation and documentation on https://kausable.github.io/CausalDynamics.

Deep Koopman operator framework for causal discovery in nonlinear dynamical systems

May 20, 2025

Abstract:We use a deep Koopman operator-theoretic formalism to develop a novel causal discovery algorithm, Kausal. Causal discovery aims to identify cause-effect mechanisms for better scientific understanding, explainable decision-making, and more accurate modeling. Standard statistical frameworks, such as Granger causality, lack the ability to quantify causal relationships in nonlinear dynamics due to the presence of complex feedback mechanisms, timescale mixing, and nonstationarity. This presents a challenge in studying many real-world systems, such as the Earth's climate. Meanwhile, Koopman operator methods have emerged as a promising tool for approximating nonlinear dynamics in a linear space of observables. In Kausal, we propose to leverage this powerful idea for causal analysis where optimal observables are inferred using deep learning. Causal estimates are then evaluated in a reproducing kernel Hilbert space, and defined as the distance between the marginal dynamics of the effect and the joint dynamics of the cause-effect observables. Our numerical experiments demonstrate Kausal's superior ability in discovering and characterizing causal signals compared to existing approaches of prescribed observables. Lastly, we extend our analysis to observations of El Niño-Southern Oscillation highlighting our algorithm's applicability to real-world phenomena. Our code is available at https://github.com/juannat7/kausal.

Generative emulation of chaotic dynamics with coherent prior

Apr 19, 2025

Abstract:Data-driven emulation of nonlinear dynamics is challenging due to long-range skill decay that often produces physically unrealistic outputs. Recent advances in generative modeling aim to address these issues by providing uncertainty quantification and correction. However, the quality of generated simulations remains heavily dependent on the choice of conditioning priors. In this work, we present Cohesion, an efficient generative framework for dynamics emulation that unifies principles of turbulence with diffusion-based modeling. Specifically, our method estimates the large-scale coherent structure of the underlying dynamics as guidance during the denoising process, where small-scale fluctuations in the flow are then resolved. These coherent priors are efficiently approximated using reduced-order models, such as deep Koopman operators, that allow for rapid generation of long prior sequences while maintaining stability over extended forecasting horizons. With this gain, we can reframe forecasting as trajectory planning, a common task in reinforcement learning, where conditional denoising is performed once over entire sequences, minimizing the computational cost of autoregressive-based generative methods. Empirical evaluations on chaotic systems of increasing complexity, including Kolmogorov flow, shallow water equations, and subseasonal-to-seasonal climate dynamics, demonstrate Cohesion's superior long-range forecasting skill and its ability to efficiently generate physically-consistent simulations, even in the presence of partially-observed guidance.

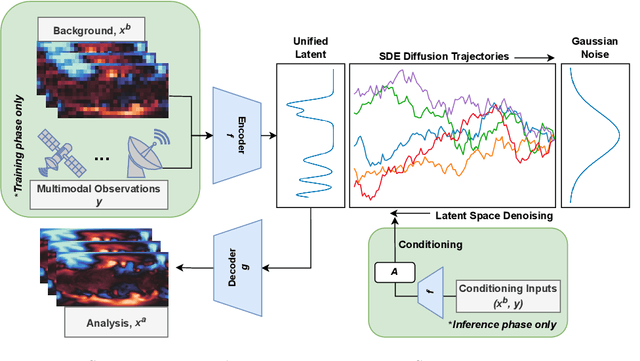

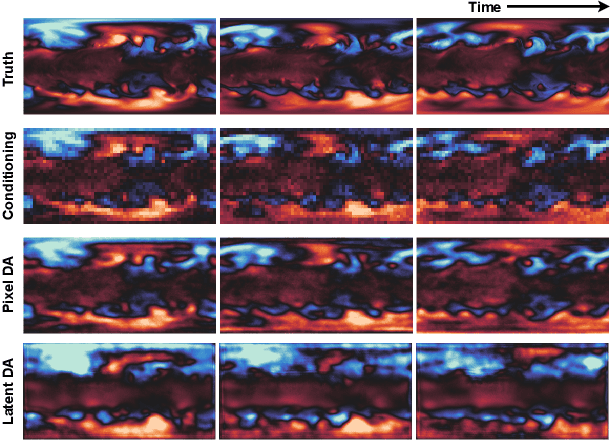

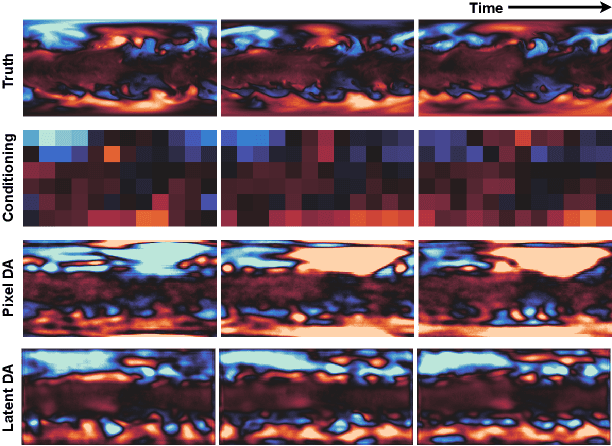

Deep Generative Data Assimilation in Multimodal Setting

Apr 10, 2024

Abstract:Robust integration of physical knowledge and data is key to improving computational simulations, such as Earth system models. Data assimilation is crucial for achieving this goal because it provides a systematic framework to calibrate model outputs with observations, which can include remote sensing imagery and ground station measurements, with uncertainty quantification. Conventional methods, including Kalman filters and variational approaches, inherently rely on simplifying linear and Gaussian assumptions, and can be computationally expensive. Nevertheless, with the rapid adoption of data-driven methods in many areas of computational sciences, we see the potential of emulating traditional data assimilation with deep learning, especially generative models. In particular, the diffusion-based probabilistic framework has large overlaps with data assimilation principles: both allow for conditional generation of samples with a Bayesian inverse framework. These models have shown remarkable success in text-conditioned image generation and image-controlled video synthesis. Likewise, one can frame data assimilation as observation-conditioned state calibration. In this work, we propose SLAMS: Score-based Latent Assimilation in Multimodal Setting. Specifically, we assimilate in-situ weather station data and ex-situ satellite imagery to calibrate the vertical temperature profiles, globally. Through extensive ablation, we demonstrate that SLAMS is robust even in low-resolution, noisy, and sparse data settings. To our knowledge, our work is the first to apply a deep generative framework for multimodal data assimilation using real-world datasets, an important step for building robust computational simulators, including the next-generation Earth system models. Our code is available at: https://github.com/yongquan-qu/SLAMS

Joint Parameter and Parameterization Inference with Uncertainty Quantification through Differentiable Programming

Mar 04, 2024

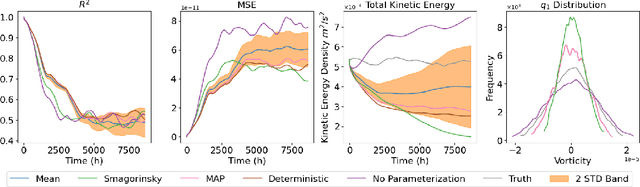

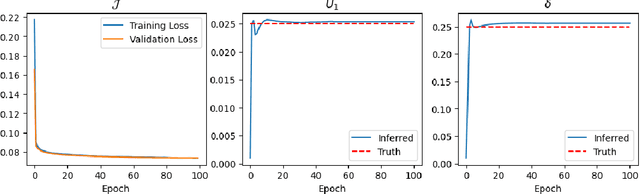

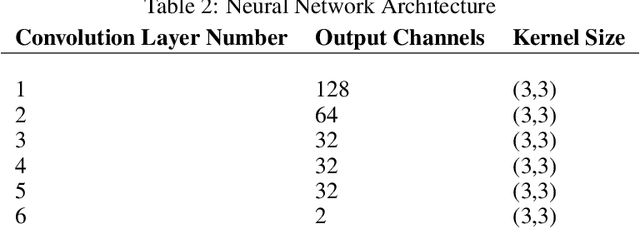

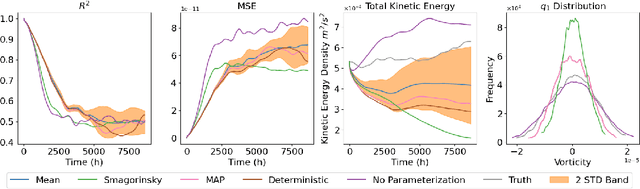

Abstract:Accurate representations of unknown and sub-grid physical processes through parameterizations (or closure) in numerical simulations with quantified uncertainty are critical for resolving the coarse-grained partial differential equations that govern many problems ranging from weather and climate prediction to turbulence simulations. Recent advances have seen machine learning (ML) increasingly applied to model these subgrid processes, resulting in the development of hybrid physics-ML models through the integration with numerical solvers. In this work, we introduce a novel framework for the joint estimation and uncertainty quantification of physical parameters and machine learning parameterizations in tandem, leveraging differentiable programming. The approach relies on online training and efficient Bayesian inference within a high-dimensional parameter space, both enabled by differentiable programming. This proof of concept underscores the substantial potential of differentiable programming in synergistically combining machine learning with differential equations, thereby enhancing the capabilities of hybrid physics-ML modeling.

ChaosBench: A Multi-Channel, Physics-Based Benchmark for Subseasonal-to-Seasonal Climate Prediction

Feb 01, 2024

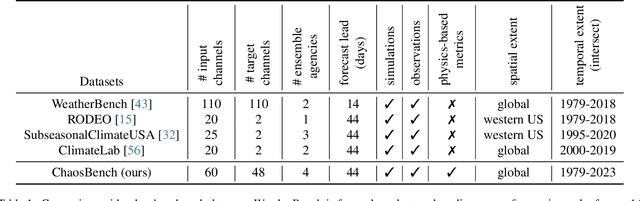

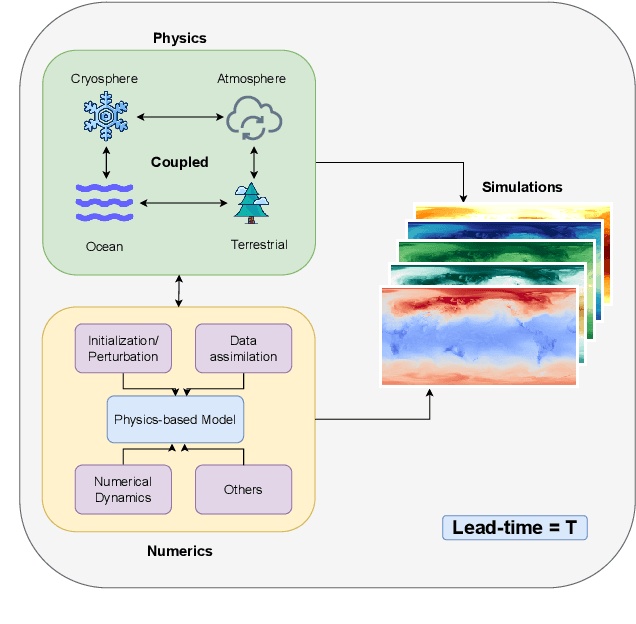

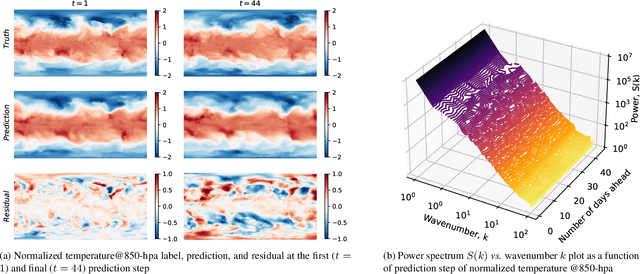

Abstract:Accurate prediction of climate in the subseasonal-to-seasonal scale is crucial for disaster readiness, reduced economic risk, and improved policy-making amidst climate change. Yet, S2S prediction remains challenging due to the chaotic nature of the system. At present, existing benchmarks for weather and climate applications tend to (1) have shorter forecasting ranges of up to 14 days, (2) not include a wide range of operational baseline forecasts, and (3) lack physics-based constraints for explainability. Thus, we propose ChaosBench, a large-scale, multi-channel, physics-based benchmark for S2S prediction. ChaosBench has over 460K frames of real-world observations and simulations, each with 60 variable-channels and spanning up to 45 years. We also propose several physics-based metrics, in addition to vision-based ones, that enable a more physically-consistent model. Furthermore, we include a diverse set of physics-based forecasts from 4 national weather agencies as baselines to our data-driven counterpart. We establish two tasks that vary in complexity: full and sparse dynamics prediction. Our benchmark is one of the first to perform large-scale evaluation on existing models including PanguWeather, FourCastNetV2, GraphCast, and ClimaX, and finds that methods originally developed for weather-scale applications fail on the S2S task. We release our benchmark code and datasets at https://leap-stc.github.io/ChaosBench.
