Steven Lu

Jet Propulsion Laboratory, California Institute of Technology

Leveraging Deep Learning for Physical Model Bias of Global Air Quality Estimates

Aug 06, 2025

Abstract:Air pollution is the world's largest environmental risk factor for human disease and premature death, resulting in more than 6 million premature deaths in 2019. Modeling surface ozone, one of the most important air pollutants, remains a challenge, particularly at scales relevant for human health impacts. The drivers of global ozone trends at these scales are largely unknown, which limits the practical use of physics-based models. We employ a 2D Convolutional Neural Network based architecture that estimates surface ozone MOMO-Chem model residuals, referred to as model bias. We demonstrate the potential of this technique in North America and Europe, highlighting its ability to better capture physical model residuals compared to a traditional machine learning method. We assess the impact of incorporating land use information from high-resolution satellite imagery to improve model estimates. Importantly, we discuss how our results can improve our scientific understanding of the factors impacting ozone bias at urban scales, an understanding that can be used to improve environmental policy.

Uncertainty Quantification for Surface Ozone Emulators using Deep Learning

Aug 06, 2025

Abstract:Air pollution is a global hazard, and as of 2023, 94% of the world's population is exposed to unsafe pollution levels. Surface Ozone (O3), an important pollutant, and the drivers of its trends are difficult to model, and traditional physics-based models fall short in their practical use at scales relevant to human-health impacts. Deep Learning-based emulators have shown promise in capturing complex climate patterns, but overall lack the interpretability necessary to support critical decision making for policy changes and public health measures. We implement an uncertainty-aware U-Net architecture to predict the Multi-mOdel Multi-cOnstituent Chemical data assimilation (MOMO-Chem) model's surface ozone residuals (bias) using Bayesian and quantile regression methods. We demonstrate the capability of our techniques in regional estimation of bias in North America and Europe for June 2019. We compare the uncertainty quantification (UQ) scores of our two UQ methodologies, discern which ground stations are optimal and sub-optimal candidates for MOMO-Chem bias correction, and evaluate the impact of land-use information in surface ozone residual modeling.
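
As a rough illustration of the quantile regression idea mentioned in the abstract above, the sketch below computes the pinball (quantile) loss, the standard training objective for quantile regression, together with the empirical coverage of a prediction interval. The abstract does not say which loss or UQ scores the authors used, so the function names `pinball_loss` and `interval_coverage`, the quantile levels, and all numbers are assumptions made for illustration only.

```python
def pinball_loss(y_true, y_pred, tau):
    """Mean pinball (quantile) loss at quantile level tau, with 0 < tau < 1."""
    if not 0.0 < tau < 1.0:
        raise ValueError("tau must lie strictly between 0 and 1")
    total = 0.0
    for y, y_hat in zip(y_true, y_pred):
        diff = y - y_hat
        # Under-prediction (diff >= 0) is weighted by tau,
        # over-prediction (diff < 0) by (1 - tau).
        total += tau * diff if diff >= 0 else (tau - 1.0) * diff
    return total / len(y_true)


def interval_coverage(y_true, lower, upper):
    """Fraction of observations that fall inside [lower, upper]."""
    hits = sum(1 for y, lo, hi in zip(y_true, lower, upper) if lo <= y <= hi)
    return hits / len(y_true)


# Toy bias values (made up for illustration, not taken from the paper).
observed_bias = [-3.0, -1.0, 0.5, 2.0, 4.0]
q05 = [-4.0, -2.0, -1.0, 0.0, 1.0]  # hypothetical 5% quantile predictions
q95 = [-1.0, 1.0, 2.0, 3.0, 3.5]    # hypothetical 95% quantile predictions

print("pinball@0.05:", pinball_loss(observed_bias, q05, 0.05))
print("pinball@0.95:", pinball_loss(observed_bias, q95, 0.95))
print("coverage:", interval_coverage(observed_bias, q05, q95))
```

A well-calibrated pair of 5% and 95% quantile predictions would be expected to cover roughly 90% of held-out observations; on a toy sample this small, the coverage number only shows how the check is computed.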

Evaluating the Retrieval Robustness of Large Language Models

May 28, 2025

Abstract:Retrieval-augmented generation (RAG) generally enhances large language models' (LLMs) ability to solve knowledge-intensive tasks. But RAG may also lead to performance degradation due to imperfect retrieval and the model's limited ability to leverage retrieved content. In this work, we evaluate the robustness of LLMs in practical RAG setups (henceforth retrieval robustness). We focus on three research questions: (1) whether RAG is always better than non-RAG; (2) whether more retrieved documents always lead to better performance; and (3) whether document order impacts results. To facilitate this study, we establish a benchmark of 1500 open-domain questions, each with retrieved documents from Wikipedia. We introduce three robustness metrics, each corresponding to one research question. Our comprehensive experiments, involving 11 LLMs and 3 prompting strategies, reveal that all of these LLMs exhibit surprisingly high retrieval robustness; nonetheless, different degrees of imperfect robustness hinder them from fully utilizing the benefits of RAG.

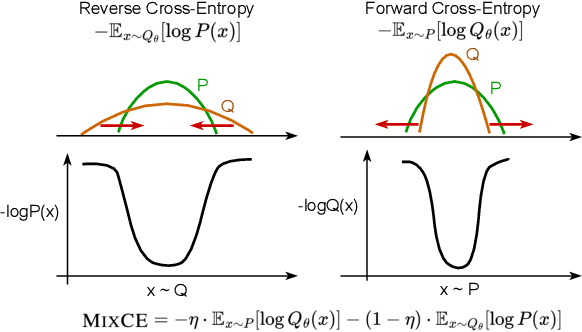

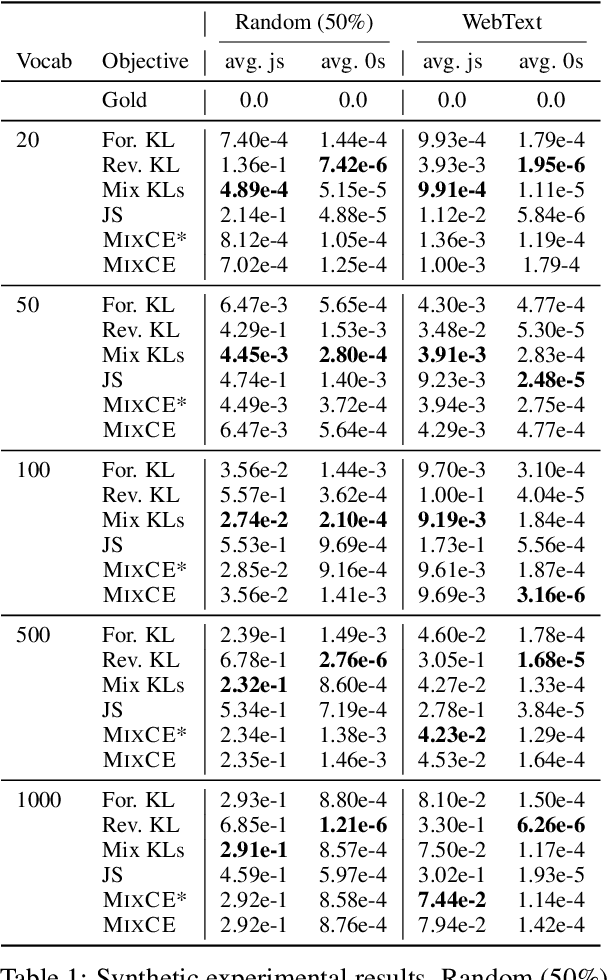

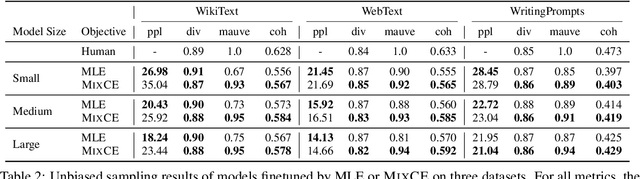

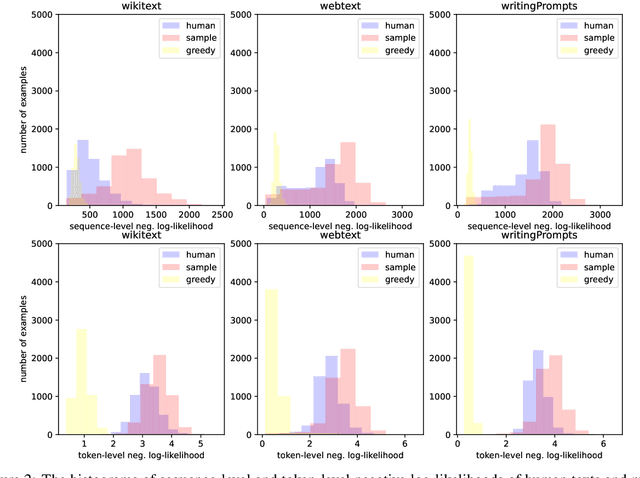

MixCE: Training Autoregressive Language Models by Mixing Forward and Reverse Cross-Entropies

May 26, 2023

Abstract:Autoregressive language models are trained by minimizing the cross-entropy of the model distribution Q relative to the data distribution P -- that is, minimizing the forward cross-entropy, which is equivalent to maximum likelihood estimation (MLE). We have observed that models trained in this way may "over-generalize", in the sense that they produce non-human-like text. Moreover, we believe that reverse cross-entropy, i.e., the cross-entropy of P relative to Q, is a better reflection of how a human would evaluate text generated by a model. Hence, we propose learning with MixCE, an objective that mixes the forward and reverse cross-entropies. We evaluate models trained with this objective on synthetic data settings (where P is known) and real data, and show that the resulting models yield better generated text without complex decoding strategies. Our code and models are publicly available at https://github.com/bloomberg/mixce-acl2023
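
The two cross-entropies named in the abstract above can be made concrete on a small discrete example where P is known. The sketch below is an illustration only: it assumes the mixture is a convex combination with a hypothetical weight `eta`, and the distributions are toy values, not data from the paper. It is not the authors' training code, which is available at the repository linked above.

```python
import math


def cross_entropy(p, q):
    """H(p, q) = -sum_x p(x) * log q(x); terms with p(x) == 0 contribute 0."""
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)


def mixce(p, q, eta=0.5):
    """Assumed mixture: eta * forward CE + (1 - eta) * reverse CE."""
    forward = cross_entropy(p, q)  # expectation under the data distribution P
    reverse = cross_entropy(q, p)  # expectation under the model distribution Q
    return eta * forward + (1.0 - eta) * reverse


# Toy distributions over three outcomes (illustrative values only).
P = [0.49, 0.49, 0.02]      # "data": the third outcome is rare
Q_match = [0.49, 0.49, 0.02]
Q_over = [0.40, 0.40, 0.20]  # model puts too much mass on the rare outcome

for name, Q in [("Q_match", Q_match), ("Q_over", Q_over)]:
    print(name,
          "forward:", round(cross_entropy(P, Q), 4),
          "reverse:", round(cross_entropy(Q, P), 4),
          "mixed:", round(mixce(P, Q, eta=0.5), 4))
```

In this toy case the reverse term rises sharply for `Q_over`, because the model's samples land on an outcome that P considers unlikely. That is the "over-generalization" the abstract describes, and the reverse term is the part of the objective that reacts most strongly to it.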

BloombergGPT: A Large Language Model for Finance

Mar 30, 2023

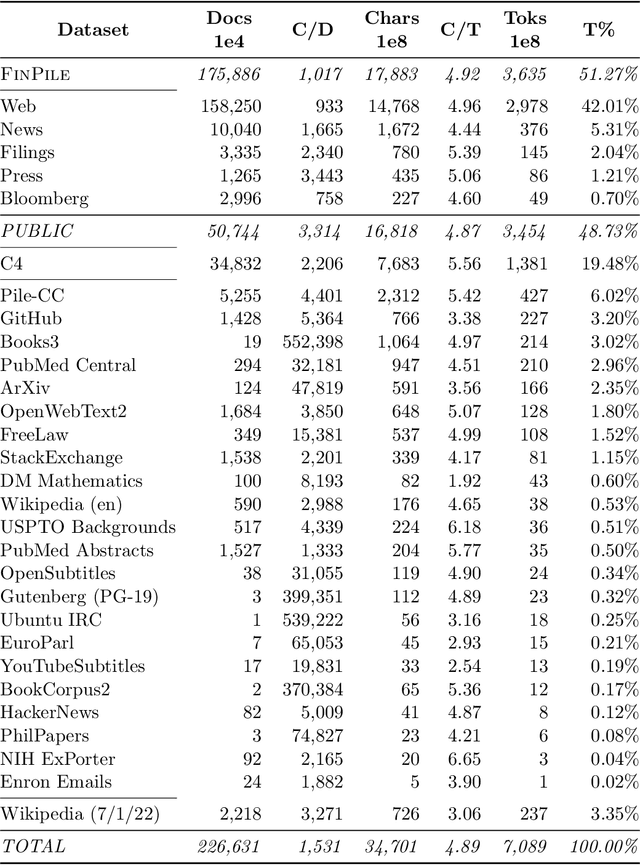

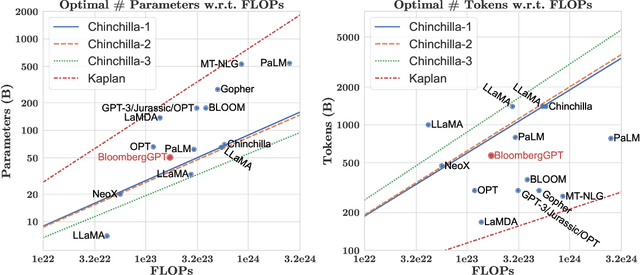

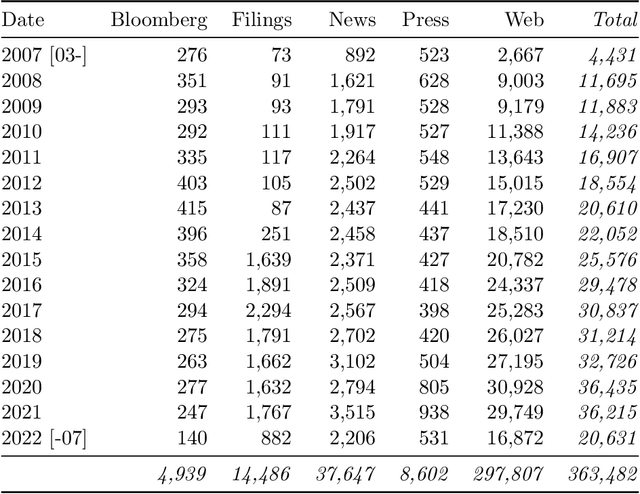

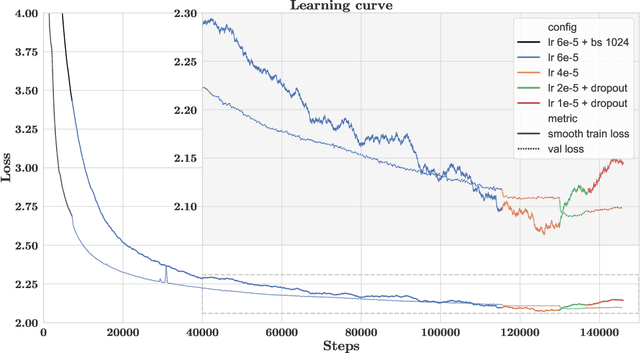

Abstract:The use of NLP in the realm of financial technology is broad and complex, with applications ranging from sentiment analysis and named entity recognition to question answering. Large Language Models (LLMs) have been shown to be effective on a variety of tasks; however, no LLM specialized for the financial domain has been reported in the literature. In this work, we present BloombergGPT, a 50 billion parameter language model that is trained on a wide range of financial data. We construct a 363 billion token dataset based on Bloomberg's extensive data sources, perhaps the largest domain-specific dataset yet, augmented with 345 billion tokens from general purpose datasets. We validate BloombergGPT on standard LLM benchmarks, open financial benchmarks, and a suite of internal benchmarks that most accurately reflect our intended usage. Our mixed dataset training leads to a model that outperforms existing models on financial tasks by significant margins without sacrificing performance on general LLM benchmarks. Additionally, we explain our modeling choices, training process, and evaluation methodology. As a next step, we plan to release training logs (Chronicles) detailing our experience in training BloombergGPT.

Cosmic Microwave Background Recovery: A Graph-Based Bayesian Convolutional Network Approach

Feb 24, 2023

Abstract:The cosmic microwave background (CMB) is a significant source of knowledge about the origin and evolution of our universe. However, observations of the CMB are contaminated by foreground emissions, obscuring the CMB signal and reducing its efficacy in constraining cosmological parameters. We employ deep learning as a data-driven approach to CMB cleaning from multi-frequency full-sky maps. In particular, we develop a graph-based Bayesian convolutional neural network based on the U-Net architecture that predicts cleaned CMB with pixel-wise uncertainty estimates. We demonstrate the potential of this technique on realistic simulated data based on the Planck mission. We show that our model accurately recovers the cleaned CMB sky map and resulting angular power spectrum while identifying regions of uncertainty. Finally, we discuss the current challenges and the path forward for deploying our model for CMB recovery on real observations.

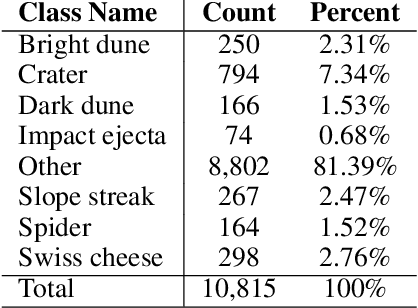

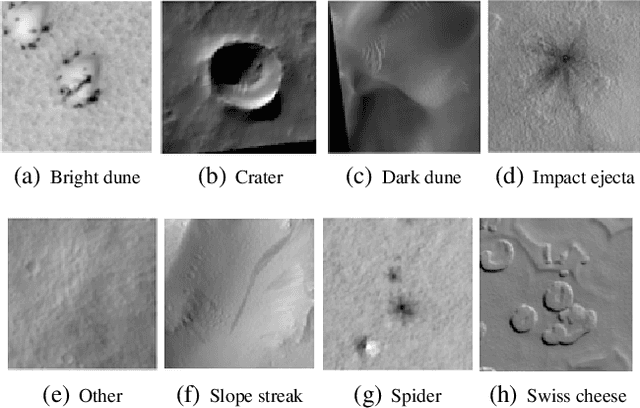

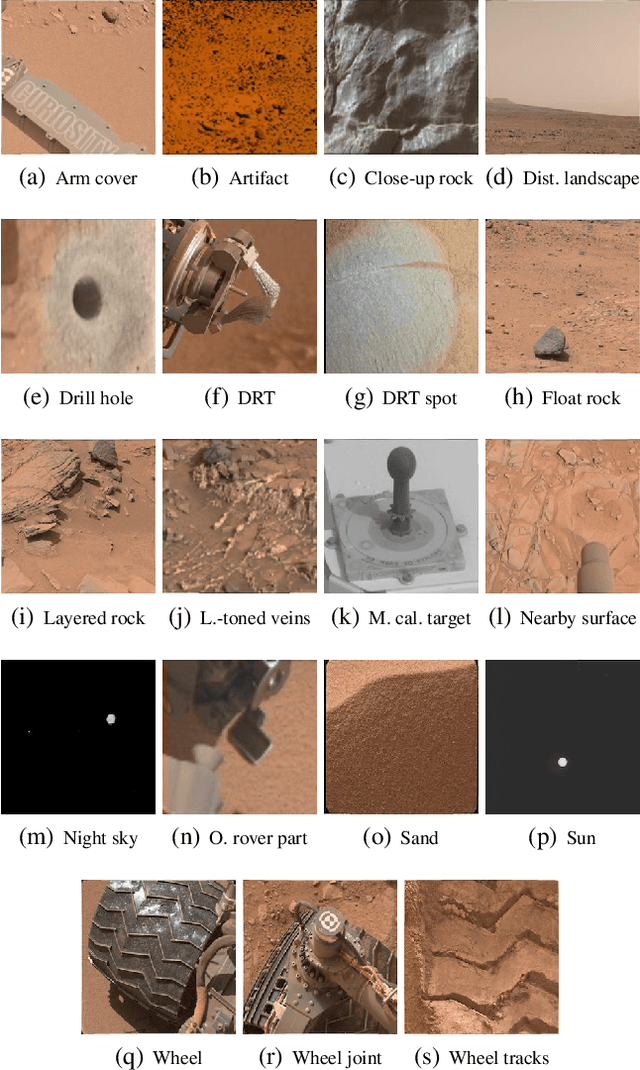

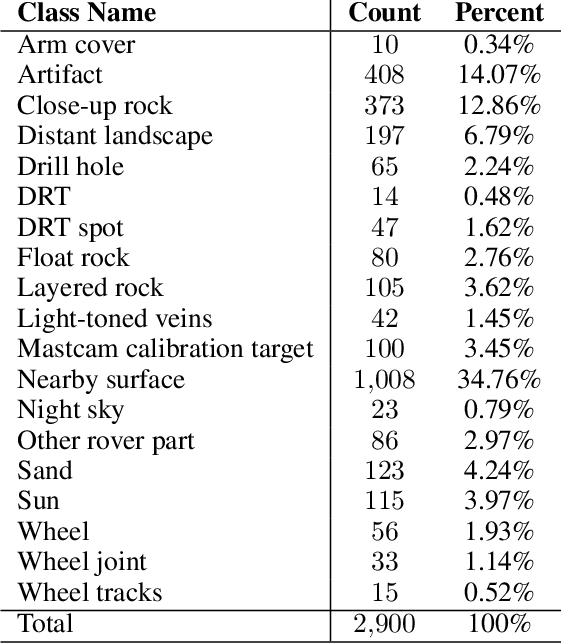

Mars Image Content Classification: Three Years of NASA Deployment and Recent Advances

Feb 09, 2021

Abstract:The NASA Planetary Data System hosts millions of images acquired from the planet Mars. To help users quickly find images of interest, we have developed and deployed content-based classification and search capabilities for Mars orbital and surface images. The deployed systems are publicly accessible using the PDS Image Atlas. We describe the process of training, evaluating, calibrating, and deploying updates to two CNN classifiers for images collected by Mars missions. We also report on three years of deployment including usage statistics, lessons learned, and plans for the future.

Constructing Dynamic Knowledge Graph for Visual Semantic Understanding and Applications in Autonomous Robotics

Sep 16, 2019

Abstract:Interpreting semantic knowledge that describes entities, relations and attributes, explicitly through visuals and implicitly through behind-the-scenes common sense, is gaining attention in autonomous robotics. By incorporating vision and language modeling with common-sense knowledge, we can provide rich features indicating strong semantic meanings for human and robot action relationships, which can be utilized further in autonomous robotic controls. In this paper, we propose a systematic scheme to generate high-conceptual dynamic knowledge graphs representing Entity-Relation-Entity (E-R-E) and Entity-Attribute-Value (E-A-V) knowledge by "watching" a video clip. A combination of a Vision-Language model and a static ontology tree is used to illustrate workspace, configurations, functions and usages for both human and robot. The proposed method is flexible and versatile. It serves as our initial positioning investigation for further research in various applications for autonomous robots.

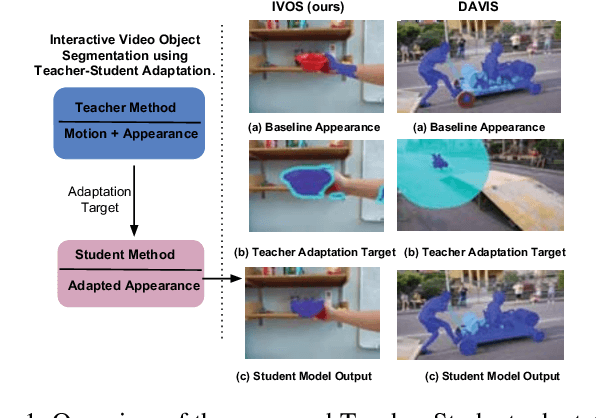

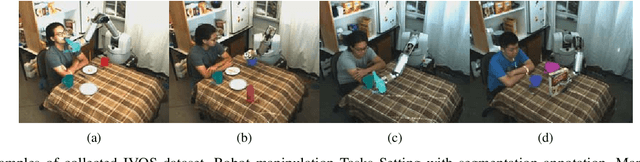

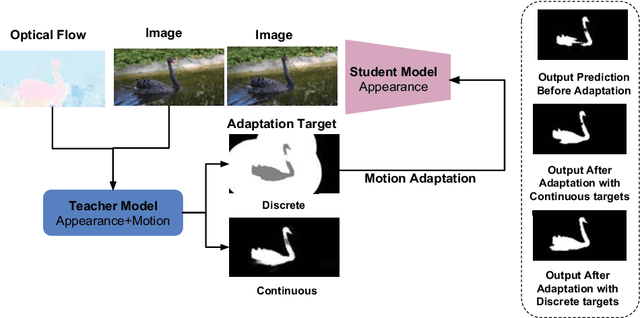

Video Segmentation using Teacher-Student Adaptation in a Human Robot Interaction (HRI) Setting

Oct 17, 2018

Abstract:Video segmentation is a challenging task that has many applications in robotics. Learning segmentation from few examples on-line is important for robotics in unstructured environments. The total number of objects and their variation in the real world is intractable, but for a specific task the robot deals with a small subset. Our network is taught by a human moving a hand-held object through different poses. A novel two-stream motion and appearance "teacher" network provides pseudo-labels. These labels are used to adapt an appearance "student" network. Segmentation can be used to support a variety of robot vision functionality, such as grasping or affordance segmentation. We propose different variants of motion adaptation training and extensively compare against the state-of-the-art methods. We collected a carefully designed dataset in the human robot interaction (HRI) setting. We denote our dataset as (L)ow-shot (O)bject (R)ecognition, (D)etection and (S)egmentation using HRI. Our dataset contains teaching videos of different hand-held objects moving in translation, scale and rotation. It contains kitchen manipulation tasks as well, performed by humans and robots. Our proposed method outperforms the state-of-the-art on DAVIS and FBMS by 7% and 1.2% in F-measure respectively. On our more challenging LORDS-HRI dataset, our approach achieves significantly better performance with 46.7% and 24.2% relative improvement in mIoU over the baseline.
