Freddie Kalaitzis

Leveraging Deep Learning for Physical Model Bias of Global Air Quality Estimates

Aug 06, 2025

Abstract:Air pollution is the world's largest environmental risk factor for human disease and premature death, resulting in more than 6 million premature deaths in 2019. Modeling surface ozone, one of the most important air pollutants, remains a challenge, particularly at scales relevant to human health impacts; the drivers of global ozone trends at these scales are largely unknown, which limits the practical use of physics-based models. We employ a 2D Convolutional Neural Network-based architecture that estimates the surface ozone residuals of the MOMO-Chem model, referred to as model bias. We demonstrate the potential of this technique in North America and Europe, highlighting its ability to better capture physical model residuals compared to a traditional machine learning method. We assess the impact of incorporating land use information from high-resolution satellite imagery to improve model estimates. Importantly, we discuss how our results can improve our scientific understanding of the factors impacting ozone bias at urban scales, which can in turn be used to improve environmental policy.

Uncertainty Quantification for Surface Ozone Emulators using Deep Learning

Aug 06, 2025

Abstract:Air pollution is a global hazard, and as of 2023, 94% of the world's population is exposed to unsafe pollution levels. Surface Ozone (O3), an important pollutant, and the drivers of its trends are difficult to model, and traditional physics-based models fall short in their practical use for scales relevant to human-health impacts. Deep Learning-based emulators have shown promise in capturing complex climate patterns, but overall lack the interpretability necessary to support critical decision making for policy changes and public health measures. We implement an uncertainty-aware U-Net architecture to predict the Multi-mOdel Multi-cOnstituent Chemical data assimilation (MOMO-Chem) model's surface ozone residuals (bias) using Bayesian and quantile regression methods. We demonstrate the capability of our techniques in regional estimation of bias in North America and Europe for June 2019. We highlight the uncertainty quantification (UQ) scores between our two UQ methodologies and discern which ground stations are optimal and sub-optimal candidates for MOMO-Chem bias correction, and evaluate the impact of land-use information in surface ozone residual modeling.
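
For readers unfamiliar with quantile regression as a UQ method, the sketch below shows the standard pinball (quantile) loss and a simple interval-coverage check. It is a generic illustration in NumPy, not the paper's implementation: the function names `pinball_loss` and `interval_coverage` and the choice of quantile levels are assumptions made here for illustration.

```python
import numpy as np


def pinball_loss(y_true, y_pred, q):
    """Mean pinball (quantile) loss for a quantile level q in (0, 1)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    diff = y_true - y_pred
    # Under-prediction (diff > 0) costs q per unit of error,
    # over-prediction (diff < 0) costs (1 - q) per unit of error.
    return float(np.mean(np.maximum(q * diff, (q - 1.0) * diff)))


def interval_coverage(y_true, lower, upper):
    """Fraction of targets that fall inside the interval [lower, upper]."""
    y_true = np.asarray(y_true, dtype=float)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    return float(np.mean((y_true >= lower) & (y_true <= upper)))
```

A model trained with this loss at two levels (for example a low and a high quantile) yields a prediction interval per pixel or station, and `interval_coverage` gives one way to check how often the observed residual falls inside it.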

Dargana: fine-tuning EarthPT for dynamic tree canopy mapping from space

Apr 24, 2025

Abstract:We present Dargana, a fine-tuned variant of the EarthPT time-series foundation model that achieves specialisation using <3% of its pre-training data volume and 5% of its pre-training compute. Dargana is fine-tuned to generate regularly updated classification of tree canopy cover at 10m resolution, distinguishing conifer and broadleaved tree types. Using Cornwall, UK, as a test case, the model achieves a pixel-level ROC-AUC of 0.98 and a PR-AUC of 0.83 on unseen satellite imagery. Dargana can identify fine structures like hedgerows and coppice below the training sample limit, and can track temporal changes to canopy cover such as new woodland establishment. Our results demonstrate how pre-trained Large Observation Models like EarthPT can be specialised for granular, dynamic land cover monitoring from space, providing a valuable, scalable tool for natural capital management and conservation.

Rapid Adaptation of Earth Observation Foundation Models for Segmentation

Sep 16, 2024

Abstract:This study investigates the efficacy of Low-Rank Adaptation (LoRA) in fine-tuning Earth Observation (EO) foundation models for flood segmentation. We hypothesize that LoRA, a parameter-efficient technique, can significantly accelerate the adaptation of large-scale EO models to this critical task while maintaining high performance. We apply LoRA to fine-tune a state-of-the-art EO foundation model pre-trained on diverse satellite imagery, using a curated dataset of flood events. Our results demonstrate that LoRA-based fine-tuning (r-256) improves F1 score by 6.66 points and IoU by 0.11 compared to a frozen encoder baseline, while significantly reducing computational costs. Notably, LoRA outperforms full fine-tuning, which proves computationally infeasible on our hardware. We further assess generalization through out-of-distribution (OOD) testing on a geographically distinct flood event. LoRA configurations show improved OOD performance over the baseline. This work contributes to research on efficient adaptation of foundation models for specialized EO tasks, with implications for rapid response systems in disaster management. Our findings demonstrate LoRA's potential for enabling faster deployment of accurate flood segmentation models in resource-constrained, time-critical scenarios.
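
To make the idea of low-rank adaptation concrete, here is a minimal NumPy sketch of a single linear layer with a frozen weight and a trainable low-rank update. It illustrates the general LoRA formulation only; the class name `LoRALinear`, the initialisation scale, and the `alpha` default are assumptions for this example and do not describe the model or configuration used in the study.

```python
import numpy as np


class LoRALinear:
    """Linear layer y = x W^T with a low-rank update W + (alpha / r) * B A."""

    def __init__(self, weight, rank, alpha=1.0, seed=0):
        rng = np.random.default_rng(seed)
        d_out, d_in = weight.shape
        self.weight = weight                               # frozen pre-trained weight
        self.A = rng.normal(0.0, 0.01, size=(rank, d_in))  # trainable, small random init
        self.B = np.zeros((d_out, rank))                   # trainable, zero init
        self.scale = alpha / rank

    def __call__(self, x):
        # x has shape (batch, d_in); the update is applied without forming B @ A.
        return x @ self.weight.T + self.scale * (x @ self.A.T) @ self.B.T

    def trainable_params(self):
        # Only A and B would receive gradients; the base weight stays fixed.
        return self.A.size + self.B.size
```

Because `B` starts at zero, the adapted layer initially reproduces the frozen layer exactly, and only `rank * (d_in + d_out)` parameters are trained instead of `d_in * d_out`.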

Uncertainty and Generalizability in Foundation Models for Earth Observation

Sep 13, 2024

Abstract:We take the perspective in which we want to design a downstream task (such as estimating vegetation coverage) on a certain area of interest (AOI) with a limited labeling budget. By leveraging an existing Foundation Model (FM) we must decide whether we train a downstream model on a different but label-rich AOI hoping it generalizes to our AOI, or we split labels in our AOI for training and validating. In either case, we face choices concerning what FM to use, how to sample our AOI for labeling, etc. which affect both the performance and uncertainty of the results. In this work, we perform a large ablative study using eight existing FMs on either Sentinel 1 or Sentinel 2 as input data, and the classes from the ESA World Cover product as downstream tasks across eleven AOIs. We do repeated sampling and training, resulting in an ablation of some 500K simple linear regression models. Our results show both the limits of spatial generalizability across AOIs and the power of FMs where we are able to get over 0.9 correlation coefficient between predictions and targets on different chip level predictive tasks. And still, performance and uncertainty vary greatly across AOIs, tasks and FMs. We believe this is a key issue in practice, because there are many design decisions behind each FM and downstream task (input modalities, sampling, architectures, pretraining, etc.) and usually a downstream task designer is aware of and can decide upon a few of them. Through this work, we advocate for the usage of the methodology herein described (large ablations on reference global labels and simple probes), both when publishing new FMs, and to make informed decisions when designing downstream tasks to use them.

M3LEO: A Multi-Modal, Multi-Label Earth Observation Dataset Integrating Interferometric SAR and RGB Data

Jun 06, 2024

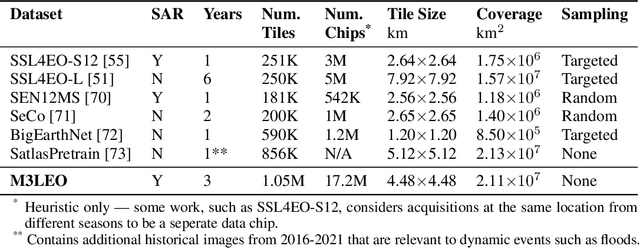

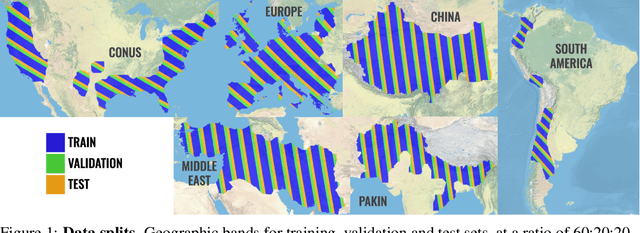

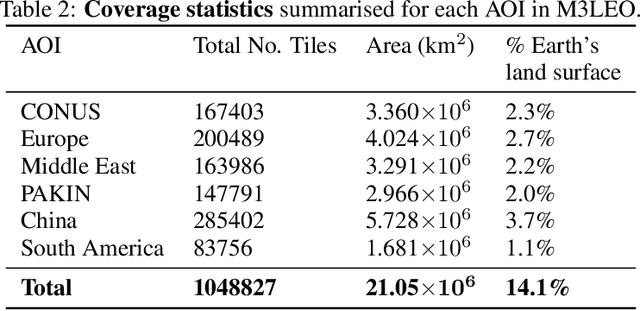

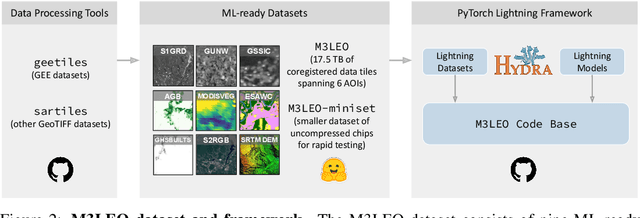

Abstract:Satellite-based remote sensing has revolutionised the way we address global challenges in a rapidly evolving world. Huge quantities of Earth Observation (EO) data are generated by satellite sensors daily, but processing these large datasets for use in ML pipelines is technically and computationally challenging. Specifically, different types of EO data are often hosted on a variety of platforms, with differing availability of Python preprocessing tools. In addition, spatial alignment across data sources and data tiling can present significant technical hurdles for novice users. While some preprocessed EO datasets exist, their content is often limited to optical or near-optical wavelength data, which is ineffective at night or in adverse weather conditions. Synthetic Aperture Radar (SAR), an active sensing technique based on microwave-wavelength radiation, offers a viable alternative. However, the application of machine learning to SAR has been limited due to a lack of ML-ready data and pipelines, particularly for the full diversity of SAR data, including polarimetry, coherence and interferometry. We introduce M3LEO, a multi-modal, multi-label EO dataset that includes polarimetric, interferometric, and coherence SAR data derived from Sentinel-1, alongside Sentinel-2 RGB imagery and a suite of labelled tasks for model evaluation. M3LEO spans 17.5TB and contains approximately 10M data chips across six geographic regions. The dataset is complemented by a flexible PyTorch Lightning framework, with configuration management using Hydra. We provide tools to process any dataset available on popular platforms such as Google Earth Engine for integration with our framework. Initial experiments validate the utility of our data and framework, showing that SAR imagery contains information additional to that extractable from RGB data. Data at huggingface.co/M3LEO, and code at github.com/spaceml-org/M3LEO.

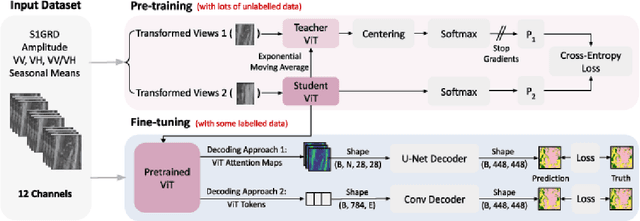

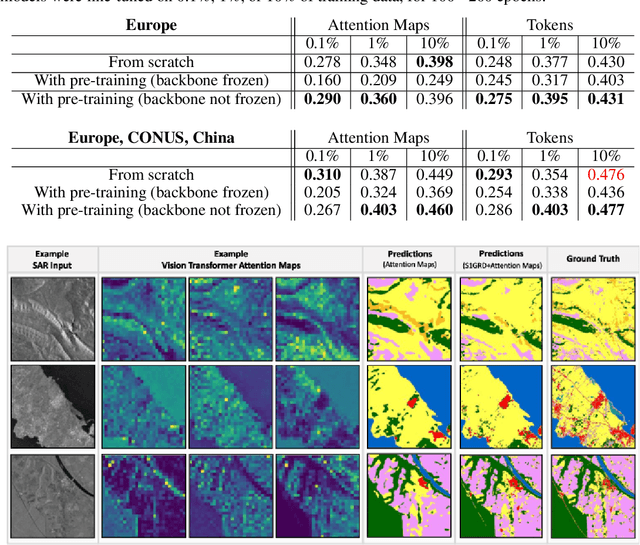

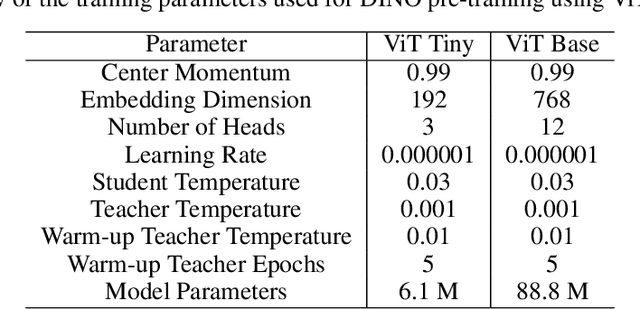

Exploring DINO: Emergent Properties and Limitations for Synthetic Aperture Radar Imagery

Oct 05, 2023

Abstract:Self-supervised learning (SSL) models have recently demonstrated remarkable performance across various tasks, including image segmentation. This study delves into the emergent characteristics of the Self-Distillation with No Labels (DINO) algorithm and its application to Synthetic Aperture Radar (SAR) imagery. We pre-train a vision transformer (ViT)-based DINO model using unlabeled SAR data, and later fine-tune the model to predict high-resolution land cover maps. We rigorously evaluate the utility of attention maps generated by the ViT backbone, and compare them with the model's token embedding space. We observe a small improvement in model performance with pre-training compared to training from scratch, and discuss the limitations and opportunities of SSL for remote sensing and land cover segmentation. Beyond small performance increases, we show that ViT attention maps hold great intrinsic value for remote sensing, and could provide useful inputs to other algorithms. With this, our work lays the groundwork for bigger and better SSL models for Earth Observation.

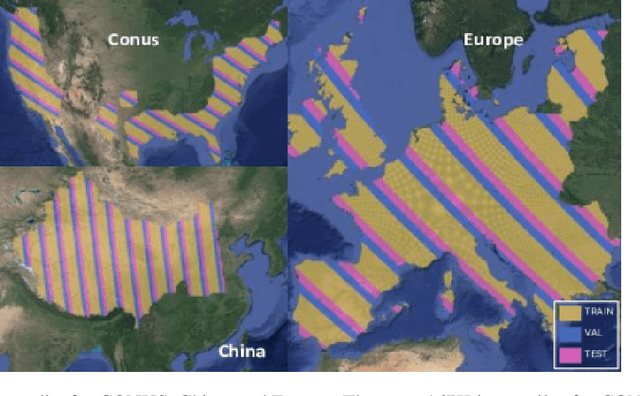

Exploring Generalisability of Self-Distillation with No Labels for SAR-Based Vegetation Prediction

Oct 03, 2023

Abstract:In this work we pre-train a DINO-ViT based model using two Synthetic Aperture Radar datasets (S1GRD or GSSIC) across three regions (China, Conus, Europe). We fine-tune the models on smaller labeled datasets to predict vegetation percentage, and empirically study the connection between the embedding space of the models and their ability to generalize across diverse geographic regions and to unseen data. For S1GRD, embedding spaces of different regions are clearly separated, while GSSIC's overlap. Positional patterns remain during fine-tuning, and greater distances in embeddings often result in higher errors for unfamiliar regions. With this, our work increases our understanding of generalizability for self-supervised models applied to remote sensing.

Large Scale Masked Autoencoding for Reducing Label Requirements on SAR Data

Oct 02, 2023

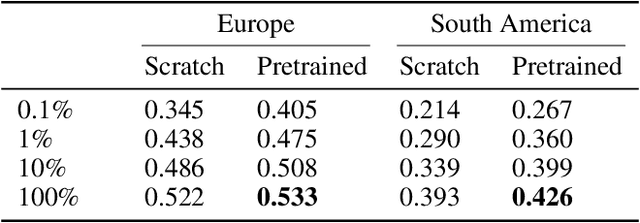

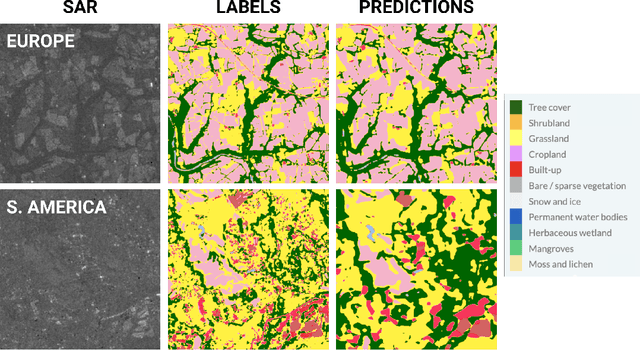

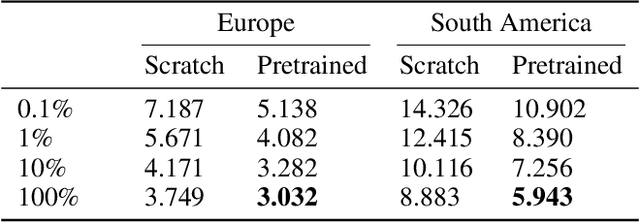

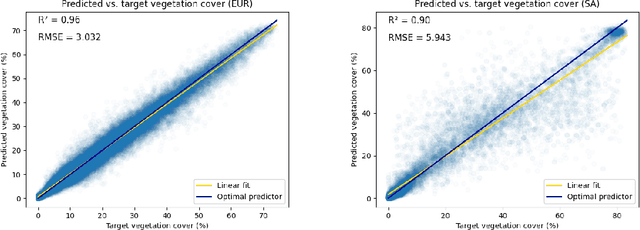

Abstract:Satellite-based remote sensing is instrumental in the monitoring and mitigation of the effects of anthropogenic climate change. Large scale, high resolution data derived from these sensors can be used to inform intervention and policy decision making, but the timeliness and accuracy of these interventions is limited by use of optical data, which cannot operate at night and is affected by adverse weather conditions. Synthetic Aperture Radar (SAR) offers a robust alternative to optical data, but its associated complexities limit the scope of labelled data generation for traditional deep learning. In this work, we apply a self-supervised pretraining scheme, masked autoencoding, to SAR amplitude data covering 8.7% of the Earth's land surface area, and tune the pretrained weights on two downstream tasks crucial to monitoring climate change - vegetation cover prediction and land cover classification. We show that the use of this pretraining scheme reduces labelling requirements for the downstream tasks by more than an order of magnitude, and that this pretraining generalises geographically, with the performance gain increasing when tuned downstream on regions outside the pretraining set. Our findings significantly advance climate change mitigation by facilitating the development of task- and region-specific SAR models, allowing local communities and organizations to deploy tailored solutions for rapid, accurate monitoring of climate change effects.
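
As a rough illustration of the masking step in masked autoencoding, the NumPy sketch below hides a random subset of square patches in a single-channel image; an encoder would then see only the visible patches and a decoder would be trained to reconstruct the hidden ones. The function name `random_patch_mask`, the patch size, and the mask ratio are assumptions chosen for illustration and are not taken from the paper.

```python
import numpy as np


def random_patch_mask(image, patch_size, mask_ratio, seed=0):
    """Zero out a random subset of non-overlapping square patches.

    Returns the masked image and a boolean pixel mask (True = hidden).
    """
    h, w = image.shape
    if h % patch_size or w % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    grid_h, grid_w = h // patch_size, w // patch_size
    n_patches = grid_h * grid_w
    n_masked = int(round(mask_ratio * n_patches))

    rng = np.random.default_rng(seed)
    masked_idx = rng.permutation(n_patches)[:n_masked]
    patch_mask = np.zeros(n_patches, dtype=bool)
    patch_mask[masked_idx] = True
    patch_mask = patch_mask.reshape(grid_h, grid_w)

    # Expand the per-patch mask to pixel resolution.
    pixel_mask = np.repeat(np.repeat(patch_mask, patch_size, axis=0),
                           patch_size, axis=1)
    visible = np.where(pixel_mask, 0.0, image)
    return visible, pixel_mask
```

In a full pipeline the reconstruction loss would typically be computed only on the pixels where `pixel_mask` is True, so the model learns from content it never saw.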

Fewshot learning on global multimodal embeddings for earth observation tasks

Sep 29, 2023

Abstract:In this work we pretrain a CLIP/ViT based model using three different modalities of satellite imagery across five AOIs covering over ~10% of the Earth's total landmass, namely Sentinel 2 RGB optical imagery, Sentinel 1 SAR amplitude and Sentinel 1 SAR interferometric coherence. This model uses ~250M parameters. Then, we use the embeddings produced for each modality with a classical machine learning method to attempt different downstream tasks for earth observation related to vegetation, built-up surface, croplands and permanent water. We consistently show how we reduce the need for labeled data by 99%, so that with ~200-500 randomly selected labeled examples (around 4K-10K km²) we reach performance levels analogous to those achieved with the full labeled datasets (about 150K image chips or 3M km² in each AOI) on all modalities, AOIs and downstream tasks. This leads us to think that the model has captured significant earth features useful in a wide variety of scenarios. To enhance our model's usability in practice, its architecture allows inference in contexts with missing modalities and even missing channels within each modality. Additionally, we visually show that this embedding space, obtained with no labels, is sensitive to the different earth features represented by the labelled datasets we selected.
