Joseph A. Gallego-Mejia

Tomographic SAR Reconstruction for Forest Height Estimation

Dec 03, 2024

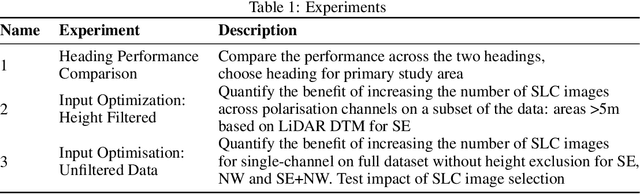

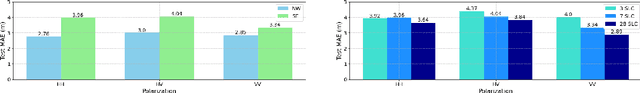

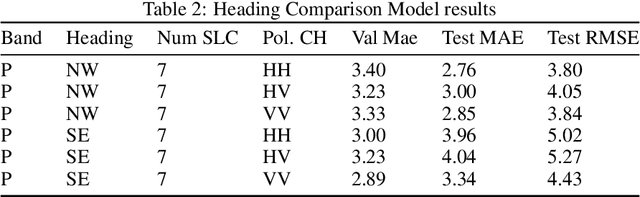

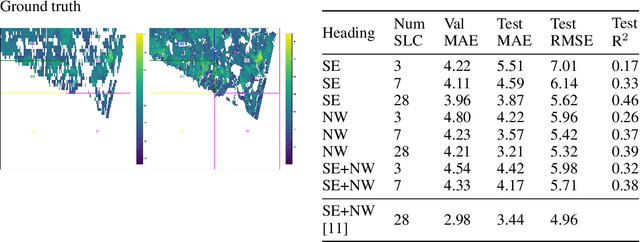

Abstract: Tree height estimation serves as an important proxy for biomass estimation in ecological and forestry applications. While traditional methods such as photogrammetry and Light Detection and Ranging (LiDAR) offer accurate height measurements, their application on a global scale is often cost-prohibitive and logistically challenging. In contrast, remote sensing techniques, particularly 3D tomographic reconstruction from Synthetic Aperture Radar (SAR) imagery, provide a scalable solution for global height estimation. SAR images have been used in Earth observation contexts due to their ability to operate in all weather conditions, unobscured by clouds. In this study, we use deep learning to estimate forest canopy height directly from 2D Single Look Complex (SLC) images, a derivative of SAR. Our method attempts to bypass traditional tomographic signal processing, potentially reducing latency from SAR capture to end product. We also quantify the impact of varying numbers of SLC images on height estimation accuracy, aiming to inform future satellite operations and optimize data collection strategies. Compared to full tomographic processing combined with deep learning, our minimal method (partial processing + deep learning) falls short, with an error 16-21% higher, highlighting the continuing relevance of geometric signal processing.

3D-SAR Tomography and Machine Learning for High-Resolution Tree Height Estimation

Sep 09, 2024

Abstract: Accurately estimating forest biomass is crucial for global carbon cycle modelling and climate change mitigation. Tree height, a key factor in biomass calculations, can be measured using Synthetic Aperture Radar (SAR) technology. This study applies machine learning to extract forest height data from two SAR products: Single Look Complex (SLC) images and tomographic cubes, in preparation for the ESA Biomass Satellite mission. We use the TomoSense dataset, containing SAR and LiDAR data from Germany's Eifel National Park, to develop and evaluate height estimation models. Our approach includes classical methods, deep learning with a 3D U-Net, and Bayesian-optimized techniques. By testing various SAR frequencies and polarimetries, we establish a baseline for future height and biomass modelling. The best-performing models predict forest height with a mean absolute error of 2.82 m for canopies around 30 m tall, advancing our ability to measure global carbon stocks and support climate action.
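For readers unfamiliar with the metric quoted above, the following is a minimal sketch of how a mean absolute error between predicted and reference canopy heights could be computed. The function name and the sample heights are hypothetical and chosen only for illustration; they are not taken from the TomoSense dataset or from the authors' code.

```python
def mean_absolute_error(predicted, reference):
    """Average absolute difference between paired height values, in metres."""
    if len(predicted) != len(reference) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - r) for p, r in zip(predicted, reference)) / len(predicted)


# Hypothetical canopy heights in metres (illustrative values, not real data).
lidar_heights = [30.0, 28.0, 32.0, 25.0]
predicted_heights = [27.0, 29.0, 35.0, 26.0]

mae = mean_absolute_error(predicted_heights, lidar_heights)
print(f"MAE: {mae:.2f} m")
```

Because the metric is an average of absolute differences, it is expressed in the same unit as the heights themselves, which is why the abstract can relate it directly to a canopy height of roughly 30 m.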

Exploring DINO: Emergent Properties and Limitations for Synthetic Aperture Radar Imagery

Oct 05, 2023

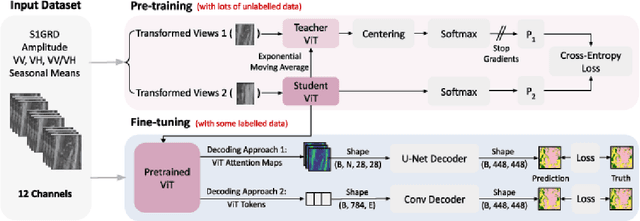

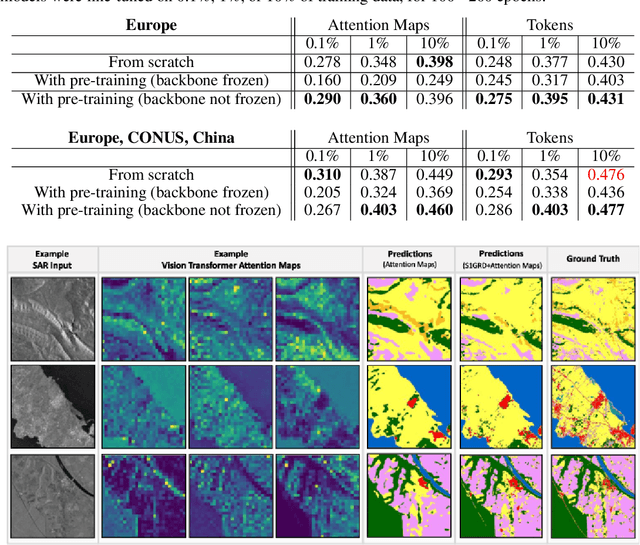

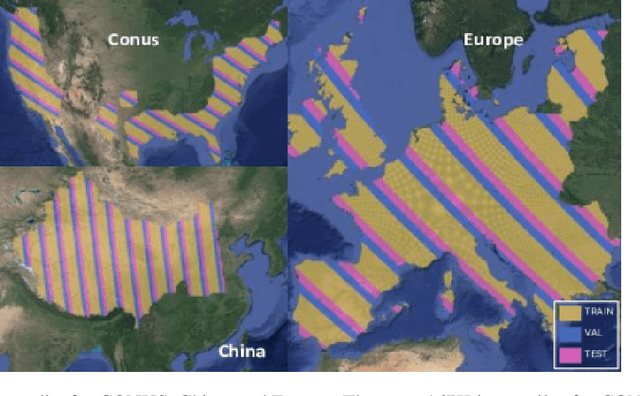

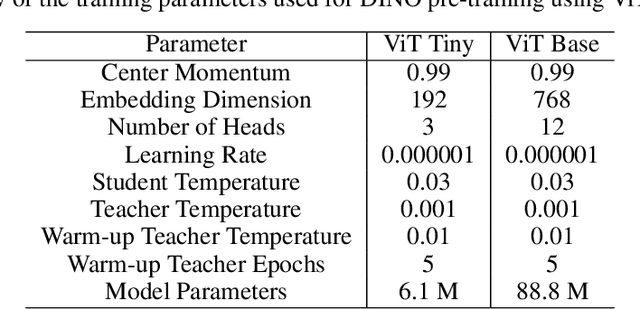

Abstract: Self-supervised learning (SSL) models have recently demonstrated remarkable performance across various tasks, including image segmentation. This study delves into the emergent characteristics of the Self-Distillation with No Labels (DINO) algorithm and its application to Synthetic Aperture Radar (SAR) imagery. We pre-train a vision transformer (ViT)-based DINO model using unlabeled SAR data, and later fine-tune the model to predict high-resolution land cover maps. We rigorously evaluate the utility of attention maps generated by the ViT backbone, and compare them with the model's token embedding space. We observe a small improvement in model performance with pre-training compared to training from scratch, and discuss the limitations and opportunities of SSL for remote sensing and land cover segmentation. Beyond small performance increases, we show that ViT attention maps hold great intrinsic value for remote sensing, and could provide useful inputs to other algorithms. With this, our work lays the groundwork for bigger and better SSL models for Earth Observation.

Exploring Generalisability of Self-Distillation with No Labels for SAR-Based Vegetation Prediction

Oct 03, 2023

Abstract: In this work we pre-train a DINO-ViT based model using two Synthetic Aperture Radar datasets (S1GRD or GSSIC) across three regions (China, CONUS, Europe). We fine-tune the models on smaller labeled datasets to predict vegetation percentage, and empirically study the connection between the embedding space of the models and their ability to generalize across diverse geographic regions and to unseen data. For S1GRD, the embedding spaces of different regions are clearly separated, while those of GSSIC overlap. Positional patterns remain during fine-tuning, and greater distances in embeddings often result in higher errors for unfamiliar regions. With this, our work increases our understanding of generalizability for self-supervised models applied to remote sensing.

Large Scale Masked Autoencoding for Reducing Label Requirements on SAR Data

Oct 02, 2023

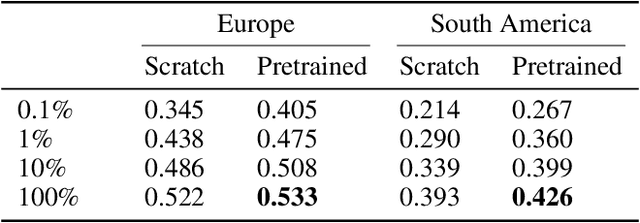

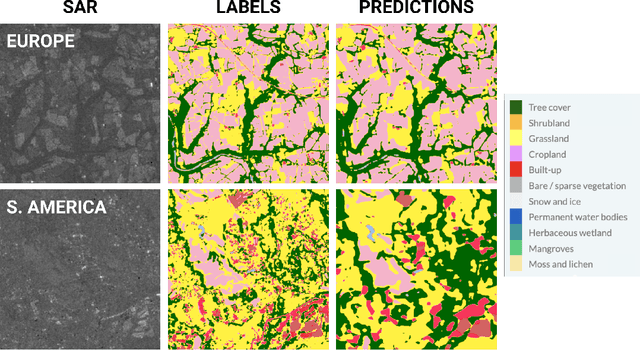

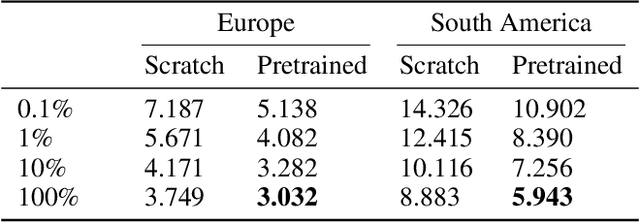

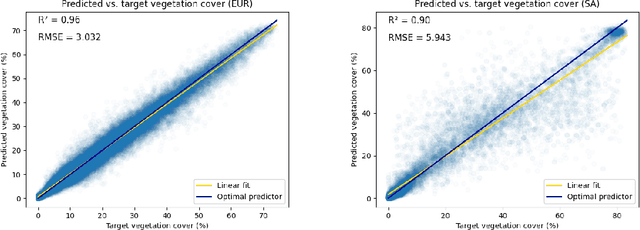

Abstract: Satellite-based remote sensing is instrumental in the monitoring and mitigation of the effects of anthropogenic climate change. Large scale, high resolution data derived from these sensors can be used to inform intervention and policy decision making, but the timeliness and accuracy of these interventions are limited by the use of optical data, which cannot operate at night and is affected by adverse weather conditions. Synthetic Aperture Radar (SAR) offers a robust alternative to optical data, but its associated complexities limit the scope of labelled data generation for traditional deep learning. In this work, we apply a self-supervised pretraining scheme, masked autoencoding, to SAR amplitude data covering 8.7% of the Earth's land surface area, and tune the pretrained weights on two downstream tasks crucial to monitoring climate change - vegetation cover prediction and land cover classification. We show that the use of this pretraining scheme reduces labelling requirements for the downstream tasks by more than an order of magnitude, and that this pretraining generalises geographically, with the performance gain increasing when tuned downstream on regions outside the pretraining set. Our findings significantly advance climate change mitigation by facilitating the development of task and region-specific SAR models, allowing local communities and organizations to deploy tailored solutions for rapid, accurate monitoring of climate change effects.
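As a rough illustration of the masking step that masked autoencoding relies on, the sketch below randomly hides a fraction of image patches so that a model could be trained to reconstruct them. The function name, the 16-patch grid, and the 0.75 mask ratio are assumptions made for this example; the abstract does not state the settings the authors used.

```python
import random


def random_patch_mask(num_patches, mask_ratio, seed=None):
    """Return a list of booleans; True marks a patch hidden from the encoder."""
    if not 0.0 <= mask_ratio <= 1.0:
        raise ValueError("mask_ratio must lie in [0, 1]")
    rng = random.Random(seed)
    num_masked = int(round(num_patches * mask_ratio))
    # Sample distinct patch indices to hide.
    masked = set(rng.sample(range(num_patches), num_masked))
    return [index in masked for index in range(num_patches)]


# Hypothetical setup: a 4x4 grid of patches with 75% of them masked (assumed ratio).
mask = random_patch_mask(num_patches=16, mask_ratio=0.75, seed=0)
visible_indices = [i for i, hidden in enumerate(mask) if not hidden]
print(f"{sum(mask)} patches masked, {len(visible_indices)} visible")
```

In a full pipeline, only the visible patches would be passed to the encoder, and the reconstruction loss would be computed on the hidden ones; those parts are omitted here.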

Fewshot learning on global multimodal embeddings for earth observation tasks

Sep 29, 2023

Abstract: In this work we pretrain a CLIP/ViT based model using three different modalities of satellite imagery across five AOIs covering about 10% of the Earth's total landmass, namely Sentinel 2 RGB optical imagery, Sentinel 1 SAR amplitude and Sentinel 1 SAR interferometric coherence. This model uses about 250M parameters. Then, we use the embeddings produced for each modality with a classical machine learning method to attempt different downstream tasks for Earth observation related to vegetation, built-up surface, croplands and permanent water. We consistently show how we reduce the need for labeled data by 99%, so that with about 200-500 randomly selected labeled examples (around 4K-10K km$^2$) we reach performance levels analogous to those achieved with the full labeled datasets (about 150K image chips or 3M km$^2$ in each AOI) on all modalities, AOIs and downstream tasks. This leads us to think that the model has captured significant Earth features useful in a wide variety of scenarios. To enhance our model's usability in practice, its architecture allows inference in contexts with missing modalities and even missing channels within each modality. Additionally, we visually show that this embedding space, obtained with no labels, is sensitive to the different Earth features represented by the labelled datasets we selected.
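The idea of fitting a classical method on a handful of labeled embeddings can be sketched as follows. This example uses a nearest-centroid classifier purely as a stand-in, since the abstract does not name the classical method the authors chose; the two-dimensional embeddings, the labels, and the function names are all hypothetical.

```python
import math


def fit_centroids(embeddings, labels):
    """Compute the mean embedding vector for each label."""
    sums, counts = {}, {}
    for vector, label in zip(embeddings, labels):
        accumulator = sums.setdefault(label, [0.0] * len(vector))
        for i, value in enumerate(vector):
            accumulator[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {
        label: [value / counts[label] for value in accumulator]
        for label, accumulator in sums.items()
    }


def predict(centroids, vector):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], vector))


# Hypothetical few-shot set: four labeled embeddings from a frozen encoder.
few_shot_embeddings = [[0.0, 0.1], [0.2, 0.0], [1.0, 0.9], [0.8, 1.0]]
few_shot_labels = ["water", "water", "cropland", "cropland"]

centroids = fit_centroids(few_shot_embeddings, few_shot_labels)
print(predict(centroids, [0.1, 0.2]))
print(predict(centroids, [0.7, 0.8]))
```

The encoder itself is never updated in this setup; only the small classifier on top of its embeddings is fitted, which is what makes a few hundred labeled examples plausible as a training set.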

Quantum Kernel Mixtures for Probabilistic Deep Learning

May 26, 2023

Abstract: This paper presents a novel approach to probabilistic deep learning (PDL), quantum kernel mixtures, derived from the mathematical formalism of quantum density matrices, which provides a simpler yet effective mechanism for representing joint probability distributions of both continuous and discrete random variables. The framework allows for the construction of differentiable models for density estimation, inference, and sampling, enabling integration into end-to-end deep neural models. In doing so, we provide a versatile representation of marginal and joint probability distributions that allows us to develop a differentiable, compositional, and reversible inference procedure that covers a wide range of machine learning tasks, including density estimation, discriminative learning, and generative modeling. We illustrate the broad applicability of the framework with two examples: an image classification model, which can be naturally transformed into a conditional generative model thanks to the reversibility of our inference procedure; and a model for learning with label proportions, which is a weakly supervised classification task, demonstrating the framework's ability to deal with uncertainty in the training samples.