Matthew J Allen

M3LEO: A Multi-Modal, Multi-Label Earth Observation Dataset Integrating Interferometric SAR and RGB Data

Jun 06, 2024

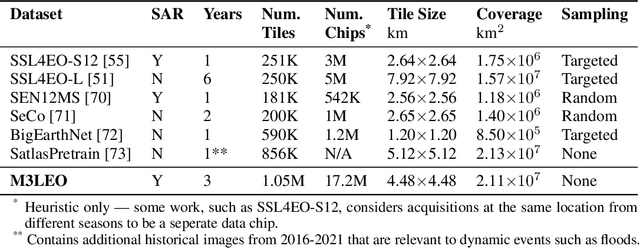

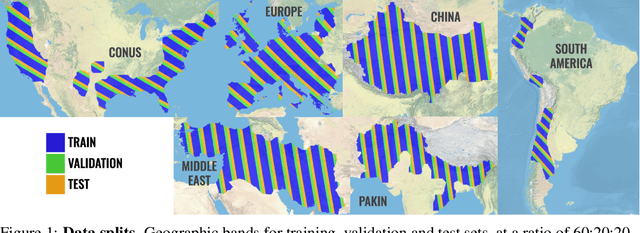

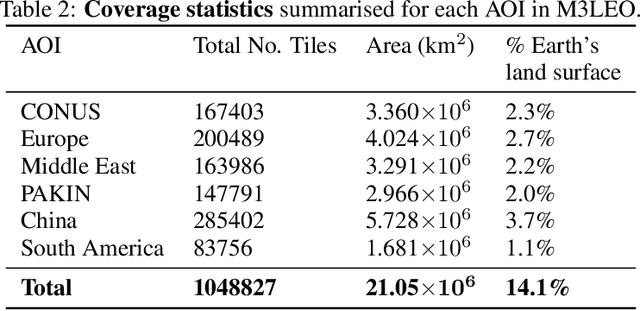

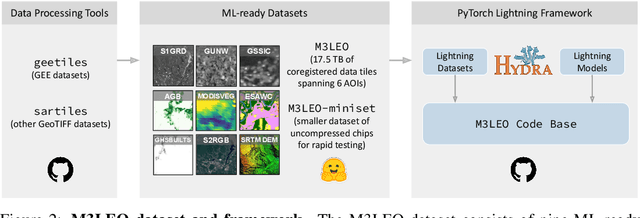

Abstract:Satellite-based remote sensing has revolutionised the way we address global challenges in a rapidly evolving world. Huge quantities of Earth Observation (EO) data are generated by satellite sensors daily, but processing these large datasets for use in ML pipelines is technically and computationally challenging. Specifically, different types of EO data are often hosted on a variety of platforms, with differing availability for Python preprocessing tools. In addition, spatial alignment across data sources and data tiling can present significant technical hurdles for novice users. While some preprocessed EO datasets exist, their content is often limited to optical or near-optical wavelength data, which is ineffective at night or in adverse weather conditions. Synthetic Aperture Radar (SAR), an active sensing technique based on microwave length radiation, offers a viable alternative. However, the application of machine learning to SAR has been limited due to a lack of ML-ready data and pipelines, particularly for the full diversity of SAR data, including polarimetry, coherence and interferometry. We introduce M3LEO, a multi-modal, multi-label EO dataset that includes polarimetric, interferometric, and coherence SAR data derived from Sentinel-1, alongside Sentinel-2 RGB imagery and a suite of labelled tasks for model evaluation. M3LEO spans 17.5TB and contains approximately 10M data chips across six geographic regions. The dataset is complemented by a flexible PyTorch Lightning framework, with configuration management using Hydra. We provide tools to process any dataset available on popular platforms such as Google Earth Engine for integration with our framework. Initial experiments validate the utility of our data and framework, showing that SAR imagery contains information additional to that extractable from RGB data. Data at huggingface.co/M3LEO, and code at github.com/spaceml-org/M3LEO.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge