Brandon Zhao

Duke University

Revealing the 3D Cosmic Web through Gravitationally Constrained Neural Fields

Apr 21, 2025

Abstract: Weak gravitational lensing is the slight distortion of galaxy shapes caused primarily by the gravitational effects of dark matter in the universe. In our work, we seek to invert the weak lensing signal from 2D telescope images to reconstruct a 3D map of the universe's dark matter field. While inversion typically yields a 2D projection of the dark matter field, accurate 3D maps of the dark matter distribution are essential for localizing structures of interest and testing theories of our universe. However, 3D inversion poses significant challenges. First, unlike standard 3D reconstruction that relies on multiple viewpoints, in this case, images are only observed from a single viewpoint. This challenge can be partially addressed by observing how galaxy emitters throughout the volume are lensed. However, this leads to the second challenge: the shapes and exact locations of unlensed galaxies are unknown, and can only be estimated with a very large degree of uncertainty. This introduces an overwhelming amount of noise which nearly drowns out the lensing signal completely. Previous approaches tackle this by imposing strong assumptions about the structures in the volume. We instead propose a methodology using a gravitationally constrained neural field to flexibly model the continuous matter distribution. We take an analysis-by-synthesis approach, optimizing the weights of the neural network through a fully differentiable physical forward model to reproduce the lensing signal present in image measurements. We showcase our method on simulations, including realistic simulated measurements of dark matter distributions that mimic data from upcoming telescope surveys. Our results show that our method can not only outperform previous methods, but importantly is also able to recover potentially surprising dark matter structures.

This Looks Like Those: Illuminating Prototypical Concepts Using Multiple Visualizations

Oct 28, 2023

Abstract: We present ProtoConcepts, a method for interpretable image classification combining deep learning and case-based reasoning using prototypical parts. Existing work in prototype-based image classification uses a "this looks like that" reasoning process, which dissects a test image by finding prototypical parts and combining evidence from these prototypes to make a final classification. However, all of the existing prototypical part-based image classifiers provide only one-to-one comparisons, where a single training image patch serves as a prototype to compare with a part of our test image. With these single-image comparisons, it can often be difficult to identify the underlying concept being compared (e.g., "is it comparing the color or the shape?"). Our proposed method modifies the architecture of prototype-based networks to instead learn prototypical concepts which are visualized using multiple image patches. Having multiple visualizations of the same prototype allows us to more easily identify the concept captured by that prototype (e.g., "the test image and the related training patches are all the same shade of blue"), and allows our model to create richer, more interpretable visual explanations. Our experiments show that our "this looks like those" reasoning process can be applied as a modification to a wide range of existing prototypical image classification networks while achieving comparable accuracy on benchmark datasets.

Single View Refractive Index Tomography with Neural Fields

Sep 08, 2023

Abstract: Refractive Index Tomography is an inverse problem in which we seek to reconstruct a scene's 3D refractive field from 2D projected image measurements. The refractive field is not visible itself, but instead affects how the path of a light ray is continuously curved as it travels through space. Refractive fields appear across a wide variety of scientific applications, from translucent cell samples in microscopy to fields of dark matter bending light from faraway galaxies. This problem poses a unique challenge because the refractive field directly affects the path that light takes, making its recovery a non-linear problem. In addition, in contrast with traditional tomography, we seek to recover the refractive field using a projected image from only a single viewpoint by leveraging knowledge of light sources scattered throughout the medium. In this work, we introduce a method that uses a coordinate-based neural network to model the underlying continuous refractive field in a scene. We then use explicit modeling of rays' 3D spatial curvature to optimize the parameters of this network, reconstructing refractive fields with an analysis-by-synthesis approach. The efficacy of our approach is demonstrated by recovering refractive fields in simulation, and analyzing how recovery is affected by the light source distribution. We then test our method on a simulated dark matter mapping problem, where we recover the refractive field underlying a realistic simulated dark matter distribution.

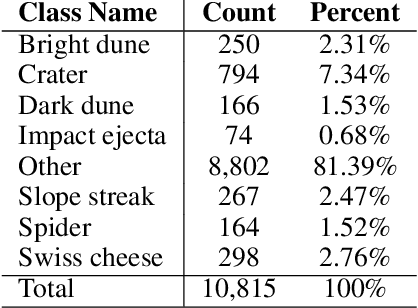

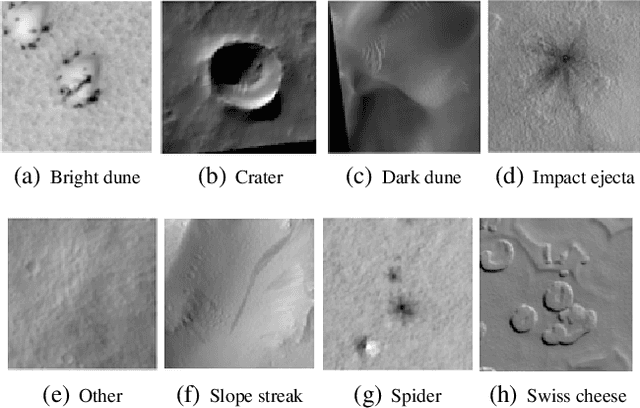

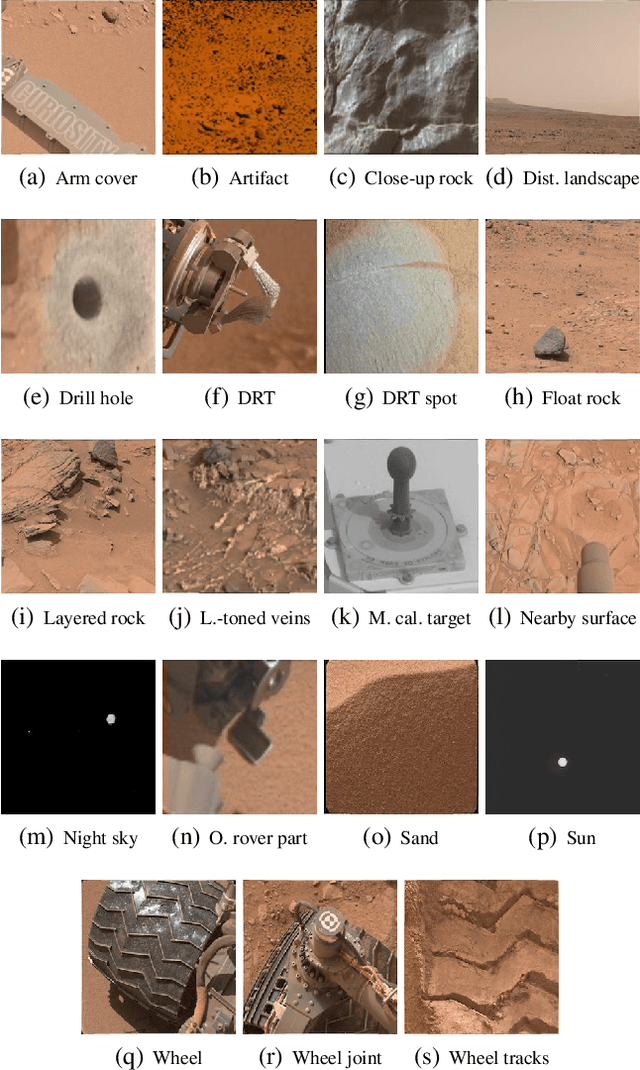

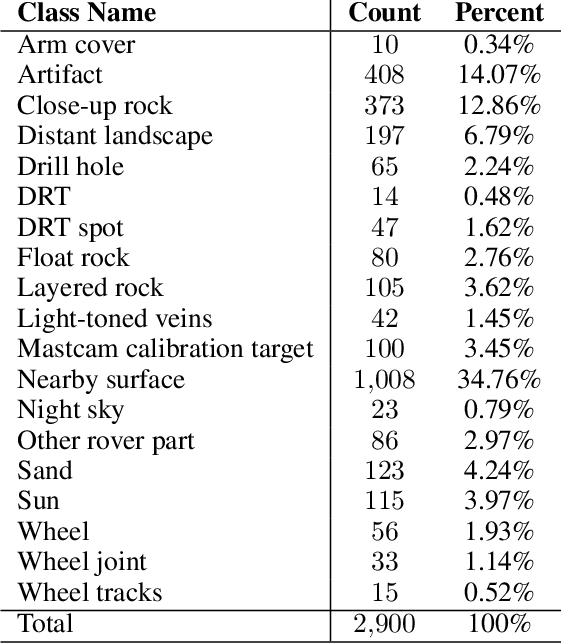

Mars Image Content Classification: Three Years of NASA Deployment and Recent Advances

Feb 09, 2021

Abstract: The NASA Planetary Data System hosts millions of images acquired from the planet Mars. To help users quickly find images of interest, we have developed and deployed content-based classification and search capabilities for Mars orbital and surface images. The deployed systems are publicly accessible using the PDS Image Atlas. We describe the process of training, evaluating, calibrating, and deploying updates to two CNN classifiers for images collected by Mars missions. We also report on three years of deployment including usage statistics, lessons learned, and plans for the future.
