Aviad Levis

Revealing Fine Structure in Protoplanetary Disks with Physics Constrained Neural Fields

Sep 03, 2025

Abstract:Protoplanetary disks are the birthplaces of planets, and resolving their three-dimensional structure is key to understanding disk evolution. The unprecedented resolution of ALMA demands modeling approaches that capture features beyond the reach of traditional methods. We introduce a computational framework that integrates physics-constrained neural fields with differentiable rendering and present RadJAX, a GPU-accelerated, fully differentiable line radiative transfer solver achieving up to 10,000x speedups over conventional ray tracers, enabling previously intractable, high-dimensional neural reconstructions. Applied to ALMA CO observations of HD 163296, this framework recovers the vertical morphology of the CO-rich layer, revealing a pronounced narrowing and flattening of the emission surface beyond 400 au - a feature missed by existing approaches. Our work establishes a new paradigm for extracting complex disk structure and advancing our understanding of protoplanetary evolution.

Revealing the 3D Cosmic Web through Gravitationally Constrained Neural Fields

Apr 21, 2025

Abstract:Weak gravitational lensing is the slight distortion of galaxy shapes caused primarily by the gravitational effects of dark matter in the universe. In our work, we seek to invert the weak lensing signal from 2D telescope images to reconstruct a 3D map of the universe's dark matter field. While inversion typically yields a 2D projection of the dark matter field, accurate 3D maps of the dark matter distribution are essential for localizing structures of interest and testing theories of our universe. However, 3D inversion poses significant challenges. First, unlike standard 3D reconstruction that relies on multiple viewpoints, in this case, images are only observed from a single viewpoint. This challenge can be partially addressed by observing how galaxy emitters throughout the volume are lensed. However, this leads to the second challenge: the shapes and exact locations of unlensed galaxies are unknown, and can only be estimated with a very large degree of uncertainty. This introduces an overwhelming amount of noise which nearly drowns out the lensing signal completely. Previous approaches tackle this by imposing strong assumptions about the structures in the volume. We instead propose a methodology using a gravitationally-constrained neural field to flexibly model the continuous matter distribution. We take an analysis-by-synthesis approach, optimizing the weights of the neural network through a fully differentiable physical forward model to reproduce the lensing signal present in image measurements. We showcase our method on simulations, including realistic simulated measurements of dark matter distributions that mimic data from upcoming telescope surveys. Our results show that our method can not only outperform previous methods, but importantly is also able to recover potentially surprising dark matter structures.

Exoplanet Detection via Differentiable Rendering

Jan 03, 2025

Abstract:Direct imaging of exoplanets is crucial for advancing our understanding of planetary systems beyond our solar system, but it faces significant challenges due to the high contrast between host stars and their planets. Wavefront aberrations introduce speckles in the telescope science images, which are patterns of diffracted starlight that can mimic the appearance of planets, complicating the detection of faint exoplanet signals. Traditional post-processing methods, operating primarily in the image intensity domain, do not integrate wavefront sensing data. These data, measured mainly for adaptive optics corrections, have been overlooked as a potential resource for post-processing, partly due to the challenge of the evolving nature of wavefront aberrations. In this paper, we present a differentiable rendering approach that leverages these wavefront sensing data to improve exoplanet detection. Our differentiable renderer models wave-based light propagation through a coronagraphic telescope system, allowing gradient-based optimization to significantly improve starlight subtraction and increase sensitivity to faint exoplanets. Simulation experiments based on the James Webb Space Telescope configuration demonstrate the effectiveness of our approach, achieving substantial improvements in contrast and planet detection limits. Our results showcase how the computational advancements enabled by differentiable rendering can revitalize previously underexploited wavefront data, opening new avenues for enhancing exoplanet imaging and characterization.

Learned 3D volumetric recovery of clouds and its uncertainty for climate analysis

Mar 09, 2024

Abstract:Significant uncertainty in climate prediction and cloud physics is tied to observational gaps relating to shallow scattered clouds. Addressing these challenges requires remote sensing of their three-dimensional (3D) heterogeneous volumetric scattering content. This calls for passive scattering computed tomography (CT). We design a learning-based model (ProbCT) to achieve CT of such clouds, based on noisy multi-view spaceborne images. ProbCT infers - for the first time - the posterior probability distribution of the heterogeneous extinction coefficient, per 3D location. This yields arbitrary valuable statistics, e.g., the 3D field of the most probable extinction and its uncertainty. ProbCT uses a neural-field representation, making essentially real-time inference. ProbCT undergoes supervised training by a new labeled multi-class database of physics-based volumetric fields of clouds and their corresponding images. To improve out-of-distribution inference, we incorporate self-supervised learning through differential rendering. We demonstrate the approach in simulations and on real-world data, and indicate the relevance of 3D recovery and uncertainty to precipitation and renewable energy.
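To illustrate how a per-location posterior yields statistics such as the most probable extinction and its uncertainty, here is a minimal sketch. It assumes the posterior for one voxel is available as probabilities over discrete extinction bins; the function name `posterior_summary`, the bin values, and the probabilities are hypothetical and are not taken from ProbCT.

```python
import math

def posterior_summary(bin_values, probs):
    """Return (most probable value, mean, standard deviation) of a discrete posterior."""
    total = sum(probs)
    p = [q / total for q in probs]  # normalize defensively
    # Most probable bin (maximum a posteriori estimate).
    map_index = max(range(len(p)), key=p.__getitem__)
    map_value = bin_values[map_index]
    # Posterior mean and standard deviation as an uncertainty measure.
    mean = sum(v * q for v, q in zip(bin_values, p))
    var = sum(q * (v - mean) ** 2 for v, q in zip(bin_values, p))
    return map_value, mean, math.sqrt(var)

# Hypothetical extinction bins (1/km) and posterior mass for a single voxel.
bins = [0.0, 10.0, 20.0, 30.0]
probs = [0.1, 0.6, 0.2, 0.1]
map_ext, mean_ext, std_ext = posterior_summary(bins, probs)
print(map_ext, mean_ext, std_ext)
```

Applying the same summary to every voxel would give a 3D field of most probable extinction alongside a 3D uncertainty field.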

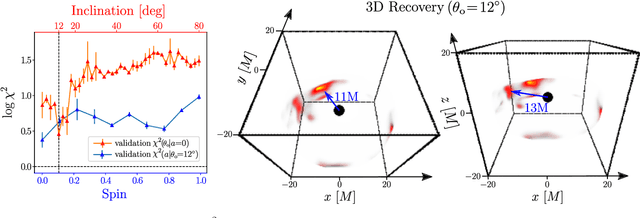

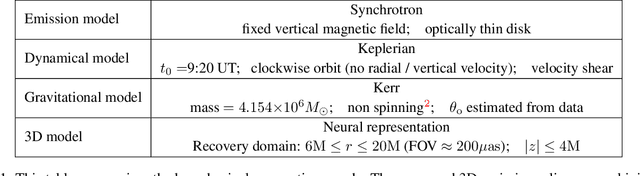

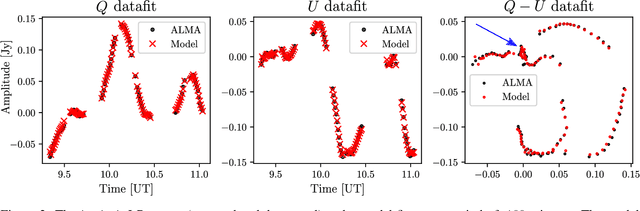

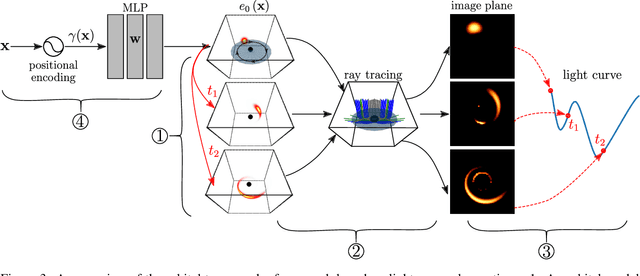

Orbital Polarimetric Tomography of a Flare Near the Sagittarius A* Supermassive Black Hole

Oct 11, 2023

Abstract:The interaction between the supermassive black hole at the center of the Milky Way, Sagittarius A$^*$, and its accretion disk, occasionally produces high energy flares seen in X-ray, infrared and radio. One mechanism for observed flares is the formation of compact bright regions that appear within the accretion disk and close to the event horizon. Understanding these flares can provide a window into black hole accretion processes. Although sophisticated simulations predict the formation of these flares, their structure has yet to be recovered by observations. Here we show the first three-dimensional (3D) reconstruction of an emission flare in orbit recovered from ALMA light curves observed on April 11, 2017. Our recovery results show compact bright regions at a distance of roughly 6 times the event horizon. Moreover, our recovery suggests a clockwise rotation in a low-inclination orbital plane, a result consistent with prior studies by EHT and GRAVITY collaborations. To recover this emission structure we solve a highly ill-posed tomography problem by integrating a neural 3D representation (an emergent artificial intelligence approach for 3D reconstruction) with a gravitational model for black holes. Although the recovered 3D structure is subject, and sometimes sensitive, to the model assumptions, under physically motivated choices we find that our results are stable and our approach is successful on simulated data. We anticipate that in the future, this approach could be used to analyze a richer collection of time-series data that could shed light on the mechanisms governing black hole and plasma dynamics.

Single View Refractive Index Tomography with Neural Fields

Sep 08, 2023

Abstract:Refractive Index Tomography is an inverse problem in which we seek to reconstruct a scene's 3D refractive field from 2D projected image measurements. The refractive field is not visible itself, but instead affects how the path of a light ray is continuously curved as it travels through space. Refractive fields appear across a wide variety of scientific applications, from translucent cell samples in microscopy to fields of dark matter bending light from faraway galaxies. This problem poses a unique challenge because the refractive field directly affects the path that light takes, making its recovery a non-linear problem. In addition, in contrast with traditional tomography, we seek to recover the refractive field using a projected image from only a single viewpoint by leveraging knowledge of light sources scattered throughout the medium. In this work, we introduce a method that uses a coordinate-based neural network to model the underlying continuous refractive field in a scene. We then use explicit modeling of rays' 3D spatial curvature to optimize the parameters of this network, reconstructing refractive fields with an analysis-by-synthesis approach. The efficacy of our approach is demonstrated by recovering refractive fields in simulation, and analyzing how recovery is affected by the light source distribution. We then test our method on a simulated dark matter mapping problem, where we recover the refractive field underlying a realistic simulated dark matter distribution.
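The continuous curving of a ray in a refractive field can be sketched with the standard ray equation of geometric optics, d/ds(n dr/ds) = ∇n. The example below is a simplified 2D illustration that assumes forward-Euler integration and a finite-difference gradient; `trace_ray` and the toy fields are hypothetical and do not reproduce the paper's implementation.

```python
def trace_ray(n_field, pos, direction, step=0.01, num_steps=100, eps=1e-5):
    """Trace a 2D ray through a refractive field n_field(x, y) with Euler steps."""
    x, y = pos
    norm = (direction[0] ** 2 + direction[1] ** 2) ** 0.5
    n0 = n_field(x, y)
    # Optical momentum v = n * dr/ds, initialized from the unit direction.
    vx, vy = n0 * direction[0] / norm, n0 * direction[1] / norm
    path = [(x, y)]
    for _ in range(num_steps):
        n = n_field(x, y)
        # Central finite differences approximate the gradient of n.
        gx = (n_field(x + eps, y) - n_field(x - eps, y)) / (2 * eps)
        gy = (n_field(x, y + eps) - n_field(x, y - eps)) / (2 * eps)
        # dr/ds = v / n and dv/ds = grad n.
        x += step * vx / n
        y += step * vy / n
        vx += step * gx
        vy += step * gy
        path.append((x, y))
    return path

# A uniform field leaves the ray straight; a field increasing with y bends it upward.
straight = trace_ray(lambda x, y: 1.0, (0.0, 0.0), (1.0, 0.0))
bent = trace_ray(lambda x, y: 1.0 + 0.1 * y, (0.0, 0.0), (1.0, 0.0))
print(straight[-1], bent[-1])
```

In an analysis-by-synthesis setting, `n_field` would be the output of the coordinate-based network, and the traced ray endpoints would be compared against the measurements.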

Gravitationally Lensed Black Hole Emission Tomography

Apr 07, 2022

Abstract:Measurements from the Event Horizon Telescope enabled the visualization of light emission around a black hole for the first time. So far, these measurements have been used to recover a 2D image under the assumption that the emission field is static over the period of acquisition. In this work, we propose BH-NeRF, a novel tomography approach that leverages gravitational lensing to recover the continuous 3D emission field near a black hole. Compared to other 3D reconstruction or tomography settings, this task poses two significant challenges: first, rays near black holes follow curved paths dictated by general relativity, and second, we only observe measurements from a single viewpoint. Our method captures the unknown emission field using a continuous volumetric function parameterized by a coordinate-based neural network, and uses knowledge of Keplerian orbital dynamics to establish correspondence between 3D points over time. Together, these enable BH-NeRF to recover accurate 3D emission fields, even in challenging situations with sparse measurements and uncertain orbital dynamics. This work takes the first steps in showing how future measurements from the Event Horizon Telescope could be used to recover evolving 3D emission around the supermassive black hole in our Galactic center.
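The idea of using Keplerian dynamics to establish correspondence between 3D points over time can be sketched as follows. This is a simplified illustration that assumes Newtonian circular orbits in the equatorial plane, units with GM = 1, and counter-clockwise rotation; the function names are hypothetical and are not taken from BH-NeRF.

```python
import math

def keplerian_angular_velocity(r, gm=1.0):
    """Angular velocity of a circular Keplerian orbit, proportional to r**-1.5 (r > 0)."""
    return math.sqrt(gm / r ** 3)

def rotate_back(x, y, t, gm=1.0):
    """Map a point observed at time t to its location at t = 0."""
    r = math.hypot(x, y)
    theta = keplerian_angular_velocity(r, gm) * t
    c, s = math.cos(theta), math.sin(theta)
    # Rotate by -theta: inner radii unwind faster than outer radii (shearing).
    return (c * x + s * y, -s * x + c * y)

def emission_at(e0, x, y, t):
    """Evaluate a time-evolving emission field from its initial state e0(x, y)."""
    x0, y0 = rotate_back(x, y, t)
    return e0(x0, y0)

# Hypothetical initial emission: a compact Gaussian blob centered at (1, 0).
blob = lambda x, y: math.exp(-((x - 1.0) ** 2 + y ** 2) / 0.01)
# After a quarter of the orbital period at r = 1, the blob sits near (0, 1).
print(emission_at(blob, 0.0, 1.0, math.pi / 2))
```

Because every time step maps back to the same initial field, a single static 3D representation can explain measurements taken at many times.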

Multi-view polarimetric scattering cloud tomography and retrieval of droplet size

May 22, 2020

Abstract:Tomography aims to recover a three-dimensional (3D) density map of a medium or an object. In medical imaging, it is extensively used for diagnostics via X-ray computed tomography (CT). Optical diffusion tomography is an alternative to X-ray CT that uses multiply scattered light to deliver coarse density maps for soft tissues. We define and derive tomography of cloud droplet distributions via passive remote sensing. We use multi-view polarimetric images to fit a 3D polarized radiative transfer (RT) forward model. Our motivation is 3D volumetric probing of vertically-developed convectively-driven clouds that are ill-served by current methods in operational passive remote sensing. These techniques are based on strictly 1D RT modeling and applied to a single cloudy pixel, where cloud geometry is assumed to be that of a plane-parallel slab. Incident unpolarized sunlight, once scattered by cloud-droplets, changes its polarization state according to droplet size. Therefore, polarimetric measurements in the rainbow and glory angular regions can be used to infer the droplet size distribution. This work defines and derives a framework for a full 3D tomography of cloud droplets for both their mass concentration in space and their distribution across a range of sizes. This 3D retrieval of key microphysical properties is made tractable by our novel approach that involves a restructuring and differentiation of an open-source polarized 3D RT code to accommodate a special two-step optimization technique. Physically-realistic synthetic clouds are used to demonstrate the methodology with rigorous uncertainty quantification.

In-situ multi-scattering tomography

Dec 07, 2015

Abstract:To recover the three-dimensional (3D) volumetric distribution of matter in an object, images of the object are captured from multiple directions and locations. Using these images, tomographic computations extract the distribution. In highly scattering media and constrained, natural irradiance, tomography must explicitly account for off-axis scattering. Furthermore, the tomographic model and recovery must function when imaging is done in-situ, as occurs in medical imaging and ground-based atmospheric sensing. We formulate tomography that handles arbitrary orders of scattering, using a Monte Carlo model. Moreover, the model is highly parallelizable in our formulation. This enables large scale rendering and recovery of volumetric scenes having a large number of variables. We solve stability and conditioning problems that stem from radiative transfer (RT) modeling in-situ.
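A basic building block of Monte Carlo radiative transfer is sampling the free path a photon travels before interacting with the medium. The sketch below assumes a homogeneous slab with a constant extinction coefficient and estimates only the direct (unscattered) transmittance; it is not the paper's multi-scattering model, and `sample_free_path` and `estimate_transmittance` are hypothetical names.

```python
import math
import random

def sample_free_path(sigma, rng):
    """Sample a free-path length from the exponential law with extinction sigma."""
    u = rng.random()  # uniform in [0, 1), so 1 - u is in (0, 1]
    return -math.log(1.0 - u) / sigma

def estimate_transmittance(sigma, thickness, num_photons, seed=0):
    """Fraction of photons that cross a homogeneous slab without interacting."""
    rng = random.Random(seed)
    crossed = sum(
        1 for _ in range(num_photons) if sample_free_path(sigma, rng) > thickness
    )
    return crossed / num_photons

# Optical depth sigma * thickness = 1, so the estimate should approach exp(-1).
estimate = estimate_transmittance(sigma=2.0, thickness=0.5, num_photons=20000)
print(estimate, math.exp(-1.0))
```

Each photon is simulated independently, which is why this kind of estimator parallelizes well. A full multi-scattering simulation would continue each path by sampling a new direction at every interaction.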
