Anat Levin

Department of Electrical and Computer Engineering, Technion, Haifa, Israel

Passive Micron-scale Time-of-Flight with Sunlight Interferometry

Nov 19, 2022

Abstract: We introduce an interferometric technique for passive time-of-flight imaging and depth sensing at micrometer axial resolutions. Our technique uses a full-field Michelson interferometer, modified to use sunlight as the only light source. The large spectral bandwidth of sunlight makes it possible to acquire micrometer-resolution time-resolved scene responses, through a simple axial scanning operation. Additionally, the angular bandwidth of sunlight makes it possible to capture time-of-flight measurements insensitive to indirect illumination effects, such as interreflections and subsurface scattering. We build an experimental prototype that we operate outdoors, under direct sunlight, and in adverse environmental conditions such as mechanical vibrations and vehicle traffic. We use this prototype to demonstrate, for the first time, passive imaging capabilities such as micrometer-scale depth sensing robust to indirect illumination, direct-only imaging, and imaging through diffusers.

Swept-Angle Synthetic Wavelength Interferometry

May 21, 2022

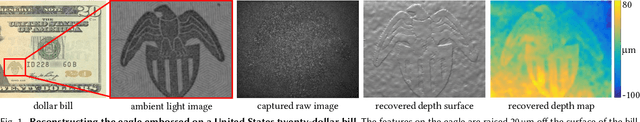

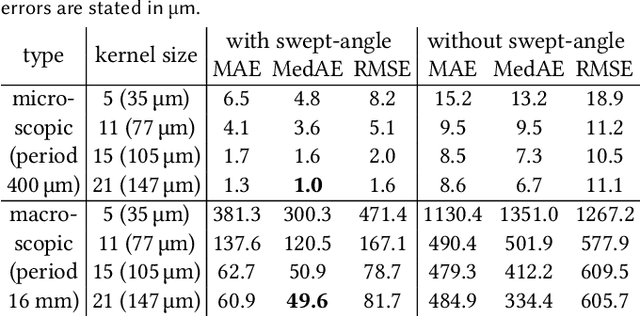

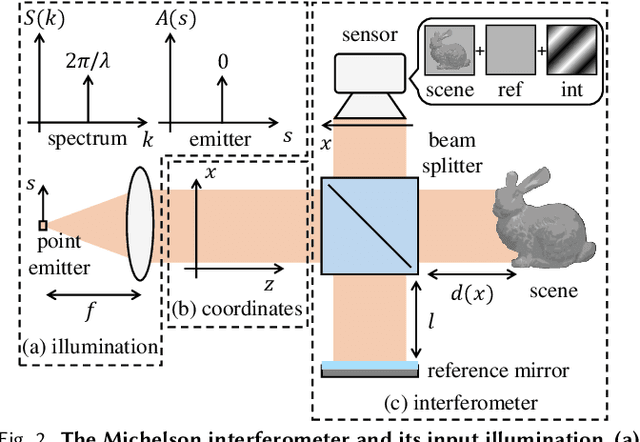

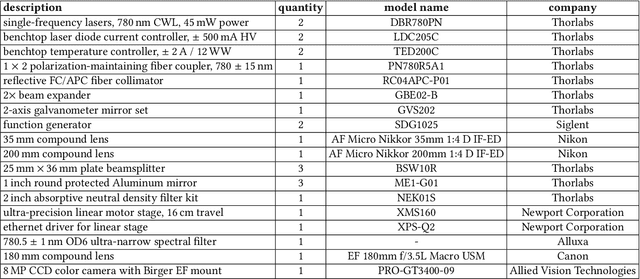

Abstract: We present a new imaging technique, swept-angle synthetic wavelength interferometry, for full-field micron-scale 3D sensing. As in conventional synthetic wavelength interferometry, our technique uses light consisting of two optical wavelengths, resulting in per-pixel interferometric measurements whose phase encodes scene depth. Our technique additionally uses a new type of light source that, by emulating spatially-incoherent illumination, makes interferometric measurements insensitive to global illumination effects that confound depth information. The resulting technique combines the speed of full-field interferometric setups with the robustness to global illumination of scanning interferometric setups. Overall, our technique can recover full-frame depth at a spatial and axial resolution of a few micrometers using as few as 16 measurements, resulting in fast acquisition at frame rates of 10 Hz. We build an experimental prototype and use it to demonstrate these capabilities, by scanning a variety of scenes that contain challenging light transport effects such as interreflections, subsurface scattering, and specularities. We validate the accuracy of our measurements by showing that they closely match reference measurements from a full-field optical coherence tomography system, despite being captured at orders of magnitude faster acquisition times and while operating under strong ambient light.
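As a rough illustration of how a two-wavelength phase can encode depth, the sketch below uses the textbook synthetic-wavelength relation, Λ = λ1·λ2 / |λ1 − λ2|. It is not taken from the paper: the function names, the example wavelengths, and the round-trip factor of 4π (which assumes a reflection geometry) are all assumptions made for illustration.

```python
import math


def synthetic_wavelength(lambda1, lambda2):
    """Synthetic wavelength of two optical wavelengths (same units in and out)."""
    if lambda1 == lambda2:
        raise ValueError("the two wavelengths must differ")
    return lambda1 * lambda2 / abs(lambda1 - lambda2)


def depth_from_phase(phase, lambda1, lambda2):
    """Depth implied by a synthetic-wavelength phase.

    Assumption: light travels to the surface and back, so a phase of 2*pi
    corresponds to a depth change of half a synthetic wavelength.
    The phase is wrapped to [0, 2*pi), so depth is only known modulo that range.
    """
    wrapped = phase % (2.0 * math.pi)
    return synthetic_wavelength(lambda1, lambda2) * wrapped / (4.0 * math.pi)


# Hypothetical wavelengths in nanometers, chosen only to make the numbers easy to read.
big_lambda = synthetic_wavelength(780.0, 781.0)
half_range_depth = depth_from_phase(math.pi, 780.0, 781.0)
```

Two closely spaced wavelengths give a synthetic wavelength far longer than either optical wavelength, which is what extends the unambiguous depth range from sub-micron to hundreds of microns in this toy setting.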

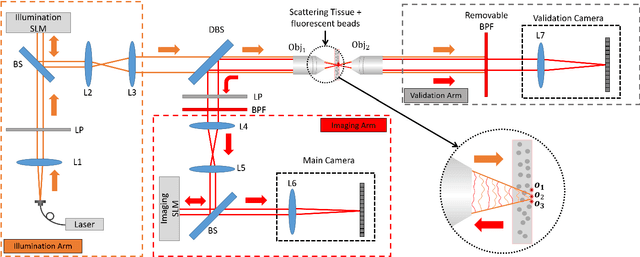

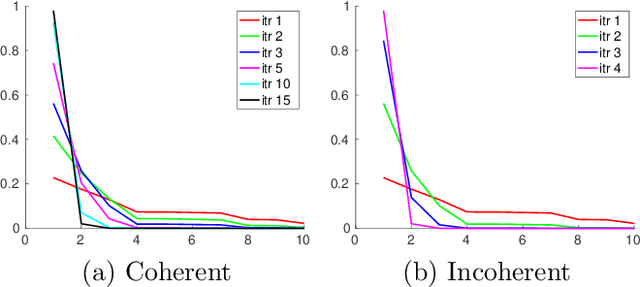

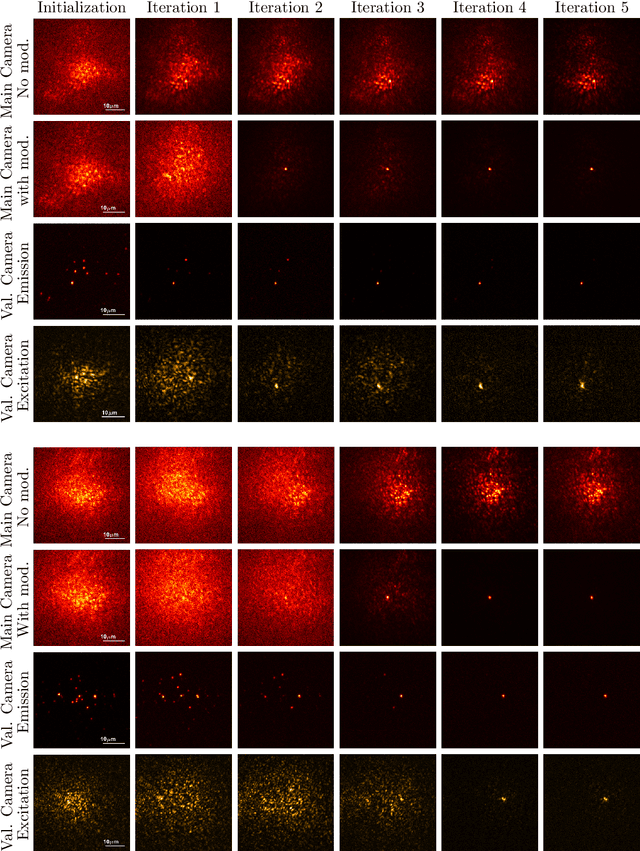

Fluorescent wavefront shaping using incoherent iterative phase conjugation

May 15, 2022

Abstract: Wavefront shaping correction makes it possible to image fluorescent particles deep inside scattering tissue. This requires determining a correction mask to be placed in both excitation and emission paths. Standard approaches select correction masks by optimizing various image metrics, a process that requires capturing a prohibitively large number of images. To reduce acquisition cost, iterative phase conjugation techniques use the observation that the desired correction mask is an eigenvector of the tissue transmission operator. They then determine this eigenvector via optical implementations of the power iteration method, which require capturing orders of magnitude fewer images. Existing iterative phase conjugation techniques assume a linear model for the transmission of light through tissue, and thus only apply to fully coherent imaging systems. We extend such techniques to the incoherent case for the first time. The fact that light emitted from different sources sums incoherently violates the linear model and makes linear transmission operators inapplicable. We show that, surprisingly, the non-linearity due to incoherent summation results in an order-of-magnitude acceleration in the convergence of the phase conjugation iteration.
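For readers unfamiliar with the power iteration method mentioned above, here is a minimal numerical sketch of the linear (coherent) case only. It is not the paper's optical procedure or its incoherent extension: the toy operator, its eigenvalues, and both function names are hypothetical, and the matrix product stands in for what an optical system would do by propagating light through tissue.

```python
import numpy as np


def make_toy_operator(eigenvalues, seed=0):
    """Hypothetical Hermitian operator with a known spectrum (not a tissue model)."""
    rng = np.random.default_rng(seed)
    n = len(eigenvalues)
    # Random unitary basis from the QR decomposition of a complex Gaussian matrix.
    gaussian = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
    q, _ = np.linalg.qr(gaussian)
    return q @ np.diag(eigenvalues) @ q.conj().T, q


def power_iteration(op, num_iters=100, seed=1):
    """Estimate the dominant eigenvalue and eigenvector of a Hermitian operator."""
    rng = np.random.default_rng(seed)
    n = op.shape[0]
    v = rng.standard_normal(n) + 1j * rng.standard_normal(n)
    v /= np.linalg.norm(v)
    for _ in range(num_iters):
        w = op @ v                  # apply the operator once
        v = w / np.linalg.norm(w)   # renormalize to keep the iterate bounded
    eigenvalue = np.vdot(v, op @ v).real  # Rayleigh quotient of the final iterate
    return eigenvalue, v


# Toy example: the largest eigenvalue (5.0) is well separated from the rest.
toy_op, toy_basis = make_toy_operator([5.0, 2.0, 1.0, 0.5])
estimate, mode = power_iteration(toy_op)
```

Each pass amplifies the dominant eigenvector relative to the others by the ratio of their eigenvalues, so the iterate converges quickly when the leading eigenvalue is well separated.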

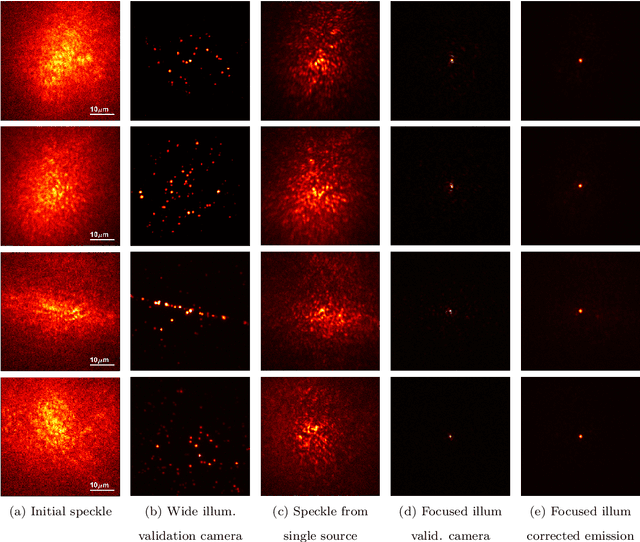

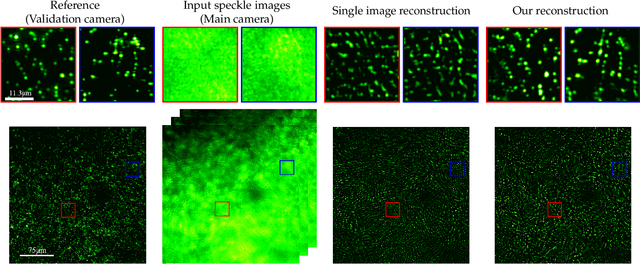

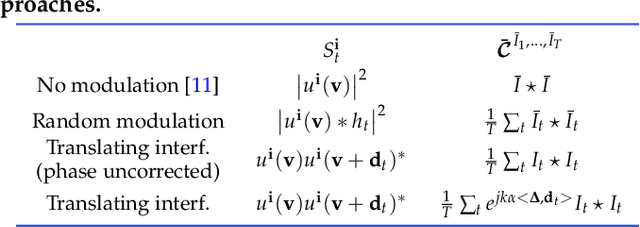

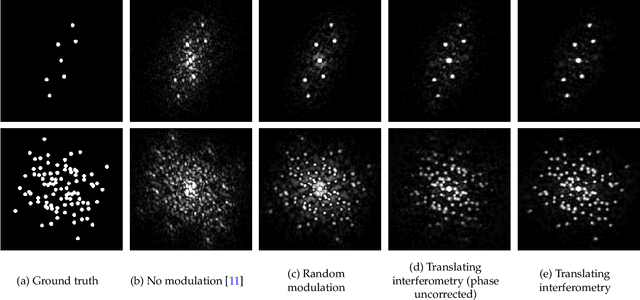

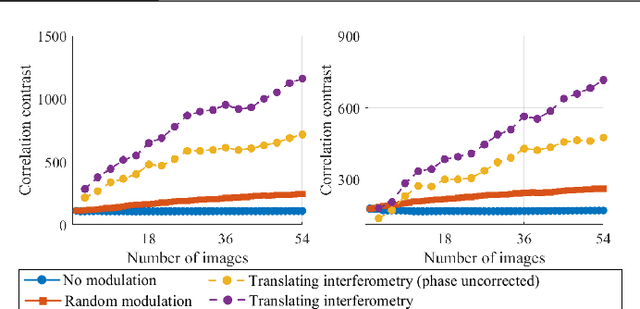

Enhancing Speckle Statistics for Imaging inside Scattering Media

Mar 27, 2022

Abstract: We exploit memory effect speckle correlations for the imaging of incoherent linear (single-photon) fluorescent sources behind scattering tissue. While memory effect-based imaging techniques have been heavily studied in the past, for thick scattering layers and complex illumination patterns these correlations are weak, limiting the practical applicability of the idea. In this work, we introduce a Spatial Light Modulator (SLM) between the tissue sample and the imaging sensor and capture multiple modulations of the speckle pattern. We show that by correctly designing the modulation pattern and the reconstruction algorithm we can greatly enhance statistical correlations in the data. We exploit this to demonstrate the reconstruction of megapixel-wide fluorescent patterns behind scattering tissue.

Towards Occlusion-Aware Multifocal Displays

May 02, 2020

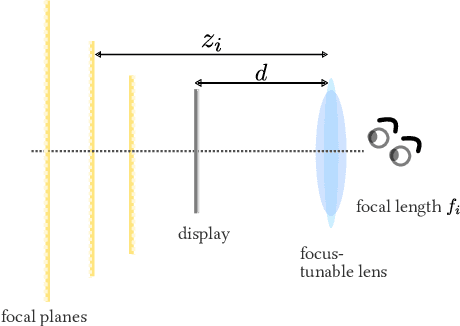

Abstract: The human visual system uses numerous cues for depth perception, including disparity, accommodation, motion parallax and occlusion. It is incumbent upon virtual-reality displays to satisfy these cues to provide an immersive user experience. Multifocal displays, one of the classic approaches to satisfy the accommodation cue, place virtual content at multiple focal planes, each at a different depth. However, the content on focal planes close to the eye does not occlude that on planes farther away; this deteriorates the occlusion cue and reduces contrast at depth discontinuities due to leakage of the defocus blur. This paper enables occlusion-aware multifocal displays using a novel ConeTilt operator that provides an additional degree of freedom: tilting the light cone emitted at each pixel of the display panel. We show that, for scenes with relatively simple occlusion configurations, tilting the light cones provides the same effect as physical occlusion. We demonstrate that ConeTilt can be easily implemented by a phase-only spatial light modulator. Using a lab prototype, we show results that demonstrate the presence of occlusion cues and the increased contrast of the display at depth edges.

In-situ multi-scattering tomography

Dec 07, 2015

Abstract: To recover the three-dimensional (3D) volumetric distribution of matter in an object, images of the object are captured from multiple directions and locations. Using these images, tomographic computations extract the distribution. In highly scattering media and constrained, natural irradiance, tomography must explicitly account for off-axis scattering. Furthermore, the tomographic model and recovery must function when imaging is done in-situ, as occurs in medical imaging and ground-based atmospheric sensing. We formulate tomography that handles arbitrary orders of scattering, using a Monte Carlo model. Moreover, the model is highly parallelizable in our formulation. This enables large-scale rendering and recovery of volumetric scenes having a large number of variables. We solve stability and conditioning problems that stem from radiative transfer (RT) modeling in-situ.
