Ioannis Gkioulekas

Robotics Institute, Carnegie Mellon University, PA, USA

Structured light with a million light planes per second

Nov 27, 2024

Abstract: We introduce a structured light system that captures full-frame depth at rates of a thousand frames per second, four times faster than the previous state of the art. Our key innovation to this end is the design of an acousto-optic light scanning device that can scan light planes at rates of up to two million planes per second. We combine this device with an event camera for structured light, using the sparse events that the camera triggers as we sweep a light plane across the scene to triangulate depth. In contrast to prior work, where light scanning is the bottleneck to faster structured light operation, our light scanning device is three orders of magnitude faster than the event camera's full-frame bandwidth, thus allowing us to take full advantage of the event camera's fast operation. To surpass this bandwidth, we additionally demonstrate adaptive scanning of only regions of interest, at speeds an order of magnitude faster than the theoretical full-frame limit for event cameras.
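As a rough illustration of the triangulation step, the sketch below assumes an idealized geometry that the abstract does not specify: a pinhole camera at the origin with focal length `f` in pixels and the principal point at pixel 0, and a light plane that rotates about a vertical axis through `(baseline, 0, 0)` at a constant angular rate, so that an event's timestamp maps linearly to the plane angle. The names `plane_angle` and `triangulate_depth` and the linear sweep model are hypothetical; a real acousto-optic scanner needs its own calibration. For scale, two million planes per second corresponds to a time slot of about 0.5 µs per plane.

```python
import math

def plane_angle(t, theta0, omega):
    """Hypothetical linear sweep: light-plane angle (rad) at event timestamp t (s)."""
    return theta0 + omega * t

def triangulate_depth(u, f, baseline, theta):
    """Depth z of the scene point seen at horizontal pixel coordinate u.

    Camera ray:  X = z * (u / f, v / f, 1)
    Light plane: x - baseline = z * tan(theta)
    Substituting the ray into the plane gives z = baseline / (u / f - tan(theta)).
    """
    denom = u / f - math.tan(theta)
    if abs(denom) < 1e-12:
        raise ValueError("camera ray is (nearly) parallel to the light plane")
    return baseline / denom

# Illustrative values: focal length in pixels, baseline in meters.
f, baseline = 1000.0, 0.2
# Event timestamp 1 µs into a sweep at 1e5 rad/s gives a plane angle of about 0.1 rad.
theta = plane_angle(t=1e-6, theta0=0.0, omega=1e5)
# Pixel column at which a point at depth 2 m is lit by that plane.
u = f * (baseline / 2.0 + math.tan(theta))
print(triangulate_depth(u, f, baseline, theta))  # approximately 2.0
```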

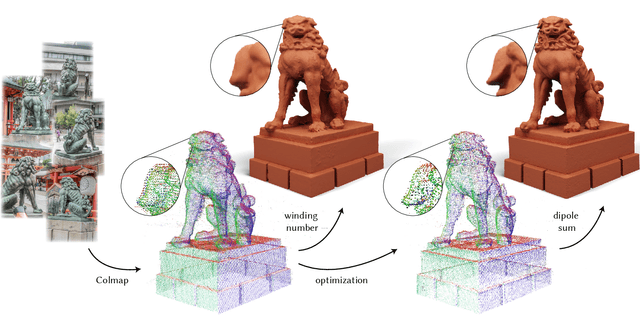

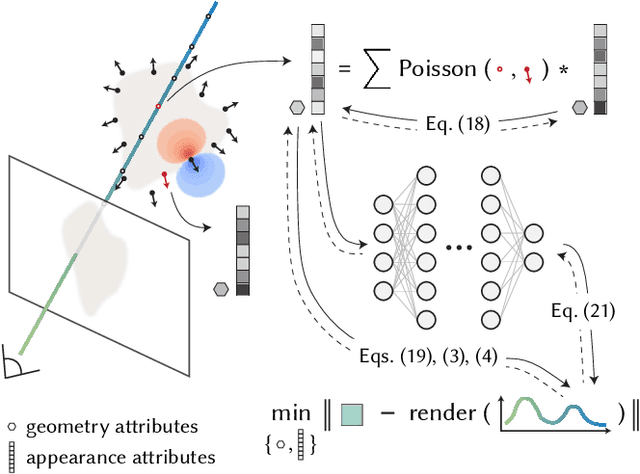

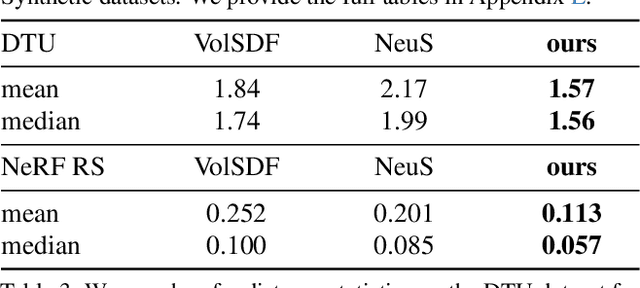

3D Reconstruction with Fast Dipole Sums

May 29, 2024

Abstract: We introduce a technique for the reconstruction of high-fidelity surfaces from multi-view images. Our technique uses a new point-based representation, the dipole sum, which generalizes the winding number to allow for interpolation of arbitrary per-point attributes in point clouds with noisy or outlier points. Using dipole sums allows us to represent implicit geometry and radiance fields as per-point attributes of a point cloud, which we initialize directly from structure from motion. We additionally derive Barnes-Hut fast summation schemes for accelerated forward- and reverse-mode dipole sum queries. These queries make it possible to use ray tracing to render images with our point-based representations efficiently and differentiably, and thus to update their point attributes to optimize scene geometry and appearance. We evaluate this inverse rendering framework against state-of-the-art alternatives based on ray tracing of neural representations or rasterization of Gaussian point-based representations. Our technique significantly improves reconstruction quality at equal runtimes, while also supporting more general rendering techniques such as shadow rays for direct illumination. In the supplement, we provide interactive visualizations of our results.
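For context on what the dipole sum generalizes, the sketch below evaluates the standard generalized winding number of an oriented point cloud by direct summation, w(q) = Σᵢ aᵢ (pᵢ − q)·nᵢ / (4π‖pᵢ − q‖³), where aᵢ is an area weight and nᵢ an outward normal; w is close to 1 inside a closed surface and close to 0 outside. This is not the paper's dipole sum, which additionally interpolates per-point attributes, and it uses an O(N) loop per query rather than the Barnes-Hut acceleration the abstract mentions. The Fibonacci-sphere test cloud and the helper names are illustrative assumptions.

```python
import math

def fibonacci_sphere(n, radius=1.0):
    """Roughly uniform points on a sphere, used here as a synthetic test cloud."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    points = []
    for i in range(n):
        y = 1.0 - 2.0 * (i + 0.5) / n
        r = math.sqrt(1.0 - y * y)
        phi = golden_angle * i
        points.append((radius * r * math.cos(phi), radius * y, radius * r * math.sin(phi)))
    return points

def winding_number(query, points, normals, areas):
    """Direct O(N) sum of dipole contributions from oriented, area-weighted points."""
    total = 0.0
    for p, n, a in zip(points, normals, areas):
        d = [p[k] - query[k] for k in range(3)]
        dist = math.sqrt(sum(c * c for c in d))
        total += a * sum(d[k] * n[k] for k in range(3)) / (4.0 * math.pi * dist ** 3)
    return total

N = 2000
pts = fibonacci_sphere(N)
nrm = pts                        # on a unit sphere the outward normal equals the position
area = [4.0 * math.pi / N] * N   # each point gets an equal share of the surface area
print(winding_number((0.0, 0.0, 0.0), pts, nrm, area))  # close to 1 (inside)
print(winding_number((3.0, 0.0, 0.0), pts, nrm, area))  # close to 0 (outside)
```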

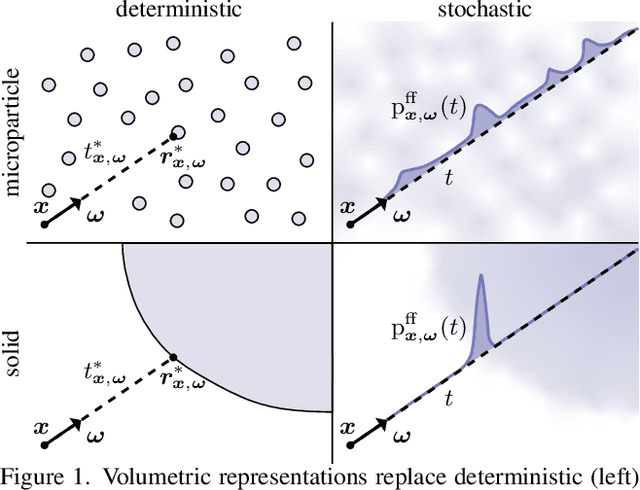

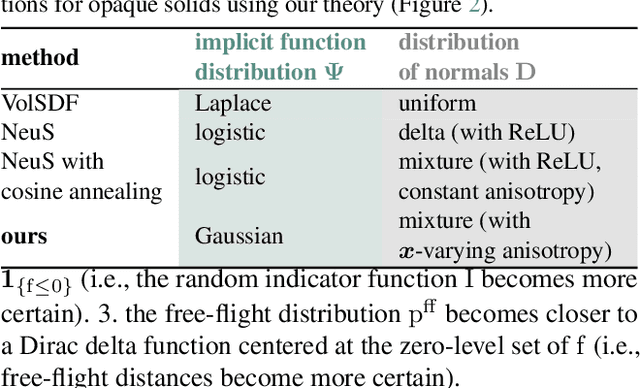

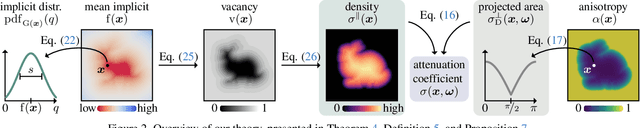

A theory of volumetric representations for opaque solids

Dec 24, 2023

Abstract: We develop a theory for the representation of opaque solids as volumetric models. Starting from a stochastic representation of opaque solids as random indicator functions, we prove the conditions under which such solids can be modeled using exponential volumetric transport. We also derive expressions for the volumetric attenuation coefficient as a functional of the probability distributions of the underlying indicator functions. We generalize our theory to account for isotropic and anisotropic scattering at different parts of the solid, and for representations of opaque solids as implicit surfaces. We derive our volumetric representation from first principles, which ensures that it satisfies physical constraints such as reciprocity and reversibility. We use our theory to explain, compare, and correct previous volumetric representations, as well as propose meaningful extensions that lead to improved performance in 3D reconstruction tasks.
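Exponential volumetric transport means that transmittance along a ray decays as T(t) = exp(−∫₀ᵗ σ(s) ds). The sketch below shows the usual piecewise-constant quadrature of that integral and the resulting per-segment weights Tᵢ(1 − exp(−σᵢδᵢ)), as used in NeRF-style volume rendering. It assumes the attenuation values σᵢ are already given; deriving σ from the distribution of a random indicator function, which is the subject of the paper, is not shown.

```python
import math

def transmittance(sigmas, deltas):
    """Transmittance at the start of each segment, plus the transmittance past the last one.

    T_i = exp(-sum_{j < i} sigma_j * delta_j), i.e. exponential transport with a
    piecewise-constant attenuation coefficient.
    """
    ts = []
    optical_depth = 0.0
    for s, d in zip(sigmas, deltas):
        ts.append(math.exp(-optical_depth))
        optical_depth += s * d
    return ts, math.exp(-optical_depth)

def segment_weights(sigmas, deltas):
    """Probability that the ray is first attenuated inside each segment."""
    ts, _ = transmittance(sigmas, deltas)
    return [t * (1.0 - math.exp(-s * d)) for t, s, d in zip(ts, sigmas, deltas)]

# Homogeneous medium: sigma = 2 per unit length over [0, 1], split into 100 segments.
sig, dt = [2.0] * 100, [0.01] * 100
_, t_end = transmittance(sig, dt)
w = segment_weights(sig, dt)
print(t_end, sum(w))  # exp(-2) and 1 - exp(-2): the weights telescope
```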

NOVA: NOvel View Augmentation for Neural Composition of Dynamic Objects

Aug 24, 2023

Abstract: We propose a novel-view augmentation (NOVA) strategy to train NeRFs for photo-realistic 3D composition of dynamic objects in a static scene. Compared to prior work, our framework significantly reduces blending artifacts when inserting multiple dynamic objects into a 3D scene at novel views and times; achieves comparable PSNR without the need for additional ground truth modalities like optical flow; and overall provides ease, flexibility, and scalability in neural composition. Our codebase is on GitHub.

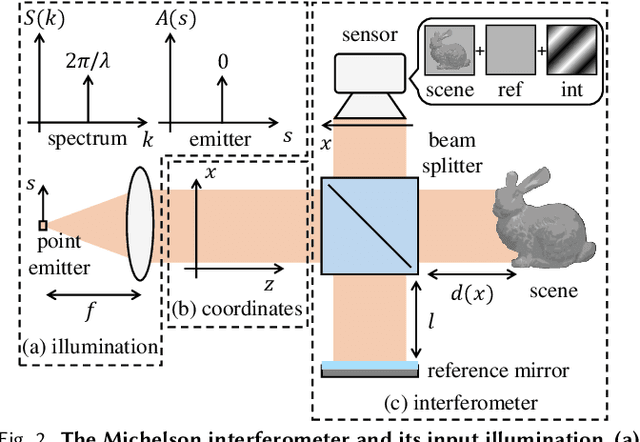

Passive Micron-scale Time-of-Flight with Sunlight Interferometry

Nov 19, 2022

Abstract: We introduce an interferometric technique for passive time-of-flight imaging and depth sensing at micrometer axial resolutions. Our technique uses a full-field Michelson interferometer, modified to use sunlight as the only light source. The large spectral bandwidth of sunlight makes it possible to acquire micrometer-resolution time-resolved scene responses through a simple axial scanning operation. Additionally, the angular bandwidth of sunlight makes it possible to capture time-of-flight measurements insensitive to indirect illumination effects, such as interreflections and subsurface scattering. We build an experimental prototype that we operate outdoors, under direct sunlight, and in adverse environmental conditions such as mechanical vibrations and vehicle traffic. We use this prototype to demonstrate, for the first time, passive imaging capabilities such as micrometer-scale depth sensing robust to indirect illumination, direct-only imaging, and imaging through diffusers.
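The link between spectral bandwidth and axial resolution can be estimated with the standard coherence-length approximation l_c ≈ λ₀² / Δλ: interference fringes appear only when the two arm lengths match to within about l_c. The numbers below (center wavelength 550 nm, bandwidth 300 nm) are illustrative assumptions for broadband visible sunlight, not values from the abstract, and the constant prefactor depends on the spectral shape (for example, about 0.44 for a Gaussian spectrum with full width at half maximum Δλ).

```python
def coherence_length(center_wavelength, bandwidth, prefactor=1.0):
    """Order-of-magnitude coherence length l_c = prefactor * lambda0**2 / delta_lambda.

    Inputs and output share the same length unit (meters here).
    """
    return prefactor * center_wavelength ** 2 / bandwidth

lc = coherence_length(550e-9, 300e-9)  # assumed sunlight-like spectrum
print(f"{lc * 1e6:.2f} um")            # 1.01 um
```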

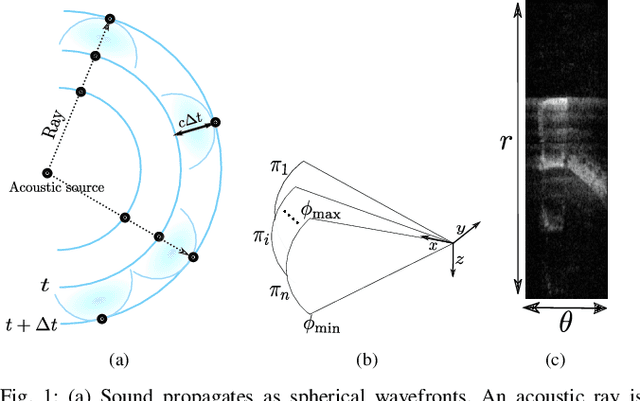

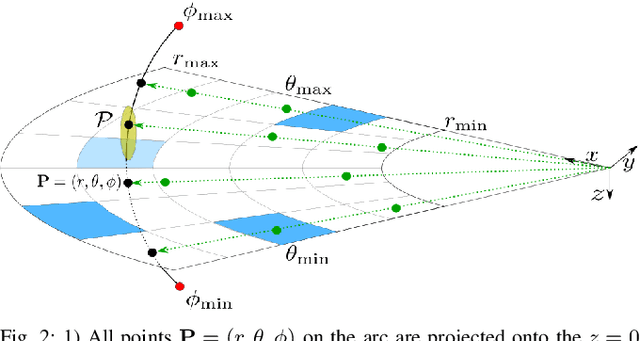

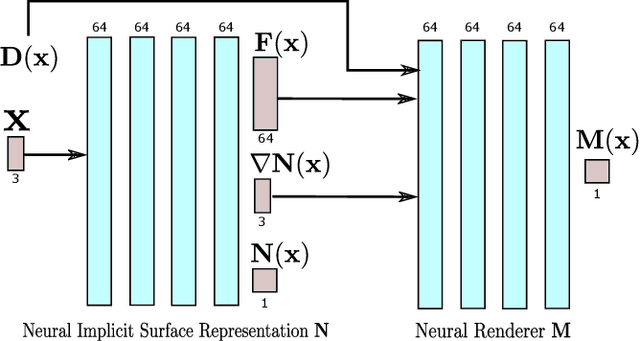

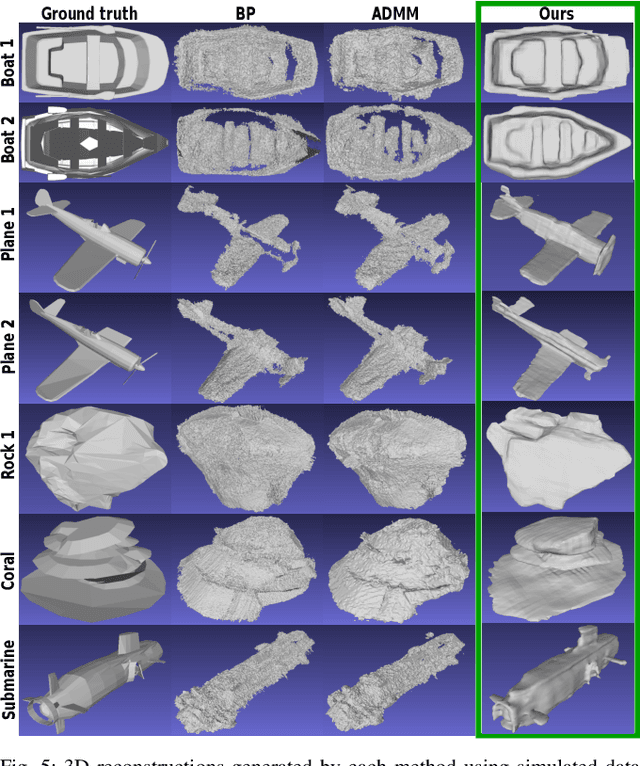

Neural Implicit Surface Reconstruction using Imaging Sonar

Sep 17, 2022

Abstract: We present a technique for dense 3D reconstruction of objects using an imaging sonar, also known as forward-looking sonar (FLS). Compared to previous methods that model the scene geometry as point clouds or volumetric grids, we represent the geometry as a neural implicit function. Additionally, given such a representation, we use a differentiable volumetric renderer that models the propagation of acoustic waves to synthesize imaging sonar measurements. We perform experiments on real and synthetic datasets and show that our algorithm reconstructs high-fidelity surface geometry from multi-view FLS images at much higher quality than was possible with previous techniques and without suffering from their associated memory overhead.

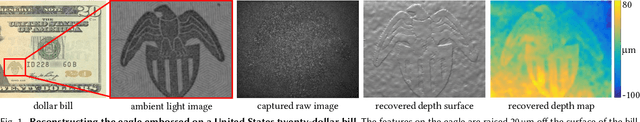

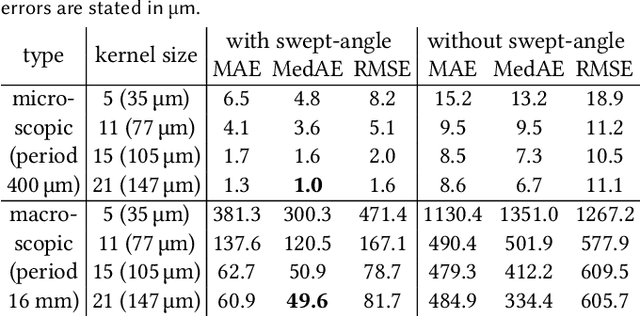

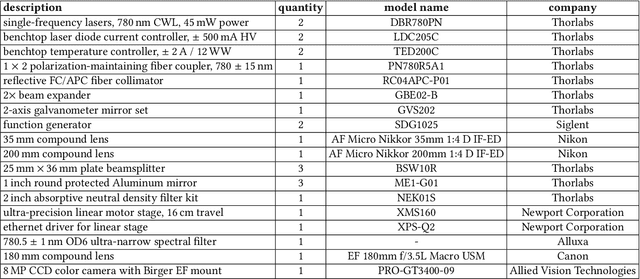

Swept-Angle Synthetic Wavelength Interferometry

May 21, 2022

Abstract: We present a new imaging technique, swept-angle synthetic wavelength interferometry, for full-field micron-scale 3D sensing. As in conventional synthetic wavelength interferometry, our technique uses light consisting of two optical wavelengths, resulting in per-pixel interferometric measurements whose phase encodes scene depth. Our technique additionally uses a new type of light source that, by emulating spatially-incoherent illumination, makes interferometric measurements insensitive to global illumination effects that confound depth information. The resulting technique combines the speed of full-field interferometric setups with the robustness to global illumination of scanning interferometric setups. Overall, our technique can recover full-frame depth at a spatial and axial resolution of a few micrometers using as few as 16 measurements, resulting in fast acquisition at frame rates of 10 Hz. We build an experimental prototype and use it to demonstrate these capabilities by scanning a variety of scenes that contain challenging light transport effects such as interreflections, subsurface scattering, and specularities. We validate the accuracy of our measurements by showing that they closely match reference measurements from a full-field optical coherence tomography system, despite being captured with acquisition times orders of magnitude shorter and while operating under strong ambient light.
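In synthetic wavelength interferometry, the two optical wavelengths λ₁ and λ₂ beat to form a synthetic wavelength Λ = λ₁λ₂ / |λ₁ − λ₂|. Assuming a reflection geometry in which light travels to the scene and back, the measured synthetic phase is φ = 4πd / Λ (mod 2π), so depth can be recovered without ambiguity over a range of Λ/2. The wavelengths below are illustrative assumptions, and the sketch does not show how the phase itself is estimated from the measurements.

```python
import math

def synthetic_wavelength(lam1, lam2):
    """Beat (synthetic) wavelength of two optical wavelengths, in the same units."""
    return lam1 * lam2 / abs(lam1 - lam2)

def depth_from_phase(phase, lam_synth):
    """Depth from a wrapped synthetic phase, assuming a round trip: phase = 4*pi*d / lam_synth."""
    return (phase % (2.0 * math.pi)) * lam_synth / (4.0 * math.pi)

lam_s = synthetic_wavelength(780e-9, 790e-9)  # about 61.6 micrometers
true_depth = 10e-6                            # 10 micrometers, inside the range lam_s / 2
phase = (4.0 * math.pi * true_depth / lam_s) % (2.0 * math.pi)
print(lam_s, depth_from_phase(phase, lam_s))
```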

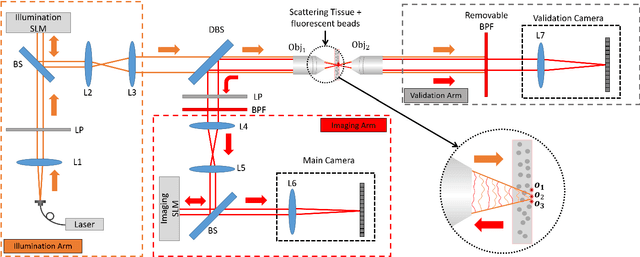

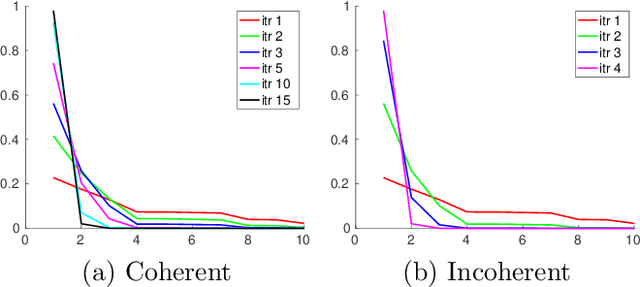

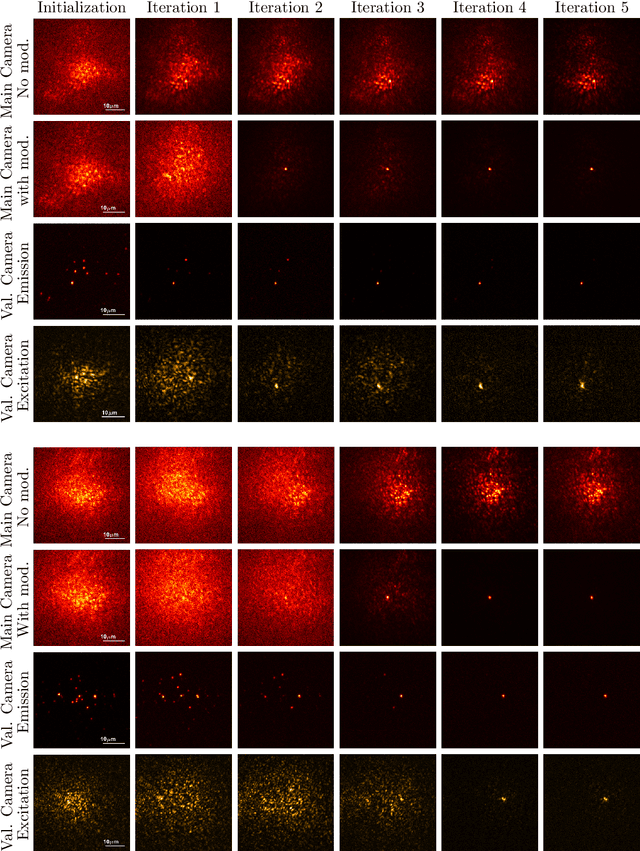

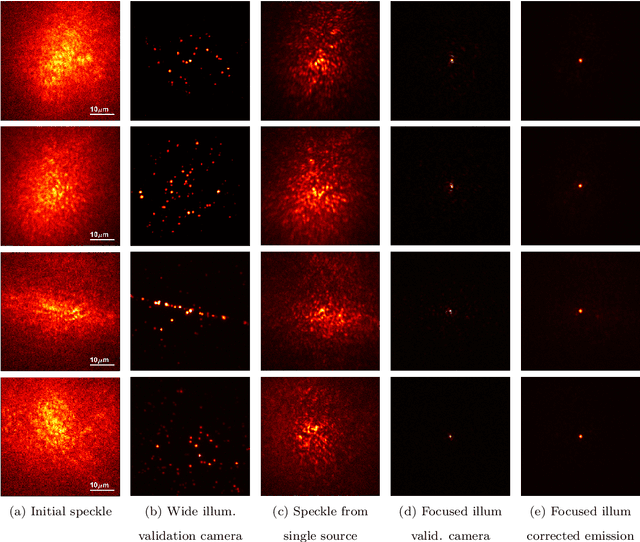

Fluorescent wavefront shaping using incoherent iterative phase conjugation

May 15, 2022

Abstract: Wavefront shaping correction makes it possible to image fluorescent particles deep inside scattering tissue. This requires determining a correction mask to be placed in both excitation and emission paths. Standard approaches select correction masks by optimizing various image metrics, a process that requires capturing a prohibitively large number of images. To reduce acquisition cost, iterative phase conjugation techniques use the observation that the desired correction mask is an eigenvector of the tissue transmission operator. They then determine this eigenvector via optical implementations of the power iteration method, which require capturing orders of magnitude fewer images. Existing iterative phase conjugation techniques assume a linear model for the transmission of light through tissue, and thus only apply to fully-coherent imaging systems. We extend such techniques to the incoherent case for the first time. The fact that light emitted from different sources sums incoherently violates the linear model and makes linear transmission operators inapplicable. We show that, surprisingly, the non-linearity due to incoherent summation results in an order-of-magnitude acceleration in the convergence of the phase conjugation iteration.
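Power iteration finds the dominant eigenvector of an operator by repeatedly applying it and renormalizing. The sketch below runs it numerically on a small real symmetric matrix that stands in for a transmission operator under the linear, fully coherent model; in the systems described above each multiplication is carried out optically, and the incoherent, non-linear case that the paper addresses is deliberately not modeled. For this toy matrix the error shrinks by the ratio of the two largest eigenvalues, about 0.38, per iteration.

```python
import math

def power_iteration(matrix, iters=100, v0=None):
    """Dominant eigenvalue and unit eigenvector of a square matrix (list of rows)."""
    n = len(matrix)
    v = list(v0) if v0 is not None else [1.0] * n
    for _ in range(iters):
        # Apply the operator ...
        w = [sum(matrix[i][j] * v[j] for j in range(n)) for i in range(n)]
        # ... and renormalize so the iterate neither blows up nor vanishes.
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient gives the corresponding eigenvalue estimate.
    av = [sum(matrix[i][j] * v[j] for j in range(n)) for i in range(n)]
    eigenvalue = sum(v[i] * av[i] for i in range(n))
    return eigenvalue, v

toy_operator = [[2.0, 1.0], [1.0, 3.0]]  # hypothetical stand-in, eigenvalues (5 +/- sqrt(5)) / 2
lam, vec = power_iteration(toy_operator)
print(lam, vec)
```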

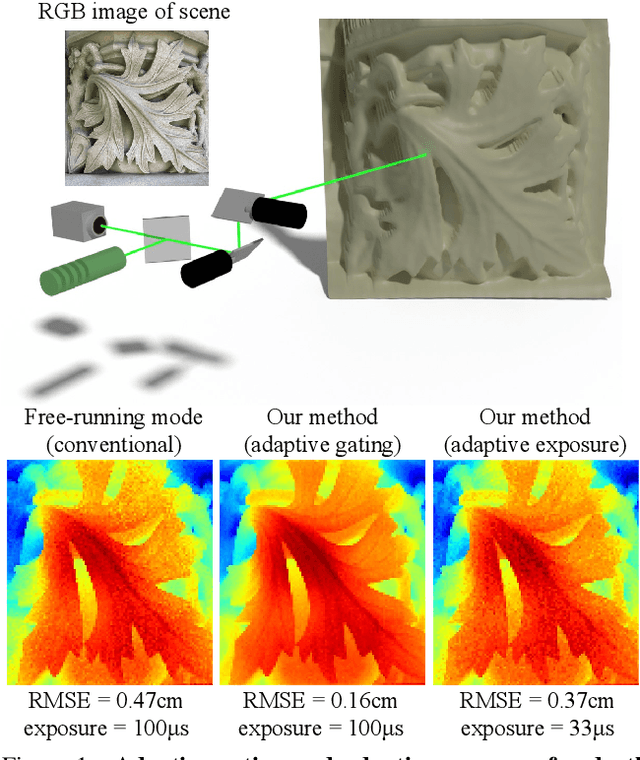

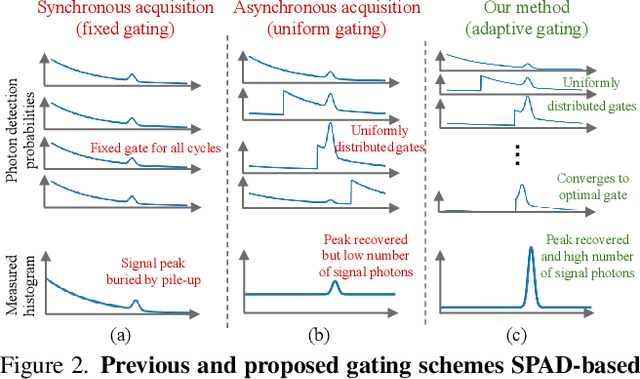

Adaptive Gating for Single-Photon 3D Imaging

Nov 30, 2021

Abstract: Single-photon avalanche diodes (SPADs) are growing in popularity for depth sensing tasks. However, SPADs still struggle in the presence of high ambient light due to the effects of pile-up. Conventional techniques leverage fixed or asynchronous gating to minimize pile-up effects, but these gating schemes are all non-adaptive, as they are unable to incorporate factors such as scene priors and previous photon detections into their gating strategy. We propose an adaptive gating scheme built upon Thompson sampling. Adaptive gating periodically updates the gate position based on prior photon observations in order to minimize depth errors. Our experiments show that our gating strategy results in significantly reduced depth reconstruction error and acquisition time, even when operating outdoors under strong sunlight conditions.
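Thompson sampling keeps a posterior over unknown quantities, draws one sample from it, and acts as if that sample were true. The toy below applies the idea to gate selection under a simplifying assumption: each candidate gate position (time bin) yields a photon detection with an unknown Bernoulli probability, tracked with a Beta posterior, and the bin containing the true depth has the highest probability. The function name, the Bernoulli model, and the probabilities are hypothetical; the paper's actual posterior over depth and its handling of pile-up may differ.

```python
import random

def thompson_gating(detect_prob, rounds, seed=0):
    """Toy Beta-Bernoulli Thompson sampling over discrete gate positions.

    detect_prob[i] is the chance of a detection when the gate is placed at bin i.
    The algorithm never reads it directly; it is used only to simulate outcomes.
    Returns how many laser cycles were gated at each bin.
    """
    rng = random.Random(seed)
    n = len(detect_prob)
    alpha = [1.0] * n  # 1 + detections observed with the gate at each bin (uniform prior)
    beta = [1.0] * n   # 1 + empty cycles observed with the gate at each bin
    counts = [0] * n
    for _ in range(rounds):
        # Draw one plausible detection rate per bin from its posterior ...
        draws = [rng.betavariate(alpha[i], beta[i]) for i in range(n)]
        # ... and place the gate where the sampled rate is highest.
        gate = max(range(n), key=lambda i: draws[i])
        counts[gate] += 1
        # Simulate one cycle with this gate and update that bin's posterior.
        if rng.random() < detect_prob[gate]:
            alpha[gate] += 1.0
        else:
            beta[gate] += 1.0
    return counts

probs = [0.05] * 8
probs[5] = 0.6  # hypothetical: the true depth lies in bin 5
counts = thompson_gating(probs, rounds=2000)
print(counts)   # most cycles end up gated at bin 5
```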

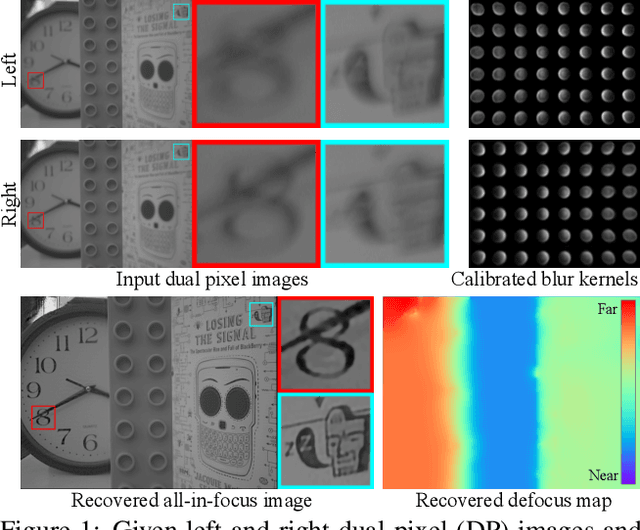

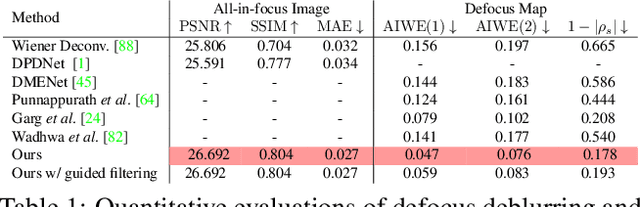

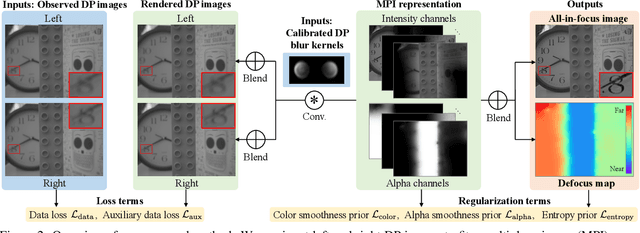

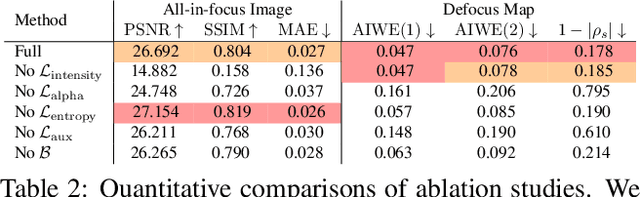

Defocus Map Estimation and Deblurring from a Single Dual-Pixel Image

Oct 12, 2021

Abstract: We present a method that takes as input a single dual-pixel image, and simultaneously estimates the image's defocus map (the amount of defocus blur at each pixel) and recovers an all-in-focus image. Our method is inspired by recent works that leverage the dual-pixel sensors available in many consumer cameras to assist with autofocus, and use them for recovery of defocus maps or all-in-focus images. These prior works have solved the two recovery problems independently of each other, and often require large labeled datasets for supervised training. By contrast, we show that it is beneficial to treat these two closely-connected problems simultaneously. To this end, we set up an optimization problem that, by carefully modeling the optics of dual-pixel images, jointly solves both problems. We use data captured with a consumer smartphone camera to demonstrate that, after a one-time calibration step, our approach improves upon prior works for both defocus map estimation and blur removal, despite being entirely unsupervised.
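The defocus map records how large the blur is at each pixel. Under a thin-lens model, a point at distance z imaged by a lens of focal length f and aperture diameter A focused at distance z_f produces a blur circle of signed diameter c = A f (z − z_f) / (z (z_f − f)) on the sensor; equivalently c = A f z_f / (z_f − f) · (1/z_f − 1/z), which is affine in inverse depth. The sign distinguishes points in front of and behind the focal plane. The sketch computes this quantity with assumed smartphone-like numbers; the paper's calibrated dual-pixel image formation model is more detailed than this.

```python
def signed_blur_diameter(z, z_focus, focal_length, aperture):
    """Thin-lens signed circle-of-confusion diameter on the sensor (same length units).

    Negative: point in front of the focal plane. Positive: behind it. Zero: in focus.
    """
    return aperture * focal_length * (z - z_focus) / (z * (z_focus - focal_length))

# Assumed values: f = 4 mm, aperture diameter 2 mm, focused at 1 m; distances in meters.
for z in (0.5, 1.0, 2.0):
    print(z, signed_blur_diameter(z, 1.0, 4e-3, 2e-3))
```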
