Aswin C. Sankaranarayanan

Precise Forecasting of Sky Images Using Spatial Warping

Sep 18, 2024

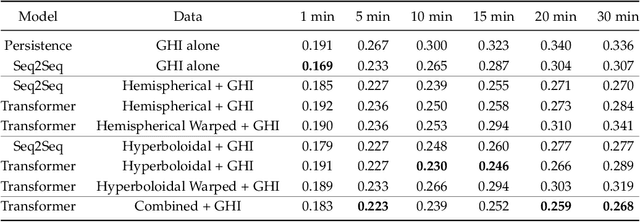

Abstract:The intermittency of solar power, due to occlusion from cloud cover, is one of the key factors inhibiting its widespread use in both commercial and residential settings. Hence, real-time forecasting of solar irradiance for grid-connected photovoltaic systems is necessary to schedule and allocate resources across the grid. Ground-based imagers that capture wide field-of-view (FOV) images of the sky are commonly used to monitor cloud movement around a particular site in an effort to forecast solar irradiance. However, these wide-FOV imagers capture a distorted image of the sky, where regions near the horizon are heavily compressed. This hinders the ability to precisely predict cloud motion near the horizon, which especially affects prediction over longer time horizons. In this work, we combat the aforementioned constraint by introducing a deep learning method to predict a future sky image frame with higher resolution than previous methods. Our main contribution is to derive an optimal warping method to counter the adverse effects of clouds at the horizon, and learn a framework for future sky image prediction which better determines cloud evolution for longer time horizons.
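To make the horizon-compression problem concrete, the following is a minimal sketch and not the warping derived in the paper. It assumes an equidistant fisheye model (image radius proportional to zenith angle) and a flat cloud layer at constant height; both are illustrative assumptions, as are the function names. Under those assumptions, the number of pixels per unit of horizontal cloud-layer distance falls off as the squared cosine of the zenith angle, and a tangent-based remap is one simple way to undo that fall-off.

```python
import math

def relative_resolution(zenith_deg):
    """Pixels per unit horizontal distance on the cloud layer, relative to the zenith.

    Assumed model (not from the paper): equidistant fisheye r = f * theta and a
    flat cloud layer at height h, so horizontal distance is d = h * tan(theta).
    Then dr/dd = (f / h) * cos(theta)**2; the constant f / h cancels in the ratio.
    """
    theta = math.radians(zenith_deg)
    return math.cos(theta) ** 2

def unwarped_radius(zenith_deg, max_zenith_deg=80.0):
    """Normalized radius in a remapped image whose radius is proportional to tan(theta).

    In such an image, equal horizontal distances on the assumed flat cloud layer
    map to equal radial pixel distances. max_zenith_deg is a hypothetical cutoff.
    """
    return math.tan(math.radians(zenith_deg)) / math.tan(math.radians(max_zenith_deg))

if __name__ == "__main__":
    for zenith in (0, 30, 60, 80):
        print(zenith, round(relative_resolution(zenith), 3), round(unwarped_radius(zenith), 3))
```

Under these assumptions, a cloud at a zenith angle of 80 degrees is imaged with roughly 3% of the resolution it would have directly overhead, which illustrates why motion near the horizon is hard to estimate from the raw image.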

Computational Imaging for Long-Term Prediction of Solar Irradiance

Sep 18, 2024

Abstract:The occlusion of the sun by clouds is one of the primary sources of uncertainty in solar power generation, and is a factor that affects the widespread use of solar power as a primary energy source. Real-time forecasting of cloud movement and, as a result, solar irradiance is necessary to schedule and allocate energy across grid-connected photovoltaic systems. Previous works monitored cloud movement using wide-angle field-of-view imagery of the sky. However, such images have poor resolution for clouds that appear near the horizon, which reduces their effectiveness for long-term prediction of solar occlusion. Specifically, to be able to predict occlusion of the sun over long time periods, clouds that are near the horizon need to be detected, and their velocities estimated precisely. To enable such a system, we design and deploy a catadioptric system that delivers wide-angle imagery with uniform spatial resolution of the sky over its field of view. To enable prediction over a longer time horizon, we design an algorithm that uses carefully selected spatio-temporal slices of the imagery using estimated wind direction and velocity as inputs. Using ray-tracing simulations as well as a real testbed deployed outdoors, we show that the system is capable of predicting solar occlusion as well as irradiance for tens of minutes in the future, which is an order of magnitude improvement over prior work.

Angle Sensitive Pixels for Lensless Imaging on Spherical Sensors

Jun 28, 2023

Abstract:We propose OrbCam, a lensless architecture for imaging with spherical sensors. Prior work on lensless imaging techniques has focused largely on planar sensors; for such designs, it is important to use a modulation element, e.g. an amplitude or phase mask, to construct an invertible imaging system. In contrast, we show that the diversity of pixel orientations on a curved surface is sufficient to improve the conditioning of the mapping between the scene and the sensor. Hence, when imaging on a spherical sensor, all pixels can have the same angular response function, such that the lensless imager comprises pixels that are identical to each other and differ only in their orientations. We provide the computational tools for the design of the angular response of the pixels in a spherical sensor that leads to well-conditioned and noise-robust measurements. We validate our design in both simulation and a lab prototype. The implication of our design is that lensless imaging can be enabled easily for curved and flexible surfaces, thereby opening up a new set of application domains.

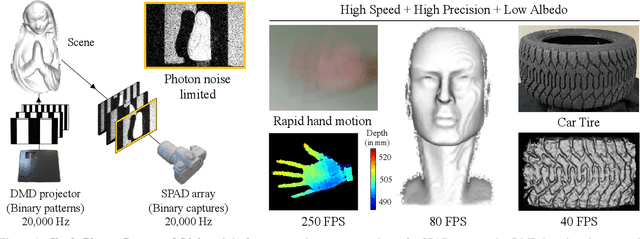

Single-Photon Structured Light

Apr 11, 2022

Abstract:We present a novel structured light technique that uses Single Photon Avalanche Diode (SPAD) arrays to enable 3D scanning at high frame rates and low light levels. This technique, called "Single-Photon Structured Light", works by sensing binary images that indicate the presence or absence of photon arrivals during each exposure; the SPAD array is used in conjunction with a high-speed binary projector, with both devices operated at speeds as high as 20 kHz. The binary images that we acquire are heavily influenced by photon noise and are easily corrupted by ambient sources of light. To address this, we develop novel temporal sequences using error correction codes that are designed to be robust to short-range effects like projector and camera defocus as well as resolution mismatch between the two devices. Our lab prototype is capable of 3D imaging in challenging scenarios involving objects with extremely low albedo or undergoing fast motion, as well as scenes under strong ambient illumination.
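The two ideas in this abstract, binary photon detections and error-correcting temporal codes, can be illustrated with a small sketch. It is not the paper's coding scheme: the repetition code, the function names, and the toy measurement are all assumptions chosen for brevity. The detection model is the standard Poisson one, in which a pixel reports a 1 if at least one photon arrives during the exposure.

```python
import math

def detection_probability(signal_photons, ambient_photons=0.0):
    """Probability that a single-photon pixel fires at least once in an exposure.

    Assumes Poisson photon arrivals with the given mean counts (illustrative model).
    """
    return 1.0 - math.exp(-(signal_photons + ambient_photons))

def repetition_codebook(num_bits, repeats):
    """Hypothetical codebook: each projector column index is written in binary
    and every bit is repeated `repeats` times across consecutive frames."""
    codebook = {}
    for column in range(2 ** num_bits):
        bits = [(column >> k) & 1 for k in reversed(range(num_bits))]
        codebook[column] = [b for b in bits for _ in range(repeats)]
    return codebook

def decode(observed, codebook):
    """Return the column whose codeword is closest in Hamming distance."""
    def hamming(a, b):
        return sum(x != y for x, y in zip(a, b))
    return min(codebook, key=lambda column: hamming(observed, codebook[column]))

# Column 2 is transmitted as [1, 1, 1, 0, 0, 0]; one frame is flipped by noise.
codebook = repetition_codebook(num_bits=2, repeats=3)
noisy_observation = [1, 0, 1, 0, 0, 0]
decoded_column = decode(noisy_observation, codebook)
print(decoded_column)
```

Nearest-codeword decoding tolerates one flipped frame here because any two codewords in this toy codebook differ in at least three positions. The codes described in the abstract are additionally designed for defocus and resolution mismatch, which this sketch does not model.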

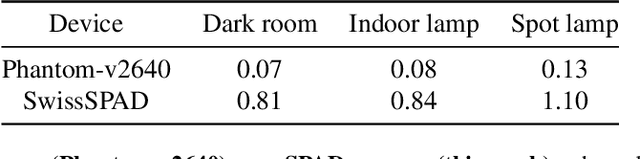

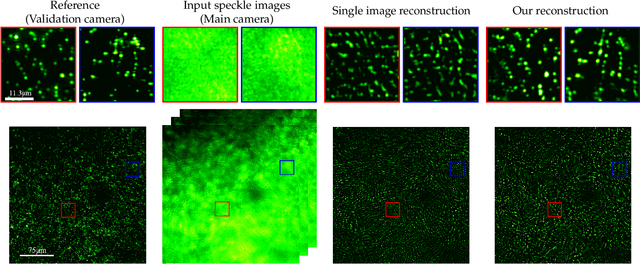

Enhancing Speckle Statistics for Imaging inside Scattering Media

Mar 27, 2022

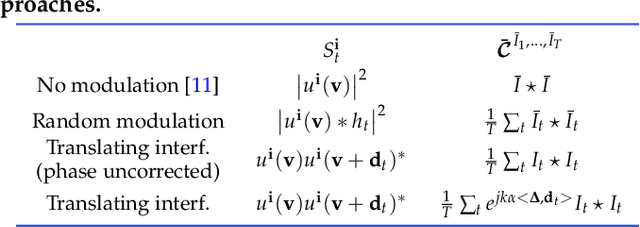

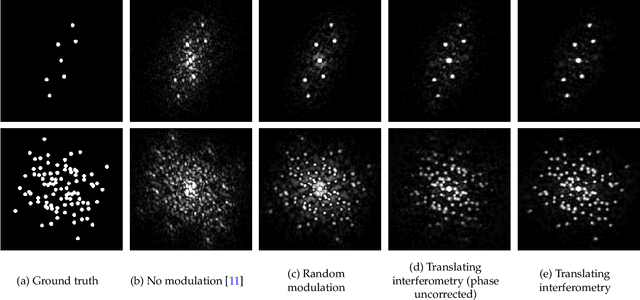

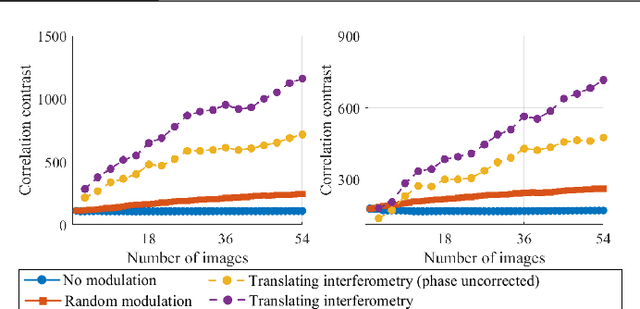

Abstract:We exploit memory effect speckle correlations for the imaging of incoherent linear (single-photon) fluorescent sources behind scattering tissue. While memory effect-based imaging techniques have been heavily studied in the past, for thick scattering layers and complex illumination patterns these correlations are weak, limiting the practical applicability of the idea. In this work, we introduce a Spatial Light Modulator (SLM) between the tissue sample and the imaging sensor and capture multiple modulations of the speckle pattern. We show that by correctly designing the modulation pattern and the reconstruction algorithm we can greatly enhance statistical correlations in the data. We exploit this to demonstrate the reconstruction of megapixel-wide fluorescent patterns behind scattering tissue.

Programmable Spectral Filter Arrays for Hyperspectral Imaging

Sep 29, 2021

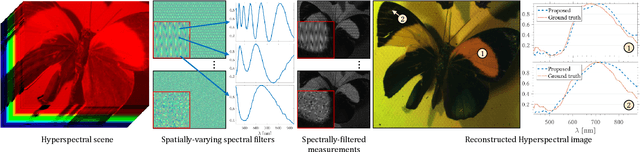

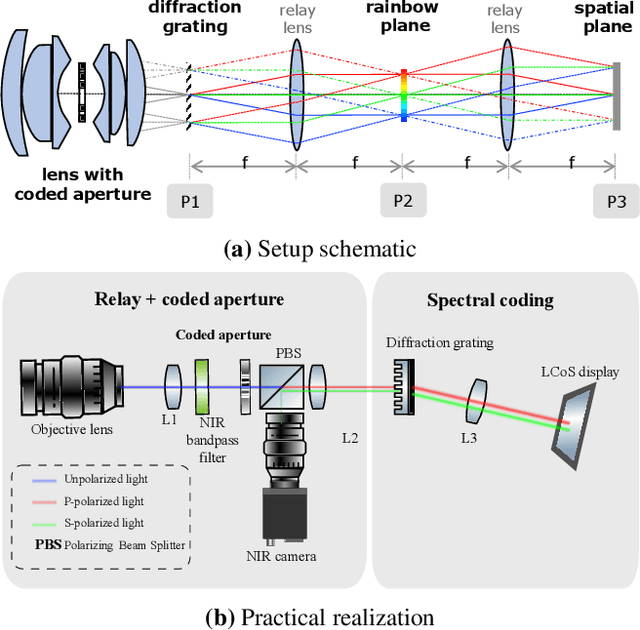

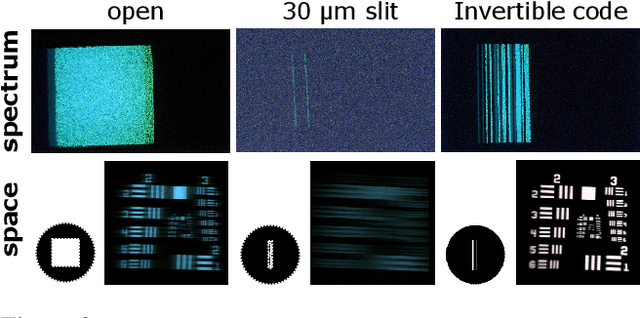

Abstract:Modulating the spectral dimension of light has numerous applications in computational imaging. While there are many techniques for achieving this, there are few, if any, for implementing a spatially-varying and programmable spectral filter. This paper provides an optical design for implementing such a capability. Our key insight is that spatially-varying spectral modulation can be implemented using a liquid crystal spatial light modulator since it provides an array of liquid crystal cells, each of which can be purposed to act as a programmable spectral filter array. Relying on this insight, we provide an optical schematic and an associated lab prototype for realizing the capability, as well as address the associated challenges at implementation using optical and computational innovations. We show a number of unique operating points with our prototype including single- and multi-image hyperspectral imaging, as well as its application in material identification.

A Simple Framework for 3D Lensless Imaging with Programmable Masks

Aug 18, 2021

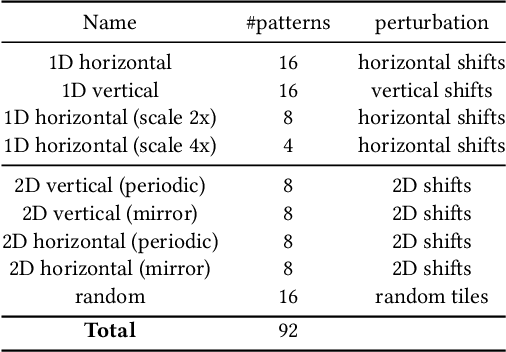

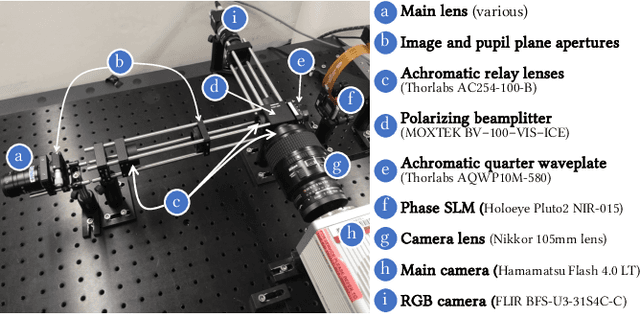

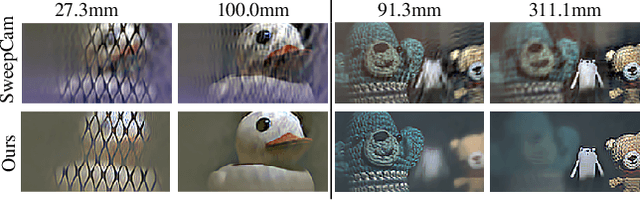

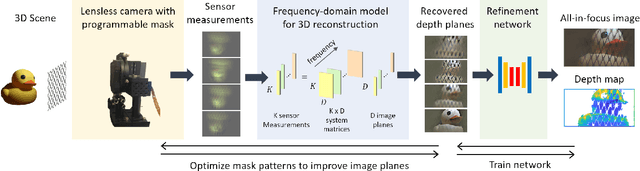

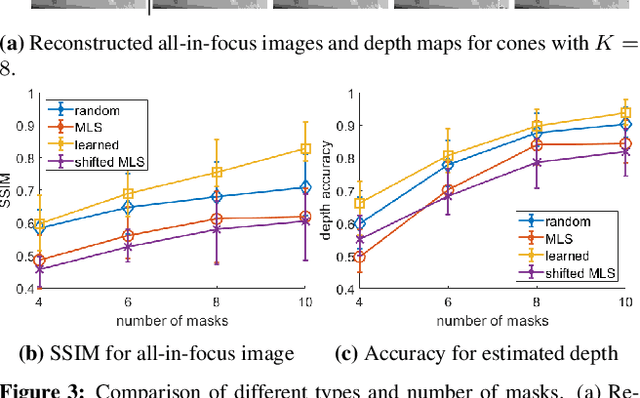

Abstract:Lensless cameras provide a framework to build thin imaging systems by replacing the lens in a conventional camera with an amplitude or phase mask near the sensor. Existing methods for lensless imaging can recover the depth and intensity of the scene, but they require solving computationally-expensive inverse problems. Furthermore, existing methods struggle to recover dense scenes with large depth variations. In this paper, we propose a lensless imaging system that captures a small number of measurements using different patterns on a programmable mask. In this context, we make three contributions. First, we present a fast recovery algorithm to recover textures on a fixed number of depth planes in the scene. Second, we consider the mask design problem, for programmable lensless cameras, and provide a design template for optimizing the mask patterns with the goal of improving depth estimation. Third, we use a refinement network as a post-processing step to identify and remove artifacts in the reconstruction. These modifications are evaluated extensively with experimental results on a lensless camera prototype to showcase the performance benefits of the optimized masks and recovery algorithms over the state of the art.

* Supplementary material available at https://github.com/CSIPlab/Programmable3Dcam.git

Towards Occlusion-Aware Multifocal Displays

May 02, 2020

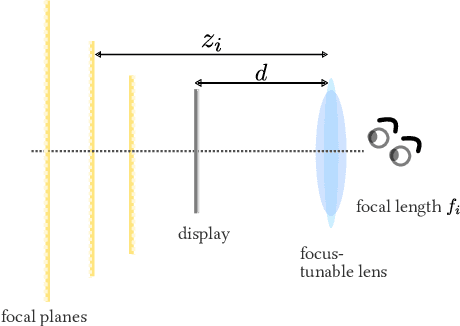

Abstract:The human visual system uses numerous cues for depth perception, including disparity, accommodation, motion parallax and occlusion. It is incumbent upon virtual-reality displays to satisfy these cues to provide an immersive user experience. Multifocal displays, one of the classic approaches to satisfy the accommodation cue, place virtual content at multiple focal planes, each at a different depth. However, the content on focal planes close to the eye does not occlude that on planes farther away; this deteriorates the occlusion cue as well as reduces contrast at depth discontinuities due to leakage of the defocus blur. This paper enables occlusion-aware multifocal displays using a novel ConeTilt operator that provides an additional degree of freedom: tilting the light cone emitted at each pixel of the display panel. We show that, for scenes with relatively simple occlusion configurations, tilting the light cones provides the same effect as physical occlusion. We demonstrate that ConeTilt can be easily implemented by a phase-only spatial light modulator. Using a lab prototype, we show results that demonstrate the presence of occlusion cues and the increased contrast of the display at depth edges.

Photosequencing of Motion Blur using Short and Long Exposures

Dec 11, 2019

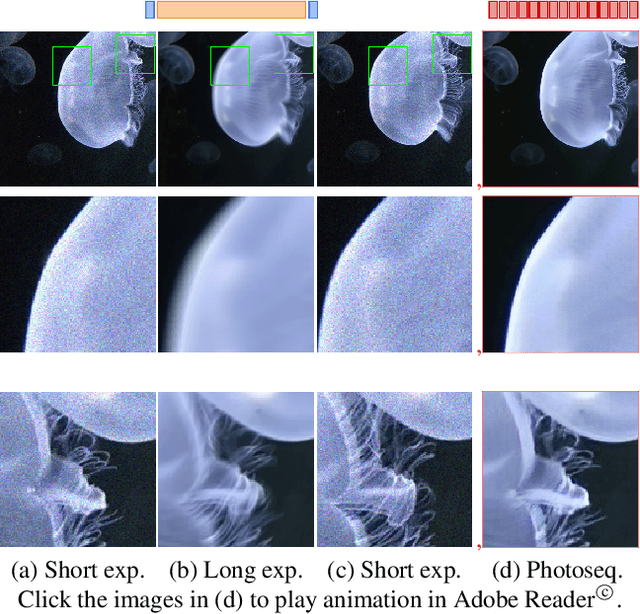

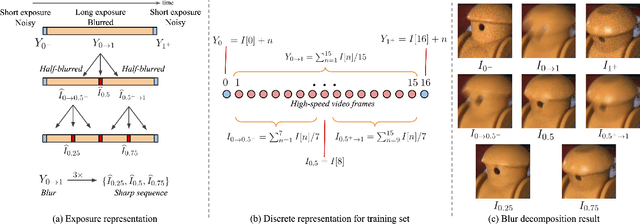

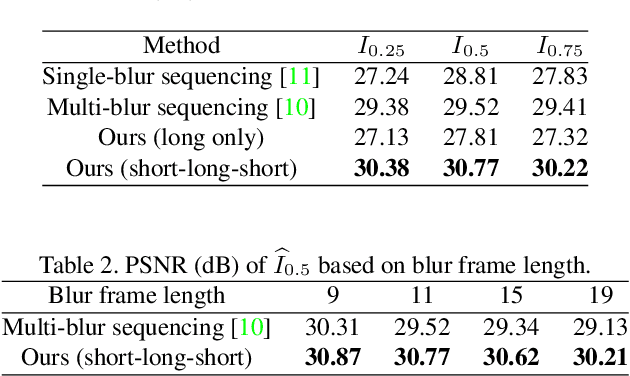

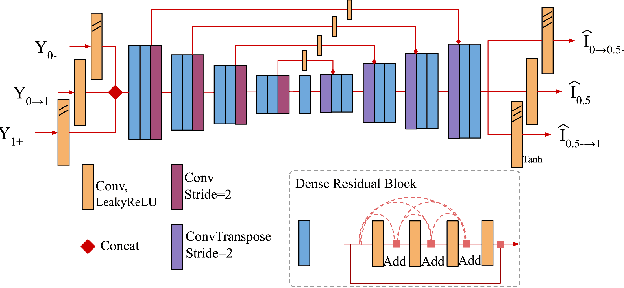

Abstract:Photosequencing aims to transform a motion blurred image to a sequence of sharp images. This problem is challenging due to the inherent ambiguities in temporal ordering as well as the recovery of lost spatial textures due to blur. Adopting a computational photography approach, we propose to capture two short exposure images, along with the original blurred long exposure image to aid in the aforementioned challenges. Post-capture, we recover the sharp photosequence using a novel blur decomposition strategy that recursively splits the long exposure image into smaller exposure intervals. We validate the approach by capturing a variety of scenes with interesting motions using machine vision cameras programmed to capture short and long exposure sequences. Our experimental results show that the proposed method resolves both fast and fine motions better than prior works.

Programmable Spectrometry -- Per-pixel Classification of Materials using Learned Spectral Filters

May 13, 2019

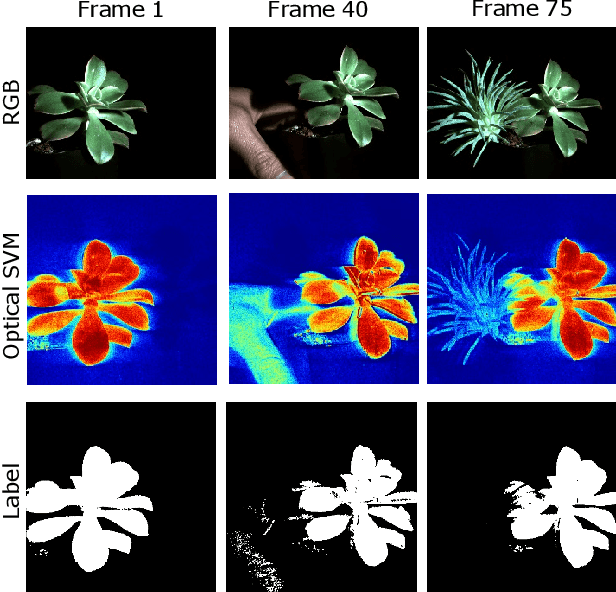

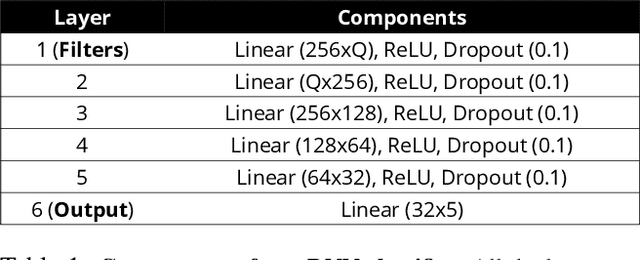

Abstract:Many materials have distinct spectral profiles. This facilitates estimation of the material composition of a scene at each pixel by first acquiring its hyperspectral image, and subsequently filtering it using a bank of spectral profiles. This process is inherently wasteful since only a set of linear projections of the acquired measurements contribute to the classification task. We propose a novel programmable camera that is capable of producing images of a scene with an arbitrary spectral filter. We use this camera to optically implement the spectral filtering of the scene's hyperspectral image with the bank of spectral profiles needed to perform per-pixel material classification. This provides gains both in terms of acquisition speed, since only the relevant measurements are acquired, and in signal-to-noise ratio, since we invariably avoid narrowband filters that are light-inefficient. Given training data, we use a range of classical and modern techniques including SVMs and neural networks to identify the bank of spectral profiles that facilitate material classification. We verify the method in simulations on standard datasets as well as real data using a lab prototype of the camera.
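The observation that classification depends only on a few linear projections of each pixel's spectrum can be sketched as follows. This is an illustration under assumed toy data, not the paper's learned filters: the six-band spectra, the two-filter bank, and the function names are all hypothetical. Each filter response is one inner product, which is the quantity a camera with a programmable spectral filter would measure in a single image.

```python
def filter_response(spectrum, spectral_filter):
    """Inner product of a pixel's spectrum with one spectral filter.

    A camera with a programmable spectral filter would measure this value
    directly, without first acquiring the full spectrum.
    """
    return sum(s * f for s, f in zip(spectrum, spectral_filter))

def classify_pixel(spectrum, filter_bank, biases):
    """Linear classifier: pick the class whose filter response plus bias is largest."""
    scores = [filter_response(spectrum, f) + b for f, b in zip(filter_bank, biases)]
    return max(range(len(scores)), key=scores.__getitem__)

# Hypothetical six-band example with one broadband filter per material class.
filter_bank = [
    [1, 1, 0, 0, 0, 0],  # class 0 responds to the short-wavelength bands
    [0, 0, 0, 0, 1, 1],  # class 1 responds to the long-wavelength bands
]
biases = [0.0, 0.0]

pixel_a = [0.9, 0.8, 0.1, 0.1, 0.0, 0.1]
pixel_b = [0.1, 0.0, 0.2, 0.1, 0.7, 0.9]

label_a = classify_pixel(pixel_a, filter_bank, biases)
label_b = classify_pixel(pixel_b, filter_bank, biases)
print(label_a, label_b)
```

In this toy setup, two filtered measurements per pixel replace six narrowband ones, and each filter passes light from several bands at once, which mirrors the speed and light-efficiency argument made in the abstract.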
